Decoding user disclosure intentions in generative AI: Exploring the influence of system attributes on trust and privacy concerns within the extended technology acceptance model

Vol. 20, No. 1 (2026)

As generative artificial intelligence becomes more prevalent, understanding the factors that influence users’ willingness to disclose personal information to these platforms is crucial. This study explores how Perceived Autonomy and Perceived Empathy in generative AI platforms influence users’ Behavioral Intent to Disclose personal information. Structural Equation Modeling (SEM) was applied to data from 1,034 generative AI users in China to analyze the relationships among autonomy, empathy, trust, privacy concerns, and disclosure intentions. The results show that both Perceived Autonomy and Perceived Empathy positively influence Behavioral Intent to Disclose personal information on generative AI platforms. These relationships are positively mediated by Trust in Generative AI and negatively mediated by Privacy Concerns: trust enhances disclosure, whereas privacy concerns reduce it. These findings align with the Extended Technology Acceptance Model, which emphasizes trust and privacy as external factors shaping user behavior. This study offers insights into how AI system attributes influence disclosure decisions and provides guidance for designing more trustworthy and privacy-sensitive generative AI platforms.

perceived autonomy; perceived empathy; trust; privacy concerns; disclosure intention; generative AI

Jing Niu

School of Journalism and Information Communication, Huazhong University of Science and Technology, Wuhan

Jing Niu is a professor in the School of Journalism and Information Communication at the Huazhong University of Science and Technology. Her research interests include media ethics with a special focus on the ethical use of new media technologies and artificial intelligence. She also has extensive research experience on privacy issues regarding social media.

Bilal Mazhar

School of Media and Communication, Shenzhen University, Shenzhen

Bilal Mazhar is a postdoctoral researcher at the School of Media and Communication of Shenzhen University. His research interest lies at the intersection of new media technologies, including artificial intelligence, and the privacy issues associated with these technologies. He has published papers in reputable journals on how social media users protect their privacy online. He is currently working on sensitive information disclosure and privacy concerns among artificial intelligence users.

Yilin Ren

School of Journalism and Communication, Nanjing University, Nanjing

Yilin Ren is a Ph.D. student in the School of Journalism and Communication at Nanjing University. Her research interests include artificial intelligence use and social media user behavior.

Inam Ul Haq

School of Journalism and Information Communication, Huazhong University of Science and Technology, Wuhan

Inam Ul Haq is a PhD student in the School of Journalism and Information Communication at the Huazhong University of Science and Technology, Wuhan, China. His research interests include artificial intelligence, with a special focus on AI-driven social media advertising. Additionally, his work explores topics such as electronic word-of-mouth (eWOM), marketing communication, and consumer behavior.

Abdulai, A.-F. (2025). Is generative AI increasing the risk for technology‐mediated trauma among vulnerable populations? Nursing Inquiry, 32(1), Article e12686. https://doi.org/10.1111/nin.12686

Afroogh, S., Akbari, A., Malone, E., Kargar, M., & Alambeigi, H. (2024). Trust in AI: Progress, challenges, and future directions. Humanities and Social Sciences Communications, 11(1), Article 1568. https://doi.org/10.1057/s41599-024-04044-8

Alabed, A., Javornik, A., & Gregory-Smith, D. (2022). AI anthropomorphism and its effect on users’ self-congruence and self–AI integration: A theoretical framework and research agenda. Technological Forecasting and Social Change, 182, Article 121786. https://doi.org/10.1016/j.techfore.2022.121786

Albishri, N., Rai, J. S., Attri, R., Yaqub, M. Z., & Walsh, S. T. (2025). Breaking barriers: Investigating generative AI adoption and organizational use. Journal of Enterprise Information Management, 1–22. https://doi.org/10.1108/JEIM-01-2025-0010

Ali, H., & Aysan, A. F. (2025). Ethical dimensions of generative AI: A cross-domain analysis using machine learning structural topic modeling. International Journal of Ethics and Systems, 41(1), 3–34. https://doi.org/10.1108/ijoes-04-2024-0112

An, S., Eck, T., & Yim, H. (2023). Understanding consumers’ acceptance intention to use mobile food delivery applications through an extended technology acceptance model. Sustainability, 15(1), Article 832. https://doi.org/10.3390/su15010832

Andreoni, M., Lunardi, W. T., Lawton, G., & Thakkar, S. (2024). Enhancing autonomous system security and resilience with generative AI: A comprehensive survey. IEEE Access, 12, 109470–109493. https://doi.org/10.1109/access.2024.3439363

Asman, O., Torous, J., & Tal, A. (2025). Responsible design, integration, and use of generative AI in mental health. JMIR Mental Health, 12, Article e70439. https://doi.org/10.2196/70439

Banh, L., & Strobel, G. (2023). Generative artificial intelligence. Electronic Markets, 33(1), Article 63. https://doi.org/10.1007/s12525-023-00680-1

Barnes, A. J., Zhang, Y., & Valenzuela, A. (2024). AI and culture: Culturally dependent responses to AI systems. Current Opinion in Psychology, 58, Article 101838. https://doi.org/10.1016/j.copsyc.2024.101838

Belainine, B., Sadat, F., & Lounis, H. (2020). Modelling a conversational agent with complex emotional intelligence. Proceedings of the AAAI Conference on Artificial Intelligence, 34(10), 13710–13711. https://doi.org/10.1609/aaai.v34i10.7127

Benitez, J., Henseler, J., Castillo, A., & Schuberth, F. (2020). How to perform and report an impactful analysis using partial least squares: Guidelines for confirmatory and explanatory IS research. Information & Management, 57(2), Article 103168. https://doi.org/10.1016/j.im.2019.05.003

Berberich, N., Nishida, T., & Suzuki, S. (2020). Harmonizing artificial intelligence for social good. Philosophy & Technology, 33(4), 613–638. https://doi.org/10.1007/s13347-020-00421-8

Brüns, J. D., & Meißner, M. (2024). Do you create your content yourself? Using generative artificial intelligence for social media content creation diminishes perceived brand authenticity. Journal of Retailing and Consumer Services, 79, Article 103790. https://doi.org/10.1016/j.jretconser.2024.103790

Cao, Z., & Peng, L. (2025). An empirical study of factors influencing usage intention for generative artificial intelligence products: A case study of China. Journal of Information Science, 51(6), 1513–1528. https://doi.org/10.1177/01655515241297329

Capiola, A., Lyons, J. B., Harris, K. N., aldin Hamdan, I., Kailas, S., & Sycara, K. (2023). “Do what you say?” The combined effects of framed social intent and autonomous agent behavior on the trust process. Computers in Human Behavior, 149, Article 107966. https://doi.org/10.1016/j.chb.2023.107966

Charrier, L., Rieger, A., Galdeano, A., Cordier, A., Lefort, M., & Hassas, S. (2019). The RoPE scale: A measure of how empathic a robot is perceived. In Proceedings of the 14th ACM/IEEE International Conference on Human–Robot Interaction (HRI) (pp. 656–657). https://doi.org/10.1109/HRI.2019.8673082

Chen, D., Liu, Y., Guo, Y., & Zhang, Y. (2024). The revolution of generative artificial intelligence in psychology: The interweaving of behavior, consciousness, and ethics. Acta Psychologica, 251, Article 104593. https://doi.org/10.1016/j.actpsy.2024.104593

Chen, L., Zhang, Y., Tian, B., Ai, Y., Cao, D., & Wang, F.-Y. (2022). Parallel driving OS: A ubiquitous operating system for autonomous driving in CPSS. IEEE Transactions on Intelligent Vehicles, 7(4), 886–895. https://doi.org/10.1109/TIV.2022.3223728

Chen, Y., Khan, S. K., Shiwakoti, N., Stasinopoulos, P., & Aghabayk, K. (2023). Analysis of Australian public acceptance of fully automated vehicles by extending technology acceptance model. Case Studies on Transport Policy, 14, Article 101072. https://doi.org/10.1016/j.cstp.2023.101072

Chen, Z., Gong, Y., Huang, R., & Lu, X. (2024). How does information encountering enhance purchase behavior? The mediating role of customer inspiration. Journal of Retailing and Consumer Services, 78, Article 103772. https://doi.org/10.1016/j.jretconser.2024.103772

Choi, S., & Zhou, J. (2023). Inducing consumers’ self-disclosure through the fit between chatbot’s interaction styles and regulatory focus. Journal of Business Research, 166, Article 114127. https://doi.org/10.1016/j.jbusres.2023.114127

Choung, H., David, P., & Ross, A. (2023). Trust and ethics in AI. AI & SOCIETY, 38(2), 733–745. https://doi.org/10.1007/s00146-022-01473-4

Chung, L. L., & Kang, J. (2023). “I'm hurt too”: The effect of a chatbot’s reciprocal self-disclosures on users’ painful experiences. Archives of Design Research, 36(4), 67–84. https://doi.org/10.15187/adr.2023.11.36.4.67

Coker, K. K., & Thakur, R. (2024). Alexa, may I adopt you? The role of voice assistant empathy and user-perceived risk in customer service delivery. Journal of Services Marketing, 38(3), 301–311. https://doi.org/10.1108/jsm-07-2023-0284

Constantinides, M., Bogucka, E., Quercia, D., Kallio, S., & Tahaei, M. (2024). RAI guidelines: Method for generating responsible AI guidelines grounded in regulations and usable by (non‑)technical roles. Proceedings of the ACM on Human‑Computer Interaction, 8(CSCW2), Article 388. https://doi.org/10.1145/3686927

Cusnir, C., & Nicola, A. (2024). Using generative artificial intelligence tools in public relations: Ethical concerns and the impact on the profession in the Romanian context. Communication & Society, 37(4), 309–323. https://doi.org/10.15581/003.37.4.309-323

Dahlin, E. (2024). And say the AI responded? Dancing around ‘autonomy’ in AI/human encounters. Social Studies of Science, 54(1), 59–77. https://doi.org/10.1177/03063127231193947

Das, S., Lee, H.-P., & Forlizzi, J. (2023). Privacy in the age of AI. Communications of the ACM, 66(11), 29–31. https://doi.org/10.1145/3625254

Dong, Y., & Wu, Y. (2025). Interacting with healthcare chatbot: Effects of status cues and message contingency on AI credibility assessment. International Journal of Human–Computer Interaction, 41(11), 6908–6920. https://doi.org/10.1080/10447318.2024.2387396

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50. https://doi.org/10.1177/002224378101800104

Giannakos, M., Azevedo, R., Brusilovsky, P., Cukurova, M., Dimitriadis, Y., Hernandez-Leo, D., Järvelä, S., Mavrikis, M., & Rienties, B. (2025). The promise and challenges of generative AI in education. Behaviour & Information Technology, 44(11), 2518–2544. https://doi.org/10.1080/0144929x.2024.2394886

Gieselmann, M., & Sassenberg, K. (2023). The more competent, the better? The effects of perceived competencies on disclosure towards conversational artificial intelligence. Social Science Computer Review, 41(6), 2342–2363. https://doi.org/10.1177/08944393221142787

Gong, Z., & Su, L. Y.-F. (2025). Exploring the influence of interactive and empathetic chatbots on health misinformation correction and vaccination intentions. Science Communication, 47(2), 276–308. https://doi.org/10.1177/10755470241280986

Gu, C., Zhang, Y., & Zeng, L. (2024). Exploring the mechanism of sustained consumer trust in AI chatbots after service failures: A perspective based on attribution and CASA theories. Humanities and Social Sciences Communications, 11, Article 1400. https://doi.org/10.1057/s41599-024-03879-5

Gupta, A. S., & Mukherjee, J. (2025). Framework for adoption of generative AI for information search of retail products and services. International Journal of Retail & Distribution Management, 53(2), 165–181. https://doi.org/10.1108/ijrdm-05-2024-0203

Hair, J. F. Jr., Sarstedt, M., Hopkins, L., & Kuppelwieser, V. G. (2014). Partial least squares structural equation modeling (PLS-SEM). European Business Review, 26(2), 106–121. https://doi.org/10.1108/EBR-10-2013-0128

Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43(1), 115–135. https://doi.org/10.1007/s11747-014-0403-8

Hermann, E., & Puntoni, S. (2025). Generative AI in marketing and principles for ethical design and deployment. Journal of Public Policy & Marketing, 44(3), 332–349. https://doi.org/10.1177/07439156241309874

Hou, G., Li, X., & Wang, H. (2024). How to improve older adults’ trust and intentions to use virtual health agents: An extended technology acceptance model. Humanities and Social Sciences Communications, 11, Article 1677. https://doi.org/10.1057/s41599-024-04232-6

Hsieh, S. H., & Lee, C. T. (2024). The AI humanness: How perceived personality builds trust and continuous usage intention. Journal of Product & Brand Management, 33(5), 618–632. https://doi.org/10.1108/jpbm-10-2023-4797

Hu, Q., Lu, Y., Pan, Z., Gong, Y., & Yang, Z. (2021). Can AI artifacts influence human cognition? The effects of artificial autonomy in intelligent personal assistants. International Journal of Information Management, 56, Article 102250. https://doi.org/10.1016/j.ijinfomgt.2020.102250

Huynh, M.-T. (2024). Using generative AI as decision-support tools: Unraveling users’ trust and AI appreciation. Journal of Decision Systems, 1–32. https://doi.org/10.1080/12460125.2024.2428166

Hyun Baek, T., & Kim, M. (2023). Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telematics and Informatics, 83, Article 102030. https://doi.org/10.1016/j.tele.2023.102030

Inzlicht, M., Cameron, C. D., D’Cruz, J., & Bloom, P. (2024). In praise of empathic AI. Trends in Cognitive Sciences, 28(2), 89–91. https://doi.org/10.1016/j.tics.2023.12.003

Janson, A., & Barev, T. J. (2026). Making the default more legitimate–the role of autonomy and transparency for digital privacy nudges. Information Technology & People, 39(1), 428–455. https://doi.org/10.1108/itp-04-2023-0334

Jeon, M. (2024). The effects of emotions on trust in human-computer interaction: A survey and prospect. International Journal of Human–Computer Interaction, 40(22), 6864–6882. https://doi.org/10.1080/10447318.2023.2261727

Karami, A., Shemshaki, M., & Ghazanfar, M. A. (2025). Exploring the ethical implications of AI-powered personalization in digital marketing. Data Intelligence, 7, 1–50. https://doi.org/10.3724/2096-7004.di.2024.0055

Kayser, I., & Gradtke, M. (2024). Unlocking AI acceptance: An integration of NCA and PLS-SEM to analyse the acceptance of ChatGPT. Journal of Decision Systems, 1–29. https://doi.org/10.1080/12460125.2024.2443231

Kelly, S., Kaye, S.-A., & Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 77, Article 101925. https://doi.org/10.1016/j.tele.2022.101925

Kim, D., Kim, S., Kim, S., & Lee, B. H. (2024). Generative AI characteristics, user motivations, and usage intention. Journal of Computer Information Systems, 1–16. https://doi.org/10.1080/08874417.2024.2442438

Kim, W. B., & Hur, H. J. (2024). What makes people feel empathy for AI chatbots? Assessing the role of competence and warmth. International Journal of Human–Computer Interaction, 40(17), 4674–4687. https://doi.org/10.1080/10447318.2023.2219961

Lalot, F., & Bertram, A.-M. (2024). When the bot walks the talk: Investigating the foundations of trust in an artificial intelligence (AI) chatbot. Journal of Experimental Psychology: General, 154(2), 533–551. https://doi.org/10.1037/xge0001696

Li, M., Guo, F., Li, Z., Ma, H., & Duffy, V. G. (2024). Interactive effects of users’ openness and robot reliability on trust: Evidence from psychological intentions, task performance, visual behaviours, and cerebral activations. Ergonomics, 67(11), 1612–1632. https://doi.org/10.1080/00140139.2024.2343954

Liu, S., & Wang, L. (2016). Influence of managerial control on performance in medical information system projects: The moderating role of organizational environment and team risks. International Journal of Project Management, 34(1), 102–116. https://doi.org/10.1016/j.ijproman.2015.10.003

Liu, Y., Huang, J., Li, Y., Wang, D., & Xiao, B. (2025). Generative AI model privacy: A survey. Artificial Intelligence Review, 58(1), Article 33. https://doi.org/10.1007/s10462-024-11024-6

Liu, Y., & Mensah, I. K. (2024). Using an extended technology acceptance model to understand the behavioral adoption intention of mobile commerce among Chinese consumers. Information Development, 1–19. https://doi.org/10.1177/02666669241277153

London, A. J., & Heidari, H. (2024). Beneficent intelligence: A capability approach to modeling benefit, assistance, and associated moral failures through AI systems. Minds and Machines, 34(4), Article 41. https://doi.org/10.1007/s11023-024-09696-8

Lu, B., & Wang, Z. (2022). Trust transfer in sharing accommodation: The moderating role of privacy concerns. Sustainability, 14(12), Article 7384. https://doi.org/10.3390/su14127384

Ma, M. (2025). Exploring the acceptance of generative artificial intelligence for language learning among EFL postgraduate students: An extended TAM approach. International Journal of Applied Linguistics, 35(1), 91–108. https://doi.org/10.1111/ijal.12603

Ma, N., Khynevych, R., Hao, Y., & Wang, Y. (2025). Effect of anthropomorphism and perceived intelligence in chatbot avatars of visual design on user experience: Accounting for perceived empathy and trust. Frontiers in Computer Science, 7, Article 1531976. https://doi.org/10.3389/fcomp.2025.1531976

Malhotra, N. K., Kim, S. S., & Agarwal, J. (2004). Internet users' information privacy concerns (IUIPC): The construct, the scale, and a causal model. Information Systems Research, 15(4), 336–355. https://doi.org/10.1287/isre.1040.0032

Menard, P., & Bott, G. J. (2025). Artificial intelligence misuse and concern for information privacy: New construct validation and future directions. Information Systems Journal, 35(1), 322–367. https://doi.org/10.1111/isj.12544

Mu, J., Zhou, L., & Yang, C. (2024). Research on the behavior influence mechanism of users’ continuous usage of autonomous driving systems based on the extended technology acceptance model and external factors. Sustainability, 16(22), Article 9696. https://doi.org/10.3390/su16229696

Mustofa, R. H., Kuncoro, T. G., Atmono, D., Hermawan, H. D., & Sukirman. (2025). Extending the technology acceptance model: The role of subjective norms, ethics, and trust in AI tool adoption among students. Computers and Education: Artificial Intelligence, 8, Article 100379. https://doi.org/10.1016/j.caeai.2025.100379

National Bureau of Statistics of China. (2021, May 11). 第七次全国人口普查公报(第三号) [Communiqué of the Seventh National Population Census (No. 3)]. http://www.stats.gov.cn/sj/tjgb/rkpcgb/qgrkpcgb/202302/t20230206_1902003.html

Oesterreich, T. D., Anton, E., Hettler, F. M., & Teuteberg, F. (2025). What drives individuals’ trusting intention in digital platforms? An exploratory meta-analysis. Management Review Quarterly, 75(4), 3615–3667. https://doi.org/10.1007/s11301-024-00477-2

Pan, W., Liu, D., Meng, J., & Liu, H. (2025). Human–AI communication in initial encounters: How AI agency affects trust, liking, and chat quality evaluation. New Media & Society, 27(10), 5822–5847. https://doi.org/10.1177/14614448241259149

Pelau, C., Dabija, D.-C., & Stanescu, M. (2024). Can I trust my AI friend? The role of emotions, feelings of friendship and trust for consumers’ information-sharing behavior toward AI. Oeconomia Copernicana, 15(2), 407–433. https://doi.org/10.24136/oc.2916

Piller, F. T., Srour, M., & Marion, T. J. (2024). Generative AI, innovation, and trust. The Journal of Applied Behavioral Science, 60(4), 613–622. https://doi.org/10.1177/00218863241285033

Prunkl, C. (2024). Human autonomy at risk? An analysis of the challenges from AI. Minds and Machines, 34(3), Article 26. https://doi.org/10.1007/s11023-024-09665-1

Ringwald, W. R., & Wright, A. G. C. (2021). The affiliative role of empathy in everyday interpersonal interactions. European Journal of Personality, 35(2), 197–211. https://doi.org/10.1002/per.2286

Robinson-Tay, K., & Peng, W. (2025). The role of knowledge and trust in developing risk perceptions of autonomous vehicles: A moderated mediation model. Journal of Risk Research, 28(9–10), 1130–1145. https://doi.org/10.1080/13669877.2024.2360923

Safdari, A. (2025). Toward an empathy-based trust in human-otheroid relations. AI & Society, 40(5), 3123–3138. https://doi.org/10.1007/s00146-024-02155-z

Sankaran, S., & Markopoulos, P. (2021). “It’s like a puppet master”: User perceptions of personal autonomy when interacting with intelligent technologies. In Proceedings of the 29th Conference on User Modeling, Adaptation and Personalization (UMAP ’21) (pp. 108–118). Association for Computing Machinery. https://doi.org/10.1145/3450613.3456820

Seok, J., Lee, B. H., Kim, D., Bak, S., Kim, S., Kim, S., & Park, S. (2025). What emotions and personalities determine acceptance of generative AI?: Focusing on the CASA paradigm. International Journal of Human–Computer Interaction, 41(18), 11436–11458. https://doi.org/10.1080/10447318.2024.2443263

Shahzad, M. F., Xu, S., & Javed, I. (2024). ChatGPT awareness, acceptance, and adoption in higher education: The role of trust as a cornerstone. International Journal of Educational Technology in Higher Education, 21(1), Article 46. https://doi.org/10.1186/s41239-024-00478-x

Shin, W., Kim, E., & Huh, J. (2025). Young adults’ acceptance of data-driven personalized advertising: Privacy and Trust Equilibrium (PATE) model. Young Consumers: Insight and Ideas for Responsible Marketers, 26(2), 189–206. https://doi.org/10.1108/yc-06-2024-2105

Shukla, A. K., Terziyan, V., & Tiihonen, T. (2024). AI as a user of AI: Towards responsible autonomy. Heliyon, 10(11), Article e31397. https://doi.org/10.1016/j.heliyon.2024.e31397

Sundar, S. S., & Kim, J. (2019). Machine heuristic: When we trust computers more than humans with our personal information. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19) (pp. 1–9). Association for Computing Machinery. https://doi.org/10.1145/3290605.3300768

Totschnig, W. (2020). Fully autonomous AI. Science and Engineering Ethics, 26(5), 2473–2485. https://doi.org/10.1007/S11948-020-00243-Z

Ursavaş, Ö. F., Yalçın, Y., İslamoğlu, H., Bakır-Yalçın, E., & Cukurova, M. (2025). Rethinking the importance of social norms in generative AI adoption: Investigating the acceptance and use of generative AI among higher education students. International Journal of Educational Technology in Higher Education, 22(1), Article 38. https://doi.org/10.1186/s41239-025-00535-z

Vimalkumar, M., Sharma, S. K., Singh, J. B., & Dwivedi, Y. K. (2021). ‘Okay google, what about my privacy?’: User’s privacy perceptions and acceptance of voice based digital assistants. Computers in Human Behavior, 120, Article 106763. https://doi.org/10.1016/J.CHB.2021.106763

Wang, Y.-J., Wang, N., Li, M., Li, H., & Huang, G. Q. (2024). End-users’ acceptance of intelligent decision-making: A case study in digital agriculture. Advanced Engineering Informatics, 60, Article 102387. https://doi.org/10.1016/j.aei.2024.102387

Xie, Y., Zhu, K., Zhou, P., & Liang, C. (2023). How does anthropomorphism improve human-AI interaction satisfaction: A dual-path model. Computers in Human Behavior, 148, Article 107878. https://doi.org/10.1016/j.chb.2023.107878

Xu, K., Chen, X., Liu, F., & Huang, L. (2025). What did you hear and what did you see? Understanding the transparency of facial recognition and speech recognition systems during human–robot interaction. New Media & Society, 27(10), 5776–5802. https://doi.org/10.1177/14614448241256899

Yang, B., Sun, Y., & Shen, X.-L. (2025). Building harmonious human–AI relationship through empathy in frontline service encounters: Underlying mechanisms and journey stage differences. International Journal of Contemporary Hospitality Management, 37(3), 740–762. https://doi.org/10.1108/ijchm-05-2024-0676

Yu, L., & Zhai, X. (2024). Ethical and regulatory challenges of generative artificial intelligence in healthcare: A Chinese perspective. Journal of Clinical Nursing. https://doi.org/10.1111/jocn.17493

Zhang, S., Zhao, X., Nan, D., & Kim, J. H. (2024). Beyond learning with cold machine: Interpersonal communication skills as anthropomorphic cue of AI instructor. International Journal of Educational Technology in Higher Education, 21(1), Article 27. https://doi.org/10.1186/s41239-024-00465-2

Zhang, Z., & Wang, J. (2024). Can AI replace psychotherapists? Exploring the future of mental health care. Frontiers in Psychiatry, 15, Article 1444382. https://doi.org/10.3389/fpsyt.2024.1444382

Authors' Contribution

Jing Niu: conceptualization, methodology, data collection, resources, writing—review & editing, supervision, funding acquisition. Bilal Mazhar: conceptualization, methodology, formal analysis, writing—original draft, writing—review & editing. Yilin Ren: conceptualization, methodology, data collection. Inam Ul Haq: methodology, formal analysis, writing—review & editing.

Editorial Record

First submission received: February 22, 2025

Revision received: October 5, 2025

Accepted for publication: November 27, 2025

Editor in charge: David Smahel

Introduction

The rapid advancement of artificial intelligence (AI) has transformed digital interactions, with generative AI platforms emerging as powerful tools for content creation, recommendation systems, and conversational interfaces (Giannakos et al., 2025). These AI-driven technologies are widely integrated into social media, customer service, healthcare, and personalized marketing, significantly enhancing user engagement (Banh & Strobel, 2023). However, as generative AI becomes more sophisticated, concerns regarding privacy, trust, and data disclosure have intensified (Karami et al., 2025). Users interact with these platforms for various purposes, often sharing personal and sensitive information without fully understanding the potential risks (Sundar & J. Kim, 2019). While some users readily disclose their data, others exhibit hesitancy and concern over privacy breaches, algorithmic biases, and data misuse (Ali & Aysan, 2025). This divergence in behavioral intent raises an essential question: What factors influence users’ willingness to disclose personal information to generative AI systems? Addressing this question is critical for ensuring the responsible and ethical deployment of AI in human-centered applications.

One of the key psychological factors influencing disclosure decisions is trust in generative AI, defined as the belief that generative AI systems are competent, reliable, and act with integrity (Choung et al., 2023). Trust is foundational in human–technology interactions, particularly when users engage with AI systems that exhibit autonomy and empathy (Choi & Zhou, 2023). Perceived autonomy in generative AI refers to the extent to which users believe the system operates independently and makes decisions effectively (Dahlin, 2024), whereas perceived empathy refers to users’ perceptions of the AI’s ability to understand and respond to their emotions in a human-like manner (Yang et al., 2025). Both attributes shape user perceptions and may enhance trust in generative AI platforms. However, privacy concerns often act as a counterforce, discouraging self-disclosure even when users perceive the generative AI as autonomous or empathetic (Gieselmann & Sassenberg, 2023). Given this dual influence, a comprehensive framework is needed to examine how perceived autonomy and empathy drive trust and privacy concerns, which in turn shape behavioral intent to disclose personal information.

The Extended Technology Acceptance Model (ETAM) provides a coherent theoretical foundation for studying disclosure in generative AI (M. Ma, 2025). While the original Technology Acceptance Model (TAM) emphasizes perceived usefulness and ease of use as the primary drivers of adoption, it does not account for the relational and affective factors that are increasingly important in AI-mediated interactions (Shahzad et al., 2024). ETAM addresses these limitations by incorporating external variables such as trust and privacy concerns, thereby extending TAM to contexts where perceptions of risk and social connection are central (Wang et al., 2024). For example, Ursavaş et al. (2025) applied ETAM to generative AI adoption, introducing subjective norms, perceived enjoyment, self-efficacy, and compatibility as external factors and demonstrating the model’s flexibility in accommodating context-specific variables.

Building on this approach, the present study positions perceived autonomy and perceived empathy as antecedents of trust and privacy concerns. Drawing on trust theory, autonomy corresponds to ability, that is, users’ belief that generative AI can act competently and independently (Shukla et al., 2024), while empathy reflects benevolence, that is, the AI’s capacity to demonstrate concern and responsiveness (Inzlicht et al., 2024). By extending ETAM with these constructs, this study offers a more comprehensive framework for explaining disclosure decisions in generative AI, where judgments go beyond technical performance to include cognitive, emotional, and ethical considerations (Kayser & Gradtke, 2024).

While existing literature has extensively examined trust in generative AI and privacy concerns independently, research exploring their combined mediating effects on AI-driven disclosure decisions remains limited. Prior studies on AI trustworthiness have focused on explainability, transparency, and algorithmic fairness, but fewer have investigated how perceived autonomy and empathy uniquely shape user trust. Additionally, privacy calculus theory suggests that individuals weigh risks against benefits before disclosing personal information, yet this trade-off has rarely been examined in the context of generative AI platforms. Recent studies show that generative AI adoption is accelerating worldwide, from U.S. consumers’ social media engagement (Brüns & Meißner, 2024) to organizational use in Saudi firms (Albishri et al., 2025) and European communication sectors (Cusnir & Nicola, 2024). Despite this growing body of work, empirical research on Chinese users’ disclosure behaviors remains scarce. Given China’s leadership in AI adoption, understanding how trust and privacy concerns shape Chinese users’ interactions with generative AI is crucial (Yu & Zhai, 2024).

This study addresses these gaps by empirically testing a model that integrates perceived autonomy, perceived empathy, trust in AI, and privacy concerns within the ETAM framework. The focus is on users of text-based generative AI systems, such as ChatGPT, DeepSeek, and Doubao, which are widely used conversational and content-generation agents. As among the most widely adopted forms of generative AI, these systems provide a suitable context for examining disclosure behaviors. The study advances theoretical understanding and offers practical insights for AI ethics, platform design, and regulation, helping to ensure that user trust is balanced with privacy safeguards.

Literature Review

The Extended Technology Acceptance Model (ETAM)

The Technology Acceptance Model (TAM) has long provided a foundation for studying technology adoption, focusing on perceived usefulness and ease of use as key predictors of behavioral intention. While influential, TAM has been criticized for its narrow focus on functional evaluations, overlooking broader psychological, social, and ethical aspects of technology acceptance (Kayser & Gradtke, 2024). To address these gaps, the Extended Technology Acceptance Model (ETAM) incorporates external variables, such as trust, risk, or social influence, that reflect context-specific concerns beyond usability (Y. Liu & Mensah, 2024). This extension allows researchers to account for cognitive, emotional, and ethical factors that shape technology use in complex environments.

Recent studies highlight ETAM’s flexibility in adapting to emerging technologies. Research conducted by Ursavaş et al. (2025) extended TAM to generative AI by adding subjective norms, perceived enjoyment, self-efficacy, and compatibility as external factors. Similarly, Mu et al. (2024) demonstrated in autonomous driving systems that external factors like economic benefits and technological stability significantly influenced adoption, showing that user judgments extend beyond technical performance. Moreover, Hou et al. (2024) applied ETAM in healthcare, illustrating the importance of trust and perceived risk in shaping older adults’ engagement with AI health agents. Together, these studies confirm that ETAM provides a broad framework rather than prescribing fixed constructs.

Building on this perspective, the present study introduces perceived autonomy and perceived empathy as external variables particularly relevant for generative AI disclosure. Drawing on trust theory, autonomy corresponds to ability, reflecting competence and independent functioning (Shukla et al., 2024), while empathy corresponds to benevolence, reflecting human-like concern and responsiveness (Inzlicht et al., 2024). Integrating these constructs into ETAM as external factors enables a more comprehensive account of user disclosure decisions, in which judgments involve not only performance but also relational and ethical considerations (Mustofa et al., 2025).

Perceived Autonomy and Empathy in AI-Driven Self-Disclosure

The growing reliance on generative artificial intelligence (AI) has intensified discussions on the psychological factors that drive user engagement and self-disclosure. As generative AI systems become more sophisticated, users interact with them in ways that mirror human relationships, making perceived autonomy and perceived empathy critical in shaping behavioral intent (D. Chen et al., 2024). Understanding how these factors influence self-disclosure is essential for optimizing AI design and ensuring ethical interactions. The Extended Technology Acceptance Model (ETAM) provides a robust theoretical framework for examining how these perceptions influence users' willingness to disclose personal information, particularly by integrating trust and perceived risk into the traditional technology acceptance paradigm (M. Ma, 2025).

Perceived autonomy refers to the extent to which users believe generative AI operates independently, demonstrating intelligent decision-making and adaptability. When a generative AI exhibits autonomy, users are more likely to perceive it as competent, reliable, and capable of meaningful interaction (London & Heidari, 2024). Prior research on human-computer interaction suggests that autonomy enhances trust, a key determinant of user engagement (Capiola et al., 2023). Studies in social robotics and conversational agents indicate that users prefer systems that exhibit a degree of independence in their responses, as such features contribute to perceived intelligence and credibility (Dong & Wu, 2025). Autonomy fosters the perception that generative AI understands and aligns with user intentions, reducing uncertainty and increasing comfort in sharing personal information (Totschnig, 2020). This aligns with ETAM, in which trust in technology strengthens behavioral intent by mitigating perceived risks (Y. Liu & Mensah, 2024). In self-disclosure contexts, individuals often assess whether the recipient, human or AI, can process and respond appropriately to shared information. If a generative AI is perceived as autonomous, users may believe it is capable of handling personal disclosures meaningfully, increasing the likelihood of engagement (S. Zhang et al., 2024).

Similarly, perceived empathy plays a pivotal role in fostering user-AI relationships. Perceived empathy refers to users’ view of the generative AI's ability to understand, predict, and respond to human emotions, a perception that creates a sense of connection and psychological safety (Yang et al., 2025). Human interaction literature emphasizes that empathy reduces interpersonal barriers and increases willingness to share personal thoughts and feelings (W. B. Kim & Hur, 2024). Studies on AI-mediated communication suggest that when AI exhibits empathetic behavior, such as responding in emotionally appropriate ways or acknowledging user emotions, individuals perceive it as more trustworthy and relatable (Gong & Su, 2025). This is particularly relevant in AI-driven mental health platforms and customer service applications, where empathetic responses enhance user satisfaction and disclosure intent (Z. Zhang & Wang, 2024). Within the ETAM framework, perceived empathy strengthens trust and reduces perceived risk, aligning with findings that emotional intelligence in AI positively influences adoption and sustained engagement (Coker & Thakur, 2024). Users who perceive generative AI as empathetic may feel understood and supported, reducing their hesitation to disclose personal information. On this basis, we propose the following hypotheses:

H1: Perceived autonomy of generative AI positively influences behavioral intent to disclose personal information.

H2: Perceived empathy of generative AI positively influences behavioral intent to disclose personal information.

Perceived Autonomy, Trust, and Privacy Concerns in Generative AI

Trust has emerged as a critical determinant of user engagement with generative AI, and perceived autonomy plays a central role in shaping this trust. Autonomy reflects the extent to which users believe the system can operate intelligently and independently (Piller et al., 2024), whereas trust is understood as users’ belief in the system’s competence, reliability, and integrity (Choung et al., 2023). Prior research in human–AI interaction suggests that autonomy signals control and capability, enhancing perceptions of dependability and fairness (Hsieh & Lee, 2024; Shukla et al., 2024). Importantly, users often attribute anthropomorphic qualities to generative AI systems, perceiving them as human-like in their reasoning or decision-making (Xie et al., 2023). This anthropomorphism reinforces the impression of autonomy, thereby strengthening trust (Alabed et al., 2022). Within the Extended Technology Acceptance Model (ETAM), autonomy functions as an external variable that reduces uncertainty and increases user confidence in the system’s ability to perform tasks effectively without constant human oversight (Kelly et al., 2023). Situating autonomy in generative AI contexts thus clarifies its importance as an antecedent of trust.

At the same time, perceived autonomy may also influence users’ privacy concerns, albeit in a different direction. Privacy concerns arise when users feel a lack of control over their data, fearing misuse or unauthorized access (Dahlin, 2024). However, when generative AI is seen as autonomous, users may perceive it as less reliant on intrusive data collection and more capable of processing requests securely (Andreoni et al., 2024). Previous research on technology adoption has shown that users are less concerned about privacy risks when they believe a system operates transparently and independently (Vimalkumar et al., 2021). If generative AI is viewed as having the ability to function without constant human intervention, users may feel more comfortable sharing information, assuming that the system is designed to protect their privacy rather than exploit their data (Abdulai, 2025). This aligns with ETAM, where trust-related factors mitigate risk perceptions, influencing user attitudes toward disclosure (Ali & Aysan, 2025).

Despite extensive research on trust in technology, a gap remains in understanding how perceived autonomy operates within generative AI. Unlike traditional AI or automated systems designed for fixed, rule-based tasks, generative AI produces open-ended, creative, and often unpredictable outputs. This unpredictability introduces higher uncertainty, making user judgments about autonomy more complex than in prior contexts (Banh & Strobel, 2023). Existing studies link autonomy primarily to efficiency and usability (L. Chen et al., 2022), yet in generative AI, autonomy may simultaneously strengthen trust while heightening or alleviating privacy concerns (Hermann & Puntoni, 2025). Investigating this dual role is essential for clarifying disclosure intentions in generative AI environments. Therefore, we propose the following hypotheses:

H3: Perceived autonomy of generative AI positively influences trust in generative AI.

H4: Perceived autonomy of generative AI negatively influences privacy concerns.

Perceived Empathy, Trust, and Privacy Concerns in Generative AI

Empathy is a fundamental antecedent of trust in interpersonal relationships because it fosters connection, reduces perceived risks, and signals benevolence (Ringwald & Wright, 2021). Extending this logic to generative AI, perceived empathy refers to users’ impression of the system’s ability to recognize, understand, and respond to their emotions, and this impression emerges as a critical determinant of trust (Yang et al., 2025). When users perceive generative AI as empathetic, they believe it acknowledges their needs and concerns, thereby creating a sense of emotional security and reliability (Gong & Su, 2025). Human–AI interaction studies further suggest that empathetic responsiveness strengthens engagement and makes systems appear more trustworthy (Hsieh & Lee, 2024). Importantly, users often attribute anthropomorphic qualities to generative AI, perceiving its empathetic behavior as evidence of human-like understanding, which further reinforces trust (Gu et al., 2024). Similarly, research conducted by N. Ma et al. (2025) finds that the more empathetic an AI system appears, the more users assign it human-like qualities, which in turn enhances trust. Within the Extended Technology Acceptance Model (ETAM), empathy functions as an external factor that reduces uncertainty and deepens relational perceptions, making trust a pivotal mediator of user behavior (An et al., 2023).

Beyond trust, perceived empathy also influences privacy concerns, often in a paradoxical manner. On one hand, empathetic generative AI responses can make users feel valued and understood, reinforcing positive engagement. On the other hand, high levels of empathy may raise concerns about how much personal data the AI system is processing to generate such human-like responses (Karami et al., 2025). Users might question whether the generative AI has excessive access to their data or is capable of emotional manipulation, leading to heightened privacy concerns. However, when empathy is perceived positively, where users believe the system’s responses are ethical and non-intrusive, it can reduce privacy anxieties by signaling that the AI respects user boundaries rather than exploiting sensitive information (Constantinides et al., 2024). Prior research suggests that transparency in AI-driven interactions moderates this effect, where empathetic AI that clearly communicates its data usage policies mitigates privacy concerns (Xu et al., 2025). Within ETAM, perceived risk and trust are opposing forces, and empathy contributes to trust enhancement while simultaneously alleviating concerns about data security (Coker & Thakur, 2024).

Despite extensive research on trust in AI, the interplay between perceived empathy, trust, and privacy concerns in generative AI remains underexplored. Earlier AI and chatbots primarily relied on scripted, rule-based responses, whereas generative AI produces emotionally nuanced outputs by incorporating complex emotional intelligence, enabling richer interactions through understanding and responding to emotions (Belainine et al., 2020). These unique features heighten both trust-building potential and privacy concerns, making it essential to examine empathy’s role in this new context. Based on this, we propose the following hypotheses:

H5: Perceived empathy of generative AI positively influences trust in generative AI.

H6: Perceived empathy of generative AI negatively influences privacy concerns.

Trust, Privacy Concerns, and Disclosure Intent in Generative AI

Trust plays a central role in determining users' behavioral intent to disclose personal information. When users trust a system, they are more likely to share sensitive data, believing that the system will handle it responsibly and securely (Li et al., 2024). In the case of generative AI, users who trust the platform are more inclined to provide personal information, as they believe the system can process and respond appropriately to their inputs. Trust reduces uncertainty, creating a sense of safety in disclosing sensitive data (Gupta & Mukherjee, 2025). Prior research in the fields of online platforms and digital communication has consistently demonstrated that trust significantly influences self-disclosure behaviors, as users are more likely to engage in activities such as sharing personal information when they perceive the system as reliable and secure (Oesterreich et al., 2025). Within the Extended Technology Acceptance Model (ETAM), trust is a critical external factor that mediates the relationship between perceived system characteristics (such as autonomy and empathy) and user behavior (Hou et al., 2024). When trust is established, it facilitates positive intentions, including disclosure behaviors, making it a crucial mediator in the context of generative AI (D. Kim et al., 2024).

However, despite the importance of trust, privacy concerns act as a significant barrier to users’ willingness to disclose personal information. Privacy concerns arise when users feel that their personal data is at risk of misuse or unauthorized access (Shin et al., 2025). In the context of generative AI, users may hesitate to share sensitive information if they perceive the platform as vulnerable to data breaches or misuse. Research has shown that higher privacy concerns are negatively correlated with disclosure intent, as users tend to withhold personal information from platforms they deem unsafe or untrustworthy (Huynh, 2024). Generative AI platforms, despite their increasing sophistication, are not immune to privacy concerns, particularly as users are becoming more aware of the risks associated with digital data sharing (D. Kim et al., 2024). The ETAM framework helps explain this relationship, where privacy concerns can act as a counteracting force to trust, reducing the likelihood of disclosure even if the system is perceived as reliable (Y. Chen et al., 2023). When users are concerned about the privacy of their data, their trust in the platform’s intentions and capabilities may not be enough to overcome these fears (Shin et al., 2025). On the basis of this, we propose the following hypotheses:

H7: Trust in generative AI positively influences behavioral intent to disclose personal information.

H8: Privacy concerns negatively influence behavioral intent to disclose personal information on generative AI platforms.

Trust as a Mediator in Behavioral Intent to Disclose on Generative AI

Trust is a key mediator in the relationship between perceived autonomy and behavioral intent to disclose personal information in the context of generative AI (Lalot & Bertram, 2024). When users perceive autonomy in an AI system, believing it operates independently and makes informed decisions, they are more likely to trust it. Trust, in turn, strengthens the likelihood that users will disclose personal information. Perceived autonomy signals that the system is competent and reliable, two characteristics essential for fostering trust in technology (Afroogh et al., 2024). The Extended Technology Acceptance Model (ETAM) posits that external factors, such as trust, influence users’ intentions and behaviors. Trust in generative AI can reduce uncertainty, allowing users to feel more comfortable sharing personal information, as they believe the AI will handle their data responsibly and securely (Cao & Peng, 2025). Autonomy can thus increase trust, which in turn enhances the intention to disclose, making trust a crucial mediator in this process. Studies on technology adoption and trust dynamics have consistently shown that perceived autonomy boosts user trust, which mediates disclosure behaviors, indicating the importance of trust as a psychological mechanism driving self-disclosure intentions (Janson & Barev, 2026).

Similarly, perceived empathy also influences trust and subsequently impacts disclosure behavior. When users perceive that generative AI is empathetic, capable of understanding and responding to their emotions, they are more likely to trust the platform, believing it will act in their best interests. Empathy enhances user engagement and makes the AI appear more human-like, reducing emotional barriers to disclosure (Seok et al., 2025). Trust, as a mediator, plays a pivotal role in translating perceived empathy into behavioral intent (Pelau et al., 2024). According to ETAM, trust in technology is shaped by external factors such as empathy, and this trust influences user intentions (Hou et al., 2024). The empathy–trust–disclosure pathway highlights how empathetic behaviors of the AI encourage a sense of relational closeness, leading to greater willingness to share personal information (Safdari, 2025). When users feel emotionally connected to the system, their trust in it increases, thereby mediating the relationship between empathy and the intention to disclose personal data (Jeon, 2024). This underscores the importance of trust in enhancing the impact of perceived empathy on disclosure intentions. On this basis, we propose the following hypotheses:

H9a: Trust in generative AI mediates the relationship between perceived autonomy and behavioral intent to disclose personal information.

H9b: Trust in generative AI mediates the relationship between perceived empathy and behavioral intent to disclose personal information.

Privacy Concerns as a Mediator in Behavioral Intent to Disclose on Generative AI

Privacy concerns can mediate the relationship between perceived autonomy and behavioral intent to disclose personal information in generative AI platforms. Perceived autonomy refers to the belief that the generative AI operates independently, which generally enhances trust and increases the willingness to share personal data (Pan et al., 2025). However, even if autonomy increases trust, privacy concerns can act as a barrier. Users may be cautious about disclosing personal information if they fear that the AI system could misuse or inadequately protect their data (Prunkl, 2024). The Extended Technology Acceptance Model (ETAM) suggests that external factors, such as privacy concerns, can influence behavioral intent by either facilitating or inhibiting the disclosure process (Y. Liu & Mensah, 2024). In this context, if users perceive generative AI autonomy as providing security and reducing risks to their data, they may be more likely to disclose information. However, when privacy concerns remain high, these concerns may override the trust that autonomy builds, preventing disclosure (Huynh, 2024). This mediation emphasizes the crucial role of privacy concerns in determining how perceived autonomy affects disclosure behavior.

Perceived empathy in generative AI can influence privacy concerns, which in turn mediate the relationship between empathy and behavioral intent to disclose personal information (Asman et al., 2025). Empathetic AI, which understands and responds to human emotions, fosters trust and emotional safety. However, users may still hesitate to disclose personal data due to privacy concerns (Chung & Kang, 2023). According to ETAM, privacy concerns can weaken the impact of trust and empathy on behavioral intentions. Even when users feel emotionally supported, privacy concerns can suppress data sharing (Lu & Wang, 2022). If generative AI’s empathetic responses are perceived as ethical and transparent, privacy concerns may decrease, encouraging disclosure (Coker & Thakur, 2024). Thus, while empathy can enhance disclosure likelihood, its effect depends on mitigating privacy concerns, which can either facilitate or hinder the process. Based on this, we propose the following hypotheses:

H10a: Privacy concerns mediate the relationship between perceived autonomy and behavioral intent to disclose personal information.

H10b: Privacy concerns mediate the relationship between perceived empathy and behavioral intent to disclose personal information.
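Hypotheses H9a to H10b each posit an indirect path (for example, autonomy → trust → disclosure). How the indirect effects are estimated is not described in this section; one common approach is to bootstrap the product of the a path (predictor → mediator) and the b path (mediator → outcome, controlling for the predictor) and treat mediation as supported when the percentile confidence interval excludes zero. The sketch below assumes observed composite scores and ordinary least squares; the function names are hypothetical, and it is not presented as the authors' procedure.

```python
import numpy as np


def _ols_slopes(X, y):
    """OLS slopes of y on the columns of X (an intercept is fitted, then dropped)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef[1:]


def indirect_effect(x, m, y):
    """a*b, where a = slope of m on x and b = slope of y on m controlling for x."""
    a = _ols_slopes(x[:, None], m)[0]
    b = _ols_slopes(np.column_stack([x, m]), y)[1]
    return a * b


def bootstrap_indirect(x, m, y, n_boot=2000, ci=0.95, seed=0):
    """Point estimate and percentile bootstrap CI for the indirect effect a*b."""
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    rng = np.random.default_rng(seed)
    n = len(x)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        boots[i] = indirect_effect(x[idx], m[idx], y[idx])
    tail = (1.0 - ci) / 2.0
    lo, hi = np.percentile(boots, [100 * tail, 100 * (1 - tail)])
    return indirect_effect(x, m, y), lo, hi
```

Here `x`, `m`, and `y` would be, for instance, per-respondent scores for perceived autonomy, trust, and disclosure intent; a confidence interval lying entirely above or below zero is read as evidence of mediation.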

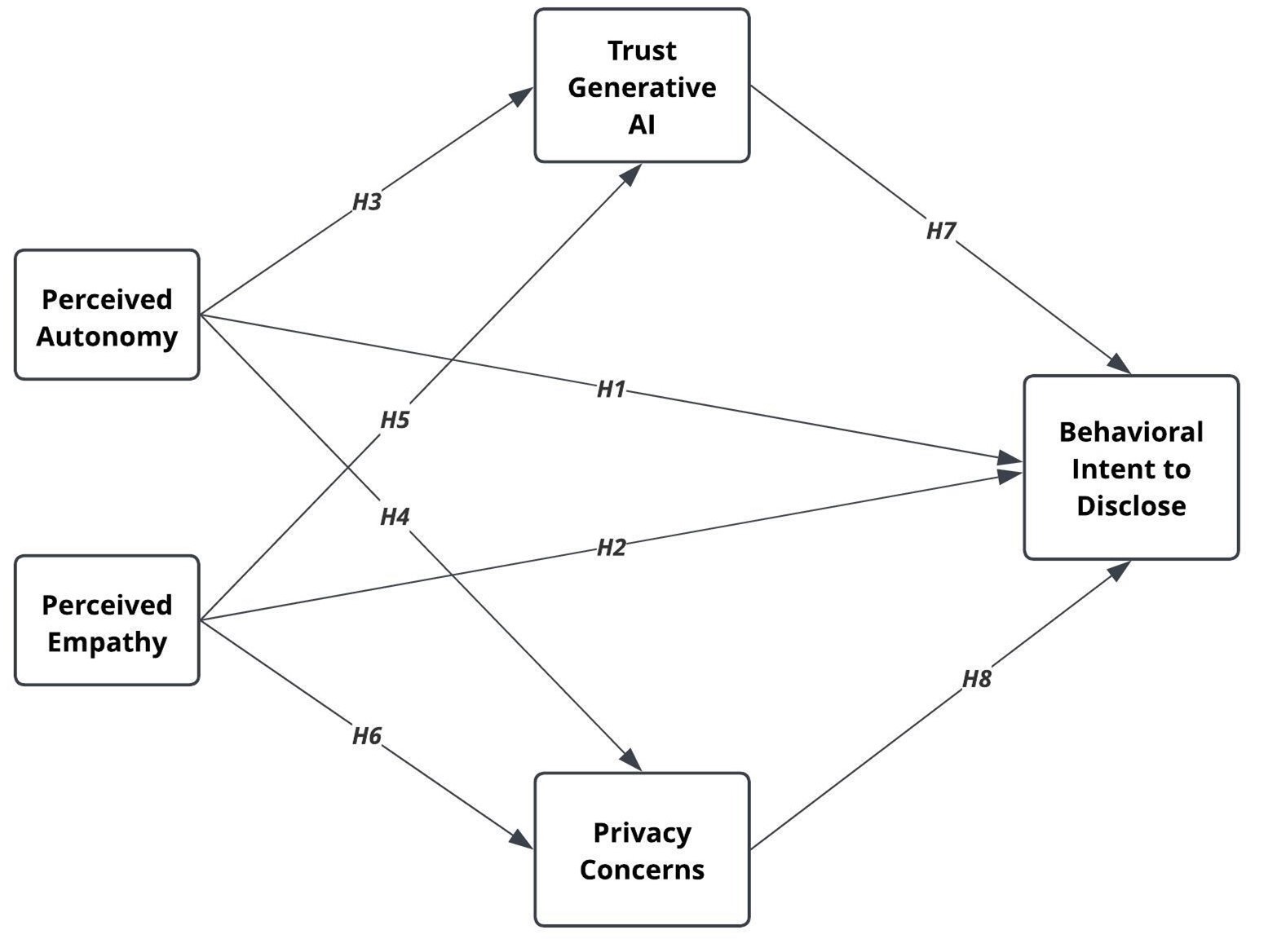

Figure 1. Hypothesized Conceptual Model.

Methods

Information of Study Samples

The study gathered data from a diverse group of generative AI platform users across China, with the aim of covering various geographic and demographic groups. A target sample size of 1,100 participants was chosen based on a 95% confidence level and a 5% margin of error.
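The formula behind the 1,100 target is not named. A common reference point is Cochran's formula with maximum variability (p = 0.5). The sketch below assumes that formula and omits any finite-population correction. It returns the minimum n for a given confidence level and margin of error (385 for 95% and 5%), and the margin of error implied by a given n (about 3 percentage points at n = 1,034). The function names are illustrative.

```python
import math
from statistics import NormalDist


def cochran_sample_size(confidence=0.95, margin=0.05, p=0.5):
    """Cochran's n0 = z^2 * p * (1 - p) / e^2, rounded up (large-population case)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


def margin_for_n(n, confidence=0.95, p=0.5):
    """Margin of error implied by a simple random sample of size n."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * math.sqrt(p * (1 - p) / n)
```

Under these assumptions, `cochran_sample_size()` gives the conventional minimum, and a larger target leaves room for respondents excluded by the quality checks.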

To ensure data quality, a screening question was placed at the beginning of the survey asking whether participants used text-based generative AI applications (e.g., ChatGPT, DeepSeek, Doubao); only users answering yes proceeded. Additional attention checks were incorporated, including an illogical item (e.g., Do you study for 50 hours a day?) and a control item requiring a specific response (e.g., disagree). Respondents failing these checks or completing the survey in under 180 seconds were excluded. These procedures improved reliability, yielding a final valid sample of 1,034 generative AI users.

To ensure the sample represented various regions of China, a quota sampling method was used, adjusted based on data from the 7th National Population Census (2021). The sample was distributed across seven regions, following population proportions: Northwest China (7.34%), Southwest China (14.55%), Central China (15.86%), North China (12.01%), South China (13.21%), East China (30.04%), and Northeast China (6.99%).
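Turning census proportions into whole-number quotas requires a rounding rule, and none is specified. The sketch below assumes the largest-remainder (Hamilton) method. It works in hundredths of a percent so that the arithmetic is exact. `REGION_SHARES` restates the percentages above, and `allocate_quotas` is a hypothetical helper. Its output is illustrative and need not match the realized counts in Table 1.

```python
# Regional shares from the text, in hundredths of a percent (they sum to 10,000).
REGION_SHARES = {
    "Northwest China": 734,
    "Southwest China": 1455,
    "Central China": 1586,
    "North China": 1201,
    "South China": 1321,
    "East China": 3004,
    "Northeast China": 699,
}


def allocate_quotas(n, shares):
    """Largest-remainder allocation of n respondents across strata."""
    total = sum(shares.values())
    quotas = {}
    remainders = []
    for region, share in shares.items():
        q, r = divmod(n * share, total)  # exact integer floor and remainder
        quotas[region] = q
        remainders.append((r, region))
    leftover = n - sum(quotas.values())
    # Give the remaining seats to the strata with the largest remainders.
    for _, region in sorted(remainders, reverse=True)[:leftover]:
        quotas[region] += 1
    return quotas
```

Calling `allocate_quotas(1100, REGION_SHARES)` would give quotas for the original target, and `allocate_quotas(1034, REGION_SHARES)` would give quotas for the final sample size.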

In addition to regional balance, the sample was stratified by demographic factors, including age, gender, income, time spent using generative AI in the past week, and overall AI experience. This was intended to yield a sample that is diverse geographically and socioeconomically and spans different levels of engagement, which supports the generalizability of the study’s findings. A detailed breakdown of the sample's demographic characteristics is provided in Table 1.

Table 1. Basic Information of Samples.

| Variable | Category | N | Percent (%) |
|---|---|---|---|
| Gender | Male | 521 | 50.39 |
| | Female | 513 | 49.62 |
| Age | 19 Years or Below | 13 | 1.27 |
| | 20–29 Years | 416 | 40.23 |
| | 30–39 Years | 451 | 43.62 |
| | 40–49 Years | 96 | 9.28 |
| | 50–59 Years | 54 | 5.22 |
| | 60 Years or Above | 4 | 0.38 |
| Education | Primary or Junior High School | 5 | 0.49 |
| | High, Technical, or Vocational School | 29 | 2.80 |
| | Associate Degree | 71 | 6.86 |
| | Bachelor's Degree | 711 | 68.76 |
| | Master's or Doctorate Degree | 218 | 21.00 |
| Region | North China | 124 | 12.01 |
| | Northeast China | 71 | 6.90 |
| | South China | 137 | 13.21 |
| | Central China | 164 | 15.86 |
| | East China | 311 | 30.04 |
| | Northwest China | 76 | 7.34 |
| | Southwest China | 151 | 14.55 |
| Annual Income | Below 10,000 CNY | 78 | 7.54 |
| | 10,000–50,000 CNY | 84 | 8.12 |
| | 60,000–100,000 CNY | 197 | 19.06 |
| | 100,000–150,000 CNY | 297 | 28.72 |
| | 150,000–200,000 CNY | 232 | 22.44 |
| | Above 210,000 CNY | 146 | 14.12 |
| Gen AI Usage Per Day | Less than 5 minutes | 12 | 1.16 |
| | 5–10 minutes | 71 | 6.87 |
| | 11–30 minutes | 217 | 20.98 |
| | 31–60 minutes | 275 | 26.60 |
| | 1–3 hours | 317 | 30.66 |
| | 3–5 hours | 103 | 9.96 |
| | More than 5 hours | 39 | 3.77 |
| Using Gen AI Since | Last month | 26 | 2.51 |
| | 1–3 months ago | 41 | 3.97 |
| | 4–6 months ago | 77 | 7.45 |
| | 7–12 months ago | 224 | 21.66 |
| | 1–2 years ago | 435 | 42.07 |
| | More than 2 years ago | 231 | 22.34 |
| Total | | 1,034 | 100 |

Data Collection

Participants for this study were recruited through Credamo, a well-known paid data collection platform widely used in China, over a two-day period from January 16 to January 17, 2025. Credamo is employed by researchers from over 3,000 universities globally and offers access to a diverse respondent pool of more than 1.5 million users. Similar to platforms like Qualtrics and Amazon Mechanical Turk (MTurk), Credamo provides a wide range of participants from different age groups, geographic regions, and professional backgrounds. This platform was selected for its strong data validation processes, accurate targeting capabilities, and integrated statistical tools, making it a reliable choice for academic research (Z. Chen et al., 2024).

As the survey scales were adapted from published English-language research papers, a native Chinese speaker proficient in English translated the items into Chinese to ensure linguistic and conceptual equivalence. The survey was then administered in Chinese, the primary language of education and official communication in China, to ensure that all respondents could clearly understand the questions. All items were measured on a five-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The full measurement scale, including both the original English items and their Chinese translations, is provided in Table 1 in the Appendix.

Before participating, respondents were provided with detailed information about the study’s objectives, their rights, and measures for ensuring data confidentiality. They were assured that their responses would remain anonymous, participation was voluntary, and they could withdraw at any time without facing any negative consequences. This ethical approach helped build trust and engagement, which ultimately contributed to the accuracy and reliability of the collected data.

Composite Reliability and Validity of Measurement Scale

To ensure the accuracy and reliability of the measurement model, we evaluated both construct reliability and validity following the guidelines of Fornell and Larcker (1981). Composite reliability (rho_c) was used to assess the internal consistency of each construct, with values of .70 or higher considered acceptable (S. Liu & Wang, 2016). All constructs exceeded this threshold, with composite reliability values ranging from .730 to .948, indicating a high degree of internal consistency across the constructs. Additionally, Cronbach’s Alpha coefficients were all above .70, further supporting the stability and consistency of the measurement scale (Fornell & Larcker, 1981). These findings demonstrate that the items within each construct consistently measure the intended concepts, reinforcing the reliability of the scales used in this study.
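Readers checking these thresholds can compute composite reliability and Cronbach's alpha with the standard formulas below. This is a minimal sketch. It assumes standardized outer loadings for rho_c and a respondents-by-items score matrix for alpha. The function names are illustrative and do not come from any particular SEM package.

```python
import numpy as np


def composite_reliability(loadings):
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    num = lam.sum() ** 2
    return float(num / (num + (1.0 - lam ** 2).sum()))


def cronbach_alpha(items):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of the total score)."""
    x = np.asarray(items, dtype=float)  # shape: (n_respondents, k_items)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()
    total_var = x.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - item_var / total_var))
```

For example, three items that each load .80 give rho_c = 5.76 / 6.84 ≈ .84.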

In addition to assessing reliability, we examined the average variance extracted (AVE) and factor loadings to establish convergent validity. According to Fornell and Larcker (1981) and Hair et al. (2014), an AVE above .50 indicates that a construct captures more than half of the variance in its indicators, suggesting that it meaningfully represents the underlying concept. In this study, AVE values ranged from .532 to .826, all exceeding the .50 threshold. Moreover, the factor loadings for all items were above .70, indicating that each item is strongly associated with its respective construct (Hair et al., 2014). Together, these results support the convergent validity of the measurement model and indicate that the constructs adequately represent the theoretical concepts they are meant to measure.
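AVE is the mean of the squared standardized loadings. The minimal sketch below checks it against the two thresholds used above. The default cutoffs come from the text, and the function names are hypothetical.

```python
import numpy as np


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings of one construct."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def convergent_validity_ok(loadings, ave_min=0.50, loading_min=0.70):
    """True if AVE exceeds ave_min and every loading exceeds loading_min."""
    lam = np.asarray(loadings, dtype=float)
    return bool(average_variance_extracted(lam) > ave_min and np.all(lam > loading_min))
```

The two rules are closely linked. A loading of .70 corresponds to a squared loading of .49, so a construct whose loadings all exceed .70 will have an AVE close to or above .50.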

Overall, the results from the composite reliability, Cronbach’s Alpha, AVE, and factor loading analyses confirm that the measurement model is both reliable and valid. The detailed results are provided in Table 2 in the Appendix, which illustrates the strong internal consistency and convergent validity of the constructs. These findings support the robustness of the measurement model, indicating that the constructs effectively represent the underlying theoretical concepts, as emphasized by Hair et al. (2014) and Fornell and Larcker (1981).

To assess discriminant validity, both the Heterotrait-Monotrait (HTMT) ratio and the Fornell-Larcker Criterion were used, following established guidelines. The HTMT ratios in this study ranged from .496 to .816, all falling below the threshold of .85 recommended by Henseler et al. (2015) and Benitez et al. (2020). This indicates that the constructs are sufficiently distinct, with no significant overlap between the measured variables. These results confirm the discriminant validity of the constructs, ensuring that each variable captures a unique aspect of the theoretical framework.
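The HTMT ratio compares the mean correlation between items of two different constructs with the geometric mean of the mean correlations among items within each construct (Henseler et al., 2015). This is a minimal sketch. It assumes an item-level correlation matrix, at least two items per construct, and positively keyed items. The function name and index layout are illustrative.

```python
import numpy as np


def htmt(R, items_a, items_b):
    """Heterotrait-monotrait ratio for constructs A and B.

    R       : item-by-item correlation matrix covering all items
    items_a : indices of construct A's items (at least two)
    items_b : indices of construct B's items (at least two)
    """
    R = np.asarray(R, dtype=float)
    # Mean correlation between items of A and items of B (heterotrait).
    hetero = R[np.ix_(items_a, items_b)].mean()

    def monotrait(items):
        # Mean of the off-diagonal correlations among one construct's items.
        block = R[np.ix_(items, items)]
        upper = np.triu_indices(len(items), k=1)
        return block[upper].mean()

    return float(hetero / np.sqrt(monotrait(items_a) * monotrait(items_b)))
```

Values below the .85 threshold cited above would be read as evidence that two constructs are empirically distinct.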

Additionally, the Fornell-Larcker Criterion was applied, which requires that the square root of the Average Variance Extracted (AVE) for each construct be greater than the correlations between that construct and the other constructs (Fornell & Larcker, 1981). The results confirm that this criterion is met, further supporting the discriminant validity of the measurement model. Table A3 in the Appendix shows the results in detail.
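The Fornell-Larcker check compares each construct's square-root AVE with its absolute correlations with every other construct. This is a minimal sketch. It assumes an AVE vector and a construct-level correlation matrix in the same order. The function name is hypothetical.

```python
import numpy as np


def fornell_larcker_ok(ave, corr):
    """True if sqrt(AVE) of every construct exceeds its absolute correlations
    with all other constructs (Fornell & Larcker, 1981)."""
    root_ave = np.sqrt(np.asarray(ave, dtype=float))
    corr = np.abs(np.asarray(corr, dtype=float))
    for i in range(len(root_ave)):
        others = np.delete(corr[i], i)  # drop the diagonal entry
        if np.any(others >= root_ave[i]):
            return False
    return True
```

For instance, constructs with AVEs of .64 and .49 (square roots .80 and .70) pass when their correlation is .50, and fail when it is .75.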

Testing Model Fit

Model fit was assessed using multiple indicators. The SRMR value of .031 is well below the recommended threshold of .08, indicating minimal discrepancy between the observed and model-implied correlations. The Normed Fit Index (NFI) of .959 exceeds the commonly accepted cutoff of .90, further supporting model fit. The Root Mean Square Error of Approximation (RMSEA) of .045 falls below commonly used cutoffs such as .06 and .08. Together, these results indicate that the estimated model represents the relationships among the constructs effectively and fits the data well.
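The three indices follow standard formulas. The software that produced them is not stated, so the sketch below assumes the usual covariance-based definitions. RMSEA and NFI are computed from the model and baseline (null) chi-square statistics. SRMR is computed from residuals between observed and model-implied correlations. The function names are illustrative, and the inputs in the usage note are hypothetical, not values from this study.

```python
import math

import numpy as np


def rmsea(chi2, df, n):
    """Root mean square error of approximation (some programs use n instead of n - 1)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def nfi(chi2_model, chi2_null):
    """Normed fit index: proportional chi-square improvement over the null model."""
    return (chi2_null - chi2_model) / chi2_null


def srmr(observed_corr, implied_corr):
    """Root mean square of observed minus model-implied correlations,
    taken over the lower triangle including the diagonal."""
    resid = np.asarray(observed_corr, float) - np.asarray(implied_corr, float)
    rows, cols = np.tril_indices(resid.shape[0])
    return float(np.sqrt(np.mean(resid[rows, cols] ** 2)))
```

For example, a hypothetical chi-square of 100 on 50 degrees of freedom with N = 101 gives `rmsea(100, 50, 101)` = .10.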

Results

Descriptive and Correlation Analysis

The descriptive statistics in Table 2 provide an overview of the core variables. Perceived Autonomy (PAU) showed a moderate mean (M = 3.47, SD = 0.96), while Perceived Empathy (PE; M = 4.13, SD = 0.54) and Trust in Generative AI (TGA; M = 4.06, SD = 0.50) indicated relatively higher levels. Privacy Concerns (PC) had the lowest mean (M = 3.03, SD = 1.24), suggesting moderate concern, while Behavioral Intent to Disclose (BID; M = 3.43, SD = 0.92) reflected moderate disclosure intentions. Skewness (−1.267 to −0.089) and kurtosis (−1.643 to 1.800) values were within acceptable ranges, indicating approximate normality.
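Skewness and excess kurtosis are computed from central moments. The sketch below assumes the simple moment-based (population) estimators. Many statistical packages apply small-sample adjustments, so its values may differ slightly from those in Table 2. The function name is illustrative.

```python
import numpy as np


def skewness_kurtosis(x):
    """Moment-based skewness and excess kurtosis (population estimators)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    m2 = np.mean(d ** 2)
    m3 = np.mean(d ** 3)
    m4 = np.mean(d ** 4)
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    return float(skew), float(excess_kurtosis)
```

A negative excess kurtosis, such as the −1.643 reported for PC, indicates a flatter-than-normal distribution of scores.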

The correlation analysis revealed significant relationships consistent with the conceptual model. BID was positively correlated with PAU (r = .500), PE (r = .510), and TGA (r = .540), indicating that higher perceptions of autonomy, empathy, and trust are associated with stronger disclosure intentions, and it was strongly negatively correlated with PC (r = −.733). PAU and PE were positively correlated (r = .392), while TGA and PC were negatively correlated (r = −.548), indicating that higher trust is associated with lower privacy concerns.

Overall, the descriptive and correlational results are consistent with the study’s conceptual model and support proceeding to hypothesis testing.

Table 2. Descriptive and Correlation Analysis of the Core Variables.

| | Variables | Mean | SD | Skewness | Kurtosis | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PAU | 3.47 | 0.958 | −0.547 | −0.797 | 1 | | | | |
| 2 | PE | 4.13 | 0.543 | −1.042 | 1.489 | .392** | 1 | | | |
| 3 | TGA | 4.06 | 0.502 | −1.267 | 1.800 | .412** | .591** | 1 | | |
| 4 | PC | 3.03 | 1.244 | −0.089 | −1.643 | −.450** | −.461** | −.548** | 1 | |
| 5 | BID | 3.43 | 0.918 | −0.475 | −0.791 | .500** | .510** | .540** | −.733** | 1 |

Note. PAU = Perceived Autonomy; PE = Perceived Empathy; TGA = Trust in Generative AI; PC = Privacy Concerns; BID = Behavioral Intent to Disclose. **p < .01.
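The normality screen described above can be reproduced from the values in Table 2. The Python sketch below assumes a ±2 rule of thumb for both skewness and kurtosis (the study does not state its exact cutoff) and adds a simple moment-based estimator for readers working from raw item scores; statistical packages such as SPSS apply small-sample corrections, so their values will differ slightly from this estimator. The names `within_bounds` and `moment_shape` are illustrative.

```python
import statistics

# Skewness and kurtosis as reported in Table 2
reported = {
    "PAU": (-0.547, -0.797),
    "PE": (-1.042, 1.489),
    "TGA": (-1.267, 1.800),
    "PC": (-0.089, -1.643),
    "BID": (-0.475, -0.791),
}


def within_bounds(shape_stats, limit=2.0):
    """Flag each variable whose |skewness| and |kurtosis| are both within the assumed limit."""
    return {name: abs(s) <= limit and abs(k) <= limit for name, (s, k) in shape_stats.items()}


def moment_shape(values):
    """Moment-based skewness g1 and excess kurtosis g2 (no small-sample correction)."""
    mean = statistics.fmean(values)
    m2 = statistics.fmean((v - mean) ** 2 for v in values)
    m3 = statistics.fmean((v - mean) ** 3 for v in values)
    m4 = statistics.fmean((v - mean) ** 4 for v in values)
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


print(within_bounds(reported))
print(moment_shape([1, 2, 3, 4, 5]))  # symmetric toy data: skewness 0, negative excess kurtosis
```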

Assessment of the Structural Model

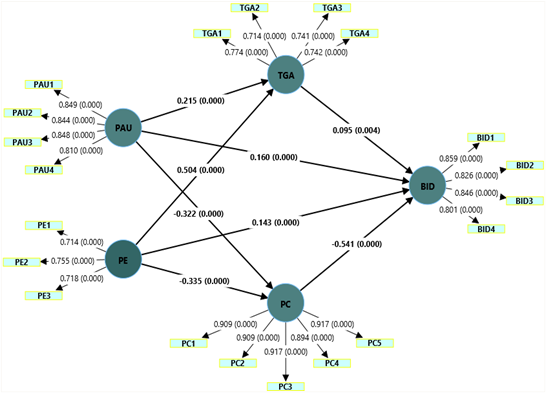

The results in Table 3 indicate that Perceived Autonomy positively influences Behavioral Intent to Disclose (β = .160, t = 6.511, p < .001): users who perceive generative AI as more autonomous report stronger intentions to disclose personal information. This supports Hypothesis 1. Similarly, Perceived Empathy positively influences Behavioral Intent to Disclose (β = .143, t = 4.864, p < .001), supporting Hypothesis 2: users who perceive generative AI as more empathetic report greater willingness to disclose personal information.

Perceived Autonomy also positively influences Trust in Generative AI (β = .215, t = 7.532, p < .001), supporting Hypothesis 3: greater perceived autonomy is associated with stronger trust in these systems. Conversely, Perceived Autonomy negatively influences Privacy Concerns (β = −.322, t = 10.456, p < .001), supporting Hypothesis 4: users who perceive generative AI as more autonomous report lower privacy concerns.

Perceived Empathy positively influences Trust in Generative AI (β = .504, t = 17.002, p < .001), supporting Hypothesis 5; this is the largest positive path in the model, underscoring the role of empathy in fostering trust in generative AI. Perceived Empathy also negatively influences Privacy Concerns (β = −.335, t = 12.312, p < .001), supporting Hypothesis 6: users who perceive generative AI as more empathetic report lower privacy concerns.

Trust in Generative AI positively influences Behavioral Intent to Disclose (β = .095, t = 2.890, p = .004), supporting Hypothesis 7, although this is the smallest direct effect in the model. Finally, Privacy Concerns negatively influence Behavioral Intent to Disclose (β = −.541, t = 19.205, p < .001), supporting Hypothesis 8. This is the strongest path in the model: higher privacy concerns are associated with markedly lower willingness to disclose personal information to generative AI.
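The t-values and p-values in Table 3 can be related to the coefficients and standard deviations with a short calculation. The Python sketch below assumes that t is the ratio of the coefficient to its (bootstrap) standard deviation and that p-values are two-tailed under a standard normal reference distribution, a common large-sample approximation; the study does not specify its exact procedure, and the small gaps between β/SD and the reported t-values are consistent with SD being rounded to three decimals. The function names are illustrative.

```python
import math


def t_value(beta, sd):
    """Ratio of a path coefficient to its standard deviation (absolute value)."""
    return abs(beta / sd)


def two_tailed_p(t):
    """Two-tailed p-value under a standard normal reference: P(|Z| > |t|)."""
    return math.erfc(abs(t) / math.sqrt(2))


# H7 in Table 3: beta = .095, SD = 0.033, reported t = 2.890, p = .004
print(round(t_value(0.095, 0.033), 3))  # close to, but not identical with, the reported 2.890
print(round(two_tailed_p(2.890), 3))    # matches the reported p = .004
```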

Table 3. Results of Direct Effects.

| Hypothesis | Relationship | β | SD | t-value | p-value | Result |
|---|---|---|---|---|---|---|
| H1 | Perceived Autonomy → Behavioral Intent to Disclose | .160 | 0.025 | 6.511 | < .001 | Significant |
| H2 | Perceived Empathy → Behavioral Intent to Disclose | .143 | 0.029 | 4.864 | < .001 | Significant |
| H3 | Perceived Autonomy → Trust in Generative AI | .215 | 0.029 | 7.532 | < .001 | Significant |
| H4 | Perceived Autonomy → Privacy Concerns | −.322 | 0.031 | 10.456 | < .001 | Significant |
| H5 | Perceived Empathy → Trust in Generative AI | .504 | 0.030 | 17.002 | < .001 | Significant |
| H6 | Perceived Empathy → Privacy Concerns | −.335 | 0.027 | 12.312 | < .001 | Significant |
| H7 | Trust in Generative AI → Behavioral Intent to Disclose | .095 | 0.033 | 2.890 | .004 | Significant |
| H8 | Privacy Concerns → Behavioral Intent to Disclose | −.541 | 0.028 | 19.205 | < .001 | Significant |

Note. *p < .05; **p < .01; ***p < .001

Figure 2. Path Analysis of Structural Model.

The structural equation modeling results support the mediating hypotheses tested in this study (Table 4). Perceived Autonomy indirectly influenced Behavioral Intent to Disclose through both Trust in Generative AI and Privacy Concerns. The indirect effect through trust was small but significant (β = .020, t = 2.589, p = .010): autonomy was associated with higher trust, which in turn was associated with stronger disclosure intentions. The indirect effect through privacy concerns was larger (β = .174, t = 9.200, p < .001): autonomy was associated with lower privacy concerns, and lower concerns with stronger disclosure intentions, so the product of these two negative paths yields a positive indirect effect. Because the direct effect of Perceived Autonomy on disclosure remained significant, these findings indicate partial mediation: autonomy relates to disclosure both directly and by raising trust and lowering privacy concerns, which jointly facilitate willingness to share personal information.

Parallel effects were observed for Perceived Empathy, which indirectly influenced Behavioral Intent to Disclose through Trust in Generative AI (β = .048, t = 2.851, p = .004) and through Privacy Concerns (β = .181, t = 10.103, p < .001). Users who perceived generative AI systems as empathetic reported stronger trust and lower privacy concerns, both of which were associated with greater disclosure. Because the direct effect of empathy on disclosure also remained significant, these results likewise indicate partial mediation.

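Taken together, these findings underscore the dual mediating roles of Trust in Generative AI and Privacy Concerns. For both antecedents, the indirect effect through Privacy Concerns (.174 and .181) was considerably larger than that through Trust in Generative AI (.020 and .048), suggesting that lowering privacy concerns is the main channel through which perceptions of autonomy and empathy relate to disclosure intentions in generative AI environments.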
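The specific indirect effects in Table 4 follow the product-of-coefficients logic: each equals the path from the antecedent to the mediator multiplied by the path from the mediator to disclosure. The Python sketch below reproduces the Table 4 point estimates from the Table 3 coefficients (for example, −.322 × −.541 ≈ .174, which is why the indirect effect through Privacy Concerns is positive). The total effects and the share of each total effect carried by the mediators are derived here for illustration only and are not reported in the study; they assume the model contains no paths other than those listed in Table 3.

```python
# Path coefficients (beta) as reported in Table 3
paths = {
    ("PAU", "TGA"): 0.215, ("PAU", "PC"): -0.322, ("PAU", "BID"): 0.160,
    ("PE", "TGA"): 0.504, ("PE", "PC"): -0.335, ("PE", "BID"): 0.143,
    ("TGA", "BID"): 0.095, ("PC", "BID"): -0.541,
}


def indirect(antecedent, mediator, outcome="BID"):
    """Specific indirect effect = (antecedent -> mediator) * (mediator -> outcome)."""
    return paths[(antecedent, mediator)] * paths[(mediator, outcome)]


for x in ("PAU", "PE"):
    specific = {m: indirect(x, m) for m in ("TGA", "PC")}
    total_indirect = sum(specific.values())
    # Total effect = direct effect + all specific indirect effects (derived, not reported)
    total = paths[(x, "BID")] + total_indirect
    share_mediated = total_indirect / total
    print(x, {m: round(v, 3) for m, v in specific.items()},
          "total:", round(total, 3), "share mediated:", round(share_mediated, 2))
```

Because the inputs are rounded coefficients, derived totals may differ from unrounded software output in the third decimal.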

Table 4. Specific Indirect Effects.

| Indirect path | β | SD | t-value | p-value | 2.5% | 97.5% | Result |
|---|---|---|---|---|---|---|---|
| Perceived Autonomy → Trust in Generative AI → Behavioral Intent to Disclose | .020 | 0.008 | 2.589 | .010 | .006 | .037 | Partial mediation |
| Perceived Autonomy → Privacy Concerns → Behavioral Intent to Disclose | .174 | 0.019 | 9.200 | < .001 | .138 | .213 | Partial mediation |
| Perceived Empathy → Trust in Generative AI → Behavioral Intent to Disclose | .048 | 0.017 | 2.851 | .004 | .016 | .082 | Partial mediation |
| Perceived Empathy → Privacy Concerns → Behavioral Intent to Disclose | .181 | 0.018 | 10.103 | < .001 | .147 | .217 | Partial mediation |

Note. The 2.5% and 97.5% columns are the lower and upper bounds of the 95% confidence interval of each indirect effect.
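The 2.5% and 97.5% columns in Table 4 give the bounds of a 95% confidence interval, and an indirect effect is usually judged significant when that interval excludes zero. Such intervals are commonly obtained by percentile bootstrapping. The Python sketch below illustrates that technique for a single-mediator model estimated with ordinary least squares on simulated data; it is not the study's estimator, and the simulated effect sizes (a = 0.5, b = 0.4, direct path 0.2), the sample size, the number of resamples, the seeds, and the function names are all hypothetical.

```python
import random
import statistics


def mediation_slopes(x, m, y):
    """OLS estimates: a = slope of m on x; b = slope of y on m, controlling for x."""
    mx, mm, my = statistics.fmean(x), statistics.fmean(m), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx
    # Solve the two-predictor normal equations (Cramer's rule) for the coefficient on m
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a, b


def simulate(n, a=0.5, b=0.4, direct=0.2, seed=1):
    """Hypothetical data: x -> m -> y plus a direct x -> y path, standard normal errors."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    m = [a * xi + rng.gauss(0, 1) for xi in x]
    y = [direct * xi + b * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
    return x, m, y


def percentile_ci(x, m, y, n_boot=1000, seed=2):
    """95% percentile bootstrap interval for the indirect effect a * b."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        a, b = mediation_slopes([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx])
        estimates.append(a * b)
    cuts = statistics.quantiles(estimates, n=40)  # cut points at 2.5%, 5%, ..., 97.5%
    return cuts[0], cuts[-1]


x, m, y = simulate(200)
a_hat, b_hat = mediation_slopes(x, m, y)
lower, upper = percentile_ci(x, m, y)
print(f"indirect = {a_hat * b_hat:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

If the resulting interval excludes zero, the indirect effect would be judged significant, which is the same decision rule that underlies the intervals reported for the four paths in Table 4.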

Discussion

Discussion of Research Findings

This study applies the Extended Technology Acceptance Model (ETAM) to examine how user perceptions of generative AI shape disclosure behaviors. Rather than claiming to fundamentally extend ETAM, our contribution lies in contextualizing its framework within generative AI by incorporating Perceived Autonomy and Perceived Empathy as external variables that influence trust, privacy concerns, and disclosure intentions. This approach is consistent with prior applications of ETAM, where researchers have integrated additional external factors, such as subjective norms, enjoyment, and compatibility (Ursavaş et al., 2025), economic benefits and technological stability in autonomous driving (Mu et al., 2024), and trust and perceived risk in healthcare AI systems (Hou et al., 2024), to account for contextual determinants of technology use. In a similar manner, our study adapts ETAM to the disclosure context of generative AI by showing how user judgments about autonomy and empathy affect trust and privacy, thereby influencing behavioral intent.

The findings demonstrate that Perceived Autonomy positively influences Behavioral Intent to Disclose. Users who view generative AI as capable of functioning independently are more willing to share personal information (Sankaran & Markopoulos, 2021). This aligns with ETAM’s focus on external judgments shaping trust and risk assessments, and with prior studies linking autonomy with user engagement (Shukla et al., 2024). Importantly, Perceived Autonomy was also negatively associated with Privacy Concerns, suggesting that perceptions of competence and independence reduce anxieties about misuse of data. This finding resonates with the broader ETAM logic that perceived ability strengthens trustworthiness and mitigates perceived risks (Das et al., 2023).

Similarly, Perceived Empathy showed a positive relationship with Behavioral Intent to Disclose. Users who perceive generative AI as emotionally responsive may feel safer and more comfortable disclosing personal information, consistent with arguments that emotional engagement encourages adoption (Gong & Su, 2025). The negative effect of Perceived Empathy on Privacy Concerns indicates that relational qualities can reduce anxieties, as users may interpret empathetic responses as signals of benevolence and ethical handling of data (Safdari, 2025). These results echo prior ETAM applications in which benevolence-related factors such as compatibility and responsiveness enhanced trust and reduced resistance (Mustofa et al., 2025). At the same time, they must be interpreted in light of persistent skepticism regarding AI’s ability to authentically comprehend emotions: although users may perceive responses as empathetic, such perceptions are shaped by simulation rather than genuine understanding, which could explain variations in trust across contexts (Berberich et al., 2020).

Trust in Generative AI emerged as a significant mediator, consistent with the Extended Technology Acceptance Model’s emphasis on trust as a determinant of behavioral outcomes (Robinson-Tay & Peng, 2025). It carried part of the effects of both Perceived Autonomy and Perceived Empathy on Behavioral Intent to Disclose, indicating that both competence- and benevolence-based perceptions enhance user confidence in generative AI (Seok et al., 2025), although these trust-based indirect effects were small (β = .020 and .048). Privacy Concerns also mediated these relationships, and more strongly (β = .174 and .181), underscoring that risk perceptions remain central in disclosure contexts (Y. Liu et al., 2025). Because the direct effects of autonomy and empathy on disclosure were also significant, the mediation was partial: trust and privacy concerns complement, rather than replace, the direct influence of these perceptions.

The cultural and contextual dimensions of our findings further underscore ETAM’s adaptability. The sample was drawn from China, where rapid adoption of generative AI platforms coexists with distinct privacy norms (Cao & Peng, 2025). In collectivist cultures, autonomy may be interpreted less as a threat and more as an assurance of system reliability (Barnes et al., 2024). This may help explain why Perceived Autonomy appeared to reduce Privacy Concerns more strongly here than in studies of Western users, who often retain higher levels of privacy anxiety despite autonomous functioning (D. Kim et al., 2024); because this study did not compare cultural groups directly, this explanation remains tentative. Similarly, the emphasis on relational harmony in Chinese culture may heighten the influence of Perceived Empathy in fostering trust and disclosure (Berberich et al., 2020). These observations suggest that future ETAM research should explicitly account for cultural differences in how users value autonomy, empathy, and privacy.

In sum, the results support ETAM’s utility as a flexible framework for analyzing disclosure in generative AI environments. By integrating Perceived Autonomy and Perceived Empathy as external factors, this study shows that disclosure intentions are shaped not only by functional performance but also by cognitive and emotional perceptions of competence and benevolence, operating through trust and perceived risk. These insights enrich the application of ETAM and point to the importance of cultural context in shaping user behavior toward emerging AI technologies.

Theoretical and Practical Implications

From a theoretical perspective, this study contributes to research on the Extended Technology Acceptance Model (ETAM) by highlighting the interplay between Perceived Autonomy, Perceived Empathy, Trust, and Privacy Concerns in shaping Behavioral Intent to Disclose. By incorporating both cognitive (autonomy) and emotional (empathy) dimensions, the findings clarify how trust and privacy concerns mediate the effects of system attributes on user behavior. This broadens ETAM’s applicability to generative AI interactions and offers insight into the psychological mechanisms that influence technology adoption and user engagement.