I’ll be there for you? The bystander intervention model and cyber aggression

Vol. 18, No. 2 (2024)

The Bystander Intervention Model (BIM) has been validated for face-to-face emergencies and posits that an observer’s decision to intervene hinges on five sequential steps, while barriers block progress between steps. To our knowledge, the current study is the first to apply the BIM in its entirety to cyber aggression and to explore how individual factors, such as experiences with depression, social anxiety, and cyber aggression (either as the target or the aggressor), influence bystanders. In our pre-registered study, emerging adults (N = 1,093) viewed pilot-tested cyber aggressive content and reported how they would engage with each of the steps and barriers of the BIM if they were observing this content as a bystander in real life. Regarding the actions they would take, most participants chose non-intervention (36.3%) or private direct intervention (39.4%). Path analysis suggested that, overall, the BIM can explain bystanders’ responses to cyber aggression. Nonetheless, there were some discrepancies with prior work on face-to-face emergencies; specifically, cyber bystander intervention does not appear to be as linear. In addition, whereas face-to-face applications of the BIM prescribe that each barrier affects only a single specific step, here we found that some barriers were negatively linked to multiple steps. These findings elucidate ways in which online aggression may be similar to, as well as different from, aggression that occurs face-to-face. Implications of these findings for interventions are discussed.

cyber aggression; Bystander Intervention Model; helping; cyberbullying

Vasileia Karasavva

Department of Psychology, University of British Columbia, Vancouver, BC, Canada

Vasileia Karasavva, MA, is a Clinical Psychology Graduate Student at the University of British Columbia. Her research focuses on online interactions and online antisocial behaviors, including technology-facilitated sexual violence. She is interested in cyberpsychology research that can then be applied in policy, treatment, and educational material with the ultimate goal of creating the blueprint for healthier scripts for online communications.

Amori Mikami

Department of Psychology, University of British Columbia, Vancouver, BC, Canada

Amori Yee Mikami, PhD, is a Professor in the Department of Psychology at the University of British Columbia and a registered clinical psychologist in British Columbia, Canada. She is the principal investigator of the Peer Relationships in Childhood lab, and her research program is focused on understanding the ways children, adolescents, and emerging adults relate to one another, with a special interest in youth with attention-deficit/hyperactivity disorder (ADHD).

Allison, K. R., & Bussey, K. (2016). Cyber-bystanding in context: A review of the literature on witnesses' responses to cyberbullying. Children and Youth Services Review, 65, 183–194. https://doi.org/10.1016/j.childyouth.2016.03.026

Álvarez-García, D., Barreiro-Collazo, A., Núñez Pérez, J. C., & Dobarro, A. (2016). Validity and reliability of the Cyber-aggression Questionnaire for Adolescents (CYBA). The European Journal of Psychology Applied to Legal Context, 8(2), 69–77. https://doi.org/10.1016/j.ejpal.2016.02.003

Anderson, J., Bresnahan, M., & Musatics, C. (2014). Combating weight-based cyberbullying on Facebook with the dissenter effect. Cyberpsychology, Behavior, and Social Networking, 17(5), 281–286. https://doi.org/10.1089/cyber.2013.0370

Anker, A. E., & Feeley, T. H. (2011). Are nonparticipants in prosocial behavior merely innocent bystanders? Health Communication, 26(1), 13–24. https://doi.org/10.1080/10410236.2011.527618

Bastiaensens, S., Vandebosch, H., Poels, K., Van Cleemput, K., DeSmet, A., & De Bourdeaudhuij, I. (2014). Cyberbullying on social network sites. An experimental study into bystanders’ behavioural intentions to help the victim or reinforce the bully. Computers in Human Behavior, 31, 259–271. https://doi.org/10.1016/j.chb.2013.10.036

Bastiaensens, S., Vandebosch, H., Poels, K., Van Cleemput, K., DeSmet, A., & De Bourdeaudhuij, I. (2015). ‘Can I afford to help?’ How affordances of communication modalities guide bystanders' helping intentions towards harassment on social network sites. Behaviour & Information Technology, 34(4), 425–435. https://doi.org/10.1080/0144929X.2014.983979

Blair, C. A., Foster Thompson, L., & Wuensch, K. L. (2005). Electronic helping behavior: The virtual presence of others makes a difference. Basic and Applied Social Psychology, 27(2), 171–178. https://doi.org/10.1207/s15324834basp2702_8

Brody, N. (2021). Bystander intervention in cyberbullying and online harassment: The role of expectancy violations. International Journal of Communication, 15, 647–667. https://ijoc.org/index.php/ijoc/article/view/14169

Burn, S. M. (2009). A situational model of sexual assault prevention through bystander intervention. Sex Roles, 60(11), 779–792. https://doi.org/10.1007/s11199-008-9581-5

Chekroun, P., & Brauer, M. (2002). The bystander effect and social control behavior: The effect of the presence of others on people’s reactions to norm violations. European Journal of Social Psychology, 32(6), 853–867. https://doi.org/10.1002/ejsp.126

Chen, J., Short, M., & Kemps, E. (2020). Interpretation bias in social anxiety: A systematic review and meta-analysis. Journal of Affective Disorders, 276, 1119–1130. https://doi.org/10.1016/j.jad.2020.07.121

Clark, M., & Bussey, K. (2020). The role of self-efficacy in defending cyberbullying victims. Computers in Human Behavior, 109, Article 106340. https://doi.org/10.1016/j.chb.2020.106340

DeSmet, A., Bastiaensens, S., Van Cleemput, K., Poels, K., Vandebosch, H., & De Bourdeaudhuij, I. (2012). Mobilizing bystanders of cyberbullying: An exploratory study into behavioural determinants of defending the victim. Studies in Health Technology and Informatics, 10, 58–63. https://pubmed.ncbi.nlm.nih.gov/22954829/

DiFranzo, D., Taylor, S. H., Kazerooni, F., Wherry, O. D., & Bazarova, N. N. (2018, April). Upstanding by design: Bystander intervention in cyberbullying. In Proceedings of the 2018 CHI conference on human factors in computing systems (pp. 1–12). ACM. https://doi.org/10.1145/3173574.3173785

Dillon, K. P. (2015). A proposed cyberbystander intervention model for the 21st century. Association of Internet Researchers Selected Papers of Internet Research, 5. https://spir.aoir.org/ojs/index.php/spir/article/view/8394

Dillon, K. P., & Bushman, B. J. (2015). Unresponsive or un-noticed?: Cyberbystander intervention in an experimental cyberbullying context. Computers in Human Behavior, 45, 144–150. https://doi.org/10.1016/j.chb.2014.12.009

Doumas, D. M., & Midgett, A. (2020). Witnessing cyberbullying and internalizing symptoms among middle school students. European Journal of Investigation in Health, Psychology and Education, 10(4), 957–966. https://doi.org/10.3390/ejihpe10040068

Estévez, E., Cañas, E., Estévez, J. F., & Povedano, A. (2020). Continuity and overlap of roles in victims and aggressors of bullying and cyberbullying in adolescence: A systematic review. International Journal of Environmental Research and Public Health, 17(20), Article 7452. https://doi.org/10.3390/ijerph17207452

Ferreira, P. C., Simão, A. M. V., Paiva, A., & Ferreira, A. (2020). Responsive bystander behaviour in cyberbullying: A path through self-efficacy. Behaviour & Information Technology, 39(5), 511–524. https://doi.org/10.1080/0144929X.2019.1602671

Fischer, P., Greitemeyer, T., Pollozek, F., & Frey, D. (2006). The unresponsive bystander: Are bystanders more responsive in dangerous emergencies? European Journal of Social Psychology, 36(2), 267–278. https://doi.org/10.1002/ejsp.297

Fischer, P., Krueger, J. I., Greitemeyer, T., Vogrincic, C., Kastenmüller, A., Frey, D., Heene, M., Wicher, M., & Kainbacher, M. (2011). The bystander-effect: A meta-analytic review on bystander intervention in dangerous and non-dangerous emergencies. Psychological Bulletin, 137(4), 517–537. https://doi.org/10.1037/a0023304

Gahagan, K., Vaterlaus, J. M., & Frost, L. R. (2016). College student cyberbullying on social networking sites: Conceptualization, prevalence, and perceived bystander responsibility. Computers in Human Behavior, 55, 1097–1105. https://doi.org/10.1016/j.chb.2015.11.019

High, A. C., & Young, R. (2018). Supportive communication from bystanders of cyberbullying: Indirect effects and interactions between source and message characteristics. Journal of Applied Communication Research, 46(1), 28–51. https://doi.org/10.1080/00909882.2017.1412085

Internet World Stats. (2023). Internet usage and population statistics. https://www.internetworldstats.com/stats.htm

Johnson, K. L. (2016). Oh, what a tangled web we weave: Cyberbullying, anxiety, depression, and loneliness [Master’s thesis, University of Mississippi]. eGrove. https://egrove.olemiss.edu/cgi/viewcontent.cgi?article=1803&context=etd

Kim, Y. (2021). Understanding the bystander audience in online incivility encounters: Conceptual issues and future research questions. In Proceedings of the 54th Hawaii International Conference on System Sciences (pp. 2934–2943). ScholarSpace. https://doi.org/10.24251/HICSS.2021.357

Koehler, C., & Weber, M. (2018). “Do I really need to help?!” Perceived severity of cyberbullying, victim blaming, and bystanders’ willingness to help the victim. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 12(4), Article 4. https://doi.org/10.5817/CP2018-4-4

Latané, B., & Darley, J. M. (1970). The unresponsive bystander: Why doesn’t he help? Appleton-Century-Crofts. https://lib.ugent.be/catalog/rug01:000065386

Liebowitz, M. R. (1987). Social phobia. Modern Problems of Pharmacopsychiatry, 22, 141–173. https://doi.org/10.1159/000414022

Macháčková, H., Dědková, L., Ševčíková, A., & Černá, A. (2013). Bystanders’ support of cyberbullied schoolmates. Journal of Community & Applied Social Psychology, 23(1), 25–36. https://doi.org/10.1002/casp.2135

Menolascino, N. (2016). Empathy, perceived popularity and social anxiety: Predicting bystander intervention among middle school students [Unpublished master’s thesis]. University of Massachusetts Boston.

Midgett, A., & Doumas, D. M. (2019). Witnessing bullying at school: The association between being a bystander and anxiety and depressive symptoms. School Mental Health, 11, 454–463. https://doi.org/10.1007/s12310-019-09312-6

Patchin, J. W., & Hinduja, S. (2015). Measuring cyberbullying: Implications for research. Aggression and Violent Behavior, 23, 69–74. https://doi.org/10.1016/j.avb.2015.05.013

Polanin, J. R., Espelage, D. L., & Pigott, T. D. (2012). A meta-analysis of school-based bullying prevention programs’ effects on bystander intervention behavior. School Psychology Review, 41(1), 47–65. https://doi.org/10.1080/02796015.2012.12087375

Radloff, L. S. (1977). The CES-D Scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1(3), 385–401. https://doi.org/10.1177/014662167700100306

Rudnicki, K., Vandebosch, H., Voué, P., & Poels, K. (2022). Systematic review of determinants and consequences of bystander interventions in online hate and cyberbullying among adults. Behaviour & Information Technology, 42(5), 527–544. https://doi.org/10.1080/0144929X.2022.2027013

Runions, K. C., & Bak, M. (2015). Online moral disengagement, cyberbullying, and cyber-aggression. Cyberpsychology, Behavior, and Social Networking, 18(7), 400–405. https://doi.org/10.1089/cyber.2014.0670

Schlosser, A. E. (2020). Self-disclosure versus self-presentation on social media. Current Opinion in Psychology, 31, 1–6. https://doi.org/10.1016/j.copsyc.2019.06.025

Shultz, E., Heilman, R., & Hart, K. J. (2014). Cyber-bullying: An exploration of bystander behavior and motivation. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 8(4), Article 3. https://doi.org/10.5817/CP2014-4-3

Steer, O. L., Betts, L. R., Baguley, T., & Binder, J. F. (2020). “I feel like everyone does it”- adolescents’ perceptions and awareness of the association between humour, banter, and cyberbullying. Computers in Human Behavior, 108, Article 106297. https://doi.org/10.1016/j.chb.2020.106297

Thompson, J. D., & Cover, R. (2021). Digital hostility, internet pile-ons and shaming: A case study. Convergence: The International Journal of Research Into New Media Technologies, 28(6), 1770–1782. https://doi.org/10.1177/13548565211030461

Van Cleemput, K., Vandebosch, H., & Pabian, S. (2014). Personal characteristics and contextual factors that determine “helping,” “joining in,” and “doing nothing” when witnessing cyberbullying. Aggressive Behavior, 40(5), 383–396. https://doi.org/10.1002/ab.21534

Wang, S. (2021). Standing up or standing by: Bystander intervention in cyberbullying on social media. New Media & Society, 23(6), 1379–1397. https://doi.org/10.1177/1461444820902541

Watts, L. K., Wagner, J., Velasquez, B., & Behrens, P. I. (2017). Cyberbullying in higher education: A literature review. Computers in Human Behavior, 69, 268–274. https://doi.org/10.1016/j.chb.2016.12.038

Webber, M. A., & Ovedovitz, A. C. (2018). Cyberbullying among college students: A look at its prevalence at a U.S. catholic university. International Journal of Educational Methodology, 4(2), 101–107. https://doi.org/10.12973/ijem.4.2.101

Wong, R. Y. M., Cheung, C. M. K., Xiao, B., & Thatcher, J. B. (2021). Standing up or standing by: Understanding bystanders’ proactive reporting responses to social media harassment. Information Systems Research, 32(2), 561–581. https://doi.org/10.1287/isre.2020.0983

Zhang, W., & Zhang, L. (2012). Explicating multitasking with computers: Gratifications and situations. Computers in Human Behavior, 28(5), 1883–1891. https://doi.org/10.1016/j.chb.2012.05.006

Authors’ Contribution

Vasileia Karasavva: conceptualization, data curation, formal analysis, writing—original draft, writing—review and editing. Amori Yee Mikami: conceptualization, writing—review and editing, supervision.

Editorial Record

First submission received:

August 15, 2023

Revision received:

November 30, 2023

February 3, 2024

Accepted for publication:

March 6, 2024

Editor in charge:

Fabio Sticca

Introduction

With around 90% of emerging adults in North America on the internet regularly (Internet World Stats, 2023), it is no exaggeration to say that when you are online, people are watching. The publicness of online interactions (e.g., an audience of hundreds or thousands) and their permanence (e.g., interactions stay on a page indefinitely) could accentuate their impact relative to interactions occurring face-to-face (Kim, 2021). This may help explain the deleterious effect on wellbeing of being the target of cyber aggression (Johnson, 2016). However, the same publicness of cyber aggression may offer a resource for intervention: bystander action. Around 1 in 2 emerging adults have been onlookers to cyber aggression (Gahagan et al., 2016). Bystanders could alter the dynamics of the situation through their choice of action or inaction (Wang, 2021). This study aimed to understand the process of bystander intervention in cyber aggression and the barriers to intervention. Grounded in the five-step Bystander Intervention Model (BIM) validated in instances of face-to-face aggression, we tested: (1) the applicability of the BIM to cyber aggression and the ways that individual factors, like experiences with internalizing symptoms or cyber aggression, influence action, (2) which steps of the BIM are affected by the most barriers and which barriers affect the most steps, and (3) the actions that cyber bystanders choose. This work advances scientific knowledge about the similarities and differences between online and face-to-face contexts, and about the role of bystanders in cyber aggression. It is also of interest beyond the academic community, as it can inform anti-cyberbullying campaigns.

Cyber Aggression and Bystanders

Cyber aggression is a generic term that encapsulates intentionally harmful, hurtful, or offending behaviors carried out by electronic means, such as sending rude, threatening, or offensive messages (Álvarez-García et al., 2016; Kim, 2021). Relative to face-to-face aggression, cyber aggression can occur at any time and can spread almost instantly (Rudnicki et al., 2022). Anonymity and distance may encourage cyber aggressors (Watts et al., 2017) and shield them from witnessing the consequences of their actions, hindering empathy and promoting repeated aggression (Álvarez-García et al., 2016). The reported prevalence of cyber aggression among emerging adults ranges from as low as 7% to as high as 62% (Webber & Ovedovitz, 2018).

Given that cyber aggression often takes place in public spaces, like the comments of a social media profile, galvanizing observing bystanders to intervene may be possible. Cyber bystanders can support the target, side with the aggressor, or do nothing (Dillon & Bushman, 2015; Wang, 2021). Bystander intervention to support the target is associated with decreased future victimization and with improved outcomes for targets (Midgett & Doumas, 2019). Bystanders who side with the aggressor can contribute to pile-ons attacking the target, which increase targets’ distress and can tragically result in suicide (Thompson & Cover, 2021). However, evidence suggests that most bystanders choose to do nothing (Dillon & Bushman, 2015).

The Bystander Intervention Model (BIM)

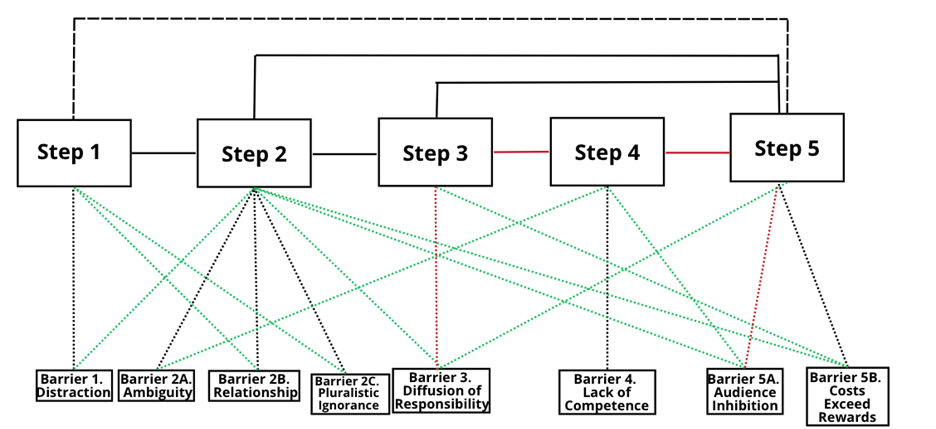

Figure 1. The Bystander Intervention Model in Face-to-Face Aggression.

Note. Dotted lines denote a significant negative relationship and solid lines denote a significant positive relationship between variables. Step 1 = Noticing the Event; Step 2 = Interpreting Event as Problematic; Step 3 = Taking Personal Responsibility; Step 4 = Knowing What to Do; Step 5 = Taking Action; Barrier 1 = Distraction; Barrier 2A = Ambiguity; Barrier 2B = Relationship; Barrier 2C = Pluralistic Ignorance; Barrier 3 = Diffusion of Responsibility; Barrier 4 = Lack of Competence; Barrier 5A = Audience Inhibition; Barrier 5B = Costs Exceed Rewards.

The BIM by Latané and Darley (1970; Figure 1) postulates that bystander intervention hinges on five sequential steps; failure to complete any step is thought to break the chain of action, resulting in bystander non-intervention. As described below, the BIM has been applied across both adolescent and adult samples to a plethora of offline situations, including face-to-face bullying (Polanin et al., 2012). It has been validated regardless of the characteristics of the people involved or the seriousness of the situation (Dillon & Bushman, 2015), which highlights its utility in diverse settings. Some studies have investigated bystander intervention in cyber aggression, but they have focused on a few steps and/or barriers rather than the entire model sequence (Kim, 2021).
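To make the strictly sequential logic of the face-to-face BIM concrete, the sketch below encodes the five steps and their barriers (labels transcribed from the Figure 1 note) and reports the first step at which the chain breaks. It is a hypothetical illustration of the model's logic, not the authors' analysis code; the names `BIM`, `first_failed_step`, and `outcome` are our own. It also bakes in the face-to-face assumption that each barrier attaches to a single step.

```python
from typing import Optional

# Step number -> (step name, barriers attached to that step), per the Figure 1 note.
BIM = {
    1: ("Noticing the Event", ["Distraction"]),
    2: ("Interpreting Event as Problematic",
        ["Ambiguity", "Relationship", "Pluralistic Ignorance"]),
    3: ("Taking Personal Responsibility", ["Diffusion of Responsibility"]),
    4: ("Knowing What to Do", ["Lack of Competence"]),
    5: ("Taking Action", ["Audience Inhibition", "Costs Exceed Rewards"]),
}


def first_failed_step(completed: dict[int, bool]) -> Optional[int]:
    """Return the first step (1-5) the bystander does not complete, or None.

    Under a strictly sequential reading, any failure ends the chain, so later
    steps are never reached. Missing entries count as not completed.
    """
    for step in sorted(BIM):
        if not completed.get(step, False):
            return step
    return None


def outcome(completed: dict[int, bool]) -> str:
    """Describe the predicted outcome and the barriers that may explain a break."""
    failed = first_failed_step(completed)
    if failed is None:
        return "intervention"
    name, barriers = BIM[failed]
    return (f"non-intervention at Step {failed} ({name}); "
            f"possible barriers: {', '.join(barriers)}")


print(outcome({1: True, 2: True, 3: False, 4: True, 5: True}))
```

In this reading, a bystander who would know what to do (Step 4) still does not act if they never take personal responsibility (Step 3), which is exactly the linearity the present study puts to the test online.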

Step 1—Noticing the Event

According to the BIM, the first step in the chain of providing help is to notice that something is happening. Previous work has shown that noticing cyber aggression is a necessary but not sufficient condition for intervention (Dillon & Bushman, 2015; Ferreira et al., 2020). In a virtual environment where participants witnessed episodes of cyberbullying in real time, bystanders who noticed the cyberbullying were more than four times as likely to intervene (Dillon & Bushman, 2015). Yet, although 68% of participants noticed the cyberbullying, only 10% chose to directly intervene (Dillon & Bushman, 2015).

To notice cyber aggression, bystanders must overcome distraction (Barrier 1). In the attention economy, cyber aggression competes with online and offline cues for a cyber bystander’s focus. People also multitask while online, which may increase the amount of “noise” they must parse (Zhang & Zhang, 2012). Prior work has shown that online environmental distractions, like music, pop-up ads, and time restrictions, were not related to bystander intervention in cyberbullying (Dillon & Bushman, 2015). However, purposeful distractions (i.e., distractions specific to the reason the user was online, or feelings of state distraction) have yet to be tested.

Step 2—Interpreting the Event as Problematic

After noticing an incident of cyber aggression, bystanders must interpret it as a problem that requires intervention. Bystanders are more likely to both notice and respond to emergencies that are vivid, involve readily identified targets, and are clearly dangerous (Fischer et al., 2006). Research shows that cyberbullying incidents that are perceived as more severe induce higher intentions of intervening among bystanders (Bastiaensens et al., 2014; Koehler & Weber, 2018).

The lack of non-verbal physical and social cues in cyber aggression (Steer et al., 2020), as well as differing norms across platforms about what is socially acceptable (Brody, 2021), can add ambiguity (Barrier 2A) about whether an incident is problematic. Whether an interaction is perceived as aggressive or as playful banter also depends on the relationship between those involved, but these relationships, especially between people online, are not always clear. It is possible that cyber bystanders who assume a closer relationship between the aggressor and the target (Barrier 2B) may interpret an aggressive comment as less serious (Steer et al., 2020). In a sample of undergraduate students, bystanders who felt they lacked contextual information about the cyberbullying incident, including information about the relationship between those involved, were more likely to remain passive (Shultz et al., 2014).

Pluralistic ignorance (Barrier 2C) is the tendency to rely on the overt reactions of others when in an ambiguous situation (Fischer et al., 2011). Seeing other onlookers not taking action may lead bystanders to conclude that the situation is not critical enough for intervention (Fischer et al., 2011). Relative to face-to-face emergencies, the number of onlookers witnessing an incident of cyber aggression is usually less obvious (Blair et al., 2005). Given that online posts have seemingly infinite reach, cyber bystanders may assume that numerous others have noticed the incident too. As a consequence, cyber bystanders may view a lack of pushback to an aggressive comment as an endorsement, by the many others who have presumably seen it, that the comment is not serious (Anderson et al., 2014). Conversely, research finds that cyber bystanders are more likely to act when other bystanders do so (Bastiaensens et al., 2014, 2015).

Step 3—Taking Personal Responsibility

The BIM posits that bystanders must decide that it is their personal responsibility to help, as opposed to thinking that someone else should help or is helping. In the cyberbullying literature, research shows that cyber bystanders who do not feel personal responsibility are more likely to remain passive (Van Cleemput et al., 2014). Diffusion of responsibility (Barrier 3), or the tendency of bystanders not to intervene when others are present, is a cornerstone of the BIM. In both field and laboratory studies, bystanders are more likely to act when they are the only witnesses of an emergency (Fischer et al., 2011). As discussed under Barrier 2C, bystanders may presume that a large number of people are observing a public online post, which could amplify diffusion of responsibility. Research to date supports the idea that diffusion of responsibility applies to cyberbullying (Brody, 2021). In contrast, direct requests for help from a cyberbullying target were shown to increase supportive intervention and to counter diffusion of responsibility (Macháčková et al., 2013).

Step 4—Knowing What to Do

Bystanders must also know what to do to meaningfully offer assistance. Previous work found that bystanders’ confidence in their ability to handle an emergency was linked to greater willingness to intervene in both face-to-face (Anker & Feeley, 2011) and online emergencies (Clark & Bussey, 2020; Ferreira et al., 2020). Generally, bystanders are more likely to intervene when they have had practice, training, or previous experience dealing with an emergency (Dillon, 2015). Results from cyberbullying research support this: cyber bystanders who expect their intervention to have a positive outcome, presumably because they feel confident in their abilities, are more likely to act (Allison & Bussey, 2016).

Lack of competence (Barrier 4) could impede Step 4. Specific to the online context, cyber bystanders need the ability to navigate confrontation (social competence), understand the norms of social media to correctly read the situation (social media competence), and interact with the interface to block someone, report them to moderators, or send a private message (mechanical competence). Research on cyber bystanders supports that self-efficacy is important in intervention, but it tends to focus on the confidence to defend the target or confront the aggressor without examining confidence in social media or mechanical skills (Allison & Bussey, 2016).

Step 5—Taking Action

Once they have completed all previous steps in the chain of intervention, bystanders decide on the action they will take, and carry it out. Bystanders who choose to help the target of the aggression can do so directly (e.g., by taking the side of the target), or indirectly (e.g., by seeking help from an authority, like a moderator). Direct intervention is thought to require more time, effort, and resources, and to involve more risks than indirect intervention (Dillon & Bushman, 2015; Wang, 2021). There are additional options unique to the online context. Cyber bystanders who choose direct intervention can do so publicly (e.g., by posting a response to the aggressive comment) or privately (e.g., by sending a supportive private message to the target). Public, direct intervention may motivate other onlookers to also take action (Anderson et al., 2014). At the same time, it may be risky, as it leaves the bystander vulnerable to scrutiny or to aggression. That being said, private interventions are also valuable. In one study, targets of cyber aggression reported that the most helpful response was emotional support from bystanders (High & Young, 2018), which could possibly be provided via a private channel or a public post.
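The Step 5 options described above form a small two-level taxonomy: act or not, then direct or indirect, with the public/private split applying only to direct intervention. The sketch below encodes that structure; the category labels and the `classify` helper are our own hypothetical names, siding with the aggressor is deliberately left out because this paragraph covers only helping the target, and the study's actual response options may be worded differently.

```python
from enum import Enum


class Action(Enum):
    """Helping options at Step 5, as described in the text (labels are illustrative)."""
    NON_INTERVENTION = "do nothing"
    INDIRECT = "seek help from an authority, e.g., a moderator"
    PUBLIC_DIRECT = "post a public reply to the aggressive comment"
    PRIVATE_DIRECT = "send the target a supportive private message"


def classify(acts: bool, direct: bool = False, public: bool = False) -> Action:
    """Map a bystander's choice onto the Step 5 categories.

    The public/private distinction is only applied to direct intervention;
    for indirect or no action, the `public` flag is ignored.
    """
    if not acts:
        return Action.NON_INTERVENTION
    if not direct:
        return Action.INDIRECT
    return Action.PUBLIC_DIRECT if public else Action.PRIVATE_DIRECT


print(classify(True, direct=True, public=False).value)
```

Keeping public and private direct intervention as separate categories matters here because, as the paragraph notes, they likely differ in risk to the bystander even though both help the target.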

At this step, bystanders must overcome the barrier of audience inhibition (Barrier 5A), which is the fear of being judged or embarrassed (Chekroun & Brauer, 2002). Concerns about impression management and self-presentation may be heightened online, and shape people’s actions (Schlosser, 2020)—including discouraging bystanders from intervening in cyber aggression. Fear of negative social consequences has been linked with greater passivity in cyber bystander intervention (DeSmet et al., 2012). Another barrier is an unfavorable costs-benefits analysis (Barrier 5B) where a bystander perceives the costs of intervening to exceed the rewards. For example, a bystander may be reluctant to intervene if they see the cost of potential retaliation or embarrassment outweighing the reward of defending the target (Wong et al., 2021).

Individual Factors

Intervention in cyber aggression reflects a complex interplay between individual factors and the situation taking place. Here, we highlight internalizing symptoms and past experiences with cyber aggression, as little prior research has examined them in the context of cyber bystander intervention. People who experience internalizing symptoms like social anxiety may be less likely to take personal responsibility (Step 3) or know what to do (Step 4; Menolascino, 2016). At the same time, social anxiety could predispose people to view ambiguous social situations through a negative lens (Chen et al., 2020), which would make it unlikely to hinder noticing the event (Step 1) or interpreting it as problematic (Step 2). Depressive symptoms such as amotivation and helplessness may make bystanders unsure about what to do, leading to non-intervention (Doumas & Midgett, 2020).

Previous experience with cyber aggression, either as the target or the aggressor, could also affect the way bystanders react to cyber aggression. Cyber aggressors often blame the target, minimize the consequences of their actions, or use euphemistic language to make their harmful acts seem benign (Runions & Bak, 2015). This may reduce the likelihood that a cyber aggressor will engage in Step 2 or Step 3 of the BIM when viewing cyber aggression as a bystander. The relationship between prior experience as a target and bystander intervention is uncertain. Having been a target of cyber aggression might make bystanders more empathetic towards the target, prompting them to act (Van Cleemput et al., 2014). Alternatively, former targets may fear that drawing attention to themselves will lead to their being targeted again, which may decrease their willingness to intervene (Rudnicki et al., 2022). Our analyses of how depression, social anxiety, and prior experiences with cyber aggression relate to the BIM are exploratory.

Research Questions and Hypotheses

Cyber bystanders have a unique ability to intervene in cyber aggression and alleviate its impact, yet few do so. This underscores the importance of studying the process of bystander intervention in cyber aggression and the barriers to intervention. We are not aware of any work applying the BIM in its entirety in the context of cyber aggression. It is critical to avoid the pitfall of assuming, without evaluation, that well-established conceptual models of face-to-face experiences pertain to the cybersphere. Most previous research has examined intervention in binary form (i.e., doing nothing versus publicly intervening). This ignores other options cyber bystanders have, such as privately reaching out to the target or alerting an authority.

RQ1 concerned actions taken. We hypothesized that most participants would choose non-intervention, while those who did choose to act would largely pick the private direct method (H1). RQ2 tested the applicability of the BIM to cyber aggression. We expected that each BIM step would be positively linked with the next step on the chain of helping (H2), and that a negative relationship would exist between each of the BIM steps and their respective barriers (H3). Finally, RQ3 explored individual characteristics, like internalizing psychopathology or previous experiences with cyber aggression, that might influence bystanders’ responses at each step of the BIM. As these analyses were exploratory, we did not make directional hypotheses.
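As a reading aid, H2 and H3 can be written as a schematic recursive path model, assuming linear relations. Here $S_k$ is a participant's score on Step $k$, $B_{k,j}$ is barrier $j$ attached to Step $k$ in Figure 1, and $a_k$, $\beta_k$, $\gamma_{k,j}$, $\varepsilon_k$ are our own notation for intercepts, step-to-step paths, barrier paths, and residuals. This is a sketch of the hypotheses only; the fitted path model may include additional paths (e.g., the individual factors in RQ3) or constraints not shown here.

```latex
\begin{aligned}
S_1 &= a_1 + \sum_{j \in \mathcal{B}_1} \gamma_{1,j}\, B_{1,j} + \varepsilon_1, \\
S_{k+1} &= a_{k+1} + \beta_k S_k + \sum_{j \in \mathcal{B}_{k+1}} \gamma_{k+1,j}\, B_{k+1,j} + \varepsilon_{k+1},
  \qquad k = 1, \dots, 4, \\
&\mathcal{B}_1 = \{1\},\; \mathcal{B}_2 = \{2A, 2B, 2C\},\; \mathcal{B}_3 = \{3\},\;
  \mathcal{B}_4 = \{4\},\; \mathcal{B}_5 = \{5A, 5B\}, \\
&\text{H2: } \beta_k > 0 \text{ for } k = 1, \dots, 4,
  \qquad \text{H3: } \gamma_{k,j} < 0 \text{ for all } k \text{ and } j \in \mathcal{B}_k .
\end{aligned}
```

Written this way, the face-to-face assumption that each barrier affects only its own step corresponds to fixing every barrier-to-other-step path at zero.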

Methods

Participants

The final sample consisted of 1,073 participants (age range 18–25 years, M = 20.18, SD = 1.63, Mdn = 20.00), who completed this study in exchange for partial course credit at a public university in Western Canada. Participants reported their gender identity as: women = 74.0%; men = 23.5%; non-binary = 1.9%; not listed = 0.2%; prefer not to disclose = 0.5%. The majority of participants (94.6%) did not consider themselves as a person who is trans, transgender, gender non-conforming or an analogous term. Their reported racial/ethnic identity was: Asian = 56.9%; White/European = 32.4%; East Indian = 4.7%; Middle Eastern = 4.4%; Hispanic/Latino = 3.5%; Black/African Canadian = 2.2%; Aboriginal/Indigenous Canadian/Native Canadian/First Nations/Metis/Inuit = 2.2%; Other = 6.3%; these percentages sum to more than 100% because participants were asked to select all identities that applied, which could be more than one.

Procedure

Data collection took place online on Qualtrics between July 5th, 2022 and November 30th, 2022. The study was pre-registered on the Open Science Framework (https://osf.io/xkh9r/). After indicating consent and completing a demographic questionnaire, participants were asked to view a Twitter timeline belonging to a fictitious person named “Becky R”. Participants were told that Becky R’s timeline was public and open for anyone to view and to leave comments. Participants were also instructed to imagine that they knew and were friends with the original poster (Becky R), but that they did not know any of the commenters or the relationship between the commenters and the original poster.

All interactions between Becky R and the commenters were benign, except for one. This occurred on a post with the caption “OMG JOSH PROPOSED <3” that included a real video, originally posted publicly on YouTube by an actual user, in which someone proposes marriage with a song. Along with supportive comments (e.g., “The guy can actually sing! Congratulations!” and “Happy for the two of you”), we included a cyber aggressive comment that had appeared on the original video: “With no natural predators there’s a chance these two might breed”. This comment was chosen following pilot testing (N = 473) to ensure that it was acceptably realistic and aggressive. It was also selected because, although it is an insult, it is also humorous, which leaves room for variability in bystander responses. Nobody had responded to this cyber aggressive comment in the timeline participants viewed. During pilot testing we also explored different platforms (e.g., Facebook, Instagram, Reddit, and TikTok), and settled on Twitter because nearly all participants were familiar with it and agreed it was believable for Twitter comments to comprise a mix of strangers and friends of the original poster. Participants were randomly assigned to view a timeline in which the post containing the aggressive comment appeared as the second, tenth, or twentieth post. Everything else in the timeline was the same for all participants.

Participants viewed the Twitter timeline, and we assessed their responses to Step 1 and Barrier 1. We then drew their attention to the aggressive comment (regardless of their response in Step 1) and assessed the remaining steps and barriers in the BIM. For steps and barriers with no existing measures, we created measures and solicited feedback from two researchers with subject matter expertise. Internal consistency (Cronbach’s α) was generally acceptable (see Table 1), with the Cyberbullying Offending Scale as a notable exception. Confirmatory Factor Analyses (CFAs) on the items of each measure, with their factor loadings, are reported in Appendix A. Participants then completed the measures assessing internalizing symptoms and experiences with cyberbullying, in random order, before being debriefed.
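Table 1 reports Cronbach’s α for each multi-item measure. As a minimal illustrative sketch of the textbook formula, α = k/(k − 1) × (1 − Σ item variances / variance of the total score), the statistic can be computed with NumPy as below. The function name `cronbach_alpha` and the sample ratings are our own, and this is not the analysis code used in the study. Because α requires at least two items, the sketch rejects single-item input, consistent with the “-” entries for single-item measures in Table 1.

```python
import numpy as np


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) array of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha is undefined for fewer than two items")
    item_vars = items.var(axis=0, ddof=1)      # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)  # sample variance of the summed scale
    return float(k / (k - 1) * (1.0 - item_vars.sum() / total_var))


# Hypothetical ratings from five respondents on a three-item, 1-5 scale.
ratings = np.array([[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5], [1, 2, 1]])
print(round(cronbach_alpha(ratings), 3))
```

Identical items give α = 1, and α falls as items share less variance.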

Measures

Demographic and Social Media Use Questionnaire

Participants reported their age, gender, and racial/ethnic identity. Participants were also asked about the social media websites they frequent and to estimate the amount of time they spend on social media on a typical day, to ensure familiarity with social media.

Step 1—Noticing the Event

Participants rated their agreement, on a Likert scale from 1 (Strongly Disagree) to 5 (Strongly Agree), with whether they had seen an interaction on the Twitter timeline that fell under the category of “online harassment”, “online hate”, “trolling”, “cyberbullying”, or “online aggression”, and were asked to describe any such interaction they saw. Two independent coders scored the descriptions to determine whether they referred to the intended aggressive comment. Conflicting codes (n = 4) were resolved by the first author. Participants who agreed or strongly agreed that they had seen something under one of the presented cyber aggressive categories and who described the comment “With no natural predators, there’s a chance these two might breed” were coded as having noticed the event.
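The coding rule for noticing the event combines a rating criterion with a content criterion. A minimal Python sketch follows. It assumes a hypothetical record holding the five category ratings and a single flag for the coders’ agreed judgment of the description. The names `Step1Response` and `noticed_event` are illustrative only.

```python
from dataclasses import dataclass

AGREE_THRESHOLD = 4  # 4 = Agree, 5 = Strongly Agree on the 1-5 scale


@dataclass
class Step1Response:
    # Hypothetical layout: one rating per category (harassment, hate,
    # trolling, cyberbullying, aggression).
    category_ratings: list[int]
    # Final coded judgment that the description referred to the aggressive comment.
    described_target_comment: bool


def noticed_event(resp: Step1Response) -> bool:
    """Noticed only if the participant agreed at least one category applied
    AND their description referred to the intended aggressive comment."""
    agreed_any = any(r >= AGREE_THRESHOLD for r in resp.category_ratings)
    return agreed_any and resp.described_target_comment
```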

Barrier 1—Distraction

Using a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree), participants rated their agreement with: I feel distracted, I have a lot of things on my mind, I am multi-tasking, and There is something else that is occupying my thoughts. The total score was computed by averaging all items, with higher scores representing greater distraction.

Step 2—Interpreting the Event as Problematic

Participants rated how “aggressive”, how “unexpected”, and how “serious” they found the highlighted comment, using a Likert scale ranging from 1 (Not at All) to 5 (Very). Participants also rated their agreement with the following item, on a scale from 1 (Strongly Disagree) to 5 (Strongly Agree): If someone left a comment like this on my social media page, I would appreciate it if someone spoke up for me and defended me. Responses to the items were averaged, with higher scores representing an interpretation of the event as more problematic.

Barrier 2A—Ambiguity

Participants were prompted to give their definitions of “cyber aggression”, “online harassment”, “cyberbullying”, and “online hate” in four open-ended questions to prime them to think about what they consider online aggression. Next, using a Likert scale ranging from 1 (Not at all Confident) to 5 (Extremely Confident), participants rated their confidence in their rating of how aggressive the comment they saw was. The item was reverse coded so higher scores represent greater ambiguity about whether or not the comment is cyber aggression. Other pre-registered items were removed as they did not yield an adequate Cronbach’s α.

Barrier 2B—Relationship

Participants reported their perceptions of the closeness between the original poster and the person who made the aggressive comment, ranging from 1 (Strangers) to 5 (Best Friends), with higher scores denoting a closer relationship.

Barrier 2C—Pluralistic Ignorance

Using a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree), participants indicated whether or not they believe that most people who saw this comment would not find anything wrong with it, with higher scores representing greater pluralistic ignorance. Other pre-registered items regarding whether or not most people would ignore the comment or not think it was a big deal were dropped because they did not yield an adequate Cronbach’s α.

Step 3—Taking Personal Responsibility

With the highlighted comment still on the screen, participants rated how responsible and how motivated they would personally feel to act if they encountered a comment like that, on a Likert scale ranging from 1 (Not at All) to 5 (Very). Participants were also asked how important it would be to them to act personally if they encountered such a comment, on a scale from 1 (Not at All) to 5 (Very). Responses to the three items were averaged, and higher scores represent a stronger indication that the participant would take responsibility for intervening.

Barrier 3—Diffusion of Responsibility

Participants answered 10 items (e.g., Someone else will probably take action) on a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). Six items assessed diffusion of responsibility due to the presence of other cyber bystanders, and four assessed diffusion due to the passage of time. Once relevant items were reverse-coded, the total score was computed by averaging all items, with higher scores denoting greater diffusion of responsibility.
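Several scores in this section (Barriers 2A, 3, 4, and 5B) involve reverse-coding. On a bounded scale, reverse-coding maps a response x to (minimum + maximum) − x. On a 1 to 5 scale this swaps 1 and 5 (and 2 and 4), and on the −5 to +5 slider it flips the sign. The NumPy sketch below is minimal and illustrative. The function names are ours, and because the text does not say which Barrier 3 items are reverse-keyed, the item index in the usage line is hypothetical.

```python
import numpy as np


def reverse_code(x, lo: float = 1, hi: float = 5):
    """Mirror scores within [lo, hi]: x -> lo + hi - x."""
    return lo + hi - np.asarray(x, dtype=float)


def scale_mean(responses, reverse_idx=(), lo: float = 1, hi: float = 5):
    """Per-respondent mean of an (n, k) item array after reverse-coding the listed columns."""
    data = np.array(responses, dtype=float)  # np.array copies, so the input is untouched
    idx = list(reverse_idx)
    if idx:
        data[:, idx] = reverse_code(data[:, idx], lo, hi)
    return data.mean(axis=1)


# Hypothetical: two respondents, three items, where item index 2 is reverse-keyed.
print(scale_mean([[4, 5, 2], [1, 2, 5]], reverse_idx=[2]))
```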

Step 4—Knowing What to Do

With the highlighted comment still on the screen, participants rated their agreement, on a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree), with four items: I know what I would do if I encountered a comment like this, I have a clear idea of what I would do if I encountered a comment like this, I know how to support someone who got a comment like this on their page, and I am confident I would know what to do if I encountered a comment like this. Responses were averaged, and higher scores represent greater understanding of the options and preparedness to act.

Barrier 4—Lack of Competence

Participants indicated how comfortable they would feel in each of nine situations, each answered on a Likert scale from 1 (Very Uncomfortable) to 5 (Very Comfortable). The situations covered the ability to navigate a social media interface and perform tasks on it (e.g., reporting a comment or sending a private message), the ability to understand and navigate social interactions on social media (e.g., picking up on social cues on social media), and the ability to navigate challenging social situations in general (e.g., knowing what to say during an argument). All items were reverse-coded, and a total score was computed by averaging all items. Higher scores denote a greater lack of competence to intervene when encountering an aggressive comment online.

Step 5—Taking Action

We presented participants with 15 potential actions and asked how likely they would be to take each of them if they encountered the highlighted comment, on a Likert scale from 1 (Very Unlikely) to 5 (Very Likely). The actions were grouped into five categories, which we ranked from highest to lowest, based on the amount of time, effort, and resources they require, as: public direct intervention (e.g., confront the person who commented), private direct intervention (e.g., privately reach out to the original poster to show support), indirect intervention (e.g., report the person who made that comment), non-intervention (e.g., do nothing), and counter-intervention (e.g., post a similar comment). Counter-intervention is the lowest-ranked option because it adds more cyber aggression. Each participant was coded as endorsing the category with the highest average rated likelihood. In case of a tie between two categories, participants were coded as endorsing the higher-ranked of the two. All categories showed adequate reliability, assessed by Cronbach’s α (private direct intervention = .873; public direct intervention = .681; indirect intervention = .838; non-intervention = .854; counter-intervention = .770).
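The Step 5 coding rule is that the highest category mean wins and ties go to the higher-ranked category. A minimal Python sketch of this rule follows. The category keys and the dictionary input format are our own illustrative choices, and the number of items per category is left open.

```python
from statistics import mean

# Categories in rank order, highest first, as described in the text.
CATEGORY_RANK = [
    "public_direct",
    "private_direct",
    "indirect",
    "non_intervention",
    "counter_intervention",
]


def endorsed_category(ratings: dict[str, list[int]]) -> str:
    """Category with the highest mean likelihood rating (1-5).

    Ties resolve to the higher-ranked category: max() returns the first
    maximal item, and CATEGORY_RANK is iterated from the highest rank down.
    """
    means = {cat: mean(ratings[cat]) for cat in CATEGORY_RANK}
    return max(CATEGORY_RANK, key=means.__getitem__)
```

For example, a participant whose private direct and non-intervention means are both 4.0, with every other category lower, is coded as private direct intervention.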

Barrier 5A—Audience Inhibition

Participants completed the Failure to Intervene Due to Audience Inhibition subscale (Burn, 2009), modified to refer to online harassment, along with two items that we created for this study. Each item was answered on a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). The total score was computed by averaging responses to all items, with higher scores denoting greater inhibition to intervene.

Barrier 5B—Costs Exceed Rewards

Participants were asked to list all the potential costs and rewards of intervening if they were to encounter the comment on social media. Next, they were presented with the prompt “I think that in this situation, the costs of intervening outweigh the rewards”, and reported their agreement with this item on a sliding scale ranging from −5 to +5, with 0 at the center. The item was reverse coded so that negative values represent the rewards outweighing the costs, while positive values represent the costs outweighing the rewards.

Depressive Symptoms

The Center for Epidemiologic Studies Depression Scale (CES-D; Radloff, 1977) is a 20-item self-report measure. Participants rated their affect and behavior in the past week on a Likert scale ranging from 0 (Rarely or none of the time–less than 1 day) to 3 (Most or all of the time–5 to 7 days). Total scores were calculated by summing the responses to all items. Scores range from 0 to 60, with higher scores demonstrating more depressive symptoms.
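Under the published CES-D scoring convention, the four positively worded items (items 4, 8, 12, and 16) are reverse-scored before summing, which keeps the total within 0 to 60. A minimal Python sketch under that convention follows. The function name is illustrative.

```python
# Published CES-D convention: 20 items rated 0-3; the positively worded items
# (4, 8, 12, 16) are reverse-scored (0 <-> 3, 1 <-> 2) before summing.
POSITIVE_ITEMS = frozenset({4, 8, 12, 16})  # 1-based item numbers


def cesd_total(responses: list[int]) -> int:
    """CES-D total score (0-60) from the 20 item responses in questionnaire order."""
    if len(responses) != 20:
        raise ValueError("the CES-D has 20 items")
    if any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("CES-D items are rated 0-3")
    return sum(3 - r if item in POSITIVE_ITEMS else r
               for item, r in enumerate(responses, start=1))
```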

Social Anxiety Symptoms

The Liebowitz Social Anxiety Scale (LSAS; Liebowitz et al., 1987) asks participants to rate both their fear and their avoidance of 11 social interaction and 13 performance situations, using a Likert scale ranging from 0 (None) to 3 (Severe). The total score was computed by summing all ratings. Scores range from 0 to 144, with higher scores denoting greater severity of social anxiety.
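The 0 to 144 range follows from 24 situations, each rated twice (fear and avoidance) from 0 to 3: 24 × 2 × 3 = 144. A minimal Python sketch of the summed total follows. The names are illustrative.

```python
N_SITUATIONS = 24  # each situation receives a fear rating and an avoidance rating
MAX_RATING = 3     # highest rating on the 0-3 scale


def lsas_total(fear: list[int], avoidance: list[int]) -> int:
    """Sum of all 48 ratings; ranges from 0 to 24 * 2 * 3 = 144."""
    for ratings in (fear, avoidance):
        if len(ratings) != N_SITUATIONS:
            raise ValueError("expected one rating per situation")
        if any(r < 0 or r > MAX_RATING for r in ratings):
            raise ValueError("ratings must be between 0 and 3")
    return sum(fear) + sum(avoidance)
```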

Prior Experiences With Cyberbullying

We used the Cyberbullying Victimization and Cyberbullying Offending Scales (Patchin & Hinduja, 2015) to capture historical involvement with cyber aggression. After reading a definition of cyberbullying, participants responded to items about distinct cyberbullying experiences they may have had over the past 30 days, first as a target and then as an aggressor, with each item answered on a Likert scale ranging from 0 (Never) to 4 (Many Times). Because responses were right-skewed, we dichotomized each scale: participants who scored 0 (i.e., answered Never to every item) were coded as not having been involved in cyberbullying in that role, and all other participants were coded as having been involved. Although the offending scale did not show adequate reliability (α = .534; Table 1), we retained it owing to the exploratory nature of the aim involving experiences with cyberbullying.
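The dichotomization can be stated compactly: a respondent counts as involved in a given role if any item is above 0 (Never). A minimal Python sketch follows. The function name is illustrative.

```python
def ever_involved(item_scores: list[int]) -> bool:
    """True if any 0-4 frequency item is above 0 (Never); False only if all items are 0."""
    if any(s not in range(5) for s in item_scores):
        raise ValueError("items are scored 0 (Never) to 4 (Many Times)")
    return any(s > 0 for s in item_scores)
```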

Perceptions of Twitter

Because Twitter was the platform used in this study, participants reported if they had ever used Twitter before, and how civil they find Twitter, ranging from 1 (Not at all) to 5 (Very). Lastly, participants rated how realistic they found the aggressive comment to be on a scale ranging from 1 (Not at all) to 5 (Very).

Results

Descriptive Statistics and Bivariate Correlations

Descriptive statistics and bivariate correlations (Table 1) showed generally positive correlations between BIM steps. Barriers were negatively correlated with the steps to which they were assigned, with the exception of audience inhibition (Barrier 5A), which was uncorrelated with Step 5 (r = .00). All continuous variables were approximately normal, with |skewness| < 2 and |kurtosis| < 3, which was confirmed by visual inspection of Q-Q plots. We retained all potential outliers because each had a leverage score < 0.2, meaning none were deemed influential. All computed variance inflation factors were < 10, indicating that intercorrelations between variables were acceptable.
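The screening criteria above (skewness, kurtosis, leverage, variance inflation factors) can be sketched with NumPy. These are the moment-based (uncorrected) skewness and kurtosis estimators, and treating the kurtosis cutoff as applying to excess kurtosis is our assumption. Statistical packages may apply small-sample corrections. Leverage is the diagonal of the hat matrix, and each VIF is a diagonal element of the inverse predictor correlation matrix. The function names and simulated data are illustrative.

```python
import numpy as np


def skewness(x) -> float:
    """Moment-based sample skewness (g1)."""
    d = np.asarray(x, dtype=float)
    d = d - d.mean()
    return float(np.mean(d**3) / np.mean(d**2) ** 1.5)


def excess_kurtosis(x) -> float:
    """Moment-based excess kurtosis (g2); 0 for a normal distribution."""
    d = np.asarray(x, dtype=float)
    d = d - d.mean()
    return float(np.mean(d**4) / np.mean(d**2) ** 2 - 3.0)


def leverage(predictors) -> np.ndarray:
    """Hat-matrix diagonal for a design with an intercept plus the given predictor columns."""
    X = np.column_stack([np.ones(len(predictors)), np.asarray(predictors, dtype=float)])
    q, _ = np.linalg.qr(X)  # reduced QR: H = Q Q^T, so h_ii is the squared norm of row i of Q
    return np.sum(q**2, axis=1)


def vif(predictors) -> np.ndarray:
    """Variance inflation factors: the diagonal of the inverse predictor correlation matrix."""
    r = np.corrcoef(np.asarray(predictors, dtype=float), rowvar=False)
    return np.diag(np.linalg.inv(r))


# Hypothetical data: 300 respondents, three predictor columns.
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 3))
print(all(abs(skewness(c)) < 2 and abs(excess_kurtosis(c)) < 3 for c in X.T))
print(leverage(X).max() < 0.2, vif(X).max() < 10)
```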

Experiences With Social Media

Participants reported that they typically spend a mean of 212.75 minutes (or ~3.55 hours) every day on social media (SD = 133.09). Most participants (71.1%) endorsed they had used Twitter before, and 62.6% perceived conversations on Twitter to be not at all civil (15.3%) or mostly not civil (47.3%). Additionally, 95.4% of the sample deemed the aggressive interaction they were shown in this study as at least somewhat realistic.

Action Taken

In testing RQ1 and H1, we found that most participants reported that they would either opt for private direct intervention (39.4%) or non-intervention (36.3%), were they to encounter the comment on social media. In contrast, 9.8% endorsed indirect intervention and 9.7% endorsed public direct intervention. Only 4.8% of participants reported they would engage in counter-intervention.

Path Modelling

Based on the theory behind the BIM and on other work using path analysis to validate the BIM in face-to-face situations, we constructed a path model containing the five steps in a linear sequence to address RQ2 and test H2. Next, to test H3, we added the barriers to each BIM step they were theorized to influence. Finally, to address RQ3, we added the individual factors in an exploratory manner. For each model, we noted the fit indices and whether the additional information resulted in incrementally better fit. We considered only modification indices that were supported by theory and consistent with the proposed BIM structure. Hence, we rejected modification indices that prescribed (1) a step predicting a barrier, (2) a step predicting a preceding step, or (3) a step or barrier predicting an individual factor. Each variable included had fewer than 3% of its values missing, and missing values were handled with full information maximum likelihood (FIML). One-way ANOVAs revealed a significant effect of aggressive comment placement on Step 2, F(2, 1,116) = 5.10, p = .006, but not on the other BIM steps. Post-hoc analysis found that the aggressive comment was seen as less problematic when it was the second post than when it was the tenth post, t(751) = −3.19, p = .004, whereas placing it as the twentieth post did not significantly affect responses. Thus, comment placement was added as a covariate of Step 2 in the path analysis.
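The models here were estimated with FIML, and the sketch below is only a simplified illustration of what a recursive path model estimates. For complete data and a recursive model whose disturbances are assumed uncorrelated, maximum likelihood point estimates of the path coefficients coincide with separate OLS regressions of each endogenous variable on its predictors. Standardized path coefficients can therefore be sketched that way. The sketch does not handle missing data, standard errors, or fit indices, and the variable names and simulated data are hypothetical.

```python
import numpy as np


def standardize(a) -> np.ndarray:
    a = np.asarray(a, dtype=float)
    return (a - a.mean()) / a.std(ddof=1)


def fit_recursive_path_model(data: dict, paths: dict) -> dict:
    """Standardized path coefficients, one OLS regression per endogenous variable.

    data:  variable name -> 1-D array of scores (complete cases only)
    paths: endogenous variable name -> list of its predictor names
    """
    z = {name: standardize(values) for name, values in data.items()}
    coefs = {}
    for outcome, predictors in paths.items():
        X = np.column_stack([z[p] for p in predictors])
        # No intercept column is needed because every variable is standardized (mean 0).
        beta, *_ = np.linalg.lstsq(X, z[outcome], rcond=None)
        for predictor, b in zip(predictors, beta):
            coefs[(predictor, outcome)] = float(b)
    return coefs


# Hypothetical simulated data for a simple chain Step 1 -> Step 2 -> Step 3.
rng = np.random.default_rng(42)
s1 = rng.normal(size=500)
s2 = 0.5 * s1 + rng.normal(size=500)
s3 = 0.6 * s2 + rng.normal(size=500)
model = {"step2": ["step1"], "step3": ["step1", "step2"]}
print(fit_recursive_path_model({"step1": s1, "step2": s2, "step3": s3}, model))
```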

Model 1

Model 1 contained the five steps of the BIM, with no barriers included. This model fit the data well, χ²(4) = 3.91, p = .419, CFI = 1.00, TLI = 1.00, RMSEA = 0.00. However, Model 1 has only 4 degrees of freedom while estimating 16 parameters, so it imposes few constraints on the data. As a consequence, Model 1 is close to a just-identified model, which necessarily has perfect fit, and its fit indices should be interpreted with caution.
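The following accounting reproduces the reported degrees of freedom. It assumes that Model 1 includes p = 5 observed variables and a mean structure, as is typical when FIML is used, with q = 16 free parameters:

```latex
\begin{aligned}
\text{sample moments} &= \frac{p(p+3)}{2} = \frac{5 \times 8}{2} = 20
  \quad (5 \text{ means} + 15 \text{ variances and covariances}),\\
df &= \text{sample moments} - q = 20 - 16 = 4.
\end{aligned}
```

A just-identified model estimates as many parameters as there are sample moments. It therefore has df = 0 and reproduces the data exactly. With only 4 df remaining, Model 1 is close to that case.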

Table 1. Descriptive Statistics and Bivariate Correlations Between Study Variables.

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M (SD) / N (%) | 2.06 | 2.91 | 2.56 | 3.15 | - | 3.03 | 2.84 | 2.36 | 3.27 | 2.93 | 2.25 | 2.51 | -0.96 | 60.78 | 20.77 | 226 | 591 |
| Cronbach’s α (number of items) | .937 (5 items) | .766 (4 items) | .856 (3 items) | .942 (4 items) | .676 (15 items) | .755 (4 items) | - (1 item) | - (1 item) | - (1 item) | .716 (10 items) | .821 (9 items) | .725 (4 items) | - (1 item) | .953 (24 items) | .912 (20 items) | .534 (8 items) | .710 (8 items) |
| 1. Step 1 | | | | | | | | | | | | | | | | | |
| 2. Step 2 | .24*** [.19, .30] | | | | | | | | | | | | | | | | |
| 3. Step 3 | .21*** [.15, .26] | .62*** [.58, .66] | | | | | | | | | | | | | | | |
| 4. Step 4 | .01 [-.06, .06] | -.15*** [-.20, -.09] | -.12*** [-.18, -.06] | | | | | | | | | | | | | | |
| 5. Step 5 | .07* [.01, .13] | .42*** [.38, .47] | .49*** [.45, .54] | -.06 [-.12, .00] | | | | | | | | | | | | | |
| 6. Barrier 1 | -.13*** [-.19, -.08] | -.11*** [-.17, -.06] | -.06* [-.12, -.01] | .00 [-.06, .06] | -.03 [-.09, .03] | | | | | | | | | | | | |
| 7. Barrier 2A | -.02 [-.08, .04] | -.10*** [-.16, -.04] | -.04 [-.10, .01] | -.28*** [-.33, -.22] | .04 [-.02, .10] | .01 [-.05, .07] | | | | | | | | | | | |
| 8. Barrier 2B | -.22*** [-.28, -.17] | -.44*** [-.49, -.39] | -.37*** [-.42, -.32] | .01 [-.05, .07] | -.27*** [-.33, -.22] | -.02 [-.07, .04] | .06* [.01, .12] | | | | | | | | | | |
| 9. Barrier 2C | -.15*** [-.21, -.09] | -.51*** [-.55, -.47] | -.38*** [-.43, -.33] | .03 [-.03, .09] | -.32*** [-.37, -.26] | -.00 [-.06, .06] | .06 [-.00, .11] | .37*** [.32, .42] | | | | | | | | | |
| 10. Barrier 3 | -.02 [-.08, .04] | -.14*** [-.20, -.08] | -.10** [-.16, -.04] | .18*** [.12, .24] | -.12*** [-.17, -.06] | .09** [.03, .15] | -.06* [-.12, -.01] | -.01 [-.07, .05] | .11*** [.05, .17] | | | | | | | | |
| 11. Barrier 4 | .01 [-.05, .07] | .05 [-.01, .11] | -.01 [-.07, .05] | -.37*** [-.42, -.32] | -.04 [-.10, .02] | -.03 [-.09, .03] | .22*** [.16, .28] | -.01 [-.07, .05] | -.03 [-.09, .03] | -.10*** [-.16, -.04] | | | | | | | |
| 12. Barrier 5A | -.04 [-.10, .02] | -.10*** [-.16, -.04] | -.02 [-.08, .04] | .28*** [.23, .33] | .00 [-.06, .06] | .12*** [.06, .18] | -.09** [-.15, -.03] | .02 [-.04, .08] | .05 [-.01, .11] | .29*** [.24, .35] | -.28*** [-.33, -.23] | | | | | | |
| 13. Barrier 5B | -.04 [-.10, .02] | -.27*** [-.33, -.22] | -.34*** [-.39, -.29] | -.02 [-.08, .04] | -.32*** [-.38, -.27] | .02 [-.03, .08] | .00 [-.06, .06] | .17*** [.11, .22] | .12*** [.06, .18] | -.03 [-.09, .03] | .13*** [.07, .19] | -.12*** [-.18, -.07] | | | | | |
| 14. LSAS | -.01 [-.07, .05] | .10** [.04, .15] | .11*** [.06, .17] | -.16*** [-.22, -.11] | .04 [-.02, .09] | -.14*** [-.20, -.08] | .04 [-.02, .10] | -.03 [-.09, .03] | -.03 [-.09, .03] | -.14*** [-.20, -.09] | .37*** [.32, .42] | -.32*** [-.37, -.27] | -.01 [-.07, .05] | | | | |
| 15. CES-D | .02 [-.04, .08] | .09** [.03, .15] | .14*** [.09, .20] | -.03 [-.08, .03] | .06* [.00, .12] | -.25*** [-.31, -.20] | -.05 [-.11, .01] | -.01 [-.07, .05] | -.07* [-.13, -.01] | -.02 [-.08, .04] | .15*** [.10, .21] | -.16*** [-.21, -.10] | -.00 [-.06, .06] | .45*** [.40, .49] | | | |
| 16. Cyberbullying-O | .02 [-.03, .08] | -.05 [-.11, .01] | -.03 [-.09, .03] | .03 [-.03, .09] | -.03 [-.09, .03] | -.04 [-.10, .02] | -.06* [-.12, -.01] | .00 [-.06, .06] | -.03 [-.09, .03] | -.05 [-.10, .01] | -.10*** [-.16, -.04] | -.02 [-.08, .04] | .04 [-.02, .10] | -.02 [-.08, .04] | .05 [-.01, .11] | | |
| 17. Cyberbullying-V | .03 [-.03, .09] | .03 [-.03, .08] | .04 [-.02, .10] | .06* [.00, .12] | .04 [-.02, .10] | -.06* [-.12, -.00] | -.12*** [-.18, -.06] | .02 [-.03, .08] | .03 [-.03, .09] | .02 [-.04, .08] | -.09** [-.15, -.04] | .02 [-.04, .07] | -.00 [-.06, .06] | .02 [-.04, .08] | .16*** [.10, .22] | .27*** [.22, .33] | |

Note. Values in square brackets indicate the 95% confidence interval for each correlation. *indicates p < .05. **indicates p < .01, ***indicates p < .001; Step 1 = Noticing the Event; Step 2 = Interpreting Event as Problematic; Step 3 = Taking Personal Responsibility; Step 4 = Knowing What to Do; Step 5 = Taking Action; Barrier 1 = Distraction; Barrier 2A = Ambiguity; Barrier 2B = Relationship; Barrier 2C = Pluralistic Ignorance; Barrier 3 = Diffusion of Responsibility; Barrier 4 = Lack of Competence; Barrier 5A = Audience Inhibition; Barrier 5B = Costs Exceed Rewards; LSAS = Liebowitz Social Anxiety Scale (Liebowitz, et al., 1987); CES-D = The Center for Epidemiologic Studies Depression Scale (Radloff, 1977); Cyberbullying-O = Cyberbullying Offending (Patchin & Hinduja, 2015); Cyberbullying-V = Cyberbullying Victimization (Patchin & Hinduja, 2015).


Model 2

Model 2 added the barriers to the model containing the five steps. This model (Figure 2) fit the data well overall, χ²(32) = 58.55, p < .001, CFI = .985, TLI = .970, RMSEA = .030. Although these statistics technically indicate a poorer fit than Model 1, we retained Model 2 because its fit was still acceptable and it has a theoretical justification. We found significant positive relationships among Steps 2, 3, and 5 (Table 2). We also found a negative relationship between Steps 1 and 5, such that participants who initially noticed the aggressive comment (on their own, before we drew their attention to it) were less likely to take active, prosocial action. Step 2 was a negative predictor of Step 4, meaning that participants who interpreted the event as more problematic were less certain they knew what to do in that situation.

Figure 2. Model 2 Results: Steps and Barriers of the Bystander Intervention Model.

Note. Dotted lines denote a significant negative relationship and solid lines denote a significant positive relationship between variables. Step 1 = Noticing the Event; Step 2 = Interpreting Event as Problematic; Step 3 = Taking Personal Responsibility; Step 4 = Knowing What to Do; Step 5 = Taking Action; Barrier 1 = Distraction; Barrier 2A = Ambiguity; Barrier 2B = Relationship; Barrier 2C = Pluralistic Ignorance; Barrier 3 = Diffusion of Responsibility; Barrier 4 = Lack of Competence; Barrier 5A = Audience Inhibition; Barrier 5B = Costs Exceed Rewards; β = standardized regression coefficient.

The proposed barriers almost always impeded performance on the steps, but this was not limited to the specific step to which they were theoretically assigned. For example, the perception that the costs of intervening exceed the benefits (Barrier 5B, expected to impede Step 5) negatively predicted Steps 2, 3, and 5 (Table 2).

Model 3

Although Model 3 fit the data well, CFI = .983, TLI = .959, RMSEA = .029 (Table A4), its fit was significantly worse than that of Model 2, Δχ²(8) = 352.25, p < .001. The ΔAIC and ΔBIC between Model 2 and Model 3 both exceeded 6, indicating a strong preference for the model with the lower values, Model 2. Our analysis of Model 3 was exploratory, and no strong theory dictates the inclusion of the selected individual characteristics in the Bystander Intervention Model. Therefore, although Model 3 appears to fit well and its results can be discussed, we recommend retaining Model 2 in the interest of parsimony. See Appendix B for Model 3 results.
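The model comparison can be sketched with standard formulas: the likelihood-ratio (χ² difference) p-value, AIC = −2 log L + 2k, and BIC = −2 log L + k ln n. For an even number of degrees of freedom, such as the 8 df reported here, the χ² upper-tail probability has a closed form, so the sketch needs no external libraries. Treating the reported χ²(8) as a nested-model difference test is our reading. The log-likelihoods and parameter counts in the usage lines are hypothetical.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """P(X > x) for a chi-square variable with an even number of degrees of freedom.

    Closed form: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)**i / i! incrementally
        total += term
    return math.exp(-half) * total


def aic(loglik: float, k: int) -> float:
    return -2.0 * loglik + 2.0 * k


def bic(loglik: float, k: int, n: int) -> float:
    return -2.0 * loglik + k * math.log(n)


print(chi2_sf_even_df(352.25, 8))  # p-value for the reported difference test
# Hypothetical log-likelihoods and parameter counts, for illustration only.
print(aic(-5000.0, 60) - aic(-4990.0, 52), bic(-5000.0, 60, 1073) - bic(-4990.0, 52, 1073))
```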

Discussion

To our knowledge, this is the first study to comprehensively test all steps and barriers of the BIM in the context of cyber aggression, a noted gap in the literature (Kim, 2021). As the BIM prescribes, our study found a positive, sequential relationship between success in noticing the event (Step 1), interpreting the event as problematic (Step 2), and taking personal responsibility (Step 3). Also consistent with the BIM, key barriers negatively predicted each step. Some discrepancies existed between the BIM as established for face-to-face emergencies and what we found in our data. Namely, the sequential nature of the BIM dissipated after Step 3: we found no relationship between Step 3 and Step 4, or between Step 4 and Step 5; rather, Steps 2 and 3 directly and positively predicted Step 5. In addition, barriers were negatively related to steps other than the ones to which they were assigned, and they tended to affect more than one step. See Appendix C for a figure illustrating these similarities and discrepancies.

Table 2. Regression Analysis Results for Model 2.

| Predictor | β (SE) | z | p |
|---|---|---|---|
| Predicting Step 1: Noticing the Event | | | |
| Barrier 1: Distraction | −.16 (.04) | −4.57 | < .001 |
| Barrier 2B: Relationship | −.13 (.02) | −5.78 | < .001 |
| Barrier 2C: Pluralistic Ignorance | −.09 (.03) | −2.73 | .006 |
| Predicting Step 2: Interpreting Event as Problematic | | | |
| Step 1: Noticing the Event | .10 (.02) | 4.70 | < .001 |
| Barrier 2A: Ambiguity | −.06 (.02) | −2.78 | .005 |
| Barrier 2B: Relationship | −.16 (.02) | −10.36 | < .001 |
| Barrier 2C: Pluralistic Ignorance | −.32 (.02) | −14.17 | < .001 |
| Barrier 1: Distraction | −.08 (.02) | −3.28 | < .001 |
| Barrier 3: Diffusion of Responsibility | −.12 (.04) | −3.09 | .002 |
| Barrier 5A: Audience Inhibition | −.07 (.03) | −2.71 | .007 |
| Barrier 5B: Costs Exceed Rewards | −.07 (.01) | −8.22 | < .001 |
| Predicting Step 3: Taking Personal Responsibility | | | |
| Step 1: Noticing the Event | .03 (.03) | 1.17 | .242 |
| Step 2: Interpreting Event as Problematic | .84 (.06) | 15.10 | < .001 |
| Barrier 3: Diffusion of Responsibility | .01 (.05) | 0.26 | .799 |
| Barrier 5B: Costs Exceed Rewards | −.06 (.01) | −5.60 | < .001 |
| Predicting Step 4: Knowing What to Do | | | |
| Step 1: Noticing the Event | .05 (.03) | 1.53 | .125 |
| Step 2: Interpreting Event as Problematic | −.14 (.04) | −3.25 | .001 |
| Step 3: Taking Personal Responsibility | −.06 (.04) | −1.71 | .087 |
| Barrier 4: Lack of Competence | −.45 (.05) | −9.61 | < .001 |
| Barrier 2A: Ambiguity | −.26 (.03) | −8.16 | < .001 |
| Barrier 5A: Audience Inhibition | .24 (.04) | 6.34 | < .001 |
| Predicting Step 5: Taking Action | | | |
| Step 1: Noticing the Event | −.12 (.03) | −3.55 | < .001 |
| Step 2: Interpreting Event as Problematic | .40 (.07) | 6.19 | < .001 |
| Step 3: Taking Personal Responsibility | .41 (.04) | 10.04 | < .001 |
| Step 4: Knowing What to Do | .02 (.03) | 0.07 | .948 |
| Barrier 5A: Audience Inhibition | .06 (.04) | 1.53 | .127 |
| Barrier 5B: Costs Exceed Rewards | −.05 (.01) | −3.79 | < .001 |
| Barrier 3: Diffusion of Responsibility | −.12 (.06) | −2.07 | .004 |

Note. β = standardized regression coefficient; SE = standard error.

Our work suggests that the BIM may be applicable to cyber aggression. This may reflect cross-context continuity, or the tendency to assume similar roles and display similar behavior across online and offline contexts (Estévez et al., 2020). Cyber and face-to-face aggression share similarities, as both represent intentional, hostile behaviors that often reflect power imbalances. Still, the differences we found compared with prior tests of the BIM in face-to-face emergencies (including bullying) may be explained by distinctions between online and face-to-face contexts.

The Relationship Between the Steps of the BIM and Acting

Out of all steps, we found that interpreting the event as problematic (Step 2) and taking personal responsibility (Step 3) were positively linked with greater intention to act in a prosocial manner (Step 5). This agrees with previous work showing that bystanders intervene in cases they deem more severe (Koehler & Weber, 2018) or when they feel personally responsible (DiFranzo et al., 2018). The lack of a relationship between Steps 4 and 5 could reflect that, in cyber aggression, taking personal responsibility is a more important step and a bigger hurdle than knowing what to do (Step 4). Our participants were emerging adults, whose technical savviness and familiarity with the cultural norms of social media may make Step 4 less relevant. However, the lack of a relationship between Steps 4 and 5 could also be due to our measures. The Step 4 items assessed generic knowledge about how to carry out an unspecified behavior, while the Step 5 items assessed intentions to engage in specific behaviors. Possibly, some participants were confident, when responding to the Step 4 items, that they would do nothing (or would engage in counter-intervention), which would result in no association between this confidence and their endorsement of prosocial action in Step 5. Nonetheless, the strong negative relationship between lack of competence (Barrier 4) and Step 4 could indicate that participants were envisioning an action plan other than doing nothing.

In the path analysis results, we found a negative relationship between noticing the event (Step 1) and taking action (Step 5). Participants who readily noticed the cyber aggression may have assumed that others would notice it just as easily, leading to diffusion of responsibility. Further, noting that no one else had intervened may have led them to conclude that no action was warranted. Because an online post has a seemingly infinite number of observers, pluralistic ignorance could be more pronounced for cyber bystanders. Finally, some participants who view Twitter as an uncivil platform may have been quick to spot the aggressive comment, as it confirmed their schemas of the platform, while also being less inclined to do anything about it.

We note the lack of a relationship between Step 3 and Step 4 in our results. Prior tests of the BIM using path analysis have operated under the assumption that the steps are completed in sequential order and that failing to engage with a step of the model derails the bystander and results in non-intervention. To be comparable with other work testing the model in face-to-face contexts, we followed this sequence in the current study. However, the construction of the path model implies that people who take personal responsibility to help (Step 3) are then more likely to know what to do (Step 4), which may not in fact be the case, at least for cyber aggression. This contrasts with the theoretical links between other model steps, whereby succeeding in one step makes it more likely that someone will succeed in the next. An alternative that could be explored in future work is that taking personal responsibility and knowing what to do occur simultaneously, and that passing both (perhaps tested through a statistical interaction) is needed for action to follow.
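One way to examine the interaction alternative is a moderated regression: Step 5 regressed on mean-centered Step 3, Step 4, and their product. A positive product coefficient would indicate that knowing what to do matters more when personal responsibility is high. The minimal NumPy sketch below does not reproduce an analysis from this study, and the function name and simulated data are hypothetical.

```python
import numpy as np


def interaction_model(step3, step4, step5):
    """OLS of Step 5 on mean-centered Step 3, Step 4, and their product.

    Returns (intercept, b_step3, b_step4, b_interaction).
    """
    s3 = np.asarray(step3, dtype=float)
    s4 = np.asarray(step4, dtype=float)
    s3 = s3 - s3.mean()  # centering eases interpretation of the main effects
    s4 = s4 - s4.mean()
    X = np.column_stack([np.ones_like(s3), s3, s4, s3 * s4])
    coef, *_ = np.linalg.lstsq(X, np.asarray(step5, dtype=float), rcond=None)
    return tuple(float(c) for c in coef)


# Hypothetical simulated ratings on 1-5 scales.
rng = np.random.default_rng(7)
resp = rng.uniform(1, 5, 400)
know = rng.uniform(1, 5, 400)
action = 2 + 0.4 * resp + 0.1 * know + 0.15 * (resp - 3) * (know - 3) + rng.normal(0, 0.5, 400)
print(interaction_model(resp, know, action))
```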

Interestingly, the two most popular courses of action for bystanders in our sample were non-intervention and private direct intervention. Directly reaching out to the target in private is less likely to be an option for face-to-face emergencies but could be a way for cyber bystanders to sidestep potential negative consequences or audience inhibition. Our results imply that research on bystander intervention in cyber aggression could expand the conceptualization of intervention to better account for the unique characteristics of social media interactions. Future work should explore the specific barriers and facilitators for the actions that cyber bystanders can take and evaluate the actions that have the most positive impact on cyber aggression targets.

The Step of the BIM Most Affected by Barriers

Interpreting the event as problematic (Step 2) was the step most affected by barriers: ambiguity (Barrier 2A), assuming a close relationship between the aggressor and the target (Barrier 2B), pluralistic ignorance (Barrier 2C), distraction (Barrier 1), diffusion of responsibility (Barrier 3), audience inhibition (Barrier 5A), and perceiving the costs of intervening as exceeding the rewards (Barrier 5B) all negatively predicted it. Some participants may have taken their own distraction as evidence that the cyber aggression was not that severe. Individuals experiencing diffusion of responsibility may think that their own intervention is unnecessary, which could motivate them to interpret the situation as less severe. Bystanders who are hyper-aware of their online presence (i.e., audience inhibition) may likewise be motivated to interpret instances of cyber aggression as less severe, giving themselves reasons not to act. Similarly, those who want to avoid the negative consequences of acting (Barrier 5B) may downplay the seriousness of the situation. These results suggest that Step 2 is the leakiest part of the bystander intervention pipeline. Educational programs might therefore target this step and the barriers that affect it.

The Barrier of the BIM Affecting Most Steps

Perceiving the costs of intervening as exceeding the rewards (Barrier 5B) was negatively related to interpreting the event as problematic (Step 2), taking personal responsibility (Step 3), and taking prosocial action (Step 5). We highlight Barrier 5B because, although its links with the steps did not have the largest effect sizes among the barriers, it negatively predicted more steps than any other barrier. It also negatively predicted both Step 5 itself and the steps that were positively related to Step 5.

The unique characteristics of online interactions, such as their publicness and permanence, may heighten the potential negative consequences for bystanders who intervene. The perceived risk of consequences such as facing backlash or becoming a target of cyber aggression may lead bystanders to avoid taking personal responsibility. It may be challenging for bystanders to choose a prosocial action that helps the target while also protecting themselves from all negative consequences. Educational efforts might therefore give bystanders strategies for managing potential retaliation or backlash. Finally, education might emphasize collective responsibility and the value of fostering an online community in which bystanders feel both empowered and protected when they intervene.

That barriers in our study negatively predicted multiple steps, not only the step each is assigned to in the BIM, may reflect differences between cyber and face-to-face aggression. Online interactions are fast-paced, with hundreds of viewers taking part as contributors or bystanders. This complexity may loosen the tight, one-to-one links between barriers and steps that the BIM prescribes for face-to-face emergencies.

Individual Characteristics and the BIM

Neither internalizing symptoms nor prior cyberbullying experience was meaningfully related to participants' engagement with the BIM. Depression and social anxiety symptoms may affect bystander intervention only when symptoms are severe, and, as expected in a community sample, symptom levels were low overall. Barriers to intervention, especially to private intervention, may also differ from, or be lower than, those in an in-person emergency, making internalizing symptoms less relevant to cyber bystander intervention. Alternatively, our findings could be attributable to our use of self-report questions and vignettes. The impact of internalizing symptoms might instead emerge in what bystanders actually do during a real-life incident of cyber aggression, for instance in how long they wait before acting. In addition, the type of cyber aggressive content may have contributed to these results. Participants with cyberbullying experience, either as an aggressor or as a target, may have compared their own experiences to the content in our study, influencing their responses in idiosyncratic ways.
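The first explanation above, that internalizing symptoms may matter only at severe levels, also accounts for why a low-symptom community sample could show no association at all. A small simulation in Python illustrates this; the 0 to 30 symptom range, the cutoff of 20, the effect size, and the low-symptom subsample are all hypothetical choices, not values from the study.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation between two 1-D arrays."""
    return float(np.corrcoef(x, y)[0, 1])


rng = np.random.default_rng(1)
n = 5000
# Hypothetical symptom scores spread evenly over a 0-30 scale.
symptoms = rng.uniform(0, 30, size=n)
cutoff = 20.0  # hypothetical "severe" threshold
# Assumed threshold model: symptoms lower intervention only above the cutoff.
excess = np.clip(symptoms - cutoff, 0, None)
intervention = -0.4 * excess + rng.normal(size=n)

low = symptoms < 12  # a mostly low-symptom "community" subsample
r_full = pearson_r(symptoms, intervention)
r_low = pearson_r(symptoms[low], intervention[low])
print(f"full range r = {r_full:.2f}, low-symptom subsample r = {r_low:.2f}")
```

Within the low-symptom subsample, intervention scores are pure noise with respect to symptoms, so the correlation hovers near zero even though, by construction, a real effect exists at higher symptom levels.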

Strengths, Limitations, and Future Directions

Strengths of this study include its pre-registration and its use of pilot-tested cyber aggressive content based on a real-life incident. Participants also found the interaction realistic and something they could see happening on Twitter. Additionally, we recruited a large sample of emerging adults (aged 18–25), the age group most active online and thus most likely to encounter cyber aggression that warrants intervention.

This study has several limitations. Most of our measures were new, so little is known about their psychometric properties. We departed from the pre-registered plans for computing the final score of some measures (e.g., Barrier 3) because of inadequate internal consistency. The use of self-report measures and hypothetical vignettes may have introduced demand characteristics, and participants' answers may not reflect how they would respond to cyber aggression in real life. Our convenience sample consisted mostly of women who were predominantly Asian (57%) or White (32%) and who reported low levels of depression and social anxiety symptoms. The effect of internalizing symptoms on the BIM may be stronger in samples with more severe symptomatology. Many other individual factors that we did not test probably also affect bystander behavior. Further, both the target and the aggressor in our study were White women. Future work should explore how the identities of the bystander, the target, and the aggressor interact in promoting or deterring bystander intervention.
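The internal consistency limitation refers to checking whether a scale's items hang together well enough to be combined into one score. The text does not say which index was used; Cronbach's alpha is one common choice, and the Python sketch below (the function names are hypothetical) computes it along with "alpha if item deleted", the kind of diagnostic that can prompt a change in how a measure is scored.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) array of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_vars = items.var(axis=0, ddof=1)      # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)  # sample variance of the summed score
    return (k / (k - 1)) * (1 - item_vars.sum() / total_var)


def alpha_if_item_deleted(items):
    """Alpha recomputed with each item dropped in turn (needs at least three items)."""
    items = np.asarray(items, dtype=float)
    return [cronbach_alpha(np.delete(items, j, axis=1)) for j in range(items.shape[1])]


# Toy example: three perfectly consistent items, so alpha is 1 up to rounding.
print(cronbach_alpha([[1, 1, 1], [2, 2, 2], [3, 3, 3]]))
```

Alpha can even turn negative when items covary negatively, which is one sign that a set of items should not be summed as written.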

Conclusion

We found that, as in face-to-face emergencies, bystanders' noticing the event, interpreting it as a problem, and taking personal responsibility were all linked to acting. This underscores the importance of testing theoretical models in their entirety in online contexts, even when they are well established for face-to-face interactions (Kim, 2021). Our findings suggest that when cyber bystanders decide to act, they may leverage options unique to online interactions, such as privately reaching out to the target. Our study also highlights the leaky pipeline of helping in cyber aggression, with interpreting the event as problematic (Step 2) as its leakiest point.

Conflict of Interest

The authors have no conflicts of interest to declare.

Appendices

Appendix A

Step 1—Noticing the Event

Table A1. Factor Loadings for Items in Step 1—Noticing the Event.

| Items in the scale | Loadings (Factor 1) |
|---|---|
| While browsing the Twitter timeline of your friend Becky, did you see an interaction that could fall under the category of… | |
| 1. Online hate | 1.111 |
| 2. Cyberbullying | 0.943 |
| 3. Online harassment | 1.000 |
| 4. Online aggression | 1.082 |
| 5. Trolling | 1.052 |
| CFI | 1.000 |
| TLI | 0.999 |
| SRMR | 0.006 |

Barrier 1—Distraction

Table A2. Factor Loadings for Items in Barrier 1—Distraction.

| Items in the scale | Loadings (Factor 1) |
|---|---|
| 1. There is something else that is occupying my thoughts | 1.000 |
| 2. I feel distracted | 1.046 |
| 3. I have a lot of things on my mind | 0.786 |
| 4. I am multi-tasking | 1.270 |
| CFI | 0.973 |
| TLI | 0.920 |
| SRMR | 0.031 |

Step 2—Interpreting the Event as Problematic

Table A3. Factor Loadings for Items in Step 2—Interpreting the Event as Problematic.

| Items in the scale | Loadings (Factor 1) |
|---|---|
| 1. How would you rate the level of aggression of the above comment made on the Twitter timeline of your friend Becky? | 1.000 |
| 2. How serious would you say the situation is? | 0.868 |
| 3. How unexpected do you find the above comment made on the Twitter timeline of your friend Becky? | 0.691 |
| 4. If someone had left a comment like this on my social media page, I would appreciate it if someone spoke up for me and defended me. | 0.860 |
| CFI | 1.000 |
| TLI | 1.004 |
| SRMR | 0.002 |
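Table A3 reports a TLI of 1.004 next to a CFI of 1.000. Under the standard definitions this is expected rather than an error: CFI is truncated at 1 while TLI is not, so a model whose chi-square falls below its degrees of freedom yields CFI = 1 and TLI slightly above 1. The Python sketch below implements those textbook formulas, assuming the indices were computed in the usual way from the model and baseline (independence-model) chi-square statistics; the chi-square values in the example are hypothetical because the tables do not report them.

```python
def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative Fit Index; the max() terms bound it to the range [0, 1]."""
    num = max(chi2_model - df_model, 0.0)
    den = max(chi2_model - df_model, chi2_base - df_base, 0.0)
    return 1.0 if den == 0 else 1.0 - num / den


def tli(chi2_model, df_model, chi2_base, df_base):
    """Tucker-Lewis Index; not truncated, so it can exceed 1."""
    if df_model == 0:
        raise ValueError("TLI is undefined for a just-identified model (df = 0)")
    ratio_base = chi2_base / df_base
    ratio_model = chi2_model / df_model
    return (ratio_base - ratio_model) / (ratio_base - 1.0)


# Hypothetical values: model chi-square below its df, strong baseline misfit.
print(round(cfi(1.0, 2, 300.0, 6), 3), round(tli(1.0, 2, 300.0, 6), 3))
```

Relatedly, assuming no correlated residuals or added constraints, a one-factor model with only three indicators (as in Table A4) is just-identified, with zero degrees of freedom, so it reproduces the observed covariances exactly and its fit indices say little about misfit.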

Step 3—Taking Personal Responsibility

Table A4. Factor Loadings for Items in Step 3—Taking Personal Responsibility.

| Items in the scale | Loadings (Factor 1) |
|---|---|
| 1. How important do you think it is that you would personally take action if you encountered a comment like this one made on the profile of your friend Becky, on social media? | 1.000 |
| 2. How responsible would you personally feel to take action if you encountered a comment like this one made on the profile of your friend Becky, on social media? | 0.964 |
| 3. How motivated would you personally feel to take action if you encountered a comment like this one made on the profile of your friend Becky, on social media? | 1.050 |
| CFI | 1.000 |
| TLI | 1.000 |
| SRMR | 0.000 |

Barrier 3—Diffusion of Responsibility

Table A5. Factor Loadings for Items in Barrier 3—Diffusion of Responsibility.

|

Items in the scale |

Loadings |

|

|

Factor 1 |

|