The influence of communicating agent on users’ willingness to interact: A moderated mediation model

Vol. 18, No. 2 (2024)

Empowered by AI, chatbots are increasingly being integrated to interact with users in one-on-one communication. However, academic scrutiny on the impact of chatbots on online interaction is lacking. This study aims to fill the gap by applying self-presentation theory (presenting the desired self-impression to others) to explore how the communicating agent (chatbot vs. human agent) in interactive marketing influences users’ interaction willingness, as well as the moderating roles of users’ public self-consciousness (sense of apprehension over self-presentation concern) and sensitive information disclosure (private information linked to an individual). The results of three experimental studies indicate that chatbots can improve users’ willingness to interact by mitigating the self-presentation concern. Further, users’ public self-consciousness and sensitive information disclosure moderated the impact of chatbots in online interactions. These effects were particularly impactful for users with higher public self-consciousness and in situations with sensitive information disclosure. The findings provide theoretical and practical implications for human-chatbot interaction, chatbot strategy, and the application of chatbots in online communication.

chatbot; self-presentation concern; public self-consciousness; sensitive information disclosure; human agents

Qi Zhou

School of Journalism and Information Communication, Huazhong University of Science and Technology, Wuhan, China

Qi Zhou is a postdoctoral researcher in the School of Journalism and Information Communication at the Huazhong University of Science and Technology. She received her doctorate from the Huazhong University of Science and Technology. Her research interests focus on artificial intelligence, brand anthropomorphism, and social media influencers. Her work has been published in Computers in Human Behavior, Journal of Retailing and Consumer Services, Environmental Communication, and Current Psychology.

Bin Li

School of Journalism and Communication, Tsinghua University, Beijing, China

Bin Li is a PhD candidate in the School of Journalism and Communication at Tsinghua University. His research interests focus on influencer marketing and AI communication. He has conducted both academic and industrial research. His research interests also include advertising avoidance, social media influencers, and intelligent communication. His work has been published in Computers in Human Behavior, Journal of Retailing and Consumer Services, Environmental Communication, and Chinese Journal of Communication.

Appel, M., Izydorczyk, D., Weber, S., Mara, M., & Lischetzke, T. (2020). The uncanny of mind in a machine: Humanoid robots as tools, agents, and experiencers. Computers in Human Behavior, 102, 274–286. https://doi.org/10.1016/j.chb.2019.07.031

Bansal, G., Zahedi, F. M., & Gefen, D. (2016). Do context and personality matter? Trust and privacy concerns in disclosing private information online. Information & Management, 53(1), 1–21. https://doi.org/10.1016/j.im.2015.08.001

Barasch, A., Zauberman, G., & Diehl, K. (2018). How the intention to share can undermine enjoyment: Photo-taking goals and evaluation of experiences. Journal of Consumer Research, 44(6), 1220–1237. https://doi.org/10.1093/jcr/ucx112

Baumeister, R. F. (1982). Self‐esteem, self‐presentation, and future interaction: A dilemma of reputation. Journal of Personality, 50(1), 29–45. https://doi.org/10.1111/j.1467-6494.1982.tb00743.x

Bazarova, N. N., Taft, J. G., Choi, Y. H., & Cosley, D. (2013). Managing impressions and relationships on Facebook: Self-presentational and relational concerns revealed through the analysis of language style. Journal of Language and Social Psychology, 32(2), 121–141. https://doi.org/10.1177/0261927X12456384

Brandtzaeg, P. B., Skjuve, M., & Følstad, A. (2022). My AI friend: How users of a social chatbot understand their human–AI friendship. Human Communication Research, 48(3), 404–429. https://doi.org/10.1093/hcr/hqac008

Chae, J. (2017). Virtual makeover: Selfie-taking and social media use increase selfie-editing frequency through social comparison. Computers in Human Behavior, 66, 370–376. https://doi.org/10.1016/j.chb.2016.10.007

Chang, Y., Lee, S., Wong, S. F., & Jeong, S.-p. (2022). AI-powered learning application use and gratification: An integrative model. Information Technology & People, 35(7), 2115–2139. https://doi.org/10.1108/ITP-09-2020-0632

Cheek, J. M., & Briggs, S. R. (1982). Self-consciousness and aspects of identity. Journal of Research in Personality, 16(4), 401–408. https://doi.org/10.1016/0092-6566(82)90001-0

Chen, Y.-N. K. (2010). Examining the presentation of self in popular blogs: A cultural perspective. Chinese Journal of Communication, 3(1), 28–41. https://doi.org/10.1080/17544750903528773

Chi, X., Han, H., & Kim, S. (2022). Protecting yourself and others: Festival tourists’ pro-social intentions for wearing a mask, maintaining social distancing, and practicing sanitary/hygiene actions. Journal of Sustainable Tourism, 30(8), 1915–1936. https://doi.org/10.1080/09669582.2021.1966017

Choi, T. R., & Sung, Y. (2018). Instagram versus Snapchat: Self-expression and privacy concern on social media. Telematics and Informatics, 35(8), 2289–2298. https://doi.org/10.1016/j.tele.2018.09.009

Chu, S.-C., & Choi, S. M. (2010). Social capital and self-presentation on social networking sites: A comparative study of Chinese and American young generations. Chinese Journal of Communication, 3(4), 402–420. https://doi.org/10.1080/17544750.2010.516575

Chung, M., Ko, E., Joung, H., & Kim, S. J. (2020). Chatbot e-service and customer satisfaction regarding luxury brands. Journal of Business Research, 117, 587–595. https://doi.org/10.1016/j.jbusres.2018.10.004

Doherty, K., & Schlenker, B. R. (1991). Self‐consciousness and strategic self‐presentation. Journal of Personality, 59(1), 1–18. https://doi.org/10.1111/j.1467-6494.1991.tb00765.x

Duan, W., He, C., & Tang, X. (2020). Why do people browse and post on WeChat moments? Relationships among fear of missing out, strategic self-presentation, and online social anxiety. Cyberpsychology, Behavior, and Social Networking, 23(10), 708–714. https://doi.org/10.1089/cyber.2019.0654

Fethi, M. D., & Pasiouras, F. (2010). Assessing bank efficiency and performance with operational research and artificial intelligence techniques: A survey. European Journal of Operational Research, 204(2), 189–198. https://doi.org/10.1016/j.ejor.2009.08.003

Fiore, A. M., Lee, S.-E., Kunz, G., & Campbell, J. R. (2001). Relationships between optimum stimulation level and willingness to use mass customisation options. Journal of Fashion Marketing and Management, 5(2), 99–107. https://doi.org/10.1108/EUM0000000007281

Fraune, M. R., Oisted, B. C., Sembrowski, C. E., Gates, K. A., Krupp, M. M., & Šabanović, S. (2020). Effects of robot-human versus robot-robot behavior and entitativity on anthropomorphism and willingness to interact. Computers in Human Behavior, 105, Article 106220. https://doi.org/10.1016/j.chb.2019.106220

Greenwald, A. G., Bellezza, F. S., & Banaji, M. R. (1988). Is self-esteem a central ingredient of the self-concept? Personality and Social Psychology Bulletin, 14(1), 34–45. https://doi.org/10.1177/0146167288141004

Haslam, N., & Loughnan, S. (2014). Dehumanization and infrahumanization. Annual Review of Psychology, 65, 399–423. https://doi.org/10.1146/annurev-psych-010213-115045

Hayes, A. F. (2017). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. Guilford Publications.

Hill, J., Ford, W. R., & Farreras, I. G. (2015). Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Computers in Human Behavior, 49, 245–250. https://doi.org/10.1016/j.chb.2015.02.026

Ho, A., Hancock, J., & Miner, A. S. (2018). Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. Journal of Communication, 68(4), 712–733. https://doi.org/10.1093/joc/jqy026

Hoyt, C. L., Blascovich, J., & Swinth, K. R. (2003). Social inhibition in immersive virtual environments. Presence: Teleoperators and Virtual Environments, 12(2), 183–195. https://doi.org/10.1162/105474603321640932

Huang, M.-H., & Rust, R. T. (2021). A strategic framework for artificial intelligence in marketing. Journal of the Academy of Marketing Science, 49(1), 30–50. https://doi.org/10.1007/s11747-020-00749-9

Kang, S.-H., & Gratch, J. (2010). Virtual humans elicit socially anxious interactants’ verbal self‐disclosure. Computer Animation and Virtual Worlds, 21(3–4), 473–482. https://doi.org/10.1002/cav.345

Kim, T. W., & Duhachek, A. (2020). Artificial Intelligence and persuasion: A construal-level account. Psychological Science, 31(4), 363–380. https://doi.org/10.1177/0956797620904985

Konya-Baumbach, E., Biller, M., & von Janda, S. (2023). Someone out there? A study on the social presence of anthropomorphized chatbots. Computers in Human Behavior, 139, Article 107513. https://doi.org/10.1016/j.chb.2022.107513

Leary, M. R., & Kowalski, R. M. (1990). Impression management: A literature review and two-component model. Psychological Bulletin, 107(1), 34–47. https://doi.org/10.1037/0033-2909.107.1.34

Liu, B., & Sundar, S. S. (2018). Should machines express sympathy and empathy? Experiments with a health advice chatbot. Cyberpsychology, Behavior, and Social Networking, 21(10), 625–636. https://doi.org/10.1089/cyber.2018.0110

Ljepava, N., Orr, R. R., Locke, S., & Ross, C. (2013). Personality and social characteristics of Facebook non-users and frequent users. Computers in Human Behavior, 29(4), 1602–1607. https://doi.org/10.1016/j.chb.2013.01.026

Longoni, C., Bonezzi, A., & Morewedge, C. K. (2019). Resistance to medical artificial intelligence. Journal of Consumer Research, 46(4), 629–650. https://doi.org/10.1093/jcr/ucz013

Longoni, C., & Cian, L. (2020). Artificial intelligence in utilitarian vs. hedonic contexts: The “word-of-machine” effect. Journal of Marketing, 86(1), 91–108. https://doi.org/10.1177/0022242920957347

Lucas, G. M., Gratch, J., King, A., & Morency, L.-P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94–100. https://doi.org/10.1016/j.chb.2014.04.043

Luo, X., Tong, S., Fang, Z., & Qu, Z. (2019). Frontiers: Machines vs. humans: The impact of artificial intelligence chatbot disclosure on customer purchases. Marketing Science, 38(6), 937–947. https://doi.org/10.1287/mksc.2019.1192

Lutz, C., Schöttler, M., & Hoffmann, C. P. (2019). The privacy implications of social robots: Scoping review and expert interviews. Mobile Media & Communication, 7(3), 412–434. https://doi.org/10.1177/2050157919843961

Manyika, J., Chui, M., Miremadi, M., & Bughin, J. (2017). A future that works: AI, automation, employment, and productivity. McKinsey & Company. https://www.mckinsey.com/~/media/mckinsey/featured%20insights/Digital%20Disruption/Harnessing%20automation%20for%20a%20future%20that%20works/MGI-A-future-that-works-Executive-summary.ashx

McLean, G., Osei-Frimpong, K., & Barhorst, J. (2021). Alexa, do voice assistants influence consumer brand engagement?–Examining the role of AI powered voice assistants in influencing consumer brand engagement. Journal of Business Research, 124, 312–328. https://doi.org/10.1016/j.jbusres.2020.11.045

Mende, M., Scott, M. L., Van Doorn, J., Grewal, D., & Shanks, I. (2019). Service robots rising: How humanoid robots influence service experiences and elicit compensatory consumer responses. Journal of Marketing Research, 56(4), 535–556. https://doi.org/10.1177/0022243718822827

Mou, Y., & Xu, K. (2017). The media inequality: Comparing the initial human-human and human-AI social interactions. Computers in Human Behavior, 72, 432–440. https://doi.org/10.1016/j.chb.2017.02.067

Mozafari, N., Weiger, W. H., & Hammerschmidt, M. (2022). Trust me, I’m a bot – Repercussions of chatbot disclosure in different service frontline settings. Journal of Service Management, 33(2), 221–245. https://doi.org/10.1108/JOSM-10-2020-0380

Murtarelli, G., Gregory, A., & Romenti, S. (2021). A conversation-based perspective for shaping ethical human–machine interactions: The particular challenge of chatbots. Journal of Business Research, 129, 927–935. https://doi.org/10.1016/j.jbusres.2020.09.018

Nadarzynski, T., Miles, O., Cowie, A., & Ridge, D. (2019). Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digital Health, 5. https://doi.org/10.1177/2055207619871808

Paulhus, D. L., Bruce, M. N., & Trapnell, P. D. (1995). Effects of self-presentation strategies on personality profiles and their structure. Personality and Social Psychology Bulletin, 21(2), 100–108. https://doi.org/10.1177/0146167295212001

Pearce, J. L. (1998). Face, harmony, and social structure: An analysis of organizational behavior across cultures. Oxford University Press.

Pelau, C., Dabija, D.-C., & Ene, I. (2021). What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Computers in Human Behavior, 122, Article 106855. https://doi.org/10.1016/j.chb.2021.106855

Pounders, K., Kowalczyk, C. M., & Stowers, K. (2016). Insight into the motivation of selfie postings: Impression management and self-esteem. European Journal of Marketing, 50(9/10), 1879–1892. https://doi.org/10.1108/EJM-07-2015-0502

Reports, V. (2022, September 14). Chatbots market size to reach USD 3892.1 million by 2028 at a CAGR of 20%. PR Newswire. https://www.prnewswire.com/in/news-releases/chatbots-market-size-to-reach-usd-3892-1-million-by-2028-at-a-cagr-of-20-valuates-reports-841372826.html

Rodríguez Cardona, D., Janssen, A., Guhr, N., Breitner, M. H., & Milde, J. (2021). A matter of trust? Examination of chatbot usage in insurance business. In Proceedings of the 54th Hawaii International Conference on System Sciences (pp. 556–565). https://doi.org/10.24251/HICSS.2021.068

Rosenberg, J., & Egbert, N. (2011). Online impression management: Personality traits and concerns for secondary goals as predictors of self-presentation tactics on Facebook. Journal of Computer-Mediated Communication, 17(1), 1–18. https://doi.org/10.1111/j.1083-6101.2011.01560.x

Sands, S., Ferraro, C., Campbell, C., & Tsao, H.-Y. (2020). Managing the human–chatbot divide: How service scripts influence service experience. Journal of Service Management, 32(2), 246–264. https://doi.org/10.1108/JOSM-06-2019-0203

Schanke, S., Burtch, G., & Ray, G. (2021). Estimating the impact of “humanizing” customer service chatbots. Information Systems Research, 32(3), 736–751. https://doi.org/10.1287/ISRE.2021.1015

Schau, H. J., & Gilly, M. C. (2003). We are what we post? Self-presentation in personal web space. Journal of Consumer Research, 30(3), 385–404. https://doi.org/10.1086/378616

Scheier, M. F., & Carver, C. S. (1985). Optimism, coping, and health: Assessment and implications of generalized outcome expectancies. Health Psychology, 4(3), 219–247. https://doi.org/10.1037/0278-6133.4.3.219

Schlenker, B. R. (1980). Impression management. Brooks/Cole.

Schneider, D. J. (1969). Tactical self-presentation after success and failure. Journal of Personality and Social Psychology, 13(3), 262–268. https://doi.org/10.1037/h0028280

Schuetzler, R. M., Giboney, J. S., Grimes, G. M., & Nunamaker, J. F. (2018). The influence of conversational agent embodiment and conversational relevance on socially desirable responding. Decision Support Systems, 114, 94–102. https://doi.org/10.1016/j.dss.2018.08.011

Seitz, L., Bekmeier-Feuerhahn, S., & Gohil, K. (2022). Can we trust a chatbot like a physician? A qualitative study on understanding the emergence of trust toward diagnostic chatbots. International Journal of Human-Computer Studies, 165, Article 102848. https://doi.org/10.1016/j.ijhcs.2022.102848

Shim, M., Lee, M. J., & Park, S. H. (2008). Photograph use on social network sites among South Korean college students: The role of public and private self-consciousness. CyberPsychology & Behavior, 11(4), 489–493. https://doi.org/10.1089/cpb.2007.0104

Shim, M., Lee-Won, R. J., & Park, S. H. (2016). The self on the net: The joint effect of self-construal and public self-consciousness on positive self-presentation in online social networking among South Korean college students. Computers in Human Behavior, 63, 530–539. https://doi.org/10.1016/j.chb.2016.05.054

Sundar, A., Dinsmore, J. B., Paik, S.-H. W., & Kardes, F. R. (2017). Metaphorical communication, self-presentation, and consumer inference in service encounters. Journal of Business Research, 72, 136–146. https://doi.org/10.1016/j.jbusres.2016.08.029

Trippi, R. R., & Turban, E. (1992). Neural networks in finance and investing: Using artificial intelligence to improve real world performance. Probus.

Tsai, W.-H. S., Lun, D., Carcioppolo, N., & Chuan, C.-H. (2021). Human versus chatbot: Understanding the role of emotion in health marketing communication for vaccines. Psychology & Marketing, 38(12), 2377–2392. https://doi.org/10.1002/mar.21556

Twomey, C., & O’Reilly, G. (2017). Associations of self-presentation on Facebook with mental health and personality variables: A systematic review. Cyberpsychology, Behavior, and Social Networking, 20(10), 587–595. https://doi.org/10.1089/cyber.2017.0247

Tyler, J. M., Kearns, P. O., & McIntyre, M. M. (2016). Effects of self-monitoring on processing of self-presentation information. Social Psychology, 47(3). https://doi.org/10.1027/1864-9335/a000265

Valkenburg, P. M., Schouten, A. P., & Peter, J. (2005). Adolescents’ identity experiments on the Internet. New Media & Society, 7(3), 383–402. https://doi.org/10.1177/1461444805052282

Van den Broeck, E., Zarouali, B., & Poels, K. (2019). Chatbot advertising effectiveness: When does the message get through? Computers in Human Behavior, 98, 150–157. https://doi.org/10.1016/j.chb.2019.04.009

Vasalou, A., & Joinson, A. N. (2009). Me, myself and I: The role of interactional context on self-presentation through avatars. Computers in Human Behavior, 25(2), 510–520. https://doi.org/10.1016/j.chb.2008.11.007

Wei, Y., Lu, W., Cheng, Q., Jiang, T., & Liu, S. (2022). How humans obtain information from AI: Categorizing user messages in human-AI collaborative conversations. Information Processing & Management, 59(2), Article 102838. https://doi.org/10.1016/j.ipm.2021.102838

Yu, Y., & Huang, K. (2021). Friend or foe? Human journalists’ perspectives on artificial intelligence in Chinese media outlets. Chinese Journal of Communication, 14(4), 409–429. https://doi.org/10.1080/17544750.2021.1915832

Zarouali, B., Van den Broeck, E., Walrave, M., & Poels, K. (2018). Predicting consumer responses to a chatbot on Facebook. Cyberpsychology, Behavior, and Social Networking, 21(8), 491–497. https://doi.org/10.1089/cyber.2017.0518

Zhang, M., Liu, Y., Wang, Y., & Zhao, L. (2022). How to retain customers: Understanding the role of trust in live streaming commerce with a socio-technical perspective. Computers in Human Behavior, 127, Article 107052. https://doi.org/10.1016/j.chb.2021.107052

Zhao, X., Chen, L., Jin, Y., & Zhang, X. (2023). Comparing button-based chatbots with webpages for presenting fact-checking results: A case study of health information. Information Processing & Management, 60(2), Article 103203. https://doi.org/10.1016/j.ipm.2022.103203

Zhu, D. H., & Deng, Z. Z. (2021). Effect of social anxiety on the adoption of robotic training partner. Cyberpsychology, Behavior, and Social Networking, 24(5), 343–348. https://doi.org/10.1089/cyber.2020.0179

Authors’ Contribution

Qi Zhou: conceptualization; resources; funding acquisition; research design; writing—original draft; writing—review & editing. Bin Li: conceptualization; research design; data curation; formal analysis; writing—original draft.

Editorial Record

First submission received:

April 8, 2023

Revisions received:

October 8, 2023

December 25, 2023

Accepted for publication:

February 1, 2024

Editor in charge:

David Smahel

Introduction

As one of the most widely adopted applications of artificial intelligence (AI), chatbots have been applied in various domains, including online advertising and consumer services, to rapidly respond to users’ needs and deliver personalized, cost-effective, and efficient services (Huang & Rust, 2021; Lutz et al., 2019; Manyika et al., 2017). According to a report from PR Newswire, the online chatbot market is projected to grow from $1,079.9 million in 2021 to $3,892.1 million by 2028 (Reports, 2022). Chatbots are a prominent trend in social development, and they have been applied across diverse industries for collecting user data and facilitating two-way, private, and personalized conversations (Yu & Huang, 2021). For example, Replika, an AI-driven chatbot customized to suit the relationship needs of users, requires users to disclose their interests and preferences to create their personalized chatbot experiences (Brandtzaeg et al., 2022). Likewise, LAIX, an AI-powered education technology company in China (Chang et al., 2022), leverages chatbot interactions to tailor English education classes for individual users and offer personalized guidance and recommendations based on users’ English proficiency and learning goals.

Chatbots are widely applied to alleviate concerns that users experience in interpersonal interactions, such as those arising during health-information gathering, and thereby reduce users’ social concerns (Konya-Baumbach et al., 2023; Seitz et al., 2022). In China especially, Mianzi, defined as the acknowledgment of an individual’s social status and position by others, carries considerable importance. Mianzi makes people carefully manage their self-presentation in interpersonal interactions, especially those concerning sensitive information disclosure, such as health, finance, and performance scores (Pearce, 1998). Accordingly, chatbots have been recognized as effective communicating agents for alleviating concerns in interpersonal interactions (Tsai et al., 2021; Zhu & Deng, 2021).

However, the existing research on chatbots’ interaction effectiveness has yielded inconsistent findings. Chatbots have been associated with reduced message exchange, lower purchase rates, and diminished user satisfaction due to perceived shortcomings in professionalism and empathy (Hill et al., 2015; Luo et al., 2019; Sands et al., 2020; Tsai et al., 2021). Conversely, chatbots may be an ideal tool for encouraging user engagement in interactions characterized by evaluation apprehension, eliciting emotional disclosure comparable to that in conversations with humans (Ho et al., 2018; Schuetzler et al., 2018). That is, chatbots’ effectiveness in enhancing social interaction may vary across situations. These mixed findings underscore the significance of social interaction in the context of human-chatbot interactions and warrant further empirical investigation. Yet our understanding of the underlying mechanisms, particularly users’ perceptions of the interaction process from a social interaction perspective, and of possible boundary conditions remains insufficient.

To bridge this gap, this research aims to unravel how chatbots impact users’ interaction willingness (RQ1) and when chatbots are more effective than human agents in enhancing interaction willingness (RQ2) from the social interaction perspective. While chatbots are becoming increasingly human-like (i.e., in anthropomorphic appearance and voice), they still lack certain social interaction traits compared to human agents. This lack can significantly reduce users’ need for impression management and decrease their resistance to self-disclosure (Lucas et al., 2014). Specifically, by adopting the previously unexamined perspective of self-presentation theory, we explore the potential for chatbots to positively impact interaction willingness. We find that chatbots enhance users’ interaction willingness, an effect driven primarily by reduced self-presentation concern: the decrease in users’ self-presentation concern, in turn, amplifies their willingness to engage, communicate, and interact. Based on self-presentation theory, we introduce users’ public self-consciousness (that is, users’ sense of apprehension over self-presentation concern) and sensitive information disclosure as moderators that can impact users’ interaction willingness.

This study seeks to advance the burgeoning field of chatbot research by investigating the potential influence of chatbots compared to human agents on interaction willingness, and it offers both theoretical and managerial contributions. On a theoretical level, this study delves into the effectiveness of chatbots on users’ interactive experience from the perspective of social interaction, which enriches the growing body of knowledge on human-chatbot interactions and contributes to the field of HCI marketing. On a practical level, this study offers valuable insights to marketers: it enhances their understanding of users’ reactions to chatbots and provides guidelines for online communication on whether and how to incorporate chatbots into their strategies based on their marketing goals and target audience.

In the following sections, we review the relevant research on chatbots and human-chatbot interaction. Then, we develop the hypotheses and report the results of three experimental studies. Finally, we discuss the theoretical contributions and managerial implications and identify several promising areas of future research.

Literature Review and Hypotheses

Literature Review on Chatbots

Chatbots, AI-powered automated computer programs, engage users in instant, personalized, and dialogic interactions that simulate human-like conversation (Wei et al., 2022). The existing literature on chatbots can be broadly categorized into two main domains: the first pertains to the cognitive assessment of chatbots in task-oriented contexts, while the second centers on chatbots’ social interactions.

First, scholars have distinguished the effectiveness of chatbots by application context. In situations where chatbots are deployed for tasks that require rational analysis, algorithmic logic, and efficiency, users tend to exhibit a positive attitude toward chatbots. This was observed in the case of financial advisors, stock analysis robots, and computational analysis robots (Fethi & Pasiouras, 2010; Trippi & Turban, 1992). Conversely, when chatbots are employed in tasks that emphasize users’ uniqueness, a negative attitude often prevails. This was seen in the context of medical diagnostic robots and sales-oriented chatbots (Longoni et al., 2019; Luo et al., 2019). Overall, these studies have provided conflicting conclusions about chatbots. These disparities may be attributed to the human-like characteristics of chatbots. Given current technological limitations, chatbots are often perceived as lacking certain human-like characteristics and as depersonalized and devoid of emotion, which results in users’ perceptions of uniqueness neglect (Longoni & Cian, 2020), a lack of empathy (Luo et al., 2019), self-identity discomfort (Mende et al., 2019), and reduced self-disclosure (Hill et al., 2015; Mou & Xu, 2017).

Recent research on chatbots has further investigated the antecedent factors that influence chatbots’ impact on users’ attitudes across varied scenarios (e.g., Chung et al., 2020; McLean et al., 2021; Van den Broeck et al., 2019; Zarouali et al., 2018). Two primary antecedent factors, namely technology-related and task-related attributes, play pivotal roles in shaping users’ satisfaction with chatbots, subsequent intention to use chatbots, as well as brand attitude and brand engagement. In terms of technology-related attributes, in line with the technology acceptance model (TAM), the perceived usefulness and ease of use of chatbots can help improve users’ perceptions of helpfulness, pleasure, and arousal, which ultimately impact users’ brand attitude and further interaction with the brand (McLean et al., 2021; Schanke et al., 2021; Zarouali et al., 2018). In terms of task-related attributes, the types and quality of tasks that chatbots undertake, encompassing functions such as interaction, customization, and problem-solving, shape users’ experience with chatbots (Chung et al., 2020). These studies largely map out related constructs that account for chatbot usage from a chatbot perspective. Beyond the tasks they undertake, chatbots also play a vital role in interacting with users socially. Yet existing research has so far not explained the underlying mechanisms related to users’ perceptions in this interaction process from a social interaction perspective, nor the boundary conditions of these impacts.

To address these gaps, the present research examines the potential impact of chatbots and their underlying mechanisms. While the findings of previous research on chatbots have been inconsistent, most of that research focuses on task competence and neglects the role of social interaction. To advance the emerging chatbot research in interactive marketing beyond online communication, this study evaluates chatbots’ impact on users’ interaction willingness from a social interaction perspective and identifies the interpersonal communication mechanism and boundary context.

Self-Presentation Concern

In the realm of social interaction, self-presentation concern is a fundamental aspect of human social behavior that affects how individuals form impressions and build relationships, which is essential to understanding users’ interaction behavior. The social interaction of individuals with others shapes people’s views of themselves, which serves the function of presenting the desired self-impression to others (Rosenberg & Egbert, 2011). In the pursuit of self-presentation, individuals employ diverse strategies and skills to project a favorable image and avert any loss of Mianzi (Chen, 2010; Chu & Choi, 2010; Pounders et al., 2016; Valkenburg et al., 2005). Inherently, social interactions carry the potential for evaluation or judgment by others, which can significantly influence future outcomes (Leary & Kowalski, 1990). As a result, social interaction can often heighten users’ concern for self-presentation (Schlenker, 1980).

Research indicates that self-presentation concern fluctuates with the nature of the interaction target, including the target’s human-like characteristics (e.g., anthropomorphized appearances, social relationship, and social norms; Duan et al., 2020; Pelau et al., 2021). For instance, individuals tend to exhibit fewer self-presentation concerns in interactions with closer relationships (Ljepava et al., 2013). Similarly, individuals who display less self-presentation concern share more authentic information with close friends and relatives, whereas individuals tend to have heightened self-presentation concerns and opt for self-enhancing presentations on public social platforms (Vasalou & Joinson, 2009). In addition, the customer service setting (public versus private) can also impact consumers’ self-presentation concern through human-related cues (Sundar et al., 2017). Therefore, it is clear that the context and the presence of human-like characteristics during the interaction play a significant role in shaping users’ self-presentation concern. In the context of online interactions, chatbots, as a novel interaction target, possess fewer human-like characteristics than human agents. This lack of human-like characteristics in the interaction target should likewise act as a cue that shapes individuals’ interaction behavior. As evidenced by Zhu and Deng (2021), the limited human-like characteristics of robots can reduce individuals’ fear of negative evaluation and social anxiety in interaction. Accordingly, it is reasonable to expect that users’ self-presentation concerns will differ when interacting with chatbots and with human agents.

Chatbots, Self-Presentation Concern, and Interaction Willingness

Chatbots are another interaction target with fewer human-like characteristics. Chatbots are typically perceived as unfeeling, less empathetic machines that provide a programmed service without autonomous goals or intentions (Kim & Duhachek, 2020). Accordingly, chatbots are considered to have limited emotional capacity to respond to users in ways akin to human agents, particularly in situations involving sensitive topics. Users tend to evaluate chatbots more favorably in terms of system usability, report lower levels of impression management, exhibit reduced resistance to self-disclosure, and are more likely to disclose intimate information when interacting with chatbots than human agents (Kang & Gratch, 2010; Lucas et al., 2014).

Similarly, during social interaction, individuals inherently engage in impression management, with an emphasis on presenting their desired self-image. Users actively grapple with self-presentation concerns and adopt aggressive impression management strategies because they are driven by the fear of negative evaluation (Pounders et al., 2016). This fear is mitigated when communicating in an environment with fewer human cues, such as in a private service setting or with a robotic training partner (Sundar et al., 2017; Zhu & Deng, 2021). In the realm of online communication, the decrement of human-like characteristics would lower users’ levels of self-presentation concern. However, when users communicate with human agents, the human agents possess the emotional capacity to judge the behavior of others based on their values and social norms, which will increase users’ self-presentation concern. As a result, when interacting with human agents, users’ concern about self-presentation will increase significantly.

H1a: Chatbots lead to lower self-presentation concern among users than human agents do.

Self-presentation concern plays a pivotal role during interactions and exerts a significant influence on the overall experience (Baumeister, 1982). In this context, interaction willingness refers to users’ predisposition or readiness to engage in an interaction and is a critical variable in evaluating the communication experience (Fraune et al., 2020). Users with high self-presentation concerns tend to fear judgment or rejection, which, in turn, diminishes their willingness to communicate and disclose personal information (Schau & Gilly, 2003; Shim et al., 2008). For example, Choi and Sung (2018) discovered that users with high self-presentation concerns tend to exhibit reduced willingness to communicate or disclose personal information. As evidenced in prior research (Zhu & Deng, 2021), users’ self-presentation concerns tend to decrease when playing with a robotic training partner, which bolsters their subsequent adoption willingness. Similarly, in online communication settings, the reduction of self-presentation concerns from chatbots can also promote interaction willingness. Conversely, when interacting with human agents, users tend to experience heightened self-presentation concerns, which results in decreased interaction willingness.

H1b: Lowered self-presentation concern leads to an increase in users’ interaction willingness.

Through the combination of H1a and H1b, communicating agents can promote users’ interaction willingness through self-presentation concern. Hence, we hypothesized the following:

H2: The impact of the communicating agent on users’ interaction willingness is mediated by users’ self-presentation concern.

The Moderating Role of Public Self-Consciousness

As the existing body of research on the impact of chatbots has yielded conflicting findings, our understanding of the factors that could potentially shape the effectiveness of chatbots in social interactions remains incomplete. Accordingly, we delve into the personal characteristics and situational factors that may affect the influence of chatbots on users’ interaction willingness.

At the core of the chatbots’ impact on self-presentation concern is users’ apprehension over self-presentation. Based on self-presentation theory, users’ level of public self-consciousness is a crucial individual-difference predictor of self-presentation. Public self-consciousness reflects users’ tendency to think about self-impression that matters in public (Cheek & Briggs, 1982; Greenwald et al., 1988). Those with a higher level of public self-consciousness tend to be more preoccupied with their self-presentation (Barasch et al., 2018; Murtarelli et al., 2021; Shim et al., 2016). For instance, users with elevated public self-consciousness are more likely to post relationship photos that highlight positive social qualities (Shim et al., 2008), employ positive emotional vocabulary to update public personal dynamics (Bazarova et al., 2013), and pay more attention to their impression management on social platforms (Barasch et al., 2018; Shim et al., 2016). Accordingly, users with heightened public self-consciousness place a greater emphasis on self-presentation, with self-presentation concern exerting a more pronounced influence compared to users with lower public self-consciousness.

Consequently, we propose that users with high public self-consciousness are more concerned with their self-presentation. For these individuals, the presence of human agents amplifies their fears of social judgment from the human agents. Following this vein, chatbots will lead to a much lower self-presentation concern compared to human agents and foster higher interaction willingness among users. Comparatively, users with low public self-consciousness display less concern about their self-presentation, so the distinction between communicating agents will be less impactful on their self-presentation concerns and subsequent interaction willingness.

H3: Public self-consciousness will moderate the mediated relationship of communicating agents on users’ interaction willingness via users’ self-presentation concern; that is, the indirect effect is stronger for those who have high public self-consciousness than for those who have low public self-consciousness.

The Moderating Role of Sensitive Information Disclosure

In addition to personal characteristics, we examine a second moderator from situational factors, which is sensitive information disclosure. Sensitive information disclosure refers to situations that require users to share private information that is “linked or linkable to an individual, such as health, financial, educational, and employment” (Bansal et al., 2016). This stems from previous research that revealed sensitive topics within interactions can be a significant predictor of users’ self-presentation concerns (Paulhus et al., 1995). Specifically, users tend to experience heightened self-presentation concerns when they are asked to disclose sensitive information, which is largely driven by their apprehensions of how others might judge them (Schneider, 1969; Tyler et al., 2016).

Within the context of human-chatbot interaction, sensitive information disclosure can also impact users’ self-presentation. Existing research has demonstrated that communicating agents with more human-like characteristics would enhance users’ concern about information. For instance, communicating agents with more anthropomorphized features led users to disclose less truthful, potentially embarrassing information compared to agents with more limited anthropomorphized features (Schuetzler et al., 2018). This phenomenon has been observed in different scenarios, such as task performance, where users performed more poorly in the presence of humans compared to when performing alone or in the presence of computer agents (Hoyt et al., 2003). In the context of sensitive health topics, users tend to prefer robotic training partners over human ones (Nadarzynski et al., 2019). These findings suggest that the heightened human-like qualities of communicating agents may, at times, deter users from engaging fully in the interaction. Therefore, in situations with sensitive information disclosure that may trigger evaluation apprehension, chatbots—characterized by their limited human-like features—may enhance the communication experience and outcomes compared to human agents.

Therefore, we propose that when users interact with a communicating agent, the sensitivity of the information will also impact users’ self-presentation concern. Users believe that pre-programmed chatbots lack the ability for independent thoughts and adherence to social norms, which leads them to believe that chatbots will not judge them in the same way human agents might. Consequently, users may experience lower fears of evaluation and impression management, which makes them more comfortable with disclosing sensitive information to a chatbot (Lucas et al., 2014). The reduced evaluation apprehension can, in turn, bolster users’ willingness to engage in future interactions. In contrast, in situations involving non-sensitive information disclosure, users’ evaluation apprehension will be largely reduced because of the lack of personal information that can be judged; accordingly, context-specific factors (e.g., communicating efficiency, perceived empathy) matter more in the interaction. In this vein, the self-presentation concern can be largely reduced when communicating with human agents. Thus, we proposed the following hypothesis:

H4: Sensitive information disclosure will moderate the mediated relationship of communicating agents on users’ interaction willingness via users’ self-presentation concern; that is, the indirect effect is stronger in the sensitive information disclosure condition than in the non-sensitive information disclosure condition.
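The hypotheses above can be summarized in regression form. The following is a sketch in standard conditional-process notation, not the authors' own formulation: X denotes the communicating agent, M self-presentation concern, Y interaction willingness, and W the moderator (public self-consciousness in H3, sensitive information disclosure in H4). The coefficient symbols are labels introduced here for illustration.

```latex
\begin{align}
M &= i_M + a_1 X + a_2 W + a_3 XW + e_M \\
Y &= i_Y + c' X + b M + e_Y \\
\omega(W) &= (a_1 + a_3 W)\, b
\end{align}
```

Under this sketch, H1a concerns the path from X to M, H1b concerns b, and H2 concerns the product of those two paths when W is left out of the model. H3 and H4 both state that the conditional indirect effect ω(W) depends on W, which amounts to the product a_3 b differing from zero.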

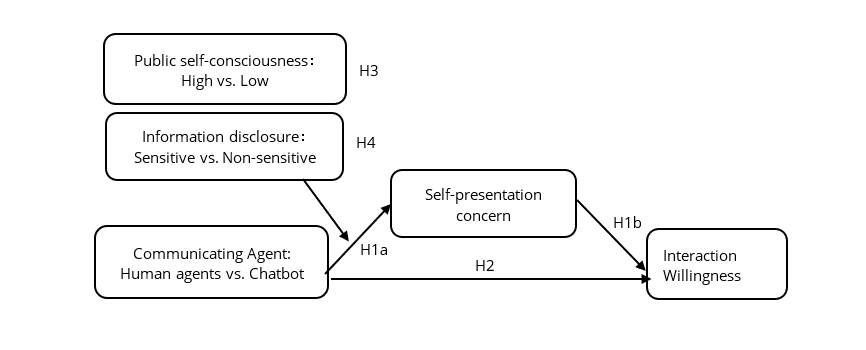

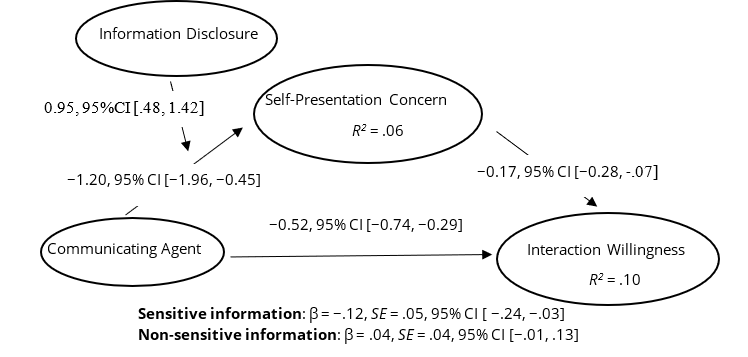

Figure 1. Theoretical Framework.

Study 1

The purpose of Study 1 was to test whether users exhibit a higher propensity to engage with communication provided by chatbots than by human agents, and whether this occurs because users have less self-presentation concern when interacting with chatbots (H1a, H1b, and H2). Study 1 simulated the online communication process to investigate users’ interaction willingness toward chatbots versus human agents in a utilitarian context (i.e., education).

Methods

Research Design

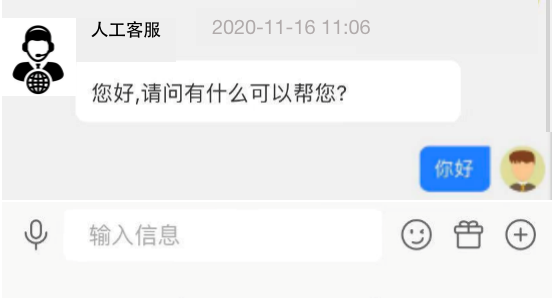

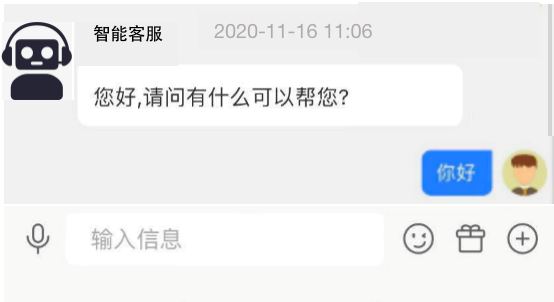

A between-subject experiment (communicating agent: chatbot vs. human agent) was conducted. A total of 109 participants in China were recruited in January 2022, in exchange for a monetary reward, through wjx.cn, a major Chinese online survey platform that provides functions equivalent to other crowdsourcing platforms, such as MTurk (Chi et al., 2021; Zhang et al., 2022). First, the participants were shown the same background material for the scenario: Imagine you are searching for English classes online to improve your English. Next, participants were randomly assigned to one of the two communicating agent conditions. Following the methodology of Sands and colleagues (2020), we manipulated the communicating agent. Participants in the chatbot condition were shown a chatbot image and the text: Hello, I am your chatbot. Tell me about your situation and complete the assessment, I will customize courses for you. Participants in the human-agent condition were shown a human-agent image and the text: Hello, I am your agent. Tell me about your situation and complete the assessment, I will customize courses for you. After viewing the information, participants had to indicate whether they talked to a chatbot or a human agent as an attention check question (Mozafari et al., 2022), and rated their self-presentation concern (Barasch et al., 2018; 3 items, α = .83, e.g., How worried were you when talking with chatbot? and To what extent were you attempting to control your impression when talking with chatbot?; 1 = Strongly Disagree, 7 = Strongly Agree) and their interaction willingness (Fiore et al., 2001; 4 items, α = .89, e.g., I am willing to spend more time interacting in brand communication provided by chatbot/human agent; 1 = Strongly Disagree, 7 = Strongly Agree). Gender and age were also documented. The original English measurement scale was translated into Chinese and back into English by professional translators to ensure measurement equivalence.
Twelve participants whose answers did not match their assigned conditions were removed from the analysis, and a total sample of 97 participants (age range = 18–60, Mage = 30.35, 56.7% female) was included for data analysis.
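The scale reliabilities reported above (α = .83 and α = .89) are Cronbach's alpha coefficients. As a minimal sketch of how such a coefficient is computed from item-level responses, the following uses only the Python standard library. The function name `cronbach_alpha` and the toy responses are hypothetical illustrations, not the study's data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, one per scale item, each holding the scores
    of the same n respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of respondents")
    # Total scale score per respondent.
    totals = [sum(col[i] for col in items) for i in range(n)]
    # alpha = k/(k-1) * (1 - sum of item variances / variance of totals)
    item_var_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Hypothetical 7-point responses from five respondents on a 3-item scale.
toy_items = [
    [5, 4, 6, 3, 5],
    [4, 4, 6, 2, 5],
    [5, 3, 7, 3, 4],
]
alpha = cronbach_alpha(toy_items)
```

Values closer to 1 indicate that the items vary together, which is why alpha is commonly read as an index of internal consistency.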

Results

Manipulation Check

The manipulation check for communicating agent was significant at p < .001, with participants in the chatbot group perceiving they were talking to chatbot more than those in the human-agent group (Mchatbot = 3.26, SD = 1.05; Mhuman-agent = 5.02, SD = 1.12; t = 8.00).

Interaction Willingness

As this study predicted, the communicating agent affected users’ interaction willingness. A t-test showed that users’ interaction willingness was higher in the chatbot group than in the human-agent group (Mchatbot = 4.68, SD = 1.04; Mhuman-agent = 3.94, SD = 1.23; t = 3.17, p = .002).

Self-Presentation Concern as the Mediator

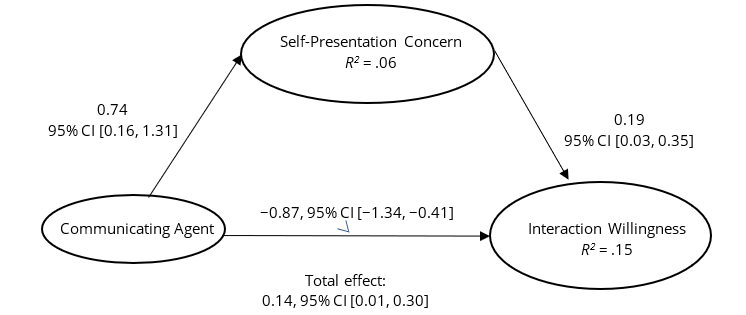

A t-test showed that users’ self-presentation concern was lower in the chatbot group than in the human-agent group (Mchatbot = 3.67, SD = 1.41; Mhuman-agent = 4.40, SD = 1.44; t = −2.55, p = .013). To examine whether self-presentation concern would mediate the relationship between communicating agent and users’ interaction willingness, the mediation analysis procedure (model 4) recommended by Hayes (2017) was performed. The mediation effect was tested using 5,000 bootstrap samples, with communicating agent as the independent variable, self-presentation concern as the mediator, and interaction willingness as the dependent variable. As predicted, the 95% confidence interval (CI) did not include zero, β = .14, SE = .08, 95% CI [.01, .30], which demonstrated that self-presentation concern mediates the relationship between communicating agent and users’ interaction willingness (see Fig. 2).

Figure 2. The Mediating Effect Test.
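The mediation test described above estimates an indirect effect and a bootstrap confidence interval for it. The sketch below illustrates the general idea with a percentile bootstrap in plain Python. It is not the PROCESS macro, whose implementation details may differ, and the data are synthetic, generated under assumed effect sizes. The names `paths` and `bootstrap_indirect` are hypothetical, and X = 1 is assumed to code the chatbot condition.

```python
import random


def paths(x, m, y):
    """Return (a, b): a = slope of M on X; b = slope of Y on M controlling for X."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx
    # Solve the two-predictor normal equations for the coefficient of M.
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a, b


def bootstrap_indirect(x, m, y, n_boot=5000, seed=0):
    """Point estimate of a*b and a 95% percentile bootstrap interval."""
    rng = random.Random(seed)
    n = len(x)
    a, b = paths(x, m, y)
    draws = []
    while len(draws) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        if len(set(xs)) < 2:  # skip resamples containing only one condition
            continue
        a_s, b_s = paths(xs, [m[i] for i in idx], [y[i] for i in idx])
        draws.append(a_s * b_s)
    draws.sort()
    lo = draws[int(0.025 * n_boot)]
    hi = draws[int(0.975 * n_boot) - 1]
    return a * b, lo, hi


# Synthetic data (assumption): the chatbot lowers concern, concern lowers willingness.
gen = random.Random(42)
x = [i % 2 for i in range(300)]
m = [4.5 - 1.0 * xi + gen.gauss(0, 1) for xi in x]
y = [6.0 - 0.5 * mi + gen.gauss(0, 1) for mi in m]
point, lo, hi = bootstrap_indirect(x, m, y, n_boot=2000, seed=1)
```

Mediation is inferred when the interval from `lo` to `hi` excludes zero, which mirrors the decision rule used in the text.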

Discussion

The results of Study 1 indicate that users exhibit a greater inclination to interact when confronted with chatbots in comparison to human agents. Furthermore, these results support Hypotheses 1a, 1b, and 2, thus confirming the mediating role of self-presentation concern. In Study 2, we use a further experiment to validate the main effect that interaction willingness is higher when communicating with a chatbot, to test the moderating role of public self-consciousness, and to replicate the findings with different stimuli.

Study 2

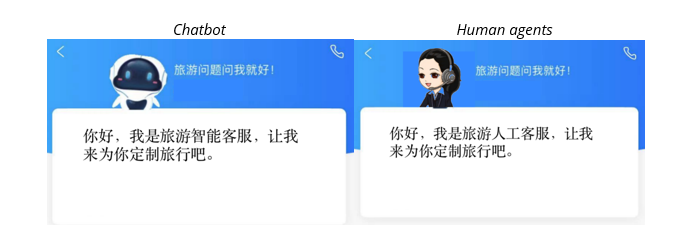

The purpose of Study 2 was to test whether users’ public self-consciousness will impact their interaction willingness through self-presentation concern (H3). Additionally, to expand the generalizability of the results, Study 2 further conducted the online experiment in a hedonic situation (i.e., travel).

Methods

Research Design

A between-subject experiment (communicating agent: chatbot vs. human agent) was conducted. The same platform from Study 1, wjx.cn, was used to recruit 186 participants who earned a monetary reward in February 2022. First, participants were asked to fill in the public self-consciousness scale (Scheier & Carver, 1985; 13 items, α = .90, e.g., I’m concerned about my style of doing things; 1 = Strongly Disagree, 7 = Strongly Agree). Then, each participant was randomly assigned to a communication condition based on communicating agent. Next, the participants were asked to read the background material about the introduction. In the chatbot group, the participants were shown a chatbot avatar and the text: With the help of the chatbot, plan your next vacation on the ‘CT’ app. In the human-agent group, the participants were shown a human-agent avatar and the text: With the help of the human agent, plan your next vacation on the ‘CT’ app. After reading the background material, the participants were asked to respond to the attention check question, manipulation question, measurement items of self-presentation concern (Barasch et al., 2018), interaction willingness (Fiore et al., 2001), gender, and age. After removing the participants who failed the attention check, a total sample of 165 participants (age range = 18–60, Mage = 32.46, 53.9% female) was included for data analysis.

Results

Manipulation Check

The manipulation check for communicating agent was significant at p < .001, with participants in the chatbot group perceiving they were talking to chatbot more than those in the human-agent group (Mchatbot = 3.31, SD = 1.01; Mhuman-agent = 4.62, SD = 1.15; t = 7.75).

Interaction Willingness

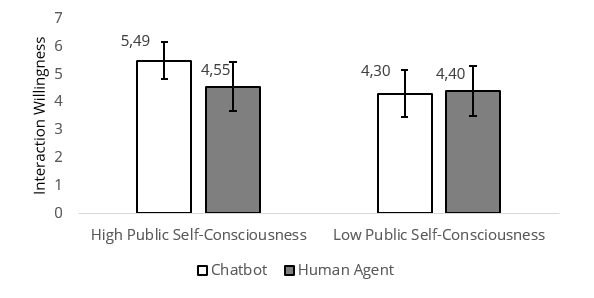

As this study predicted, regression analysis showed that the interaction between communicating agent and users’ public self-consciousness had a significant impact on users’ interaction willingness, F(1, 161) = 14.85, p = .002. To break down this interaction, a simple-effect analysis showed that only for users with a high public self-consciousness (categorized by median split) can the communicating agent have a significant impact on users’ interaction willingness, Mchatbot = 5.49, SD = 0.65; Mhuman-agent = 4.55, SD = 0.89; F(1, 161) = 13.75, p < .001. For users with a low public self-consciousness, the communicating agents have no significant impact on users’ interaction willingness, Mchatbot = 4.30, SD = 0.85; Mhuman-agent = 4.40, SD = 1.06; F(1, 161) = 0.89, p = .611 (see Fig. 3).

Mediation Effect

A t-test showed that users’ self-presentation concern was lower in the chatbot group than in the human-agent group (Mchatbot = 3.80, SD = 1.57; Mhuman-agent = 4.56, SD = 1.44; t = 3.22, p = .002). A further mediation analysis again confirmed the mediation effect. The 95% confidence interval (CI) did not include zero, indirect effect = 0.17, 95% CI [0.05, 0.30], which demonstrated that self-presentation concern mediates the relationship between the communicating agent and users’ interaction willingness.

Figure 3. Interaction Willingness Based on Service Provider.

Public Self-Consciousness as the Moderator

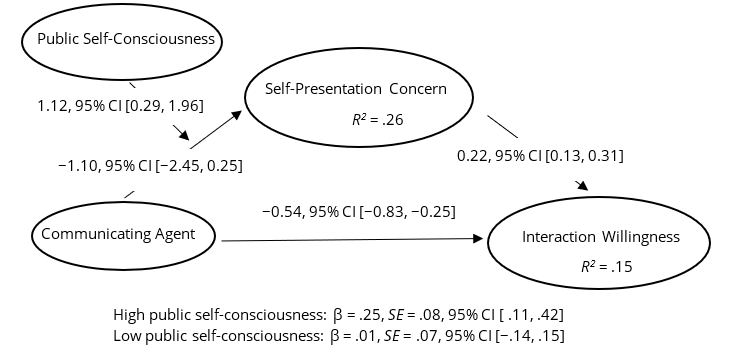

We tested the mediating role of self-presentation concern on interaction willingness moderated by public self-consciousness using the SPSS PROCESS procedure (model 7, with 5,000 bootstrapped samples). The results revealed a significant mediation effect of self-presentation concern for users with high public self-consciousness, β = .25, SE = .08, 95% CI [.11, .42], but not for users with low public self-consciousness, β = .01, SE = .07, 95% CI [−.14, .15]. This demonstrated that public self-consciousness moderates the relationship between communicating agent and users’ interaction willingness. More specifically, a simple-effect analysis showed that only for users with a high public self-consciousness can the communicating agent have a significant impact on users’ self-presentation concern, Mchatbot = 4.21, SD = 1.61; Mhuman-agent = 5.36, SD = 0.82; F(1, 161) = 15.64, p < .001. For users with a low public self-consciousness, the communicating agents have no significant impact on users’ self-presentation concern, Mchatbot = 3.43, SD = 1.46; Mhuman-agent = 3.46, SD = 1.38; F(1, 161) = 0.01, p = .934 (see Fig. 4), supporting H3.

Figure 4. The Moderating Effect of Public Self-Consciousness.
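The moderated mediation analysis above compares the indirect effect at two levels of the moderator. The sketch below illustrates that logic for a binary moderator (such as a median split) using a percentile bootstrap in plain Python. It is an illustration under stated assumptions rather than the PROCESS model 7 implementation. The data are synthetic, all function names are hypothetical, X = 1 is assumed to code the chatbot condition, and W = 1 the high public self-consciousness group. With a binary X and a binary W, the conditional a path at each level of W equals the simple slope of M on X within that subgroup, which is what the code exploits.

```python
import random


def slope(x, y):
    """OLS slope of y on a single predictor x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def b_path(x, m, y):
    """Coefficient of m in the regression y ~ x + m."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def conditional_indirect(x, w, m, y):
    """Indirect effect at w = 0 and at w = 1, plus their difference (the index)."""
    b = b_path(x, m, y)
    effects = {}
    for level in (0, 1):
        xs = [xi for xi, wi in zip(x, w) if wi == level]
        ms = [mi for mi, wi in zip(m, w) if wi == level]
        effects[level] = slope(xs, ms) * b
    return effects[0], effects[1], effects[1] - effects[0]


def percentile_ci(draws, level=0.95):
    s = sorted(draws)
    tail = (1 - level) / 2
    return s[int(tail * len(s))], s[int((1 - tail) * len(s)) - 1]


def bootstrap(x, w, m, y, n_boot=5000, seed=0):
    """Percentile intervals for the low-W effect, the high-W effect, and the index."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    while len(draws) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        sample = [[v[i] for i in idx] for v in (x, w, m, y)]
        try:
            draws.append(conditional_indirect(*sample))
        except ZeroDivisionError:  # a cell was empty or constant in this resample
            continue
    low, high, index = zip(*draws)
    return percentile_ci(low), percentile_ci(high), percentile_ci(index)


# Synthetic data (assumption): the agent affects concern only when W = 1.
gen = random.Random(7)
x = [i % 2 for i in range(400)]
w = [(i // 2) % 2 for i in range(400)]
m = [4.5 + 1.0 * wi - 1.2 * xi * wi + gen.gauss(0, 1) for xi, wi in zip(x, w)]
y = [6.0 - 0.5 * mi + gen.gauss(0, 1) for mi in m]
point_low, point_high, point_index = conditional_indirect(x, w, m, y)
ci_low, ci_high, ci_index = bootstrap(x, w, m, y, n_boot=1000, seed=1)
```

Moderated mediation is indicated when the interval for the index (`ci_index`) excludes zero. The separate intervals `ci_low` and `ci_high` correspond to the conditional indirect effects reported for the low and high groups.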

Discussion

In addition to reinforcing the evidence supporting self-presentation concern as the psychological mechanism that mediates the relationship between the communicating agent and the users’ interaction willingness, Study 2 introduced a user-related moderator: public self-consciousness. The results of Study 2 show that users’ public self-consciousness moderates the impact of the communicating agent on users’ interaction willingness. Hypothesis 3 was supported. Given that users’ public self-consciousness is a trait that may not be recognized easily, in Study 3, we examine sensitive information disclosure as an additional variable that is important in the interaction between marketers and users.

Study 3

The purpose of Study 3 was to explore the moderating role of sensitive information disclosure and whether information disclosure could affect the impact of communicating agents on users’ interaction willingness through self-presentation concern (H4). Users’ public self-consciousness is a personal characteristic, which is hard for companies to manipulate. Study 3 therefore introduced information disclosure as an important and managerially relevant moderator in the proposed model and examined it in a context requiring significant information sharing.

Methods

Research Design

Study 3 consisted of a 2 (communicating agent: chatbot vs. human agent) × 2 (information disclosure: sensitive vs. non-sensitive) between-subject design. In this study, participants were randomly shown one of the four different situations and completed the evaluations. We recruited a separate sample consisting of 355 participants from wjx.cn in exchange for a small payment in May 2022.

First, participants were randomly assigned to one of the four experimental conditions. Following the methodology of Konya-Baumbach et al. (2023), participants in the non-sensitive information condition were instructed to shop for a remedy for a common cold, while those in the sensitive information condition were instructed to shop for a drug to increase libido through online communication. A predesigned chat script was embedded within the study to simulate online interaction. In the chatbot group, the participants were shown a robot avatar that said: Hi, I am your chatbot, if you need any help, I am delighted to assist you. In the human-agent group, the participants were shown a human avatar that said: Hi, I am your agent, if you need any help, I am delighted to assist you. Then, the communicating agent asked: What drug are you looking for? Participants were required to type their answers according to the manipulation. The predesigned chat script allowed participants to interact with the communicating agent in two rounds. After completing the interaction, the participants were asked to fill in the attention check question for the information sensitivity perception, measurement items of self-presentation concern, interaction willingness, gender, and age. After removing the participants who failed the attention check, a total sample of 306 participants (age range = 18–60, Mage = 30.58, 59.15% female) was included for data analysis.

Results

Manipulation Check

The manipulation of sensitive information disclosure was successful, with the results showing that the sensitive information disclosure context was perceived as more sensitive (Msensitive = 5.60, SD = 0.92) than the non-sensitive information disclosure context (Mnon-sensitive = 3.60, SD = 1.51, t = 14.15, p < .001).

The manipulation check for communicating agent was also significant at p < .001, with participants in the chatbot group perceiving they were talking to chatbot more than those in the human-agent group (Mchatbot = 3.32, SD = 1.21; Mhuman-agent = 5.11, SD = 1.05; t = 13.82).

Interaction Willingness

As this study predicted, a t-test revealed that the communicating agent had a significant impact on users’ interaction willingness. More specifically, chatbots can increase users’ interaction willingness more than human agents (Mchatbot = 4.99, SD = 0.96; Mhuman-agent = 4.43, SD = 1.05; t = 4.88, p < .001).

Mediation Effect

We tested the mediating role of self-presentation concern on interaction willingness using SPSS PROCESS procedure (model 4, with 5,000 bootstrapped samples). The results again demonstrated the mediating role of self-presentation concern, β = −.04, SE = .03, 95% CI [−.10, −.01].

Sensitive Information Disclosure as the Moderator

We tested the moderating role of sensitive information disclosure using the Hayes (2017) PROCESS procedure (model 7, with 5,000 bootstrapped samples). The results showed a significant mediation effect of self-presentation concern when users disclose sensitive information, β = .70, SE = .16, 95% CI [.37, 1.01], but not when users disclose non-sensitive information, β = −.26, SE = .17, 95% CI [−.60, .08]. This demonstrated that information disclosure moderates the relationship between the communicating agent and users’ self-presentation concern (see Fig. 5). More specifically, according to simple effect analysis, the effect of the communicating agent on users’ self-presentation concern was only significant for the sensitive information disclosure context, Mchatbot = 4.30, SD = 1.02; Mhuman-agent = 4.99, SD = 1.04; F(1, 302) = 17.73, p < .001. For the non-sensitive information disclosure context, the effect was not significant, Mchatbot = 4.74, SD = 1.20; Mhuman-agent = 4.48, SD = 0.83; F(1, 302) = 2.21, p = .138. Likewise, the effect on interaction willingness was only significant in the sensitive information disclosure context, Mchatbot = 5.30, SD = 0.82; Mhuman-agent = 4.08, SD = 1.08; F(1, 302) = 66.62, p < .001. For the non-sensitive information disclosure context, the effect was not significant, Mchatbot = 4.69, SD = 0.99; Mhuman-agent = 4.88, SD = 0.83; F(1, 302) = 1.36, p = .244.

Figure 5. The Moderating Effect of Sensitive Information Disclosure.

Discussion

Study 3 demonstrated the moderating role of sensitive information disclosure for chatbot interactions, providing support for Hypothesis 4. Information disclosure was manipulated as a managerially controllable moderator: users disclosing sensitive information showed a higher interaction willingness with chatbots than with human agents, whereas the effect was not significant when the information was non-sensitive. These findings have significant managerial implications, suggesting that marketers should strategically choose the type of communicating agent according to the information disclosure context.

General Discussion

This study aimed to shed light on the impact of chatbots in communication on users’ interaction willingness. Our results indicate that chatbots can enhance users’ interaction willingness compared to human agents through the mediating role of self-presentation concern. These findings underscore the value of chatbots in online communication, especially compared to other AI applications, in contexts such as medical diagnostic robots or sales calls (Liu & Sundar, 2018; Longoni et al., 2019; Nadarzynski et al., 2019; Zhao et al., 2023). Indeed, unlike other service contexts that emphasize users’ identity, the core of user-oriented communication is ease and relaxation during interaction (Zhu & Deng, 2021). Thus, chatbots in online communication can significantly reduce interaction anxiety and promote interaction willingness by lowering users’ self-presentation concerns, which corroborates previous research (Kang & Gratch, 2010; Lucas et al., 2014). As self-presentation concern is a valuable factor in shaping users’ interactive behavior (Chu & Choi, 2010; Doherty & Schlenker, 1991; Twomey & O’Reilly, 2017), it should also play a role in further interaction with chatbots (i.e., interaction willingness). Chatbots, with fewer human-like characteristics and limited emotional capacity, are more likely to trigger lower self-presentation concerns in users, thereby increasing users’ willingness to engage in communication with chatbots over human agents (Haslam & Loughnan, 2014).

In addition, our results shed light on the nuanced interplay influenced by users’ public self-consciousness and sensitive information disclosure. As the emphasis on and need for self-presentation increase for users who have higher public self-consciousness and for contexts that concern sensitive information (Barasch et al., 2018; Schuetzler et al., 2018), the effectiveness of chatbots is poised to be more pronounced in such cases. Accordingly, chatbots will be more impactful for users who have higher public self-consciousness and for contexts that concern sensitive information.

Two other interesting findings emerged in our study. First, the results show that users with high public self-consciousness tend to show a higher interaction willingness. We assume that this might be because they have a heightened awareness of how they are perceived by others in social situations. Therefore, they may seek reassurance and feedback from others to confirm that they are presenting themselves in a socially acceptable manner (Chae, 2017; Shim et al., 2016). Another interesting finding is that in non-sensitive information disclosure situations, chatbots did not lead to greater interaction willingness than human agents; in Study 3, willingness was numerically, though not significantly, higher with human agents. As the interaction process involves multiple psychological mechanisms, including empathy and comfort perception (Luo et al., 2019; Mende et al., 2019), the self-presentation concern may not be the major mechanism in non-sensitive information disclosure situations.

Theoretical Contributions

Chatbots have increasingly attracted the attention of researchers. In examining chatbots’ effectiveness, most research has addressed users’ attitudes toward chatbots and the service context (e.g., Fethi & Pasiouras, 2010; Longoni et al., 2019; Luo et al., 2019). Our research focuses on the impact of chatbots in online communication on users’ interaction willingness. We add three contributions to the existing knowledge.

First, this study extends the existing AI-related studies by adopting a social interaction perspective. To date, previous studies related to chatbots have mainly focused on the effect of chatbots from a task competency perspective and have conflicting conclusions (Appel et al., 2020; Longoni et al., 2019; Mende et al., 2019). Considering that social interaction will affect the impact of chatbots, this study highlights that chatbots can enhance users’ interaction willingness, thereby broadening our understanding of chatbots’ effectiveness.

Second, this study contributes another theoretically relevant mechanism to human-chatbot interaction by revealing the self-presentation concern. Unlike previous research that stemmed from task competency, this study discovered that self-presentation concern works as the mediator on the impact of the communicating agent on users’ interaction willingness. The result again confirms prior findings that users respond to chatbots socially as in interpersonal interaction (Kang & Gratch, 2010; Lucas et al., 2014). Besides, testing users’ public self-consciousness, which again demonstrates the mediation effect of self-presentation concern, also contributes to the boundary effect of self-presentation concern.

Third, this study bridges the theoretical work on interactive context and object by introducing the potential boundary role of sensitive information disclosure. Previous research has looked into the boundary effect of chatbots from chatbots’ characteristic perspective (e.g., anthropomorphism, social presence; Fethi & Pasiouras, 2010; Luo et al., 2019). This study introduces the potential boundary condition from an interactive contextual perspective. By comparing chatbots and human agents’ impact on communication, this study found that chatbots can boost users’ brand interaction willingness in sensitive information disclosure contexts.

Managerial Implications

This study offers valuable insights on the practical implications of integrating chatbots in service-oriented industries. First, our findings underscore the indispensable role of chatbots in online communication in addressing users’ self-presentation concerns, which leads to increased user engagement. For marketers, the strategic deployment of chatbots, especially in contexts such as those involving Mianzi, can be particularly effective. In contexts where users exhibit high self-presentation concerns, practitioners can program chatbots to communicate the protection of personal information at the start, offer positive feedback and incentives, use affirmative language, and create a relaxing atmosphere during the service. These measures can shift users’ attention to the interaction process, reducing users’ focus on their self-presentation.

Second, our findings provide guidance for marketers regarding the strategic deployment of chatbots in online communication. Because the impact of chatbots varies among users with different levels of public self-consciousness, a one-size-fits-all approach may not be optimal. Marketers should consider users’ public self-consciousness and segment users according to the intensity of their social activity, using indicators such as social media engagement, profile information richness, and other relevant data. For users with high public self-consciousness, chatbots should be designed with a greater focus on privacy and personalization, highlighting data privacy protection measures to build trust. For users with low public self-consciousness, chatbots can be designed to be more open and social to inspire more active engagement and enhance the interactive experience. Tailoring chatbots to these segments can produce more effective outcomes and improve user experiences.

Third, our findings indicate that the positive effects of chatbots are amplified in contexts that require sensitive information disclosure. Managers should identify the specific information disclosure context when selecting communicating agents. For instance, when dealing with sensitive information such as health or financial matters, chatbots prove to be particularly effective in alleviating users’ self-presentation concerns. Managers should therefore ensure that chatbots have a high level of security and privacy protection. Meanwhile, users should be clearly informed that their personal information will be strictly protected, with technical means such as anonymization and encryption employed to build trust in information security. This addresses the concerns users may have about sensitive information. When dealing with non-sensitive information, managers may choose communicating agents based on contextual requirements and user expectations. In this case, the focus may be more on providing convenient and efficient services, with chatbots increasing user engagement. Such differentiated options help to provide communication services that are closer to users’ expectations in different contexts.
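The agent-selection guidance above can be illustrated with a minimal sketch. It assumes that each conversation already carries a topic label and that a business maintains its own list of sensitive topics. The names `SENSITIVE_TOPICS`, `Routing`, and `select_agent` are hypothetical and are not part of the study; only health and financial matters come from the text as examples of sensitive information.

```python
from dataclasses import dataclass

# Hypothetical topic labels. The text names health and financial matters
# as examples of sensitive information; a real list would be business-specific.
SENSITIVE_TOPICS = {"health", "finance"}


@dataclass
class Routing:
    agent: str                 # "chatbot" or "human"
    show_privacy_notice: bool  # tell the user up front how data is protected


def select_agent(topic: str, default_agent: str = "human") -> Routing:
    """Pick a communicating agent for a conversation topic.

    Sensitive topics are routed to a chatbot and open with a privacy notice.
    Non-sensitive topics fall back to whatever agent the context calls for,
    passed in here as `default_agent` (an assumption of this sketch).
    """
    if topic.strip().lower() in SENSITIVE_TOPICS:
        return Routing(agent="chatbot", show_privacy_notice=True)
    return Routing(agent=default_agent, show_privacy_notice=False)
```

In this sketch a call such as `select_agent("health")` routes to a chatbot with a privacy notice, while a non-sensitive topic simply returns the default agent chosen for that context.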

Limitations and Future Research

While this research contributes to our understanding of human-chatbot interaction, it has several limitations that suggest directions for future research. First, limited by the capabilities of today’s chatbots, this study adopted a scenario-based method. Future research can build upon these findings by conducting real-world studies involving anthropomorphic chatbots with advanced features. Examining users’ responses in authentic interactions will provide a more comprehensive understanding of the impact of chatbots.

Second, users’ attitudes toward chatbots can change over time, influenced by their experiences and by evolving technology. Future research could take a longitudinal view of user attitudes and consider how initial experiences with chatbots affect subsequent interactions and how users’ evolving technology preferences affect their willingness to engage with chatbots. It could also examine how psychological factors, and their respective weights, influence user interactions in different contexts. Moreover, the segmentation of users by public self-consciousness could be refined with more extensive data collection. Researchers should explore alternative ways to segment users effectively, including digital footprint data or other innovative methods. Further, users’ privacy concerns and ethical considerations are vital factors in their chatbot interactions, particularly in contexts involving sensitive information. Future research can examine how these concerns affect user behavior and attitudes and explore strategies to address ethical considerations in chatbot implementations (Rodríguez Cardona et al., 2021).

Finally, this study was conducted in a Chinese context with collectivist cultural traits. Future research could extend the investigation to countries with individualist cultures to examine how cultural differences influence users’ self-presentation concerns and their interactions with chatbots. Cross-cultural studies can provide valuable insights into the generalizability of the findings.

Conflict of Interest

The authors have no conflicts of interest to declare.

Acknowledgement

This work was supported in part by the Science Foundation of the Ministry of Education of China (Grant No. 22YJCZH264) and the China Postdoctoral Science Foundation (Grant No. 2021M701324).

Data Availability Statement

The processed data required to reproduce these findings cannot be shared at this time as the data also forms part of an ongoing study.

Appendix

Appendix A: Study Measures

Interaction Willingness (Fiore et al., 2001)

I am willing to disclose more information interacting with chatbot/human agents.

I am willing to spend more time interacting with chatbot/human agents.

I am willing to interact with chatbot/human agents as an exciting experience.

I am interested in interacting with chatbot/human agents.

Self-Presentation Concern (Barasch et al., 2018)

How anxious did you feel when talking with chatbot/human agents?

How worried were you when talking with chatbot/human agents?

To what extent were you attempting to control your impression when talking with chatbot/human agents?

Public Self-Consciousness (Scheier & Carver, 1985)

I’m concerned about my style of doing things.

I care a lot about how I present myself to others.

I’m self-conscious about the way I look.

I usually worry about making a good impression.

Before I leave my house, I check how I look.

I’m concerned about what other people think of me.

I’m usually aware of my appearance.

It takes time to get over my shyness in new situations.

It’s hard for me to work when someone is watching me.

I get embarrassed very easily.

It’s easy for me to talk to strangers.

I feel nervous when I speak in front of a group.

Large groups make me nervous.

Manipulation for Communicating Agent (Mozafari et al., 2022)

Please rate whether you think you talked to a chatbot or a human agent.

Manipulation for Sensitive Information (Konya-Baumbach et al., 2023)

Please rate the level of sensitivity of the information you disclose in the situation.

Appendix B

Figure B1. Study 1: The Stimuli for Communicating Agents.

Figure B2. Study 2: The Stimuli for Communicating Agents.

Figure B3. Study 3: Non-Sensitive Information * Human Agents.

Imagine you are shopping for a remedy for a common cold

Figure B4. Study 3: Sensitive information * Chatbot.

Imagine you are shopping for a drug to increase libido

Appendix C

Table C1. Demographic Information of Participants in Studies 1, 2 and 3.

| Profile | Group | Study 1, frequency (percent) | Study 2, frequency (percent) | Study 3, frequency (percent) |
|---|---|---|---|---|
| Gender | Male | 42 (43.30) | 76 (46.06) | 125 (40.85) |
| | Female | 55 (56.70) | 89 (53.94) | 181 (59.15) |
| Age | 18–25 | 20 (20.62) | 20 (12.12) | 66 (21.57) |
| | 26–30 | 35 (36.08) | 59 (35.76) | 78 (25.49) |
| | 31–40 | 40 (41.24) | 66 (40.00) | 155 (50.65) |
| | 41–50 | 2 (2.06) | 13 (7.88) | 6 (1.96) |
| | Over 50 | 0 (0.00) | 7 (4.24) | 1 (0.33) |
| Education | High school | 2 (2.06) | 5 (3.03) | 9 (2.94) |
| | Some college education | 10 (10.31) | 23 (13.94) | 47 (15.36) |
| | College degree | 69 (71.13) | 107 (64.85) | 221 (72.22) |
| | Graduate school | 16 (16.50) | 30 (18.18) | 29 (9.48) |

Table C2. Randomization Checks.

| Profile | Study 1 | Study 2 | Study 3 |
|---|---|---|---|
| Gender | F(1, 95) = 0.45, p = .504 | F(1, 163) = 0.00, p = .998 | F(1, 304) = 1.26, p = .263 |
| Age | F(1, 95) = 0.00, p = .990 | F(1, 163) = 1.26, p = .263 | F(1, 304) = 0.30, p = .584 |
| Education | F(1, 95) = 0.45, p = .504 | F(1, 163) = 0.10, p = .747 | F(1, 304) = 0.59, p = .443 |
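The F statistics in Table C2 have the form produced by a one-way analysis of variance. The following is a minimal sketch of that computation, assuming each check compares a numerically coded demographic variable across experimental conditions. The coding scheme and the function name `one_way_anova_f` are assumptions for illustration, not the authors' procedure, and no study data are used. The p value is not computed here because it requires the F distribution from a statistics library.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists, one list of numeric values per condition.
    Raises ZeroDivisionError if there is no within-group variance.
    """
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k = len(groups)      # number of conditions
    n = len(all_values)  # total number of participants

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With two conditions and the 97 participants reported for Study 1 in Table C1, this gives degrees of freedom of (1, 95), which is consistent with the Study 1 entries in Table C2. A small F with a large p value indicates that the demographic variable does not differ detectably between conditions.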

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Copyright © 2024 Qi Zhou, Bin Li