Cognitive screening for children: Piloting a new battery of interactive games in 4- to 8-year-old children

Vol. 18, No. 4 (2024)

Cognitive screening at the preschool age can be helpful in the early detection of possible difficulties before the start of school attendance as problems during schooling can have profound consequences. While interactive tools are still limited for the Czech population, tools used for cognitive screening at a younger age often consist of traditional pen-and-paper methods. Such examinations can be very demanding and time-consuming. This pilot study therefore focuses on the usability testing of a newly developed battery of interactive games used for the screening of selected cognitive functions in children aged four to eight in a less demanding and more attractive way. The battery consists of three interactive games testing (1) attention (selective attention), (2) executive functions (inhibitory control), (3) memory (episodic-like memory). The study sample included 24 participants of preschool or younger school age, ranging from 4 to 8 years old, girls = 12 (50%). The analyses also concentrated on the comparison of the newly designed screening tool with selected traditional test methods. The results suggest that the newly developed battery is feasible, and for children, it is entertaining and attractive. The designed cognitive battery is a promising tool for the screening of cognitive functions in younger school-age children.

cognitive screening; preschool children; cognitive function; game-based assessment; memory; attention; executive function

Markéta Jablonská

National Institute of Mental Health, Klecany, Czech Republic

Markéta Jablonská is a psychologist and a researcher at the Research Center for Virtual Reality in Mental Health and Neuroscience at the National Institute of Mental Health in the Czech Republic, focusing primarily on virtual reality exposure therapy for anxiety disorders.

Iveta Fajnerová

National Institute of Mental Health, Klecany, Czech Republic; Third Faculty of Medicine, Charles University, Prague, Czech Republic

Iveta Fajnerová is the head of the Research Center for Virtual Reality in Mental Health and Neuroscience at the National Institute of Mental Health in the Czech Republic. Her research work is focused on the application of virtual reality technology in basic and clinical research, particularly in cognitive-behavioral therapy, cognitive assessment, and brain imaging.

Tereza Nekovářová

National Institute of Mental Health, Klecany, Czech Republic; Faculty of Arts, Charles University, Prague, Czech Republic

Tereza Nekovářová is the head of Neurophysiology of Cognition Research Group at the National Institute of Mental Health in the Czech Republic. Her research interest is focused on cognitive function, their neurobiology, and development both in ontogeny and phylogeny.

Alloway, T. P., Gathercole, S. E., Adams, A.-M., Willis, C., Eaglen, R., & Lamont, E. (2005). Working memory and phonological awareness as predictors of progress towards early learning goals at school entry. British Journal of Developmental Psychology, 23(3), 417–426. https://doi.org/10.1348/026151005X26804

Amukune, S., Caplovitz Barrett, K., & Józsa, K. (2022). Game-based assessment of school readiness domains of 3-8-year-old-children: A scoping review. Journal of New Approaches in Educational Research, 11(1), 146–167. https://doi.org/10.7821/naer.2022.1.741

Amunts, J., Camilleri, J. A., Eickhoff, S. B., Heim, S., & Weis, S. (2020). Executive functions predict verbal fluency scores in healthy participants. Scientific Reports, 10, Article 1114. https://doi.org/10.1038/s41598-020-65525-9

Ardila, A., Rosselli, M., Matute, E., & Inozemtseva, O. (2011). Gender differences in cognitive development. Developmental Psychology, 47(4), 984–990. https://doi.org/10.1037/a0023819

Atkinson, J., & Braddick, O. (2012). Visual attention in the first years: Typical development and developmental disorders. Developmental Medicine & Child Neurology, 54(7), 589–595. https://doi.org/10.1111/j.1469-8749.2012.04294.x

Baddeley, A. (2012). Working memory: Theories, models, and controversies. Annual Review of Psychology, 63, 1–29. https://doi.org/10.1146/annurev-psych-120710-100422

Bates, M. E., & Lemay, E. P. (2004). The d2 Test of attention: Construct validity and extensions in scoring techniques. Journal of the International Neuropsychological Society, 10(3), 392–400. https://doi.org/10.1017/S135561770410307X

Bauer, P. J., Doydum, A. O., Pathman, T., Larkina, M., Güler, O. E., & Burch, M. (2012). It’s all about location, location, location: Children’s memory for the “where” of personally experienced events. Journal of Experimental Child Psychology, 113(4), 510–522. https://doi.org/10.1016/j.jecp.2012.06.007

Bauer, P. J., & Leventon, J. S. (2013). Memory for one‐time experiences in the second year of life: Implications for the status of episodic memory. Infancy, 18(5), 755–781. https://doi.org/10.1111/infa.12005

Bayley, N. (2005). Bayley Scales of Infant and Toddler Development, Third Edition (Bayley-III®) [Database record]. APA PsycTests. https://doi.org/10.1037/t14978-000

Brüne, M., & Brüne-Cohrs, U. (2006). Theory of mind—Evolution, ontogeny, brain mechanisms and psychopathology. Neuroscience & Biobehavioral Reviews, 30(4), 437–455. https://doi.org/10.1016/j.neubiorev.2005.08.001

Cigler, H., & Durmekova, S. (2018). Verbální fluence u dětí ve věku 5–12 let: České normy a vybrané psychometrické ukazatele [Verbal fluency in children aged 5 to 12: Czech norms and psychometric properties]. E-psychologie, 12(4), 16–30. https://e-psycholog.eu/pdf/cigler_durmekova.pdf

Clayton, N. S., & Dickinson, A. (1998). Episodic-like memory during cache recovery by scrub jays. Nature, 395, 272–274. https://doi.org/10.1038/26216

Clayton, N. S., Griffiths, D. P., Emery, N. J., & Dickinson, A. (2001). Elements of episodic–like memory in animals. Philosophical Transactions of the Royal Society B: Biological Sciences, 356(1413), 1483–1491. https://doi.org/10.1098/rstb.2001.0947

Collie, A., & Maruff, P. (2003). Computerised neuropsychological testing. British Journal of Sports Medicine, 37(1), 2–3. https://doi.org/10.1136/bjsm.37.1.2

Davis, J. L., & Matthews, R. N. (2010). NEPSY-II Review: Korkman, M., Kirk, U., & Kemp, S. (2007). NEPSY—Second Edition (NEPSY-II). Harcourt Assessment. Journal of Psychoeducational Assessment, 28(2), 175–182. https://doi.org/10.1177/0734282909346716

Diamond, A. (2013). Executive functions. Annual Review of Psychology, 64, 135–168. https://doi.org/10.1146/annurev-psych-113011-143750

Eacott, M. J., & Norman, G. (2004). Integrated memory for object, place, and context in rats: A possible model of episodic-like memory? Journal of Neuroscience, 24(8), 1948–1953. https://doi.org/10.1523/JNEUROSCI.2975-03.2004

Engler, J. R., & Alfonso, V. C. (2020). Cognitive assessment of preschool children: A pragmatic review of theoretical, quantitative, and qualitative characteristics. In V. C. Alfonso, B. A. Bracken, & R. J. Nagle (Eds.), Psychoeducational assessment of preschool children (5th ed., pp. 226–249). Routledge/Taylor & Francis Group. https://doi.org/10.4324/9780429054099-10

Ezpeleta, L., Granero, R., Penelo, E., de la Osa, N., & Domènech, J. M. (2015). Behavior Rating Inventory of Executive Functioning–Preschool (BRIEF-P) applied to teachers: Psychometric properties and usefulness for disruptive disorders in 3-year-old preschoolers. Journal of Attention Disorders, 19(6), 476–488. https://doi.org/10.1177/1087054712466439

Fajnerová, I., Oravcová, I., Plechatá, A., Hejtmánek, L., Vlček, K., Sahula, V., & Nekovářová, T. (2017). The virtual episodic memory task: Towards remediation in neuropsychiatric disorders. In Conference proceedings ICVR 2017 (pp. 1–2). IEEE Xplore

Fuster, J. M. (1997). The prefrontal cortex (3rd ed.). Raven Press.

Gauthier, L., Dehaut, F., & Joanette, Y. (1989). The Bells Test: A quantitative and qualitative test for visual neglect. International Journal of Clinical Neuropsychology, 11(2), 49–54.

Gomez, M. J., Ruipérez-Valiente, J. A., & Clemente, F. J. G. (2022). A systematic literature review of game-based assessment studies: Trends and challenges. IEEE Transactions on Learning Technologies, 16(4), 500–515.

Grant, D. A., & Berg, E. A. (1948). Wisconsin Card Sorting Test [Database record]. APA PsycTests. https://doi.org/10.1037/t31298-000

Grob, A., Hagmann-von Arx, P., Jaworowska, A., Matczak, A., & Fecenec, D. (2018). IDS 2. Hogrefe.

Hanania, R., & Smith, L. B. (2010). Selective attention and attention switching: Towards a unified developmental approach. Developmental Science, 13(4), 622–635. https://doi.org/10.1111/j.1467-7687.2009.00921.x

Hawkins, G. E., Rae, B., Nesbitt, K. V., & Brown, S. D. (2013). Gamelike features might not improve data. Behavior Research Methods, 45(2), 301–318. https://doi.org/10.3758/s13428-012-0264-3

Hayne, H., & Imuta, K. (2011). Episodic memory in 3- and 4-year-old children. Developmental Psychobiology, 53(3), 317–322. https://doi.org/10.1002/dev.20527

Hokken, M. J., Krabbendam, E., van der Zee, Y. J., & Kooiker, M. J. G. (2023). Visual selective attention and visual search performance in children with CVI, ADHD, and dyslexia: A scoping review. Child Neuropsychology, 29(3), 357–390. https://doi.org/10.1080/09297049.2022.2057940

Howard, S. J., & Melhuish, E. C. (2017). An Early Years Toolbox (EYT) for assessing early executive function, language, self-regulation, and social development: Validity, reliability, and preliminary norms. Journal of Psychoeducational Assessment, 35(3), 255–275. https://doi.org/10.1177/0734282916633009

Huizinga, M., Baeyens, D., & Burack, J. A. (2018). Editorial: Executive function and education. Frontiers in Psychology, 9, Article 1357. https://doi.org/10.3389/fpsyg.2018.01357

Hyde, J. S. (2016). Sex and cognition: Gender and cognitive functions. Current Opinion in Neurobiology, 38, 53–56. https://doi.org/10.1016/j.conb.2016.02.007

Irvan, R., & Tsapali, M. (2020). The role of inhibitory control in achievement in early childhood education. Cambridge Educational Research e-Journal, 7, 168–190. https://doi.org/10.17863/CAM.58319

Jin, Y.-R., & Lin, L.-Y. (2022). Relationship between touchscreen tablet usage time and attention performance in young children. Journal of Research on Technology in Education, 54(2), 317–326. https://doi.org/10.1080/15391523.2021.1891995

Kraft, R. H., & Nickel, L. D. (1995). Sex-related differences in cognition: Development during early childhood. Learning and Individual Differences, 7(3), 249–271. https://doi.org/10.1016/1041-6080(95)90013-6

Lumsden, J., Edwards, E. A., Lawrence, N. S., Coyle, D., & Munafò, M. R. (2016). Gamification of cognitive assessment and cognitive training: A systematic review of applications and efficacy. JMIR Serious Games, 4(2), Article e11. https://doi.org/10.2196/games.5888

Mancuso, M., Damora, A., Abbruzzese, L., Navarrete, E., Basagni, B., Galardi, G., Caputo, M., Bartalini, B., Bartolo, M., Zucchella, C., Carboncini, M. C., Dei, S., Zoccolotti, P., Antonucci, G., & De Tanti, A. (2019). A new standardization of the Bells Test: An Italian multi-center normative study. Frontiers in Psychology, 9, Article 2745. https://doi.org/10.3389/fpsyg.2018.02745

Matejcek, Z., & Zlab, Z. (1972). Zkouska laterality [Laterality Test]. Psychodiagnostika.

Matier, K., Wolf, L. E., & Halperin, J. M. (1994). The psychometric properties and clinical utility of a cancellation test in children. Developmental Neuropsychology, 10(2), 165–177. https://doi.org/10.1080/87565649409540575

Mavridis, A., & Tsiatsos, T. (2017). Game-based assessment: Investigating the impact on test anxiety and exam performance. Journal of Computer Assisted Learning, 33(2), 137–150. https://doi.org/10.1111/jcal.12170

Miller, E. K., & Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annual Review of Neuroscience, 24, 167–202. https://doi.org/10.1146/annurev.neuro.24.1.167

Nikolai, T., Stepankova, H., Michalec, J., Bezdicek, O., Horakova, K., Markova, H., Ruzicka, E., & Kopecek, M. (2015). Tests of verbal fluency, Czech normative study in older patients. Česká a Slovenská Neurologie a Neurochirurgie, 78/111(3), 292–299. https://doi.org/10.14735/amcsnn2015292

Pisova, M. (2020). Ontogeneze episodické paměti u dětí předškolního věku [Ontogenesis of episodic memory in preschool children] [Master’s thesis, Charles University]. Repositář závěrečných prací Univerzity Karlovy. http://hdl.handle.net/20.500.11956/119850

Pradhan, B., & Nagendra, H. R. (2008). Normative data for the letter-cancellation task in school children. International Journal of Yoga, 1(2), 72–75. https://doi.org/10.4103/0973-6131.43544

Pureza, J. R., Gonçalves, H. A., Branco, L., Grassi-Oliveira, R., & Fonseca, R. P. (2013). Executive functions in late childhood: Age differences among groups. Psychology & Neuroscience, 6(1), 79–88. https://doi.org/10.3922/j.psns.2013.1.12

Quintana, M., Anderberg, P., Sanmartin Berglund, J., Frögren, J., Cano, N., Cellek, S., Zhang, J., & Garolera, M. (2020). Feasibility-usability study of a tablet app adapted specifically for persons with cognitive impairment-SMART4MD (Support Monitoring and Reminder Technology for Mild Dementia). International Journal of Environmental Research and Public Health, 17(18), Article 6816. https://doi.org/10.3390/ijerph17186816

Rosenqvist, J., Lahti-Nuuttila, P., Laasonen, M., & Korkman, M. (2014). Preschoolers’ recognition of emotional expressions: Relationships with other neurocognitive capacities. Child Neuropsychology, 20(3), 281–302. https://doi.org/10.1080/09297049.2013.778235

Rosetti, M. F., Gómez-Tello, M. F., Victoria, G., & Apiquian, R. (2017). A video game for the neuropsychological screening of children. Entertainment Computing, 20, 1–9. http://dx.doi.org/10.1016/j.entcom.2017.02.002

Rosin, J., & Levett, A. (1989). The Trail Making Test: A review of research in children. South African Journal of Psychology, 19(1), 6–13. https://doi.org/10.1177/008124638901900102

Roth, R. M., Isquith, P. K., & Gioia, G. A. (2014). Assessment of executive functioning using the Behavior Rating Inventory of Executive Function (BRIEF). In S. Goldstein & J. A. Naglieri (Eds.), Handbook of executive functioning (pp. 301–331). Springer Science + Business Media. https://doi.org/10.1007/978-1-4614-8106-5_18

Schürerova, L. (1977). Experiences with Edfeldt’s Reversal Test and an adapted reversal test. Psychológia a Patopsychológia Dieťaťa, 12(3), 235–246.

Simpson, A., & Riggs, K. J. (2006). Conditions under which children experience inhibitory difficulty with a “button-press” go/no-go task. Journal of Experimental Child Psychology, 94(1), 18–26. https://doi.org/10.1016/j.jecp.2005.10.003

St Clair-Thompson, H. L., & Gathercole, S. E. (2006). Executive functions and achievements in school: Shifting, updating, inhibition, and working memory. Quarterly Journal of Experimental Psychology, 59(4), 745–759. https://doi.org/10.1080/17470210500162854

Theeuwes, J. (1993). Visual selective attention: A theoretical analysis. Acta Psychologica, 83(2), 93–154. https://doi.org/10.1016/0001-6918(93)90042-P

Vlcek, K., Laczó, J., Vajnerova, O., Ort, M., Kalina, M., Blahna, K., Vyhnalek, M., & Hort, J. (2006). Spatial navigation and episodic-memory tests in screening of dementia. Psychiatrie, 10, 35–38. https://www.tigis.cz/images/stories/psychiatrie/2006/SUPPL_3/08_vlcek_psych_s3-06.pdf

Wechsler, D. (1949). Wechsler Intelligence Scale for children. Psychological Corporation.

Willoughby, K. A., Desrocher, M., Levine, B., & Rovet, J. F. (2012). Episodic and semantic autobiographical memory and everyday memory during late childhood and early adolescence. Frontiers in Psychology, 3, Article 53. https://doi.org/10.3389/fpsyg.2012.00053

Willoughby, M. T., Blair, C. B., Wirth, R. J., Greenberg, M., & The Family Life Project Investigators. (2010). The measurement of executive function at age 3 years: Psychometric properties and criterion validity of a new battery of tasks. Psychological Assessment, 22(2), 306–317. https://doi.org/10.1037/a0018708

Yadav, S., Chakraborty, P., Kaul, A., Pooja, Gupta, B., & Garg, A. (2020). Ability of children to perform touchscreen gestures and follow prompting techniques when using mobile apps. Clinical and Experimental Pediatrics, 63(6), 232–236. https://doi.org/10.3345/cep.2019.00997

Zupan, Z., Blagrove, E., & Watson, D. G. (2018). Learning to ignore: The development of time-based visual attention in children. Developmental Psychology, 54(12), 2248–2264. https://doi.org/10.1037/dev0000582

Authors’ Contribution

Markéta Jablonská: investigation, formal analysis, writing—original draft. Iveta Fajnerová: conceptualization, methodology, formal analysis, writing—review & editing. Tereza Nekovářová: conceptualization, methodology, formal analysis, writing—review & editing

Editorial Record

First submission received:

April 6, 2023

Revisions received:

March 7, 2024

June 28, 2024

Accepted for publication:

July 2, 2024

Editor in charge:

Fabio Sticca

Introduction

Cognitive Assessment in Children

Measuring cognitive skills at a younger age is essential for a number of reasons, for example, early detection of cognitive deficits, assessment of school readiness (the readiness of children to enter school in terms of physical, cognitive, social and emotional development), evaluation of school performance, or revealing the strengths and weaknesses of an individual child. Despite this, freely available interactive cognitive screening tools for children are still limited in the Czech language region. Widely used standard methods often require trained examiners (Collie & Maruff, 2003; Engler & Alfonso, 2020) or depend on teachers’ and parents’ reports (e.g., BRIEF or BRIEF-P; Ezpeleta et al., 2015; Roth et al., 2014). In addition, the task selection can be limited by age (tasks are often intended for children over the age of six, e.g., the Wisconsin Card Sorting Test; Grant & Berg, 1948).

The most frequently employed methods include the use of developmental scales and intelligence tests, such as the Cognitive Assessment System (CAS 2) or the Wechsler Intelligence Scale for Children (WISC) (Wechsler, 1949), complex neuropsychological batteries assessing three or more cognitive functions (e.g., Intelligence and Development Scale for children IDS, or the preschool version IDS-P; Grob et al., 2018), or tests assessing specific target abilities, such as attention or executive functions (most often the Trail Making Test for children from 5 to 7 years old; Rosin & Levett, 1989). It is critical to evaluate the maturation of executive functions in preschool screening, as the beginning of school attendance requires flexible adaptation of behavior to different social contexts and rules. However, most of the preschool methods available in the Czech Republic are aimed at language acquisition, motor and graphomotor skills, and laterality (e.g., Edfeldt’s Reversal Test; Laterality test, Orientation test of school maturity), or are in the form of rating scales designed for teachers or parents (Matejcek & Zlab, 1972; Schürerova, 1977). Some of the available screening tools are also not suitable for the target preschool age group (e.g., Bayley III for children from 1 month to 4 years; Bayley, 2005).

Game-Based Assessment

With the progress of technology, there is an increasing effort to create new types of assessments that are computerized and self-administered. Such game-based assessments (GBAs) present new possibilities, simplifying cognitive evaluation in children. GBA is portable, offers digital measurement capabilities, engages children, and reduces test anxiety (Hawkins et al., 2013; Lumsden et al., 2016; Mavridis & Tsiatsos, 2017). GBA studies are traditionally conducted in schools, concentrating on students’ evaluation, with the aim of improving the assessment of competencies, skills, or learning outcomes (Gomez et al., 2022). GBA also offers the option of online assessment, which allows for widespread access and remote testing if required.

There are three types of GBA: (1) the measurement of game-related success criteria (such as the achievement of targets, completion time, etc.), (2) external assessment using tools such as pre-post questionnaires, debriefing interviews, essays, knowledge maps, and test scores, and (3) embedded assessment based on player response data (the use of click streaming, log file analysis, etc.). There are more than 1,000 computer-assisted interventions for children, but they are more often applied in education, rehabilitation, or training (e.g., Neuro-World) than in assessing cognitive skills (Amukune et al., 2022).

The availability of touchscreen technology has facilitated the adaptation of assessment procedures to younger children. The majority of four-year-olds (and a minority of two- to three-year-olds) are nowadays able to use a tablet with simple gestures (tapping or swiping) and to follow animated instructions (Yadav et al., 2020). A recent scoping review by Amukune et al. (2022) provides a good overview of the methods available for three- to eight-year-old children. GBAs aimed at testing cognition include, for example, the Early Years Toolbox for iPad (http://www.eytoolbox.com), measuring emerging cognitive, self-regulatory, language, numeracy and social development (Howard & Melhuish, 2017), or EF Touch (https://eftouch.fpg.unc.edu/index.html; M. T. Willoughby et al., 2010), concentrating on the assessment of executive functions. Some GBAs are available in the form of mobile applications, such as CoCon, a serious game assessing cognitive control, or the neuropsychological screening tool Towi, a series of games based on standardized tests that evaluate cognitive performance and also serve as a cognitive training tool (Rosetti et al., 2017). Despite the large number of existing GBAs worldwide, to our knowledge there are no GBA methods targeting children available in the Czech language. As the proposed online games were developed in the local language, there is no requirement for adaptation or translation of foreign applications, which may entail additional costs.

Current Study

The goal of this study was to evaluate the usability (as defined by Quintana et al., 2020) of a newly developed child-friendly interactive screening tool, potentially applicable in the form of an online assessment with gamification features. The battery of tests was designed as a part of a broader project aimed at identifying potential risk factors predicting school success at preschool age. This tool should allow preliminary screening of children’s cognitive abilities in a fast and enjoyable way both in preschool and older-age children.

The screening tool aimed to address cognitive functions that cover cognitive domains traditionally assessed in school and preschool age and are associated with school performance and school success prediction (Alloway et al., 2005; St Clair-Thompson & Gathercole, 2006), which include attentional control, inhibitory control and working memory. These domains partially overlap with the executive functions as defined by Diamond (2013). The term "executive functions" is used to describe a broad range of cognitive processes that are involved in the control and coordination of information processing during goal-directed behavior (Fuster, 1997; Miller & Cohen, 2001). The ontogenetic development of executive functions relies on the maturation of associated brain areas, but also requires stimulation in a social context (Huizinga et al., 2018). These processes are particularly important in situations that are novel or demanding (e.g., the beginning of school attendance) and thus require flexible adjustment of behavior. Therefore, appropriate screening of the development (maturity) of executive functions is essential for assessing the potential for school performance.

The tested prototype of the screening tool therefore contains three games, assessing the key cognitive functions: selective attention, inhibitory control, and working memory (specifically, the episodic buffer of working memory).

To address (1) selective attention, we used a nonverbal performance test based on traditional cancellation tasks (e.g., Bates & Lemay, 2004; Hokken et al., 2023; Theeuwes, 1993), measuring visual attention. As (2) inhibitory control has been repeatedly shown to be associated with early childhood education (Irvan & Tsapali, 2020), we chose a go/no-go task paradigm with the main focus on inhibition and cognitive flexibility. To assess (3) short-term episodic memory, we based the memory game on the episodic-like memory (ELM) model (Clayton & Dickinson, 1998). This is regarded as a model of episodic memory, which involves the encoding of contextual information (i.e., "What", "Where", "When"). This concept was already successfully used in our previous studies with adults and a pilot study in children. The task primarily targets the episodic buffer of working memory as described by Baddeley (2012), which represents temporary storage that integrates information from other components of working memory and preserves a sense of time so that events occur in a coherent sequence.

The cognitive tasks, which are presented as individual games, are situated within the context of an interactive city map that employs the use of a guide character. The tasks were created predominantly for preschool children and the first grades of primary schools, therefore each game contains several levels with increasing difficulty. The games are designed in such a way that they can be easily managed, even by children as young as four years old, for example, when instructions are provided on the basis of spoken language.

The screening tool has been developed using the Unity game engine in the form of a desktop application available online and is compatible with both touch screens and a mouse. The online platform was designed to provide remote access to the assessment and to continuously collect data for further refinement of age-appropriate standards of cognitive tests.

In this pilot study, we primarily aimed to test the usability of the proposed set of cognitive games. Beyond this primary objective, we focused on preliminary testing of the functionality of individual games, the comprehensibility of instructions, and the attractiveness of the games to children of different ages. Moreover, we examined the effect of age on cognitive performance. Finally, we aimed to test the preliminary construct validity of the games using direct comparison with selected traditional short paper and pencil tasks.

Methods

Participants

The participants were selected based on several criteria, including age (4 to 8 years old), Czech as the native language, the absence of significant visual or hearing impairments, and parental consent to participate in the study. The sample was recruited using the snowball method and the dataset was collected from March 2021 to June 2021 through in-person visits to the children’s homes. In-person meetings took place with 18 out of the 28 initially contacted parents. The total sample size recruited was 24 children, and this sample also included some siblings (6 families). Two of the children had to be excluded from the sample due to acute medical issues (hand injury not associated with the study). Thus, the final sample consisted of 22 children (50% boys, 50% girls) without serious psychiatric or neurological disorders. Some basic demographic and personal information was gathered using a questionnaire created for parents, including questions about children’s age, gender, kindergarten or school attendance, number of siblings, and health issues. Descriptives of the sample can be found in Table 1.

Procedure

The participants were recruited through contact with parents using the snowball method. Communication was established and in-person meetings were arranged. All the assessments took place in a home setting, directly at the place of residence of the children. The testing was done under the supervision of an experimenter/psychologist. Signed written informed consent (approved by the Ethical Committee of the National Institute of Mental Health in the Czech Republic) was obtained before the beginning of the study from each parent, and each child was verbally asked if he/she was willing to participate. If the child was unmotivated or tired, the testing was interrupted or terminated as necessary. During the in-person meeting, the procedure was explained to the parents, the written consent was signed, and necessary information about the children was gathered. The first interaction included the parent(s) to establish a safe atmosphere, then the assessment continued without the parent’s assistance. The whole assessment lasted about 45 minutes, including the instructions.

For the testing using interactive games, a tablet was placed in front of the child, so the child could follow instructions given by the guide persona (visualized in the form of a friendly animal; Fig. 1) and could respond by touching the touch screen. The aim of the pilot testing was to verify that the instructions are comprehensible and that the child is capable of performing the tasks independently. Therefore, the experimenter did not interfere with the child’s actions during the assessment (only in case the instructions needed to be repeated or explained, especially to the youngest children).

The testing procedures (for a detailed description of individual tasks and games see below) followed a specific order. The assessment started with the Sentence repetition task, followed by the Bells test and the interactive games. Three interactive games were tested in the following order: (1) the Book game, (2) the Animal Feeding game, (3) the Toy Store game. The Verbal fluency test was applied between the interactive games with randomized categories of listing (Animals or Vegetables between the first and the second game or the second and the third game). At the end of the assessment, the child received a small reward (e.g., coloring books). The order of tests was chosen to prevent the potential effect of fatigue in the tasks with higher attention demands and also to prevent potential interference between the two memory tasks, which were therefore applied at the beginning and the end. The standard paper and pencil tasks were applied at the beginning as we wanted to maintain the motivation of the children for these non-game tasks. Only short verbal fluency tasks were inserted between the cognitive games, serving as a short break after each cognitive game to prevent visual fatigue.
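The administration order described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `build_session` and the task labels are hypothetical, and the study does not report how the randomization of verbal fluency categories was actually carried out.

```python
import random

# Fixed order of the interactive games, as stated in the Procedure.
GAMES = ["Book game", "Animal Feeding game", "Toy Store game"]
# Verbal fluency categories named in the text.
FLUENCY_CATEGORIES = ["Animals", "Vegetables"]


def build_session(rng):
    """Return the ordered list of tasks for one child (hypothetical helper).

    The paper-and-pencil tasks come first, the games follow in a fixed
    order, and one verbal fluency category is placed in each of the two
    gaps between games, in random order.
    """
    categories = FLUENCY_CATEGORIES[:]
    rng.shuffle(categories)  # randomize which category fills which gap

    order = ["Sentence repetition task", "Bells test"]
    for index, game in enumerate(GAMES):
        order.append(game)
        if index < len(categories):  # no fluency task after the last game
            order.append(f"Verbal fluency ({categories[index]})")
    return order


session = build_session(random.Random(0))
for step, task in enumerate(session, start=1):
    print(step, task)
```

Passing a seeded `random.Random` instance keeps the order reproducible for a given child, which may be convenient when the assignment needs to be documented.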

Measures

Game-Based Assessment

This screening tool was developed at the National Institute of Mental Health in the Czech Republic. The prototype has been designed as an interactive city map (Fig. 1) with a virtual character guiding the child through the assessment using three different games to test selected cognitive functions. The screening tool is based on 2D animations and is suitable primarily for touchscreen (on a tablet or a touch notebook) but can also be used on a computer with a regular mouse. Each game was created as a prototype and tested repeatedly before the beginning of the study. The application is launched via a link (http://hry0421.skolniuspesnost.cz/www/) with a login code protecting the anonymity of the child, and all the data is automatically saved during task completion. Tasks were designed based on general cognitive experimental paradigms such as visual search, go/no-go, and episodic-like memory tasks. The individual games are displayed in the given order during the assessment. All tasks have multiple levels with increasing difficulty.

Figure 1. Main Screen of the Application—Interactive City Map.

Book Game

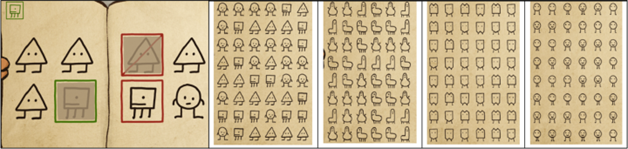

The first game is the Book game, inspired by selective attention tasks (e.g., Bates & Lemay, 2004; Hokken et al., 2023; Theeuwes, 1993) and designed as an exercise book (Figure 2) with pages filled with symbols (48 symbols per page). The goal is to select as many symbols as possible according to the visible template during a limited time period (60 seconds per level). This task is based on the principle of cancellation tasks (Matier et al., 1994; Pradhan & Nagendra, 2008), where the participant has to search for and cross out target stimuli embedded among distractor stimuli. The target symbol is displayed in the upper corner of the page throughout the entirety of the gaming session. This ensures that selective attention is assessed without the need for participants to recall the target symbol. The overall duration of the game is approximately 7 minutes. Target symbols differ on each level (Figure 2):

- Level 0: training

- Levels 1 to 3: 1 target stimulus + 2 distractors (stimuli are more difficult to discriminate with increasing game level due to increasing similarity of the stimuli)

- Level 4: 1 target stimulus + 3 distractors

Figure 2. The Book Game - The Instructions and Correct/Incorrect Responses Shown during the Training Level 0; Game Levels 0 - 4 (described from the left)

The following outputs are recorded during the game:

- The number of correct responses (correctly marked symbols) per given time limit (60 seconds), indicating the search speed without the need for corrections;

- The number of incorrect responses (marked symbols which do not match the sample—errors), reflecting the inability to pay attention to the task according to the given rules.

For this pilot study, it is not reliable to express the success rates as ratios of correct responses or errors to the total number of stimuli presented, because the child could skip some pages in the book during the task (which disproportionately increases the total number of stimuli).
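The two Book game outputs described above can be illustrated with a minimal sketch. It assumes a hypothetical log of taps, each with the marked symbol and a timestamp. The names `Tap` and `score_book_level` are illustrative only and do not come from the application itself.

```python
from dataclasses import dataclass


@dataclass
class Tap:
    symbol: str    # symbol the child marked (hypothetical log field)
    time_s: float  # seconds elapsed since the level started


def score_book_level(taps, target, time_limit_s=60.0):
    """Count correct and incorrect marks made within the time limit."""
    correct = 0
    incorrect = 0
    for tap in taps:
        if tap.time_s > time_limit_s:
            continue  # marks after the limit are ignored in this sketch
        if tap.symbol == target:
            correct += 1
        else:
            incorrect += 1
    return correct, incorrect


example_taps = [
    Tap("star", 1.0),
    Tap("moon", 2.0),
    Tap("star", 30.0),
    Tap("star", 61.0),
]
book_scores = score_book_level(example_taps, target="star")
```

Raw counts are returned rather than ratios, in line with the caveat above that the total number of presented stimuli is not a reliable denominator when pages can be skipped.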

Animal Feeding Game

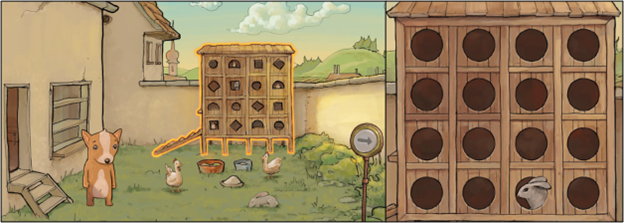

The second game, the Animal Feeding game, was inspired by the go/no-go task (e.g., Simpson & Riggs, 2006) used to assess executive functions, in particular inhibition and cognitive flexibility. This task is designed as an animal house with different types of animals used as stimuli (Figure 3). The instruction is to feed animals (target stimuli) by clicking on them, once they appear one after another in one of the visible windows. The goal is to react as fast as possible to the target stimuli (correct “go” animal) and ignore the distractors (incorrect “no-go” animal). The overall duration of the game is approximately 5 minutes with 30 stimuli per level (“go“ and “no-go“ stimuli in total). The target animals change with increasing level complexity:

- Level 0: training

- Level 1: 1 “go“ / 0 “no-go“ stimuli

- Level 2: 1 “go“ / 1 “no-go“ stimulus

- Level 3: 1 “go“ / 1 “no-go“ stimulus that was a target in the previous level

- Level 4: 2 “go“ / 1 “no-go“ stimulus

Figure 3. Animal Feeding Game Design (Main Screen on the Left, the Target Stimulus on the Right).

In this task, 30 stimuli were presented to the participant at each level. Stimuli were divided into target (“go”) stimuli and distractors (“no-go”; for level description see above). During the game, the following outputs were recorded:

- The total number of stimuli presented for each level

- The total number of “go” and “no-go“ stimuli for each level

- The total number of correct reactions to “go“ stimuli

- The total number of omitted “no-go“ stimuli

- The average reaction time of correct reactions across levels.

Two parameters that indicate the success rate are calculated from these outputs: (1) the ratio of correct reactions to the total number of target (“go”) stimuli, and (2) the ratio of correctly stopped reactions to the total number of distractors (“no-go” stimuli). The parameters are expressed as values between zero and one (where 0 means absolute failure and 1 absolute success).

The following three parameters were utilized in the current study:

- Success rate of the correct reaction to the target stimuli (success rate “go”).

- Success rate in stopping the incorrect reaction (success rate “no-go”). This parameter does not apply to level 1 containing only “go” stimuli.

- The average reaction time of correct reactions across levels.

These parameters represent different cognitive functions: the first (success rate “go”) reflects the correct reaction to the target stimuli associated with attention ability, and the second (success rate “no-go”) reflects inhibitory control (the ability to stop the planned reaction when the “no-go” stimulus is presented). The reaction time parameter represents the processing speed associated with the decision process.
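The three Animal Feeding parameters can be sketched as follows. The trial structure (`Trial`, with a flag for “go” stimuli and an optional reaction time) is an assumption made for illustration. The actual log format of the application is not described in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    is_go: bool                        # True for a "go" (target) animal
    reaction_time_ms: Optional[float]  # None when the child did not click


def score_animal_feeding_level(trials):
    """Return go/no-go success rates (0 to 1) and mean RT of correct go reactions."""
    go = [t for t in trials if t.is_go]
    no_go = [t for t in trials if not t.is_go]
    hits = [t for t in go if t.reaction_time_ms is not None]
    withheld = [t for t in no_go if t.reaction_time_ms is None]
    return {
        "success_go": len(hits) / len(go) if go else None,
        # Not applicable on a level without "no-go" stimuli (level 1).
        "success_no_go": len(withheld) / len(no_go) if no_go else None,
        "mean_rt_ms": (
            sum(t.reaction_time_ms for t in hits) / len(hits) if hits else None
        ),
    }


example_trials = [
    Trial(True, 500.0),
    Trial(True, None),    # missed target
    Trial(True, 700.0),
    Trial(False, None),   # correctly withheld
    Trial(False, 400.0),  # failed inhibition
]
animal_scores = score_animal_feeding_level(example_trials)
```

Returning `None` for the “no-go” rate on a level without distractors mirrors the statement above that this parameter does not apply to level 1.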

Toy Store Game

The third game, the Toy Store game, is designed to assess short-term episodic memory and is based on the WWW (“What”, “Where”, “When”) model for the evaluation of episodic-like memory (Clayton & Dickinson, 1998; Clayton et al., 2001), as applied in similar tasks (Eacott & Norman, 2004; Fajnerova et al., 2017; Vlcek et al., 2006). The task is aimed at episodic memory, specifically at the episodic buffer of working memory (as suggested by Baddeley, 2012), which is dedicated to linking information across domains to form integrated units of information with time sequencing (or episodic chronological ordering). Moreover, the episodic buffer is also assumed to have links to long-term memory (Baddeley, 2012).

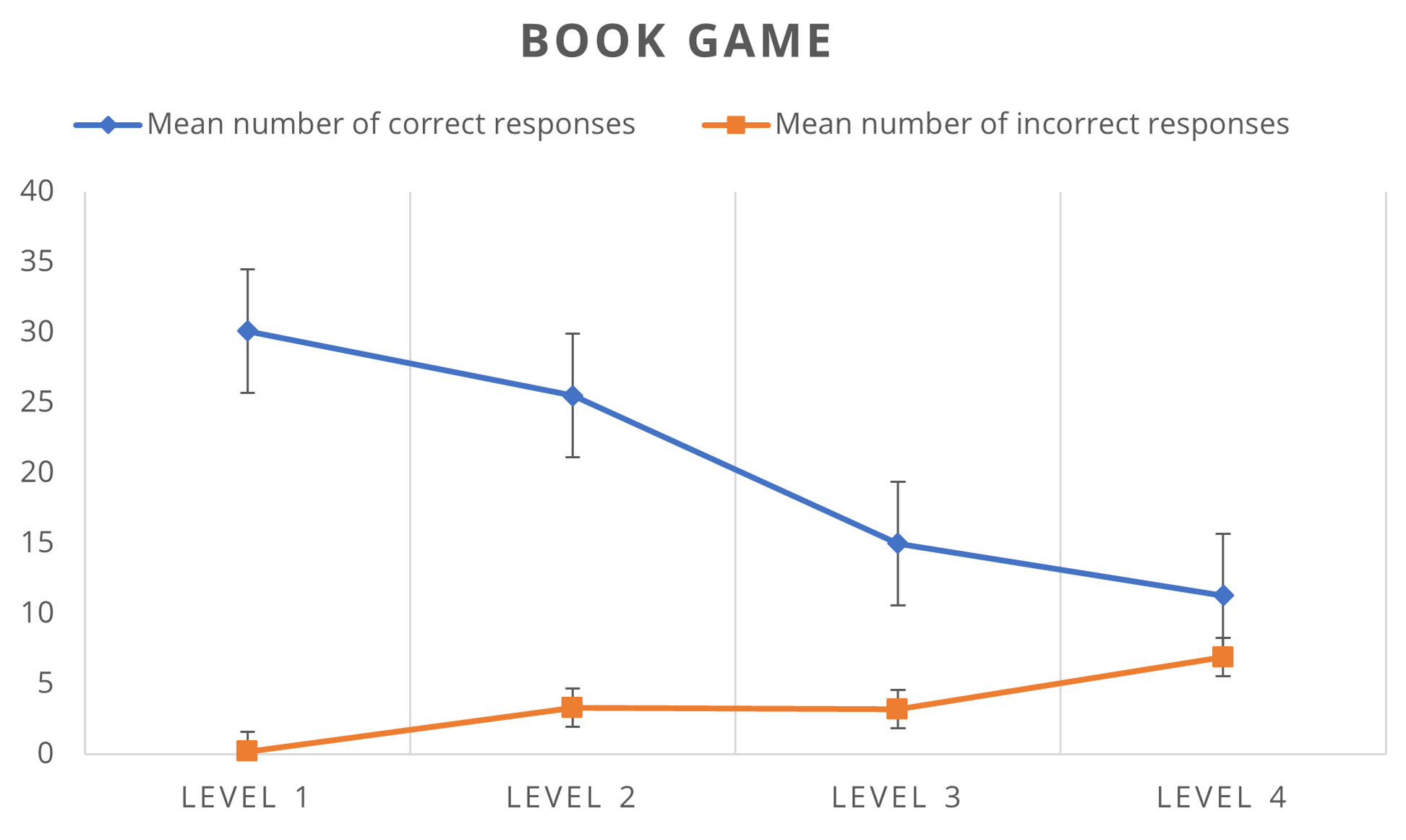

The game is designed as a shelf in a toy shop, where different items (toys) are placed in specific positions. The goal is to remember which objects were selected, in what order, and what their original positions on the shelf were. This task has three phases (Figure 4): in the first phase, the children are asked to pick toys from the shelf in a specified order following visual and verbal instructions and put them in a box (Step 1). In the second phase, the child is presented with an expanded set of toys in a box—original toys and distractors (other toys) and they are asked to choose the toys selected previously in the first phase (Step 2 – “What”). The correct set of toys is presented before the last phase, even if the child made mistakes in the second phase. In the last phase, the child is instructed to put the toys back in the correct positions on the shelf in the same order as they were picked at the beginning (Step 3 – “Where”, “When”). The total duration of all game levels is approximately 10 minutes. The number of objects and distractors increases at each level:

- Level 0: training

- Level 1: 3 targets / 3 distractors / 6 positions (3 out of 6 correct)

- Level 2: 4 targets / 4 distractors / 8 positions (4 out of 8 correct)

- Level 3: 5 targets / 5 distractors / 10 positions (5 out of 10 correct)

Figure 4. Toy Store Game Design: Step 1–3 (From Left to Right).

Based on the described steps (1–3), the following parameters are recorded during the game:

- Objects (“What”): number of correctly selected objects (toys remembered as originally selected) among the distractors;

- Positions (“Where”): number of objects placed in the correct positions among the distractor positions;

- Order (“When”): the precision of responses within the "Order" parameter (expressed as 1 = all correct or 0 = mistakes made) for each level.

The correct order of objects was automatically recorded only as “all correct” or “mistakes made” for each level; hence, the number of correct responses is not available, because one error in order can cause subsequent errors in order position. This is why this parameter was analyzed differently from the other two. To allow direct comparison, the categories of “What” and “Where” were also transformed to “all correct” levels (the highest level where no error was made).
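A minimal sketch of how the three Toy Store parameters could be derived for one level is given below. The data layout (lists of toy and shelf-position pairs) and the function name `score_toy_store_level` are assumptions for illustration, not the application's actual data model.

```python
def score_toy_store_level(picked, chosen, placed):
    """Score one level of a What-Where-When task.

    picked: list of (toy, position) in the order the toys were taken (Step 1)
    chosen: toys the child selected among the distractors (Step 2)
    placed: list of (toy, position) in the order the toys were returned (Step 3)
    """
    original_position = dict(picked)
    # "What": correctly recognized toys among the distractors.
    what = len(set(chosen) & set(original_position))
    # "Where": toys returned to their original shelf positions.
    where = sum(1 for toy, pos in placed if original_position.get(toy) == pos)
    # "When": recorded only as 1 (whole order correct) or 0 (any mistake).
    when = int([toy for toy, _ in placed] == [toy for toy, _ in picked])
    return {"what": what, "where": where, "when": when}


picked = [("bear", 2), ("car", 5), ("ball", 0)]
chosen = ["bear", "car", "doll"]
placed = [("bear", 2), ("ball", 0), ("car", 4)]
toy_scores = score_toy_store_level(picked, chosen, placed)
```

In this sketch, “When” is binary by construction, which matches the recording limitation described above.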

Paper and Pencil (Standard) Tasks

The following standard (paper and pencil) tasks were used in the study:

Bells test is a cancellation task used for the assessment of selective attention and executive functions and also for the diagnostics of unilateral spatial agnosia (Mancuso et al., 2019; Pureza et al., 2013). The goal is to find and mark all target stimuli (bells) placed among other distractors as fast as possible. The test material is in the form of a template containing 35 bells spread among 264 distractors on a paper sheet (299 black and white symbols depicting, in addition to bells, also horses, houses, and others). The tested person has no information about the total number of bells. The task ends once the person announces that she/he can no longer find any bells. For our purposes, there was no time limit, but the task can also be presented as time-limited when working with adults. The Bells test was used to test the preliminary construct validity of the Book game.

The Verbal fluency test was selected as a widespread tool, commonly used to assess cognitive performance, both in pedagogical and neuropsychological practice. It is part of various test batteries assessing cognitive functioning. Executive functions such as cognitive flexibility and inhibition control, as well as processing speed, have been reported as the strongest predictors of Verbal fluency test performance (Amunts et al., 2020). For that reason, we used this test to address the association with performance tested in the Animal Feeding game (“go” success rate). In the Czech Republic two types of verbal fluency tests are traditionally used – phonemic word fluency, requiring the participant to list words starting with a certain letter (most often N, K, and P), and semantic word fluency, where the task is to list words of a given category. The enumeration period is limited to one minute. For the purposes of this study, semantic word fluency with the categories Animals and Vegetables was used. The instructions were modified so that they were understandable for younger children (Cigler & Durmekova, 2018; Nikolai et al., 2015).

The Sentence repetition task is a simple verbal memory test based on sentence repetition that was used to test the association with the Toy Store game. Following the initial instructions, the administrator reads sentences of increasing difficulty to the child in a clear and audible manner. The task is to repeat each sentence in exactly the same words. The length and complexity increase with every sentence. Each correctly repeated sentence is awarded two points, and one point is awarded if the child made a mistake and corrected it. The task ends if the child makes uncorrected mistakes in two sentences in a row. The list of sentences used for our purposes contained 17 sentences and was adopted from the NEPSY battery (Davis & Matthews, 2010) and translated into the Czech language independently by two persons. This test was used in our previous study concentrating on memory assessment in preschool children (Pisova, 2020).
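The scoring and stopping rule of the Sentence repetition task can be written as a short sketch. The outcome labels (`"correct"`, `"self_corrected"`, `"error"`) are hypothetical names chosen for this example.

```python
POINTS = {"correct": 2, "self_corrected": 1, "error": 0}


def score_sentence_repetition(outcomes):
    """Sum points per sentence and stop after two uncorrected errors in a row."""
    total = 0
    consecutive_errors = 0
    for outcome in outcomes:
        total += POINTS[outcome]
        if outcome == "error":
            consecutive_errors += 1
        else:
            consecutive_errors = 0
        if consecutive_errors == 2:
            break  # discontinue rule: later sentences are not administered
    return total


max_score = score_sentence_repetition(["correct"] * 17)
```

With 17 sentences worth up to two points each, the maximum attainable score is 34.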

The methods listed above were selected to capture the overall cognitive performance of the tested children while not being overly demanding and remaining manageable in addition to the tested cognitive games. For this reason, the tasks were not selected to directly match the newly designed interactive cognitive games and the targeted cognitive functions, as the evaluation of construct validity was not the primary aim of the presented study. Moreover, some more fitting test methods were not available as validated tests with normative data for children (especially in the local region).

Data Analysis

Analysis was performed using Microsoft Excel and Jamovi 2.3.19.0. Demographic data were analyzed by computing means, standard deviations, and frequencies. Verification of normal distribution was made using the Shapiro-Wilk test. Since the data were not normally distributed, non-parametric methods were chosen for further analysis. Descriptive statistics describing the performance of children in individual tests were applied. Friedman’s test for repeated measures and subsequent post hoc tests (Durbin-Conover) were used to assess the effect of increasing difficulty levels of cognitive games on tested performance. A Spearman correlation analysis was used to assess the effect of age and for comparison of the performance tested using interactive games and standard test methods. The results were evaluated at the 0.05 significance level.
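For readers who wish to see what the two main statistics compute, the following is a minimal pure-Python sketch of the Friedman chi-square statistic (with tie correction) and the Spearman rank correlation. It is an illustration only: the reported analyses were run in Jamovi, the function names are hypothetical, and p-values (obtained from the chi-square distribution with k − 1 degrees of freedom for Friedman's test) are omitted.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_chi_square(rows):
    """rows[c][l] is the score of child c at level l; returns (statistic, df)."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        for level, rank in enumerate(average_ranks(row)):
            rank_sums[level] += rank
        tie_term += sum(t**3 - t for t in Counter(row).values())
    stat = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    stat /= 1 - tie_term / (n * k * (k * k - 1))  # correction for within-child ties
    return stat, k - 1


def spearman_rho(x, y):
    """Pearson correlation computed on the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Made-up scores for 4 children across 3 levels (not study data).
toy_rows = [[10, 8, 5], [12, 9, 7], [9, 6, 4], [11, 10, 3]]
toy_stat, toy_df = friedman_chi_square(toy_rows)
```

The sketch does not guard against degenerate input, such as every child scoring identically on all levels, where the tie correction is undefined.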

Results

The descriptive statistics of the study sample are presented in Table 1. Other results are presented in the following order: the game-based assessment (GBA; descriptives of the interactive games presented separately for each task), the standard test assessment (the results of the standardized paper and pencil tasks), and the comparisons between the interactive games and standard tasks. Finally, we present the effect of age on performance in both the individual games and the standard methods.

Table 1. Descriptives of the Sample.

| Category | N | Age in years (Min–Max) | Age in years (Mean) | Age in years (SD) |
|---|---|---|---|---|
| All | 22 | 4–8 | 5.9 | 1.4 |
| Boys | 11 | 4–8 | 6.4 | 1.6 |
| Girls | 11 | 4–7 | 5.5 | 1.2 |

Game-Based Assessment

Book Game

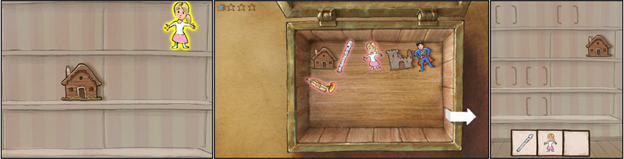

In the Book game, we analyzed the numbers of correct and incorrect responses at individual levels (for a detailed description see Methods).

Figure 5. Average Number of Correct and Incorrect Responses in Levels 1–4 of the Book Game.

Note. Error bars represent standard errors.

Descriptive statistics of the listed parameters can be found in Table A1 in the Appendix. The average number of correct responses decreased with every level, indicating increasing difficulty across levels. This assumption was verified using the nonparametric Friedman’s test, χ2(3) = 43.3, p < .001. The post-hoc analysis (Durbin-Conover) confirmed the difference between particular levels (for a detailed description of post hoc pairwise comparisons see Table A2). The increasing difficulty was also verified using Friedman’s test for the average number of incorrect responses (see Figure 5). The results were significant for this parameter as well, χ2(3) = 24.1, p < .001, but the post-hoc analysis (Table A2) did not confirm a significant difference between levels 2 and 3 in this cohort (for a more detailed description of test levels see Methods).

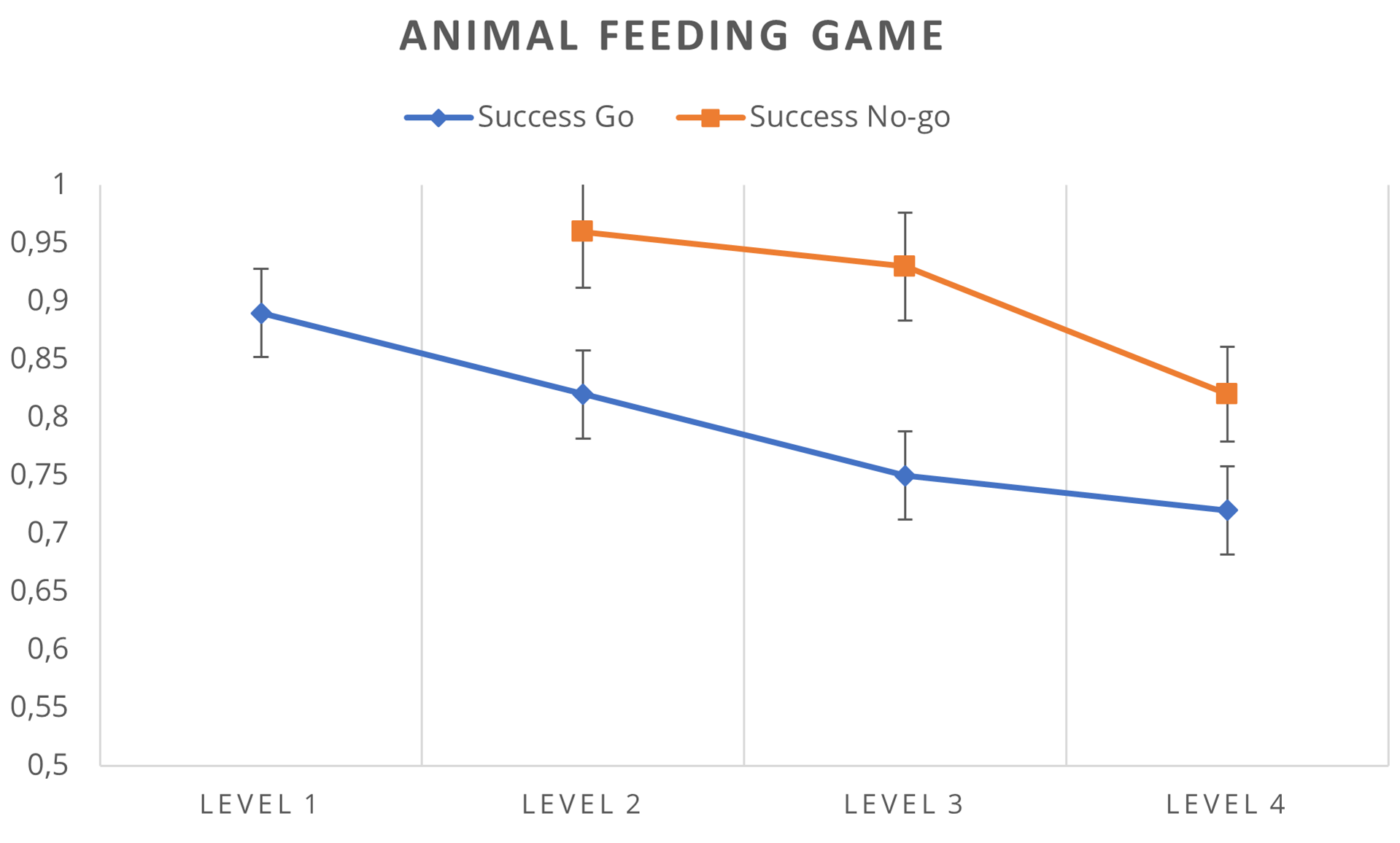

Animal Feeding Game

In this task, we analyzed the success rates of the reactions to “go” and “no-go” stimuli and the average reaction times calculated separately for each level (for detailed description see Methods).

Descriptive statistics for the selected parameters can be seen in Table A3. The average success rate (“go“ and “no-go”) decreased with increasing levels (see Figure 6), and we can therefore assume increasing difficulty across levels. This assumption was confirmed using the nonparametric Friedman’s test. The results were significant, Success rate “go“: χ2(3) = 23.1, p < .001; “no-go“: χ2(2) = 12.2, p = .002, indicating a difference in difficulty between levels. The post-hoc analysis (see Table A4) confirmed significant differences only between level 1 (level without “no-go“ stimuli) and the other levels (levels 2 to 4 with a different number of “go“ and “no-go“ stimuli), and between levels 2 and 4.

Figure 6. The Success Rate of “Go“ and “No-Go“ Reactions in Levels 1–4 of the Animal Feeding Game.

Note. Error bars represent standard errors.

On the other hand, the average reaction time increased with every level, and the difference between levels was verified using Friedman’s test, χ2(3) = 39.9, p < .001, and confirmed by the post-hoc analysis (Table A4).

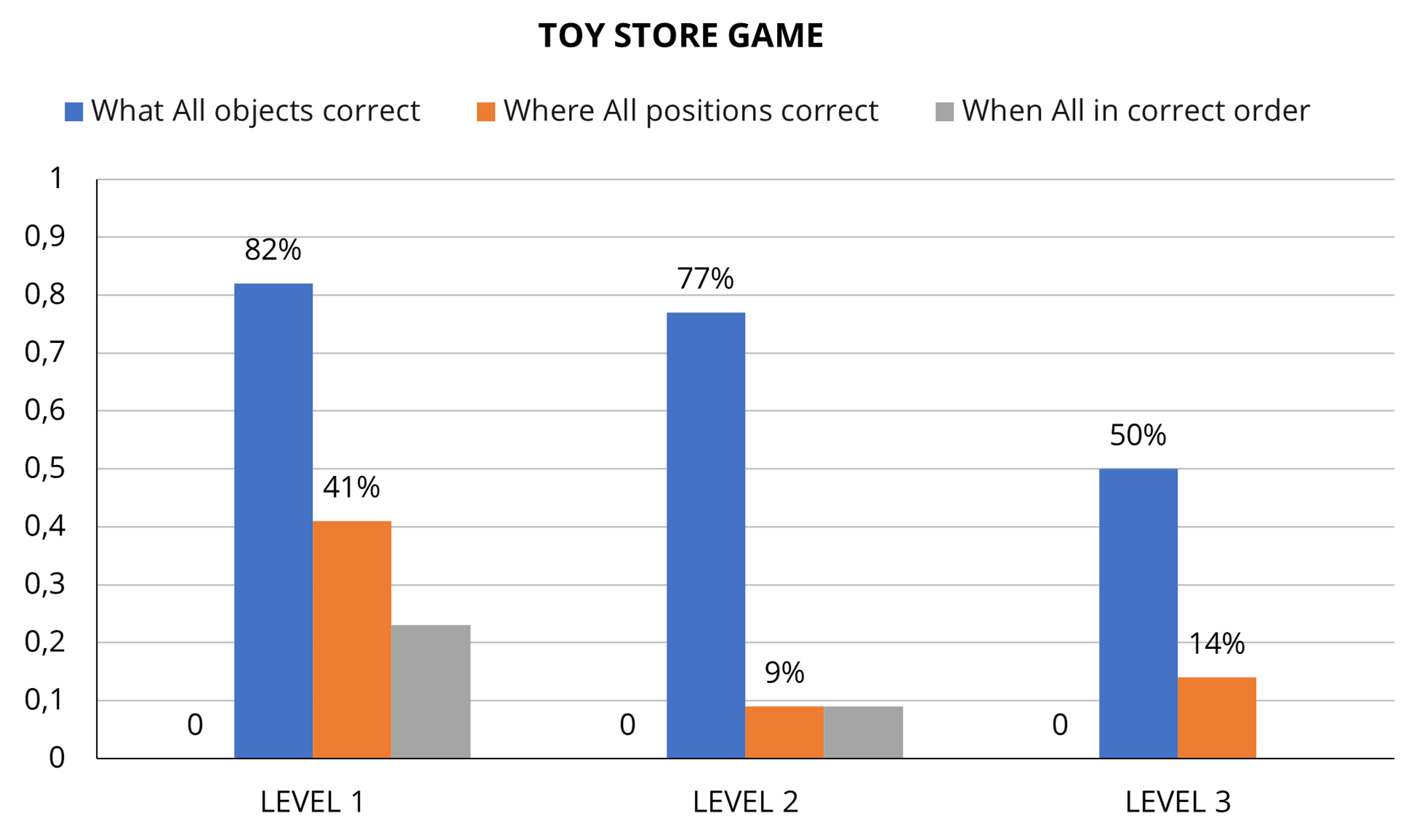

Toy Store Game

Each level of the Toy Store game involves the recollection of the following information: Objects (“What”), Positions (“Where”), and position Order (“When”; detailed description in Methods). The statistical analysis focused on (1) the number of correctly recollected items in each level and (2) the number of levels that were performed without a recollection error for each category (“What”, “Where”, “When”).

Table 2. Descriptives of the Correct Responses for Parameters What and Where of the Toy Store Game.

| Parameter | Level | Total number | Min–Max | M | SD |
|---|---|---|---|---|---|
| “What” parameter (Correct object) | Level 1 | 3 | 0–3 | 2.64 | 0.85 |
| | Level 2 | 4 | 0–4 | 3.50 | 1.10 |
| | Level 3 | 5 | 0–5 | 4.14 | 1.21 |
| “Where” parameter (Correct position) | Level 1 | 3 | 0–3 | 2.05 | 0.95 |
| | Level 2 | 4 | 0–4 | 2.14 | 1.17 |
| | Level 3 | 5 | 0–5 | 2.68 | 1.46 |

Note. The “When” parameter is not reported as it was recorded only as an “all correct level” (see Methods).

The average number of correct responses in remembering the designated objects (“What”) and their spatial position (“Where”) for levels 1 to 3 is shown in Table 2. The difference in the difficulty of levels was also analyzed in this task (using the nonparametric Friedman’s test) for both parameters separately. The difference between the tested game levels was significant for the parameter “What”, χ2(2) = 29.5; p < .001, while for the parameter “Where” the difference was not confirmed, χ2(2) = 3.77; p = .152.

To compare children’s performances in all parameters (“What”, “Where”, “When”), it was necessary to convert the results to categorical values as was the case with the parameter “When” (see above). The three types of errors were evaluated separately for each level, depending on whether the child managed to correctly determine all objects, their position, or their order (0 = at least one mistake made / 1 = all correct). For the purposes of further analysis, the number of the level reached without an error was assigned to each child. The results can be seen in Figure 7 showing the percentage of children who were able to finish the particular level (1–3) for the given parameter without errors.
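The conversion described above can be sketched as follows. The sketch assumes one reading of the text: each child has a 0/1 “all correct” flag per level, and the assigned value is the highest level completed without error, with 0 when no level was error-free. The function names are illustrative.

```python
def all_correct_level(flags_by_level):
    """Return the highest level with an 'all correct' flag, or 0 if none."""
    passed = [level for level, flag in flags_by_level.items() if flag == 1]
    return max(passed, default=0)


def percent_all_correct(children, level):
    """Percentage of children who completed the given level without errors."""
    return 100.0 * sum(child[level] for child in children) / len(children)


# Made-up flags for one parameter (e.g., "Where") in four children.
example_children = [
    {1: 1, 2: 1, 3: 0},
    {1: 1, 2: 0, 3: 0},
    {1: 0, 2: 0, 3: 0},
    {1: 1, 2: 1, 3: 1},
]
example_levels = [all_correct_level(child) for child in example_children]
```

The second function corresponds to the kind of percentage plotted per level and parameter, again under the stated assumption about how the flags are stored.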

The difficulty of the three parameters was analyzed separately using the Wilcoxon signed-rank test, and the post-hoc comparison confirmed a difference between the parameter “What” and other two parameters “Where” and “When”. However, no difference was found between the “Where” and “When” parameters (Table A5).

Figure 7. The Success Rate for Parameters “What”, “Where”, “When” in Levels 1–3 of the Toy Store Game.

Note. The percentage scores indicate the proportion of children who met the criterion (“all correct”) for the targeted parameter.

Standard Cognitive Assessment

The descriptives of paper and pencil tasks used in the study sample are presented in Table 3.

Table 3. Descriptives of Paper and Pencil Tasks.

| Task | N | Min–Max | M | SD |
|---|---|---|---|---|
| Sentence Repetition Task | 20 | 10–30 | 18.1 | 5.73 |
| Bells Test | 20 | 18–35 | 30.3 | 5.11 |
| Verbal fluency: Animals | 20 | 5–20 | 10.8 | 4.29 |
| Verbal fluency: Vegetables | 20 | 2–8 | 4.65 | 1.84 |

Note. The sample size does not match the GBA sample due to missing data in 2 participants.

Comparison Between Game-Based and Standard Methods

Bells test: The number of correct visual target symbols (bells) that the child was able to mark was calculated (descriptives in Table 3). As described above, the maximum score was 35. The achieved score was correlated with the number of correct responses (marking the target stimuli) in the Book game, as both tasks are designed to assess selective attention and are based on similar principles (searching for target stimuli among distractors). The identified moderate correlation between these two tasks was statistically significant (r = .587, p = .007).

Verbal fluency test: Success in this task is evaluated according to the total number of words that the child was able to list within the time limit. Based on studies of semantic fluency, it can be assumed that the category Vegetables is more complicated than the category Animals (Nikolai et al., 2015). This was also confirmed by our data (descriptives in Table 3). Performance on this task was compared with the success rates (“go” and “no-go”) in the Animal Feeding game (using the Spearman correlation coefficient). The results (Table 4) were significant (p < .05) for the success rate “go” and the category Vegetables in all levels of the Animal Feeding game (level 1: r = .453; level 2: r = .465; level 3: r = .456; level 4: r = .542), but not for the other category. For the category Animals, the only significant result was the correlation with the success rate “no-go” in level 4 of the Animal Feeding game (level 1: r = .214, p = .365; level 2: r = .124, p = .726; level 3: r = .202, p = .410; level 4: r = .304, p = .383).

Table 4. Correlation of Total Success Rate (“Go” & “No-Go”) in Levels 1–4 of the Animal Feeding Game With the Results of the Verbal Fluency Test (Categories “Animals” and “Vegetables”).

| Level | Animals statistic (r) | p | Vegetables statistic (r) | p |
|---|---|---|---|---|
| Level 1 | .214 | .365 | .453 | .045* |
| Level 2 | .124 | .726 | .465 | .039* |
| Level 3 | .202 | .410 | .456 | .043* |
| Level 4 | .304 | .383 | .542 | .014* |

Note. *p < .05

In addition, a possible association between the results of the Book game (the number of correctly marked symbols) and the Animal Feeding game (success rate “go”) was analyzed, as both games are performance-type tasks employing attention. The correlation (using the Spearman correlation coefficient) was significant, supporting the association between the two tasks’ results (r = .759, p < .001).

Sentence repetition task: In this task, the maximum number of points that can be achieved by repeating sentences is 34 (see Methods). The number of points achieved was calculated for every participant (descriptives in Table 3). The task results were correlated with the results of the Toy Store game (Step 2—remembering objects), but no significant correlation was found (r = .014, p = .952).

Effect of Age on the Tested Cognitive Performance

The correlation between age and performance tested in individual tasks is presented in Table 5. The main findings were as follows: Correlations with age were significant in all tested methods except for the Toy Store game. The category Animals in the Verbal fluency test, and the success of stopping the reaction during the Animal Feeding game for “no-go“ stimuli were also not associated with age.

Table 5. Correlation of Task Results With Age.

| Task | Category | r (Spearman) | p-value |
|---|---|---|---|
| Sentence repetition task | | .68 | < .001 |
| Bells test | | .53 | .016 |
| Verbal fluency | Animals | .38 | .097 |
| | Vegetables | .62 | .004 |
| Book game | Correct Symbols | .84 | < .001 |
| | Wrong Symbols | −.47 | .029 |
| Animal Feeding game | Success “go” | .82 | < .001 |
| | Success “no-go” | .01 | .960 |
| | Reaction Time | −.87 | < .001 |
| Toy Store game (# all correct in level values) | “What” (Object) | −.14 | .487 |
| | “Where” (Position) | .05 | .548 |
| | “When” (Order) | .18 | .429 |

Note. All parameters of the Toy Store game (“What”: Object, “Where”: Position, “When”: Order) were correlated in the form of “all correct in level” values (see Methods).

Usability Outcomes

Since one of the study goals was to optimize the functionality of the screening battery as such, all technical errors, misunderstandings of instructions, and other complications were recorded by the researcher during the testing. Based on this information, game modifications have been made, including the elimination of functional errors and adjustments to the output recordings (performance log) and game levels. The instructions (narrated by the in-game guide character) were also shortened based on the children’s verbal feedback: they were too repetitive, and some of the children described them as “boring” or “lengthy”. Overall, the games were well received by the children. This information is based on the short questioning at the end of the testing, where the children were asked about their experience playing the games. Children referred to them mostly with terms such as “fun” and “cute”, and wanted to play “one more time”.

Discussion

This pilot study focused on the usability testing of a newly developed battery of interactive games, intended for preliminary screening of selected cognitive functions in preschool children. The battery consists of three interactive games testing selected cognitive abilities: selective attention, inhibitory control, and episodic-like working memory. These targeted cognitive abilities have been selected as they overlap with particular executive functions, the maturation of which is critical for school readiness and performance (Huizinga et al., 2018).

The main outcome of the usability testing in the reported study is that the games were well received by the tested children, both in terms of the comprehensibility of the game instructions and their execution. The games’ usability was demonstrated in the tested sample of children of all ages, although an effect of age was not observed in all tested games due to some study limitations (see Limitations); thus, more rigorous testing of these effects is needed. In addition, correlations between the newly designed cognitive games and the standard cognitive tests were computed to address whether the developed games meet some of the preliminary requirements of construct validity that should be studied in more detail in future studies.

Effect of Age on Cognitive Performance

The research studies focusing on cognitive assessment in children show that cognitive functions represent a complex interconnected system (Rosenqvist et al., 2014) and that tested cognitive performance generally improves with age (Brüne & Brüne-Cohrs, 2006).

We have confirmed that tested performance improved with age in all standard tests selected for this study, except the Animals subtest of the Verbal fluency test. Based on the average number of listed words in each category and on in-person interaction with children during the assessment, we hypothesized that younger children may know more words from the category Animals because it is more attractive and may reflect their interests more than the category Vegetables. The higher variability in the category Animals (independent of age) also suggests that this category can be strongly affected by the child’s interests.

For interactive games, we found a correlation between age and performance in some of the tested parameters, but not in all of them. Two of the tested interactive games, the Book game and the Animal Feeding game, require the child to distinguish between stimuli while searching for a target object following a simple rule. This ability increases around the child’s third year (Atkinson & Braddick, 2012), and attention develops significantly mainly in preschool age (Hanania & Smith, 2010; Zupan et al., 2018). Indeed, improved performance with increasing age has been identified in all measured variables associated with attention control: performance in the Book game, and the correct (“go”) stimuli success rate and reaction time in the Animal Feeding game. However, the successful inhibition of the “no-go” reaction during the Animal Feeding game was not associated with age (see Table 5). This finding is in line with a study showing that the most significant developmental changes in inhibition control appear between the ages of 6–7 and 8–10 years (Pureza et al., 2013). As our study tested only children up to 8 years, we did not cover the crucial developmental period.

In addition, the Toy Store game performance did not show an association with age in any of the tested categories. We attribute this to the fact that the three tested parameters (“What”, “Where”, “When”) were recorded in the form of errors that are not directly comparable (see above). Therefore, it was necessary to choose a different type of analysis transforming the original error value (for each parameter separately) into the level number that the child achieves without error (“all correct” level), which might not be sensitive to the effect of age.

Relationship of GBA to Standard Methods

In the Book game, the number of correct responses (correctly marked symbols) during the given time limit (60 seconds) has been selected as the main performance variable. The Bells test (Gauthier et al., 1989) is designed in a similar way—the goal is to find as many target symbols as possible among distractors (so-called cancellation task). The moderate association between the number of correctly selected target symbols in the Bells test and the Book game supports the association between the cognitive constructs tested by the designed game aimed at testing a child’s ability to distinguish between various stimuli while searching for a target object following a simple rule (Pureza et al., 2013).

In the case of the Animal Feeding game, we performed a correlation analysis between the number of correctly selected target (“go”) stimuli and the Verbal fluency test results (number of named animals or vegetables). The association between these tasks is not so straightforward. However, it has been suggested that executive functions such as cognitive flexibility and inhibition control, as well as processing speed, are the strongest predictors of Verbal fluency performance (Amunts et al., 2020). This is also supported by our findings, as the correlation coefficients for all tested levels of the Animal Feeding game showed a moderate association with the number of vegetables named by the children. Importantly, the number of named animals did not correlate with the Animal Feeding game results. This could be associated with potentially reduced sensitivity of verbal fluency in naming animals, where higher scores can be observed in children showing personal interest in animals independent of their verbal fluency ability.

Moreover, we did identify a strong positive correlation between the correct scores of the Animal Feeding game (“go” stimuli) and performance (correct symbols) in the Book game. This supports the idea that performance in these two games requires not only selective attention but also effective visual search strategies and identification of rules-defined correct stimuli. This is also confirmed by a study (Pureza et al., 2013) showing that scores in the Bells test correlate with the maturation of executive functions.

Finally, we did not find a correlation between performance in the Toy Store game and the Sentence repetition task testing verbal memory in our sample. This result can be attributed to the fact that these two tasks tested different memory subtypes (episodic-like vs. verbal working memory), even though we originally assumed they contained a common working memory component. In the Toy Store game, the task is to select the correct objects and return them to the correct positions and in the same order in which they were previously selected (episodic buffer of working memory), whereas sentence repetition tests the child’s ability to remember and repeat sentences with increasing complexity (verbal working memory, the so-called phonological loop component in Baddeley’s model of working memory).

We chose an episodic memory paradigm for several reasons: the test mimics real-life situations and has high ecological validity, and its instructions are easy to understand. Moreover, we used the same paradigm in our previous experiments focusing on adults, which allows us to compare task performance across ontogeny.

The results of episodic memory research using WWW tasks (Bauer et al., 2012; Bauer & Leventon, 2013; Hayne & Imuta, 2011) showed that it is easier for children to remember an object’s identity than to remember its position, and that the order appears to be the most difficult. However, an error in the order or position automatically generates another error, unlike an incorrectly selected object. This can lead to the impression that the order or position (parameters “When” and “Where”) are more difficult than the “What” parameter. To avoid such an artifact, we evaluated the level the child reached without any errors. Even after applying this data conversion, the difference in the difficulty of parameters “What”, “Where”, “When” was confirmed by our study, showing higher scores in object identity recall in comparison to position and order. This can be explained by greater complexity in remembering the order and position than in the simple memory of the objects presented. Please note that in the Toy Store game the object identity is selected in a separate phase, while the order and position are selected as a combination, which makes the task more demanding.

Serious Games in Cognitive Screening in Children—Future Directions

We aimed to develop a set of interactive games for cognitive screening suitable both for preschool and younger school-age children. As children were able to complete all the games without any problems, we can assume that the games were manageable for the children of the targeted age and the instructions were clear to them. Moreover, children described the games as engaging and attractive. This was also confirmed by interviewing individual children. Verbal feedback from children allowed us to improve some game features (e.g., we changed the length of particular levels in the Book game (attention), reduced the number of stimuli in the Animal Feeding game for the next stage of testing, and also modified the recording of some data parameters).

Finally, the gradual change in performance with increasing game level, confirmed in all tested cognitive games, suggests that the games were well designed in terms of their difficulty for the targeted age group. This feature is essential for screening cognitive performance in children of various ages. The above-reported correlations between the cognitive games and the standard test methods suggest that our interactive battery tests the targeted cognitive domains; however, the construct validity of the individual games has yet to be properly addressed in a future study.

The next step will be the optimization of the screening battery, its validation through widespread testing in normative samples in schools and kindergartens, and its expansion to assess more complex cognitive functions. For that reason, the final form of this cognitive battery already incorporates an additional game focused on the assessment of social cognition, which is being tested in an ongoing study to address this developing cognitive skill.

Limitations

One of the limitations of this pilot study is its small sample size. The study was conducted during the COVID-19 pandemic lockdowns, when the possibility of testing in kindergartens or elementary schools was limited; for that reason, the testing was carried out individually, in a more time-consuming way. As this study was conducted with preschool children, some of the results may have been affected by fluctuating motivation or by interaction with an unfamiliar person (the researcher); a larger sample would therefore be beneficial.

Another limitation is that the children’s previous experience with touch screens was not recorded. This question has been included in subsequent testing. Recent research (Jin & Lin, 2022) indicates that children who spend more time on a touch screen have faster reaction times and greater accuracy in a game-like attention task (the Attentional Network Task, ANT-C). On the other hand, their performance was weaker in the executive function component of the same task.

In addition, the parent’s highest level of education and the number of siblings of the tested child, including sibling order, were recorded, but this information was not processed in detail, given the research objectives and the small sample size. However, we consider it appropriate to explore the possible relationship between these factors and children’s performance in the follow-up study.

Some studies have found gender differences, for example in tests of episodic and semantic memory (K. A. Willoughby et al., 2012) or in tests of verbal fluency, verbal comprehension, and graphical reproduction (Kraft & Nickel, 1995), while other studies show that gender differences in cognitive development are only minimal (Ardila et al., 2011; Hyde, 2016). The unbalanced distribution of age between girls and boys prevented us from analyzing the possible effect of gender on cognitive performance. Although gender differences in performance were not a priority of this pilot study, they may be addressed in future studies, as the findings of previous studies are ambiguous.

Another limitation of the study is associated with the selection of paper-and-pencil tasks. While the selection was guided predominantly by the cognitive games tested in this study, other factors also affected it, such as the availability of methods validated for the child population and the time demands of the methods used. Therefore, some of the selected methods do not fully correspond to the constructs targeted by the tested cognitive games, and this limitation should be addressed in the follow-up study.

Conclusions

The primary goal of this study was to verify the usability of the newly designed battery of cognitive games intended for cognitive screening in preschool and younger school-age children, and the comprehensibility of its instructions. Overall, the tasks are comprehensible and attractive to children, even at the age of 4 years. Although correlations of game results with standard tests suggest that our interactive battery evaluates the targeted cognitive domains, the construct validity of the individual games should be thoroughly addressed in future studies. In conclusion, the preliminary results of this pilot study indicate that the battery is feasible in its current form and represents an easy and attractive tool for children’s cognitive screening.

Conflict of Interest

The authors have no conflicts of interest to declare.

Acknowledgement

The research leading to the presented results was conducted with the financial support of the Technology Agency of the Czech Republic (project TL02000561) and the Grant Agency of the Czech Republic (project No. 22-10493S), and with institutional support from the Ministry of Health CZ – DRO (NUDZ, 00023752). The game design of the presented tasks was developed by the team of programmers and designers of the interactive studio 3dsense, namely Jiří Wild, Filip Vorel, and Jakub Řidký, with the financial assistance provided by this funding.

Appendix

Table A1. Descriptives for the Number of Correct and Incorrect Responses in Levels 1 to 4 of the Book Game.

| Parameter | Level | Min–Max | M | SD |
|---|---|---|---|---|
| Correct Responses | Level 1 | 14–55 | 30.1 | 12.1 |
| | Level 2 | 7–60 | 25.5 | 12.5 |
| | Level 3 | 7–21 | 15.0 | 4.7 |
| | Level 4 | 3–27 | 11.3 | 5.9 |
| Incorrect Responses | Level 1 | 0–3 | 0.2 | 0.7 |
| | Level 2 | 0–29 | 3.3 | 8.3 |
| | Level 3 | 0–22 | 3.2 | 6.1 |
| | Level 4 | 0–36 | 6.9 | 8.8 |

Table A2. Pairwise Comparison of Levels 1–4 for the Number of Correct and Incorrect Responses in the Book Game.

| Levels | Levels | Correct responses: Statistic | Correct responses: p | Incorrect responses: Statistic | Incorrect responses: p |
|---|---|---|---|---|---|
| Level 1 | Level 2 | 2.28 | .027 | 2.44 | .018 |
| Level 1 | Level 3 | 7.86 | < .001 | 2.62 | .011 |
| Level 1 | Level 4 | 10.82 | < .001 | 6.15 | < .001 |
| Level 2 | Level 3 | 5.58 | < .001 | 0.18 | .857 |
| Level 2 | Level 4 | 8.54 | < .001 | 3.71 | < .001 |
| Level 3 | Level 4 | 2.96 | .004 | 3.52 | < .001 |

Table A3. Descriptive Statistics for Success Rates ('Go' and 'No-Go') and Reaction Time in the Animal Feeding Game.

| Parameter | Level | Min–Max | M | SD |
|---|---|---|---|---|
| Success Rate 'Go' | Level 1 | 0.40–1.00 | 0.89 | 0.18 |
| | Level 2 | 0.36–1.00 | 0.82 | 0.19 |
| | Level 3 | 0.00–1.00 | 0.75 | 0.27 |
| | Level 4 | 0.00–1.00 | 0.72 | 0.27 |
| Success Rate 'No-Go' | Level 1 | | | |
| | Level 2 | 0.67–1.00 | 0.96 | 0.10 |
| | Level 3 | 0.71–1.00 | 0.93 | 0.09 |
| | Level 4 | 0.33–1.00 | 0.82 | 0.19 |
| Reaction Time (average) | Level 1 | 0.63–1.06 | 0.82 | 0.13 |
| | Level 2 | 0.72–1.01 | 0.87 | 0.09 |
| | Level 3 | 0.74–1.02 | 0.89 | 0.08 |
| | Level 4 | 0.79–1.05 | 0.95 | 0.08 |

Table A4. Pairwise Comparison of the Success Rate of 'Go' Reactions and Average Reaction Time in Levels 1–4 of the Animal Feeding Game.

| Levels | Levels | Success rate 'Go': Statistic | Success rate 'Go': p | Average reaction time: Statistic | Average reaction time: p |
|---|---|---|---|---|---|
| Level 1 | Level 2 | 2.49 | .016 | 2.70 | .009 |
| Level 1 | Level 3 | 4.39 | < .001 | 4.82 | < .001 |
| Level 1 | Level 4 | 5.41 | < .001 | 9.83 | < .001 |
| Level 2 | Level 3 | 1.90 | .062 | 2.12 | .038 |
| Level 2 | Level 4 | 2.93 | .005 | 7.13 | < .001 |
| Level 3 | Level 4 | 1.02 | .310 | 5.01 | < .001 |

Table A5. Pairwise Comparison of Parameters What, Where, When of the Toy Store Game. Wilcoxon Signed-Rank Test (W) for Paired Samples.

| Parameters | | | Statistic | p |
|---|---|---|---|---|
| All Objects Correct | - | All Positions Correct | 167.5 | < .001 |
| All Objects Correct | - | All Order Correct | 199.0 | < .001 |
| All Positions Correct | - | All Order Correct | 44.0 | .186 |
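For readers unfamiliar with the statistic reported in Table A5, the following minimal sketch shows one common way of computing a Wilcoxon W for paired samples: the sum of the ranks of the positive differences, with zero differences dropped and tied absolute differences given their average rank. The convention and software the authors actually used are not stated here, so this is an assumption, and the scores below are invented for illustration only; they are not study data.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_w(x: Sequence[float], y: Sequence[float]) -> float:
    """Sum of ranks of the positive paired differences (x - y), zeros dropped."""
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    return sum(r for d, r in zip(diffs, ranks) if d > 0)


# Invented errorless-level scores for seven hypothetical children.
objects_correct = [4, 3, 4, 2, 3, 4, 1]
positions_correct = [2, 3, 1, 2, 1, 3, 2]

w = wilcoxon_w(objects_correct, positions_correct)
print(w)  # 13.5
```

Fractional values of W, such as the 167.5 in the first row of the table, can arise when tied differences share an averaged rank.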

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Copyright © 2024 Markéta Jablonská, Iveta Fajnerová, Tereza Nekovářová