The use of artificial intelligence in mental health services in Turkey: What do mental health professionals think?

Vol. 18, No. 1 (2024)

Artificial intelligence (AI)-supported applications have become increasingly prevalent in health care practice, and mental health services are no exception. AI applications can be employed at various stages of mental health services and in different roles. This study aims to understand the potential advantages and disadvantages of using AI in mental health services and to explore its future roles and outcomes through the opinions of mental health professionals engaged with AI. To this end, we conducted a qualitative study consisting of semi-structured interviews with 13 mental health professionals who have expertise in AI, followed by a content analysis of the interview transcripts. We concluded that the use of AI in mental health services has advantages and disadvantages for clients, the profession itself, and experts. Our study emphasized four findings. Firstly, the participants tended to hold positive opinions about using AI in mental health services. Increased satisfaction, wider availability of mental health services, fewer expert-driven problems, and reduced workload were among the primary advantages. Secondly, the participants stated that AI could not replace a clinician but could serve a functional role as an assistant. Thirdly, however, they were skeptical about the notion that AI would radically transform mental health services. Lastly, the participants expressed limited views on ethical and legal issues surrounding data ownership, the ‘black box’ problem, algorithmic bias, and discrimination. Although our research has limitations, we expect that AI will play an increasingly important role in mental health care services.

mental health; artificial intelligence; technology-assisted psychotherapies; machine learning; virtual therapist

Mücahit Gültekin

Department of Psychological Counselling and Guidance, Afyon Kocatepe University, Afyonkarahisar, Turkey

Mücahit Gültekin (Ph.D.) is an assistant professor in the field of Guidance and Psychological Counseling at Afyon Kocatepe University. He has published books, book chapters, and articles on topics such as cyberpsychology, artificial intelligence and psychology, gender, and political psychology.

Meryem Şahin

Department of Psychology, Afyon Kocatepe University, Afyonkarahisar, Turkey

Meryem Şahin (Ph.D.) is an assistant professor in the Department of Psychology at Afyon Kocatepe University, Turkey. Her research interests include positive psychology, the psychology of religion, and drug addiction. She has several publications on these topics.

Abd-alrazaq, A. A., Alajlani, M., Alalwan, A. A., Bewick, B. M., Gardner, P., & Househ, M. (2019). An overview of the features of chatbots in mental health: A scoping review. International Journal of Medical Informatics, 132, Article 103978, https://doi.org/10.1016/j.ijmedinf.2019.103978

Aboujaoude, E., Gega, L., Parish, M. B., & Hilty, D. M. (2020). Editorial: Digital interventions in mental health: Current status and future directions. Frontiers Psychiatry, 11, Article 111. https://doi.org/10.3389/fpsyt.2020.00111

Ahmed, A., Ali, N., Aziz, S., Abd-alrazaq, A. A., Hassan, A., Khalifa Elhusein, M. B., Ahmed, M., Ahmed, M. A. S., & Househ, M. (2021). A review of mobile chatbot apps for anxiety and depression and their self-care features. Computer Methods and Programs in Biomedicine Update, 1, Article 100012. https://doi.org/10.1016/j.cmpbup.2021.100012

Aktan, M. E., Turhan, Z., & Dolu, İ. (2022). Attitudes and perspectives towards the preferences for artificial intelligence in psychotherapy. Computers in Human Behavior, 133, Article 107273. https://doi.org/10.1016/j.chb.2022.107273

Allen, B., Agarwal, S., Coombs, L., Wald, C., & Dreyer, K. (2021). 2020 ACR Data Science Institute Artificial Intelligence Survey. Journal of the American College of Radiology, 18(8), 1153–1159. https://doi.org/10.1016/j.jacr.2021.04.002

Al-Medfa, M. K., Al-Ansari, A. M. S., Darwish, A. H., Qreeballa, T. A., & Jahrami, H. (2023). Physicians’ attitudes and knowledge toward artificial intelligence in medicine: Benefits and drawbacks. Heliyon, 9(4), Article e14744. https://doi.org/10.1016/j.heliyon.2023.e14744

Anthes, E. (2016). Mental health: There’s an app for that. Nature, 532(7597), 20–23. https://doi.org/10.1038/532020a

Ardito, R. B., & Rabellino, D. (2011). Therapeutic alliance and outcome of psychotherapy: Historical excursus, measurements, and prospects for research. Frontiers in Psychology, 2, Article 270. https://doi.org/10.3389/fpsyg.2011.00270

Barrett, M. S., Chua, W.-J., Crits-Christoph, P., Gibbons, M. B., & Thompson, D. (2008). Early withdrawal from mental health treatment: Implications for psychotherapy practice. Psychotherapy: Theory, Research, Practice, Training, 45(2), 247–267. https://doi.org/10.1037/0033-3204.45.2.247

Bazeley, P., & Jackson, K. (2013). Qualitative data analysis with NVivo (2nd ed.). SAGE Publications.

Bennett, C. C., & Doub, T. W. (2016). Expert systems in mental health care: AI applications in decision-making and consultation. In D. D. Luxton (Ed.), Artificial intelligence in behavioral and mental health care (pp. 27–51). Elsevier Academic Press. https://doi.org/10.1016/B978-0-12-420248-1.00002-7

Bickman, L. (2020). Improving mental health services: A 50-year journey from randomized experiments to artificial intelligence and precision mental health. Administration and Policy in Mental Health and Mental Health Services Research, 47, 795–843. https://doi.org/10.1007/s10488-020-01065-8

Bilge, Y., Gül, E., & Birçek, N. I. (2020). Bir sosyal fobi vakasında bilişsel davranışçı terapi ve sanal gerçeklik kombinasyonu [Cognitive behavioral therapy and virtual reality combination in a case of social phobia]. Bilişsel Davranışçı Psikoterapi ve Araştırmalar Dergisi, 9(2), 158–165. https://doi.org/10.5455/JCBPR.61718

Blease, C., Locher, C., Leon-Carlyle, M., & Doraiswamy, M. (2020). Artificial intelligence and the future of psychiatry: Qualitative findings from a global physician survey. Digital Health, 6, 1–18. https://doi.org/10.1177/2055207620968355

Braga, A., & Logan, R. K. (2019). AI and the singularity: A fallacy or a great opportunity? Information, 10(2), Article 73. https://doi.org/10.3390/info10020073

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Broadbent, E. (2017). Interactions with robots: The truths we reveal about ourselves. Annual Review of Psychology, 68, 627–652. https://doi.org/10.1146/annurev-psych-010416-043958.

Brown, C. H. (2020). Three flavorings for a soup to cure what ails mental health services. Administration and Policy in Mental Health and Mental Health Services Research, 47, 844–851. https://doi.org/10.1007/s10488-020-01060-z

Buck, C., Doctor, E., Hennrich, J., Jöhnk, J., & Eymann, T. (2022). General practitioners’ attitudes toward artificial intelligence–enabled systems: Interview study. Journal of Medical Internet Research, 24(1), Article e28916. https://doi.org/10.2196/28916

Burns, M. N., Begale, M., Duffecy, J., Gergle, D., Karr, C. J., Giangrande, E., & Mohr, D. C. (2011). Harnessing context sensing to develop a mobile intervention for depression. Journal of Medical Internet Research, 13(3), Article e55. https://doi.org/10.2196/jmir.1838

Bzdok, D., & Meyer-Lindenberg, A. (2018). Machine learning for precision psychiatry: Opportunities and challenges. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 3(3), 223–230. https://doi.org/10.1016/j.bpsc.2017.11.007

Carlbring, P., Hadjistavropoulos, H., Kleiboer, A., & Andersson, G. (2023). A new era in Internet interventions: The advent of Chat-GPT and AI-assisted therapist guidance. Internet Interventions, 32, 100621. https://doi.org/10.1016/j.invent.2023.100621

Cao, B., Zheng, L., Zhang, C., Yu, P. S., Piscitello, A., Zulueta, J., Ajilore, O., Ryan, K., & Leow, A. D. (2017). DeepMood: Modeling mobile phone typing dynamics for mood detection. In KDD ’17: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 747–755). https://doi.org/10.1145/3097983.3098086

Capecci, M., Pepa, L., Verdini, F., & Ceravolo, M. G. (2016). A smartphone-based architecture to detect and quantify freezing of gait in Parkinson’s disease. Gait & Posture, 50, 28–33. https://doi.org/10.1016/j.gaitpost.2016.08.018

Carvalho, L. D., & Pianowski, G. (2019). Digital phenotyping and personality disorders: A necessary relationship in the digital age. Psicologia: Teoria e Prática, 21(2), 122–133. http://dx.doi.org/10.5935/1980-6906/psicologia.v21n2p153-171

Cosgrove, L., Karter, J. M., McGinley, M., & Morril, Z. (2020). Digital phenotyping and digital psychotropic drugs: Mental health surveillance tools that threaten human rights. Health and Human Rights Journal, 22(2), 33–39. https://pubmed.ncbi.nlm.nih.gov/33390690/

Cavallo, F., Esposito, R., Limosani, R., Manzi, A., Bevilacqua, R., Felici, E., Di Nuovo, A., Cangelosi, A., Lattanzio, F., & Dario, P. (2018). Robotic services acceptance in smart environments with older adults: User satisfaction and acceptability study. Journal of Medical Internet Research, 20(9), Article e264. https://doi.org/10.2196/jmir.9460

Cecula, P., Yu, J., Dawoodbhoy, F. M., Delaney, J., Tan, J., Peacock, I., & Cox, B. (2021). Applications of artificial intelligence to improve patient flow on mental health inpatient units – Narrative literature review. Heliyon, 7(4), Article e06626. https://doi.org/10.1016/j.heliyon.2021.e06626

Cheng, S. W., Chang, C. W., Chang, W. J., Wang, H. W., Liang, C. S., Kishimoto, T., Chang, J. P. C., Kuo Kuan-Pin Su, J. S. (2023). The now and future of ChatGPT and GPT in psychiatry. Psychiatry and Clinical Neuroscience, 77(11), 592–596. https://doi.org/10.1111/pcn.13588

Chew, H. S. J., & Achananuparp, P. (2022). Perceptions and needs of artificial intelligence in health care to increase adoption: Scoping review. Journal of Medical Internet Research, 24(1), Article e32939. https://doi.org/10.2196/32939

Coeckelbergh, M. (2011). You, robot: On the linguistic construction of artificial others. AI & Society, 26, 61–69. https://doi.org/10.1007/s00146-010-0289-z

Connolly, S. L., Kuhn, E., Possemato, K., & Torous, J. (2021). Digital clinics and mobile technology implementation for mental health care. Current Psychiatry Reports, 23, Article 38. https://doi.org/10.1007/s11920-021-01254-8

Cresswell, K., Cunnigham-Burley, S., & Sheikh, A. (2018). Health care robotics: Qualitative exploration of key challenges and future directions. Journal of Medical Internet Research, 20(7), Article e10410. https://doi.org/10.2196/10410

Creswell, J. W. (2012). Educational research: Planning, conducting, and evaluating quantitative and qualitative research (4th ed.). Pearson Education.

Creswell, J. W. (2013). Qualitative inquiry & research design: Choosing among five approaches (3rd ed.). SAGE Publications.

D’Alfonso, S. (2020). AI in mental health. Current Opinion in Psychology, 36, 112–117. https://doi.org/10.1016/j.copsyc.2020.04.005

Damiano, L., & Dumouchel, P. (2018). Anthropomorphism in human–robot co-evolution. Frontiers in Psychology, 9, Article 468. https://doi.org/10.3389/fpsyg.2018.00468

Damiano, L., & Dumouchel, P. (2020). Emotions in relation. Epistemological and ethical scaffolding for mixed human–robot social ecologies. Humana Mente, 13(37), 181–206. https://philpapers.org/rec/DAMEIR

Darling, K., Nandy, P., & Breazeal, C. (2015). Empathic concern and the effect of stories in human-robot interaction. In 24th IEEE International Symposium on Robot and Human Interactive Communication (pp. 770–775). https://doi.org/10.1109/ROMAN.2015.7333675

de Visser, E. J., Monfort, S. S., McKendrick, R., Smith, M. A. B., McKnight, P. E., Krueger, F., & Parasuraman, R. (2016). Almost human: Anthropomorphism increases trust resilience in cognitive agents. Journal of Experimental Psychology: Applied, 22(3), 331–349. https://doi.org/10.1037/xap0000092

Doi, H. (2020). Digital phenotyping of autism spectrum disorders based on color information: Brief review and opinion. Artificial Life and Robotics, 25, 329–334. https://doi.org/10.1007/s10015-020-00614-6

Doraiswamy, P. M., Blease, C., & Bodner, K. (2020). Artificial intelligence and the future of psychiatry: Insights from a global physician survey. Artificial Intelligence in Medicine, 102, Article 101753. https://doi.org/10.1016/j.artmed.2019.101753

Duffy, B. R. (2003). Anthropomorphism and the social robot. Robotics and Autonomous Systems, 42(3–4), 177–190. https://doi.org/10.1016/S0921-8890(02)00374-3

Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864–886. https://doi.org/10.1037/0033-295X.114.4.864

Erebak, S. (2018). Attitudes toward potential robot coworkers: An experimental investigation on anthropomorphism and caregivers’ trust, work intention and preference of level of automation [Unpublished master’s thesis]. University of Marmara.

Fan, S. (2020). Önemli sorular yapay zeka yerimizi alacak mı? 21. yüzyıl için bir rehber [Will AI replace us? A primer for the 21st century] (İ. G. Çıgay, Trans.). Hep Kitap. (Original work published 2019).

Feijt, M., de Kort, Y., Westerink, J., Bierbooms, J., Bongers, I., & IJsselsteijn, W. (2023). Integrating technology in mental healthcare practice: A repeated cross-sectional survey study on professionals’ adoption of digital mental health before and during COVID-19. Frontiers in Psychiatry, 13, Article 1040023. https://doi.org/10.3389/fpsyt.2022.1040023

Festerling, J., & Siraj, I. (2021). Anthropomorphizing technology: A conceptual review of anthropomorphism research and how it relates to children’s engagements with digital voice assistants. Integrative Psychological and Behavioral Science, 56, 709–738. https://doi.org/10.1007/s12124-021-09668-y

Fiske, A., Henningsen, P., & Buyx, A. (2019). Your robot therapist will see you now: Ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. Journal of Medical Internet Research, 21(5), Article e13216. https://doi.org/10.2196/13216

Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Mental Health, 4(2), Article e19. https://doi.org/10.2196/mental.7785

Fong, T., Nourbakhsh, I., & Dautenhahn, K. (2003). A survey of socially interactive robots. Robotics and Autonomous Systems, 42(3–4), 143–166. https://doi.org/10.1016/S0921-8890(02)00372-X

Fulmer, R., Joerin, A., Gentile, B., Lakerink, L., & Rauws, M. (2018). Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: Randomized controlled trial. JMIR Mental Health, 5(4), Article e64. https://doi.org/10.2196/mental.9782

Glesne, C. (2011). Becoming qualitative researchers (4th ed.). Pearson Education.

Gordjin, B., & ten Have, H. (2023). ChatGPT: Evolution or revolution? Medicine, Health Care and Philosophy, 26, 1–2 https://doi.org/10.1007/s11019-023-10136-0

Graham, S., Depp, C., Lee, E. L., Nebeker, C., Tu, X., Kim, H. C., & Jeste, D. V. (2019). Artificial intelligence for mental health and mental illnesses: An overview. Current Psychiatry Reports, 21(11), Article 116. https://doi.org/10.1007/s11920-019-1094-0

Gültekin, M. (2022). Human-social robot interaction, anthropomorphism and ontological boundary problem in education. Psycho-Educational Research Reviews, 11(3), 751–773. https://doi.org/10.52963/PERR_Biruni_V11.N3.11

Guthrie, S. E. (1993). Faces in the clouds: A new theory of religion. Oxford University Press.

Güvercin, C. H. (2020). Tıpta yapay zekâ ve etik [Artificial Intelligence in medicine and ethics]. In Ekmekci, P. E., (Ed.). Yapay Zekâ ve Tıp Etiği [Artificial Intelligence and Medical Ethics] (pp. 7–13). Türkiye Klinikleri.

Guzman, A. L. (2020). Ontological boundaries between humans and computers and the implications for human-machine communication. Human-Machine Communication, 1, 37–54. https://doi.org/10.30658/hmc.1.3

Haggadone, B. A., Banks, J., & Koban, K. (2021). Of robots and robotkind: Extending intergroup contact theory to social machines. Communication Research Reports, 38(3), 161–171. https://doi.org/10.1080/08824096.2021.1909551

Hollis, C., Morriss, R., Martin, J., Amani, S., Cotton, R., Denis, M., & Lewis, S. (2015). Technological innovations in mental healthcare: Harnessing the digital revolution. British Journal of Psychiatry, 206(4), 263–265. https://doi.org/10.1192/bjp.bp.113.142612

Hopster, J. (2021). What are socially disruptive technologies? Technology in Society, 67, Article 101750. https://doi.org/10.1016/j.techsoc.2021.101750

Horn, R. L., & Weisz, J. R. (2020). Can artificial intelligence improve psychotherapy research and practice? Administration and Policy in Mental Health and Mental Health Services Research, 47, 852–855. https://doi.org/10.1007/s10488-020-01056-9

Hudlicka, E. (2016). Virtual affective agents and therapeutic games. In D. L. Luxton (Ed.), Artificial intelligence in behavioral and mental health care (pp. 81–115). Elsevier Academic Press.

Hung, L., Liu, C., Woldum, E., Au-Yeung, A., Berndt, A., Wallsworth, C., Horne, N., Gregorio, M., Mann, J., & Chaudhury, H. (2019). The benefits of and barriers to using a social robot PARO in care settings: A scoping review. BMC Geriatrics, 19, Article 232. https://doi.org/10.1186/s12877-019-1244-6

Inkster, B., Sarda, S., & Subramanian, V. (2018). An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: Real-world data evaluation mixed-methods study. JMIR mHealth uHealth, 6(11), Article e12106. https://doi.org/10.2196/12106

Jacobson, N. C., Summers, B., & Wilhelm, S. (2020). Digital biomarkers of social anxiety severity: Digital phenotyping using passive smartphone sensors. Journal of Medical Internet Research, 22(5), Article e16875. https://doi.org/10.2196/16875

Jan, A., Meng, H., Gaus, Y. F., & Zhang, F. (2018). Artificial intelligent system for automatic depression level analysis through visual and vocal expressions. İEEE Transactions Cognitive Develop Systems, 10(3), 668–680. https://doi.org/10.1109/TCDS.2017.2721552

Janssen, R. J., Maurao-Miranda, J., & Schnack, H. G. (2018). Making individual prognoses in psychiatry using neuroimaging and machine learning. Biological Psychiatry Cognitive Neuroscience and Neuroimaging, 3(9), 798–808. https://doi.org/10.1016/j.bpsc.2018.04.004

Joerin, A., Rauws, M., & Ackerman, M. (2019). Psychological artificial intelligence service, Tess: Delivering on-demand support to patients and their caregivers: Technical report. Cureus, 11(1), Article e3972. https://doi.org/10.7759/cureus.3972

Joy, M. (2005). Humanistic psychology and animal rights: Reconsidering the boundaries of the humanistic ethic. Journal of Humanistic Psychology, 45(1), 106–130. https://doi.org/10.1177/0022167804272628

Kahn, P. H., Jr., Kanda, T., Ishiguro, H., Freier, N. G., Severson, R., Gill, B. T., Ruckert, J. H., & Shen, S. (2012). “Robovie, you’ll have to go into the closet now”: Children’s social and moral relationships with a humanoid robot. Devolepmental Psychology, 48(2), 303–314. https://doi.org/10.1037/a0027033

Kahn, P. H., Jr., & Shen, S. (2017). NOC NOC, who’s there? A new ontological category (NOC) for social robots. In N. Budwig, E. Turiel, & P. D. Zelazo (Eds.), New perspectives on human development (pp. 106–122). Cambridge University Press. https://doi.org/10.1017/CBO9781316282755.008

Kalmady, S. V., Greiner, R., Agrawal, R., Shivakumar, V., Narayanaswamy, J. C., Brown, M. R. G., Greenshaw, A., Dursun, S. M., & Venkatasubramanian, G. (2019). Towards artificial intelligence in mental health by improving schizophrenia prediction with multiple brain parcellation ensemble-learning. NPJ Schizophrenia, 5, Article 2. https://doi.org/10.1038/s41537-018-0070-8

Kamath, J., Leon Barriera R., Jain, N., Keisari, E., & Wang, B. (2022). Digital phenotyping in depression diagnostics: Integrating psychiatric and engineering perspectives. World Journal of Psychiatry, 12(3), 393–409. https://doi.org/10.5498/wjp.v12.i3.393

Kim, J. W., Jones, K. L., & D’Angelo, E. (2019). How to prepare prospective psychiatrists in the era of artificial intelligence. Academic Psychiatry, 43(3), 337–339. https://doi.org/10.1007/s40596-019-01025-x

Kim, M. S. (2019). Robot as the “mechanical other”: Transcending karmic dilemma. AI & Society, 34, 321–330. https://doi.org/10.1007/s00146-018-0841-9

Kuhn, E., Greene, C., Hoffman, J., Nguyen, T., Wald, L., Schimidt, J., Ramsey, K. M., & Ruzek, J. (2014). Preliminary evaluation of PTSD coach, a smartphone app for post-traumatic stress symptoms. Military Medicine, 179(1), 12–18. https://doi.org/10.7205/MILMED-D-13-00271

Kuhn, J. L. (2001). Toward an ecological humanistic psychology. Journal of Humanistic Psychology, 41(2), 9–24. https://doi.org/10.1177/0022167801412003

Lattie, E. G., Stiles-Shields, C., & Graham, A. K. (2022). An overview of and recommendations for more accessible digital mental health services. Nature Reviews Psychology, 1, 87–100 https://doi.org/10.1038/s44159-021-00003-1

Lee, E. E., Torous, J., De Choudhury, M., Depp, C. A., Graham, S. A., Kim, H.-C., Paulus, M. P., Krystal, J. H., & Jeste, D. V. (2021). Artificial intelligence for mental health care: Clinical applications, barriers, facilitators, and artificial wisdom. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 6(9), 856–864. https://doi.org/10.1016/j.bpsc.2021.02.001

Leite, I., Castellano, G., Pereira, A., Martinho, C., & Paiva, A. (2014). Empathic robots for long-term interaction. International Journal of Social Robotics, 6(3), 329–341. https://doi.org/10.1007/s12369-014-0227-1

Liu, S., Yang, L., Zhang, C., Xiang, Y. T., Liu, Z., Hu, S., & Zhang, B. (2020). Online mental health services in China during the COVID-19 outbreak. Lancet Psychiatry, 7(4), e17–e18. https://doi.org/10.1016/S2215-0366(20)30077-8

Liyanage, H., Liaw, S. T., Jonnagaddala, J., Schreiber, R., Kuziemsky, C., Terry, A. L., & de Lusignan, S. (2019). Artificial intelligence in primary health care: Perceptions, issues, and challenges. Yearbook of Medical Informatics, 28(1), 41–46. https://doi.org/10.1055/s-0039-1677901.

Lord, S. E., Campbell, A. N. C., Brunette, M. F., Cubillos, L., Bartels, S. M., Torrey, W. C., Olson, A. L., Chapman, S. H., Batsis, J. A., Polsky, D., Nunes, E. V., Seavey, K. M., & Marsch, L. A. (2021). Workshop on implementation science and digital therapeutics for behavioral health. JMIR Mental Health. 8(1), Article e17662. https://doi.org/10.2196/17662.

Lucas, G. M., Gratch, J., King, A., & Morency, L. P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94–100. https://doi.org/10.1016/j.chb.2014.04.043

Luxton, D. D. (2014). Artificial intelligence in psychological practice: Current and future applications and implications. Professional Psychology: Research and Practice, 45(5), 332–339. https://doi.org/10.1037/a0034559

Luxton, D. D. (2016). Artificial intelligence in behavioral and mental health care. Elsevier Academic Press. https://doi.org/10.1016/B978-0-12-420248-1.00001-5

Malinowska, J. K. (2021). What does it mean to empathise with a robot? Minds and Machines, 31, 361–376. https://doi.org/10.1007/s11023-021-09558-7

Mann, D. (2023). Artificial intelligence discusses the role of artificial intelligence in translational medicine. JACC: Basic to Translational Science, 8(2), 221–223. https://doi.org/10.1016/j.jacbts.2023.01.001

Mastoras, R. E., Iakovakis, D., Hadjidimitriou, S., Charisis, V., Kassie, S., Alsaadi, T., Khandoker, A., & Hadjileontiadis, L. J. (2019). Touchscreen typing pattern analysis for remote detection of the depressive tendency. Scientific Reports, 9, Article 13414. https://doi.org/10.1038/s41598-019-50002-9

McShane, M., Beale, S., Nirenburg, S., Jarrel, B., & Fantry, G. (2012). Inconsistency as a diagnostic tool in a society of intelligent agents. Artificial Intelligence in Medicine, 55(3), 137–148. https://doi.org/10.1016/j.artmed.2012.04.005

Melcher, J., Lavoie, J., Hays, R., D’Mello, R., Rauseo-Ricupero, N., Camacho, E., Rodriguez-Villa, E., Wisniewski, H., Lagan, S., Vaidyam, A., & Torous, J. (2021). Digital phenotyping of student mental health during COVID-19: an observational study of 100 college students. Journal of American College Health, 71:3, 736-748. https://doi.org/10.1080/07448481.2021.1905650.

Merriam, S. B. (2009). Qualitative research: A guide to design and implementation (3rd ed.). Jossey-Bass.

Miles, M. B., & Huberman, A. M. (1994). Qualitative data analysis (2nd ed.). SAGE.

Miner, A. S., Shah, N., Bullock, K. D., Arnow, B. A., Bailenson, J., & Hancock, J. (2019). Key considerations for incorporating conversational AI in psychotherapy. Frontiers in Psychiatry, 10, Article 746. https://doi.org/10.3389/fpsyt.2019.00746

Monteith, S., Glenn, T., Geddes, J., Whybrow, P. C., Achtyes, E., & Bauer, M. (2022). Expectations for artificial intelligence (AI) in psychiatry. Current Psychiatry Reports, 24, 709–721. https://doi.org/10.1007/s11920-022-01378-5

Nogueira-Leite, D., & Cruz-Correia, R. (2023). Attitudes of physicians and individuals toward digital mental health tools: protocol for a web-based survey research project. JMIR Research Protocols, 12, Article e41040. https://doi.org/10.2196/41040

Păvăloaia, V. D., & Necula, S. C. (2023). Artificial intelligence as a disruptive technology—A systematic literature review. Electronics, 12(5), Article 1102. https://doi.org/10.3390/electronics12051102

Pham, K. T., Nabizadeh, A., & Selek, S. (2022). Artificial intelligence and chatbots in psychiatry. Psychiatric Quarterly, 93, 249–253. https://doi.org/10.1007/s11126-022-09973-8

Philippe, T. J., Sikder, N., Jackson, A., Koblanski, M. E., Liow, E., Pilarinos, A., & Vasarhelyi, K. (2022). Digital health interventions for delivery of mental health care: Systematic and comprehensive meta-review. JMIR Mental Health, 9(5), Article e35159. https://doi.org/10.2196/35159

Potts, A. (2010). Introduction: Combating speciesism in psychology and feminism. Feminism & Psychology, 20(3), 291–301. https://doi.org/10.1177/0959353510368037

Powell, T. P. (2017, December 5). The ‘smart pill’ for schizophrenia and bipolar disorder raises tricky ethical questions. STAT. https://www.statnews.com/2017/12/05/smart-pill-abilify-ethics/

Pradhan, A., Findlater, L., & Lazar, A. (2019). “Phantom friend” or “Just a box with information”: Personification and ontological categorization of smart speaker-based voice assistants by older adults. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), Article 214. https://doi.org/10.1145/3359316

Prescott, J., & Hanley, T. (2023). Therapists’ attitudes towards the use of AI in therapeutic practice: Considering the therapeutic alliance. Mental Health and Social Inclusion, 27(2), 177–185. https://doi.org/10.1108/MHSI-02-2023-0020

Prescott, T. J., & Robillard, J. M. (2020). Are friends electric? The benefits and risks of human-robot relationships. Perspective, 24(1), Article 101993. https://doi.org/10.1016/j.isci.2020.101993

Rabbi, M., Philyaw-Kotov, M., Lee, J., Mansour, A., Dent, L., Wang, X., Cunningham, R., Bonar, E., Nahum-Shani, I., Klasnja, P., Walton, M., & Murphy, S. (2017). SARA: A mobile app to engage users in health data collection. In ACM International Joint Conference on Pervasive and Ubiquitous Computing (pp. 781–789). https://doi.org/10.1145/3123024.3125611

Rein, B. A., McNeil, D. W., Hayes, A. R., Hawkins, T. A., Ng, H. M., & Yura, C. A. (2018). Evaluation of an avatar-based training program to promote suicide prevention awareness in a college setting. Journal of American College Health, 66(5), 401–411. https://doi.org/10.1080/07448481.2018.1432626

Rojas, G., Martínez, V., Martínez, P., Franco, P., & Jiménez-Molina, Á. (2019). Improving mental health care in developing countries through digital technologies: A mini narrative review of the Chilean case. Frontiers in Public Health, 7, Article 391. https://doi.org/10.3389/fpubh.2019.00391

Rosenthal-von der Pütten, A. M., Krämer, N. C., Hoffmann, L., Sobieraj, S., & Eimler, S. C. (2013). An experimental study on emotional reactions towards a robot. International Journal of Social Robotics, 5(1), 17–34. https://doi.org/10.1007/s12369-012-0173-8

Saldaña, J. (2015). The coding manual for qualitative researchers (3rd ed.). SAGE.

Sarris, J. (2022). Disruptive innovation in psychiatry. Annals of the New York Academy of Sciences, 1512(1), 5–9. https://doi.org/10.1111/nyas.14764

Scholten, M. R., Kelders, S. M., & Van Gemert-Pijnen, J. E. (2017). Self-guided web-based interventions: Scoping review on user needs and the potential of embodied conversational agents to address them. Journal of Medical Internet Research, 19(11), Article e383. https://doi.org/10.2196/jmir.7351

Sebri, V., Pizzoli, S. F. M., Savioni, L., & Triberti, S. (2020). Artificial intelligence in mental health: Professionals’ attitudes towards AI as a psychotherapist. In B. K. Wiederhold & G. Riva (Eds.), Annual review of cybertherapy and telemedicine (pp. 229–234). Interactive Media Institute.

Sedlakova, J., & Trachsel, M. (2023). Conversational artificial intelligence in psychotherapy: A new therapeutic tool or agent? The American Journal of Bioethics, 23(5), 4–13. https://doi.org/10.1080/15265161.2022.2048739

Senders, J. T., Maher, N., Hulsbergen, A. F. C., Lamba, N., Bredenoord, A. L., & Broekman, M. L. D. (2019). The ethics of passive data and digital phenotyping in neurosurgery. In M. L. D. Broekman (Ed.), Ethics of innovation in neurosurgery (pp. 129–142). Springer. https://doi.org/10.1007/978-3-030-05502-8

Serholt, S., Barendregt, W., Vasalou, A., Alves-Oliveira, P., Jones, A., Petisca, S., & Paiva, A. (2017). The case of classroom robots: Teachers’ deliberations on the ethical tensions. AI & Society, 32, 613–631. https://doi.org/10.1007/s00146-016-0667-2

Severson, R. L., & Carlson, S. M. (2010). Behaving as or behaving as if? Children’s conceptions of personified robots and the emergence of a new ontological category. Neural Networks, 23(8–9), 1099–1103. https://doi.org/10.1016/j.neunet.2010.08.014

Sharkey, A. J. C. (2016). Should we welcome robot teachers? Ethics and Information Technology, 18, 283–297. https://doi.org/10.1007/s10676-016-9387-z

Singh, O. P. (2023). Artificial intelligence in the era of ChatGPT - Opportunities and challenges in mental health care. Indian Journal of Psychiatry, 65(3), 297–298. https://doi.org/10.4103/indianjpsychiatry.indianjpsychiatry_112_23

Stein, D. J., Naslund, J. A., & Bantjes, J. (2022). COVID-19 and the global acceleration of digital psychiatry. Lancet Psychiatry, 9(1), 8–9. https://doi.org/10.1016/S2215-0366(21)00474-0

Sweeney, C., Potts, C., Ennis, E., Bond, R., Mulvenna, M. D., O’Neill, S., Malcolm, M., Kuosmanen, L., Kostenius, C., Vakaloudis, A., Mcconvey, G., Turkington, R., Hanna, D., Nieminen, H., Vartiainen, A., Robertson, A., & Mctear, M. F. (2021). Can chatbots help support a person’s mental health? Perceptions and views from mental healthcare professionals and experts. ACM Transactions on Computing for Healthcare, 2(3), Article 25. https://doi.org/10.1145/3453175

The European Commission’s High-Level Expert Group on Artificial Intelligence. (2018). A definition of AI: Main capabilities and scientific disciplines. European Commission. https://ec.europa.eu/futurium/en/system/files/ged/ai_hleg_definition_of_ai_18_december_1.pdf

Tong, Y., Wang, F., & Wang, W. (2022). Fairies in the box: Children’s perception and interaction towards voice assistants. Human Behavior and Emerging Technologies, Article 1273814. https://doi.org/10.1155/2022/1273814

Turkle, S. (2010). In good company? In Y. Wilks (Ed.), Close engagements with artificial companions (pp. 3–10). Benjamins.

Turkle, S. (2018). Empathy machines: Forgetting the body. In V. Tsolas & C. Anzieu-Premmereur (Eds.), A psychoanalytic exploration of the body in today’s world on body. Routledge.

Twomey, C., O’Reilly, G., & Meyer, B. (2017). Effectiveness of an individually-tailored computerised CBT programme (Deprexis) for depression: A meta-analysis. Psychiatry Research, 256, 371–377. https://doi.org/10.1016/j.psychres.2017.06.081

Usta, M. B., Karabekiroglu, K., Say, G. N., Gumus, Y. Y., Aydın, M., Sahin, B., Bozkurt, A., Karaosman, Y., Aral, A., Cobanoglu, C., Kurt, D. A., Kesim, N., & Sahin, I. (2020). Can we predict psychiatric disorders at the adolescence period in toddlers? A machine learning approach. Psychiatry and Behavioral Sciences, 10(1), 7–12. https://doi.org/10.5455/PBS.20190806125540

Van de Sande, D., van Genderen, M. E., Huiskens, J., Gommers, D., & van Bommel, J. (2021). Moving from bytes to bedside: A systematic review on the use of artificial intelligence in the intensive care unit. European Journal Of Intensive Care Medicine, 47(7), 750–760. https://doi.org/10.1007/s00134-021-06446-7

Vigo, D., Thornicroft, G., & Atun, R. (2016). Estimating the true global burden of mental illness. The Lancet Psychiatry, 3(2), 171–178. https://doi.org/10.1016/S2215-0366(15)00505-2

Waldrop, M. M. (1987). A question of responsibility. AI Magazine, 8(1), 29–39. https://doi.org/10.1609/aimag.v8i1.572

Werntz, A., Amado, S., Jasman, M., Ervin, A., & Rhodes, J. E. (2023). Providing human support for the use of digital mental health interventions: Systematic meta-review. Journal of Medical Internet Research, 25, Article e42864. https://doi.org/10.2196/42864

Wind, T. R., Rijkeboer, M., Andersson, G., & Riper, H. (2020). The COVID-19 pandemic: The ‘black swan’ for mental health care and a turning point for e-health. Internet Interventions, 20, Article 100317. https://doi.org/10.1016/j.invent.2020.100317

World Health Organization. (2004). Promoting mental health: Concepts, emerging evidence, practice. https://apps.who.int/iris/bitstream/handle/10665/42940/9241591595.pdf

World Health Organization. (2020). COVID-19 disrupting mental health services in most countries, WHO survey. https://www.who.int/news/item/05-10-2020-covid-19-disrupting-mental-health-services-in-most-countries-who-survey

Xue, V. W., Lei, P., & Cho, W. C. (2023). The potential impact of ChatGPT in clinical and translational medicine. Clinical and Translational Medicine, 13(3), Article e1216. https://doi.org/10.1002/ctm2

Zangani, C., Ostinelli, E. G., Smith, K. A., Hong, J. S. W., Macdonald, O., Reen, G., Reid, K., Vincent, C., Syed Sheriff, R., Harrison, P. J., Hawton, K., Pitman, A., Bale, R., Fazel, S., Geddes, J. R., & Cipriani, A. (2022). Impact of the COVID-19 pandemic on the global delivery of mental health services and telemental health: Systematic review. JMIR Mental Health, 9(8), Article e38600. https://doi.org/10.2196/38600

Authors’ Contribution

Mücahit Gültekin: conceptualization, data curation, investigation, methodology, project administration, validation, writing—original draft, writing—review & editing. Meryem Şahin: data curation, formal analysis, investigation, methodology, validation, visualization, writing—original draft, writing—review & editing.

Editorial Record

First submission received: August 10, 2022

Revisions received: June 15, 2023; September 22, 2023; November 11, 2023

Accepted for publication: November 13, 2023

Editor in charge: Lenka Dedkova

Introduction

Mental health problems are common and widespread (Vigo et al., 2016; World Health Organization [WHO], 2004). However, the demand for mental health services is not being met. While there are nine psychiatrists per 100,000 people in developed countries, there are only 0.1 per 1,000,000 people in lower-income countries (Abd-alrazaq et al., 2019). WHO reports that 55% of people in developed countries and 85% of those in developing countries lack access to mental health services (Anthes, 2016). According to WHO (2020), the Covid-19 pandemic increased the demand for mental health services while severely disrupting access to them.

Digital interventions in mental health have been utilized for nearly 25 years (Aboujaoude et al., 2020; Hollis et al., 2015; Lattie et al., 2022; Rojas et al., 2019). The literature documents the widespread use of digital applications in mental health services during the Covid-19 pandemic (Connolly et al., 2021; Liu et al., 2020; Philippe et al., 2022; Stein et al., 2022; Wind et al., 2020; Zangani et al., 2022). AI-driven digital applications have recently gained much attention due to their potential to minimize the need for resources such as time, cost, and professional expertise (Blease et al., 2020; Lattie, 2022). Although these studies yield promising results for the use of artificial intelligence (AI) in mental health services, they are relatively new, and there is still a debate on the pros and cons of such applications (Broadbent, 2017; Gültekin, 2022). Given the obstacles to accessing mental health services and the high morbidity and mortality among psychiatric patients, there is an urgent need to integrate AI into mental health services (Lee et al., 2021). Nevertheless, health care professionals play a critical role in the acceptance and integration of this new technology into the field of mental health (Buck et al., 2022; Monteith et al., 2022; Sebri et al., 2020). Yet very few studies have investigated the views of mental health professionals (Blease et al., 2020; Sweeney et al., 2021). Even those few studies were conducted with experts who had not engaged with AI, and their opinions were primarily collected through questionnaires. To our knowledge, no study has examined experts’ perceptions of their AI experiences through in-depth interviews.

In this study, we contributed to filling this gap in the literature by focusing on the opinions of mental health professionals using AI in therapeutic interventions in Turkey. Given that the use of AI in mental health services in Turkey is still very new (Bilge et al., 2020; Erebak, 2018; Usta et al., 2020), the insights of professionals engaging with this technology are essential to predict the future of AI in the field of mental health. Accordingly, answers to the following questions were sought:

RQ1: How do mental health professionals evaluate the advantages and disadvantages of using AI in health care services?

RQ2: What role do mental health professionals assign to AI in mental health services?

At the time of this study, AI technologies encompassed a range of advanced techniques, such as artificial neural networks, deep learning, reinforcement learning, machine reasoning (planning, scheduling, knowledge representation, search, and optimization), and robotics (cyber-physical system integration of all techniques related to control, sensing, sensors, and actuators; The European Commission’s High-Level Expert Group on AI, 2018). It is noteworthy that the ChatGPT language model, which is based on deep learning (Cheng et al., 2023) and represents a ground-breaking development (Xue et al., 2023), had not yet been released when the study was conducted.

The Use of AI in Mental Health Services

AI-driven digital applications have recently gained much attention for their potential to minimize the need for resources such as time, cost, and professional expertise (Blease et al., 2020; Lattie, 2022). Research has shown that AI technologies such as wearable technology, virtual reality glasses, chatbots, and smartphone applications are used in mental health services (Fiske et al., 2019). In this sense, the use of AI holds promising potential for early diagnosis (Kalmady et al., 2019), treatment (Fitzpatrick et al., 2017), training of mental health professionals (Rein et al., 2018), and drug development (Powell, 2017). For example, Woebot, a web-based chatbot grounded in the cognitive-behavioral model, was tested with students with depression and anxiety symptoms. Woebot engages users via smartphones and tablets, emphasizing that its service is neither a therapy nor provided by a psychologist. Woebot’s algorithm is tuned for specific therapeutic skills (e.g., asking relevant questions, providing empathetic responses). Through conversations about moods, thoughts, and feelings (e.g., “How do you feel?”, “What are you doing?”), Woebot offers suitable books, videos, and activities, and focuses on detecting clients’ cognitive distortions through applications such as word games. Students in the Woebot group reported improvements in anxiety and depression symptoms (Fitzpatrick et al., 2017). Other similar applications have been developed to treat mental health problems as well. Examples with promising outcomes include Mobilyze, a mobile phone and internet-based application to treat depression and anxiety disorder (Burns et al., 2011); the PTSD Coach to treat post-traumatic stress disorder (E. Kuhn et al., 2014); Tess, a chatbot to cope with depression, loneliness, and anxiety problems (Fulmer et al., 2018; Joerin et al., 2019); Wysa, an AI-supported mobile application to treat depression (Inkster et al., 2018); SARA, an Android application for adolescents with substance addiction (Rabbi et al., 2017); and Deprexis, an application based on the cognitive behavioral therapy model to treat depression (Twomey et al., 2017). Several studies have reported the accurate and functional use of AI-supported applications in the early diagnosis of mental health problems and developmental conditions, including autism (Doi, 2020), personality disorders (Carvalho & Pianowski, 2019), and Parkinson’s disease by the “digital phenotyping” method (Capecci et al., 2016). The digital phenotyping method has mainly been applied to depression and anxiety problems (D’Alfonso, 2020) and has been reported to be effective in the early diagnosis of those disorders (Cao et al., 2017; Jacobson et al., 2020; Kamath et al., 2022; Mastoras et al., 2019; Melcher et al., 2021).

The Advantages and Disadvantages of Using AI in Mental Health Services

Although the mentioned studies yield promising results for the integration of AI in mental health services, this integration is still in its early stages, sparking debates on the pros and cons of such applications (Broadbent, 2017; Gültekin, 2022). Scholars highlight some advantages of AI-supported applications, including better diagnosis, treatment, and care (Cresswell et al., 2018; Janssen et al., 2018; Luxton, 2016, pp. 27–51; McShane et al., 2012); reduced workload (Cecula et al., 2021; Fiske et al., 2019); easier access to mental health services and less stigmatization (Lucas et al., 2014; Luxton, 2014; Miner et al., 2019; Pham et al., 2022); lower cost (Ahmed et al., 2021; Chew & Achananuparp, 2022); interesting and engaging therapies (Hudlicka, 2016); fewer risks in certain therapeutic interventions (Bilge et al., 2020; Luxton, 2014); constant support for those living alone (Cavallo et al., 2018; Hung et al., 2019); and better personalization of treatment for patients (Bzdok & Meyer-Lindenberg, 2018). Conversely, some draw attention to ethical, legal, and practical concerns regarding the use of AI in mental health (Cosgrove et al., 2020; Turkle, 2010). For instance, the inability to establish empathy and therapeutic bonds (Scholten et al., 2017) as well as prejudice and discrimination problems (Fan, 2020, p. 76) are among the reported disadvantages of such applications. Another issue is responsibility (Waldrop, 1987): Who would be responsible for an AI application’s misdiagnoses or biased treatment? A further problem is security. AI programs collect extensive information about a client’s cultural, social, economic, political, and health-related activities, which might lead to problems of confidentiality, privacy, and autonomy (Bennett & Doub, 2016; Broadbent, 2017). It is noteworthy that there are still no ethical guidelines for the safe use of AI in mental health (Fiske et al., 2019).

The ontological boundary problem further complicates the interaction between humans and AI technologies like social robots and digital assistants (Gültekin, 2022). Robots with humanoid features may lead users to perceive them as a new ontological category (Kahn & Shen, 2017; Pradhan et al., 2019; Tong et al., 2022), leading to misconceptions about cognitive and social attachment or isolation (Festerling & Siraj, 2021; Haggadone et al., 2021; Serholt et al., 2017; Sharkey, 2016). While AI can save time in diagnosis and offer personalized treatment and more accurate medical decisions in other health services such as pathology, radiology, surgery, dermatology, and ophthalmology, there are still concerns about privacy, confidentiality, stigmatization, the possibility of wrong diagnosis, and unemployment in the health sector (Al-Medfa et al., 2023; Güvercin, 2020).

Mental Health Experts’ Perspective on the Use of AI

The limited evidence on mental health professionals’ views on the use of AI revealed a cautious stance among 791 psychiatrists, with 48.7% of the participants stating that AI would have little or no impact on psychiatrists in the next 25 years (Doraiswamy et al., 2020). In this global survey, only 3.8% of the participants believed AI would render their profession redundant. Another 47% believed that AI would have a moderate impact on their professions. In the same study, most participants (83%) reported that AI could carry out laborious tasks such as managing medical records and organizing and synthesizing documents.

By comparison, 83% stated that AI cannot replace an average mental health specialist in providing empathetic care. In a qualitative study by Blease et al. (2020), some participants emphasized that machines and humans would establish a “work-sharing” environment that complements each other and enriches the professional process, whereas others argued that it would increase administrative and bureaucratic workloads. The study also revealed opinions that AI might reduce the psychiatric skills of experts and cause over-dependence on technology, and it underscored the importance of experts being able to handle possible system errors.

Although present findings are promising, the adoption of digital interventions in mental health is not yet widespread (Lord et al., 2023). One of the factors is undoubtedly clinicians’ attitudes (Nogueira-Leite & Cruz-Correia, 2023). It is reported that obstacles to the widespread adoption of such technologies by therapists involve a lack of training, concerns about the quality of therapy, and limited experience (Feijt et al., 2023). For example, in recent studies on the opinions of mental health professionals, those who did not have AI experience tended to approach therapeutic interventions with AI with caution (J. Prescott & Hanley, 2023). Notably, experience in AI-supported psychotherapy may change cautious attitudes toward such practices (Aktan et al., 2022).

Methods

Study Design

In this study, we employed a qualitative design to explore the views of mental health professionals regarding the use of AI in mental health services. Accordingly, we aimed to elicit participants’ insightful and comprehensive opinions and thoughts about the topic, through their own expressions.

Participants

We used the purposive maximum variation sampling method (Creswell, 2012) to select the participants and conducted the study in Turkey. The criteria for inclusion in the study comprised working as a mental health professional in Turkey and incorporating AI practices into their professional life. The reason for this criterion was to benefit from the insights of those with knowledge and experience in AI practices. Hence, we aimed to obtain in-depth information about participants’ AI experiences. We conducted semi-structured interviews with 13 mental health professionals who met the inclusion criteria. To identify potential participants, we first scanned the internet for open sources and listed the mental health professionals in Turkey who have academic papers on AI or apply it in mental health care. Then, we sent an informative invitation email explaining the purpose, scope, and method of the study. We interviewed the volunteers and also received their suggestions for other experts in the field, whom we contacted by email. Thus, we also used the snowball sampling method.

The participants encompassed a diverse group, including nine psychologists at varying levels of expertise, three psychiatrists, and one psychological counsellor and guide. All participants have had hands-on experience with AI in their professional lives, engaging in technology-assisted psychotherapies (e.g., virtual reality, augmented reality, cognitive-behavioral therapy-based digital applications), human-robot interaction, machine learning, Gerom technology, predictive medicine, and self-help interventions (see Table 1). Data diversity was achieved by involving individuals interested in different facets of AI.

Table 1. Participant Characteristics.

| Nickname | Gender | Profession | Interest in AI | Professional experience (years) | Interview duration (min) |
|---|---|---|---|---|---|
| P1 | Male | Adult Psychiatrist, Academician | | 11 | 58 |
| P2 | Male | Ph.D. Psychologist | | 10 | 75 |
| P3 | Male | Child and Adolescent Psychiatrist, Academician, Ph.D. student in Neuroscience | | 8 | 48 |
| P4 | Male | Clinical Psychologist, Ph.D. student | | 5 | 52 |
| P5 | Male | Adult Psychiatrist, Academician | | 7 | 44 |
| P6 | Male | Clinical Psychologist, Ph.D. student | | 16 | 58 |
| P7 | Female | Clinical Psychologist, Ph.D. student | | 10 | 55 |
| P8 | Female | Clinical Psychologist, Ph.D. student in Neuroscience | | 9 | 43 |
| P9 | Female | Ph.D. Clinical Psychologist, Academician | | 11 | 45 |
| P10 | Female | Clinical Psychologist, Ph.D. student, Academician | | 7 | 64 |
| P11 | Male | Ph.D. Psychological Counselor and Guide, Academician | | 11 | 55 |
| P12 | Female | Psychologist, Neuroscience graduate student | | 2.5 | 45 |
| P13 | Male | MSc, Psychologist, Family counselor | | 14 | 75 |

Data Collection Process

We designed a semi-structured interview form following a review of the relevant literature on the use of AI in mental health. As suggested by Glesne (2011), we obtained feedback from three experts and conducted a pilot interview to finalize the interview form. We included open-ended questions to identify the perceived advantages and disadvantages of AI in mental health services and ascertain the optimal stages for applying AI in practice. The interviews centered around five questions:

- Why do you prefer using AI-assisted applications in your professional life?

- What do you think about using AI in mental health services? What are the pros and cons of using such applications?

- What do you think about the therapeutic relationship between a client and AI-assisted applications?

- What do you think about the potential applications of AI-assisted technologies in mental health services?

- In the event of a conflict between an AI-assisted application and a human mental health professional’s diagnosis or treatment plan, how would you evaluate this situation?

Before beginning the study, we received ethical approval from Afyon Kocatepe University Social and Human Sciences Research and Publication Ethics Committee (2020/137).

We conducted semi-structured interviews via ZOOM with willing participants, and we recorded the interviews with the consent of the participants. The interview phase spanned from July 2020 to June 2021, with sessions lasting between 43 and 75 minutes. Of the 13 interviews, six were conducted by the first researcher, and seven by the second researcher. Participation was voluntary, and no incentives were offered.

Data Analysis

We transcribed the recorded interviews verbatim, which fostered our familiarity with the study data (Braun & Clarke, 2006). The transcriptions were reviewed and confirmed by the participants. We refer to the participants as P1, P2, and so on (see Table 1).

We followed Creswell’s (2012) suggestions: (1) We meticulously read and noted key points, (2) we delved into the content to decipher underlying meanings, (3) we created relevant codes, (4) we categorized the codes for systematic analysis, (5) we reviewed our codes again, and (6) we generated themes and sub-themes by combining similar codes and categorizing hierarchically. Especially in the third and fourth stages, considering the research questions, we created codes to describe and label the data in the analysis process (Saldaña, 2015), and we coded using symbolic abbreviations to represent the data. For example, while trying to figure out what participants think about the role of AI in mental health services, one participant said, “…I think technology-assisted applications support therapy and facilitate our work… So, they can be used as a support for therapy instead of being used alone”. Here, participants especially emphasized the auxiliary role of AI-assisted applications in clinical intervention and psychotherapy, so we created a code labelled “Assistance to interventions.” Then, we created a category called “expert assistant” and grouped any codes implying assistant roles that fell under this category. As Glesne (2011) states, we gradually extracted the codes from the data.

After the coding phase, our research team collaboratively reviewed and refined the codes for clarity and consistency. Some statements were cross-referenced into more than one code. Therefore, we organized the codes with similar meanings into categories involving common themes in order to achieve semantic integrity. For example, we created the category of “advantages for clients”, encompassing service satisfaction, affordability, easy accessibility, non-stigmatization, transparency, and risk control. Then, we reviewed all the data to minimize overlap and redundancy in the codes. We conducted a content analysis using the interview transcripts and the MAXQDA program. The program contributed to a more rigorous analysis thanks to its ability to manage extensive and complex data, provide quick and easy access to the codes created by the researchers, and allow for closer scrutiny of the data (Creswell, 2013). Thus, our work became more systematic, refined, and meticulous (Bazeley & Jackson, 2013). We also used the program to visualize our findings.
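The grouping of codes into categories described above can be pictured as a simple mapping. The following is only an illustrative sketch in Python: the category and code names are the ones mentioned in the text, while the data layout and the helper functions `category_of` and `overlapping_codes` are hypothetical and do not represent the MAXQDA project used in the study.

```python
from collections import defaultdict

# Category and code names follow the examples given in the text. Only codes
# named there are listed; the structure itself is an illustrative assumption.
CATEGORY_CODES = {
    "advantages for clients": [
        "service satisfaction",
        "affordability",
        "easy accessibility",
        "non-stigmatization",
        "transparency",
        "risk control",
    ],
    "expert assistant": ["assistance to interventions"],
}


def category_of(code: str) -> str:
    """Return the category that a given code was grouped under."""
    for category, codes in CATEGORY_CODES.items():
        if code in codes:
            return category
    raise KeyError(f"Unknown code: {code}")


def overlapping_codes(mapping: dict) -> dict:
    """List codes assigned to more than one category (overlap to be reviewed)."""
    seen = defaultdict(list)
    for category, codes in mapping.items():
        for code in codes:
            seen[code].append(category)
    return {code: cats for code, cats in seen.items() if len(cats) > 1}


print(category_of("transparency"))
print(overlapping_codes(CATEGORY_CODES))
```

A check such as `overlapping_codes` mirrors, in a very reduced form, the review step aimed at minimizing overlap and redundancy in the codes.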

In the study, we did not treat the frequency with which a participant mentioned a code as an indicator, and the opinion of a single participant was sufficient to create a code. Instead, we focused on how many participants mentioned each code to obtain a general overview of the data distribution (Miles & Huberman, 1994). The relevant figures show the number of participants who mentioned each code, denoted by the symbol N.
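The distinction between counting mentions and counting participants can be made concrete with a short sketch. The coded segments below are hypothetical and serve only to illustrate how N (the number of distinct participants per code) differs from the raw number of mentions; the function name `participants_per_code` is likewise an assumption.

```python
from collections import defaultdict

# Hypothetical coded segments as (participant, code) pairs. A participant may
# mention the same code several times; these are not the study's data.
segments = [
    ("P1", "less error"),
    ("P2", "accessibility"),
    ("P2", "accessibility"),
    ("P5", "affordability"),
    ("P11", "accessibility"),
]


def participants_per_code(coded_segments):
    """Count distinct participants per code, ignoring repeated mentions."""
    who = defaultdict(set)
    for participant, code in coded_segments:
        who[code].add(participant)
    return {code: len(people) for code, people in who.items()}


counts = participants_per_code(segments)
print(counts["accessibility"])  # P2 is counted once despite two mentions
```

Using a set per code means that repeated mentions by the same participant do not inflate N.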

Credibility and Dependability

As Merriam (2009) suggested, involving several researchers helps ensure the credibility of the findings. As researchers, we frequently held collaborative sessions to discuss the codes and categories and reached agreement on them throughout the analysis process. We devoted sufficient time (13 months) to collecting data and provided a detailed description of the study group characteristics and the data collection and analysis processes. We enhanced sample diversity by interviewing a range of mental health specialists interested in AI.

In the final coding stage, we employed an external audit and asked a field expert to check the coding to enhance credibility. Lastly, we applied the reliability formula of Miles and Huberman (1994), which yielded a value of .94. We used direct quotations in the findings section to reflect the participants’ views. Additionally, we reduced the margin of error by performing computer-assisted analysis, and digitally stored the transcripts, data, and code contents to ensure confirmability.
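The Miles and Huberman (1994) formula is commonly stated as the number of agreements divided by the sum of agreements and disagreements. The sketch below illustrates that calculation; the counts of 47 agreements and 3 disagreements are hypothetical values chosen only to show how a value of .94 can arise and are not the counts from this study.

```python
def intercoder_agreement(agreements: int, disagreements: int) -> float:
    """Reliability = agreements / (agreements + disagreements)."""
    total = agreements + disagreements
    if total <= 0:
        raise ValueError("At least one coding decision is required.")
    return agreements / total


# Hypothetical counts, not taken from the study.
rate = intercoder_agreement(agreements=47, disagreements=3)
print(f"{rate:.2f}")
```

This is a simple percentage agreement and does not correct for agreement expected by chance.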

Results

Findings are presented under four themes, based on the responses to the interview questions. The themes of the perceived advantages and disadvantages of using AI-assisted applications in mental health were generated from the responses to the following questions: “Why do you prefer AI-supported applications in your professional life?”, “What do you think about using AI in mental health services? What are the pros and cons of using such applications?”, and “What do you think about the therapeutic relationship between a client and AI-assisted applications?” The theme of the perceived role of AI in mental health services was generated from the responses to the questions “What do you think about the possible ways to use AI-assisted applications in mental health services?” and “When an AI-assisted application conflicts with a human mental health professional’s diagnosis or treatment plan, how would you evaluate this situation?” In addition, the theme of the future of AI in mental health services was generated from the overall assessment of the responses.

The Perceived Advantages of Using AI in Mental Health Services

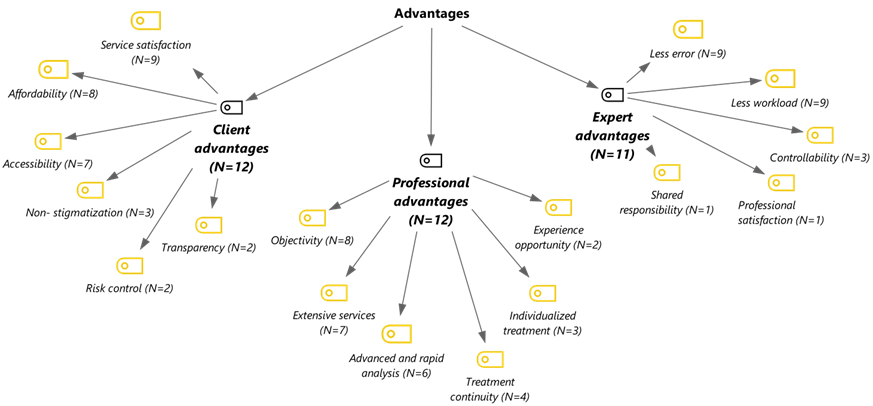

According to the analysis results, the advantages can be grouped into three categories: the advantages to clients (N = 12), professional advantages (N = 12), and advantages to mental health care experts (N = 11). The “professional advantages” category covers the effects related to the nature and practice of the mental health profession, the “advantages to mental health care experts” category covers the effects related to the personal and professional experience of mental health experts, and the “advantages to clients” category describes the effects that the service recipient may experience. The codes in the given categories are shown in Figure 1.

Figure 1. The Categories and Codes of Advantages.

Code descriptions and direct quotations related to each code are presented in Table 2.

Table 2. The Categories, Codes, Code Descriptions and Example Quotations Related to the Perceived Advantages of Using AI in Mental Health Services.

| Category | Code | Description | Example quotations |
|---|---|---|---|
| Client advantages | Service satisfaction | Enhanced service satisfaction via AI-supported applications | “For instance, when a patient goes to a doctor, he gets support and treatment there. If that patient goes to someone else, he does not have to tell the same things from the beginning. As a doctor, you would already have all his data on the system… for example, his past experiences, negative life events, or treatment interventions. A different doctor may also use this system to make a treatment plan. It would be very beneficial for the patient.” (P3) |
| | Accessibility | Facilitating access to mental health services via AI-supported applications | “A client may not easily contact with a psychotherapist, but an AI can be reached at any time. This is a critical point.” (P2) |
| | Affordability | Cheaper than traditional methods | “It is less costly for a client or patient.” (P5) |
| | Non-stigmatization | Ensuring privacy through the use of applications that are not supported by humans or anonymous accounts | “Indeed, it is something that really reduces stigmatization. A person can use it anonymously, and she/he does not have to tell anyone or go anywhere.” (P11) |
| | Risk control | The opportunity to control their exposure in virtual or augmented reality applications offers a sense of safety for the client | Referring to virtual reality applications: “They provide a safer place to clients because it is a virtual environment.” (P10) |
| | Transparency | Sharing data, analyses, and results related to diagnosis and therapeutic processes of AI-assisted applications with the client | “…in fact, transparency also matters. (…) such support systems can give justifications about the decisions they made on and officially show me the decision-making process.” (P2) |
| Professional advantages | Advanced and rapid analysis | The capacity to perform complex data analyses far beyond human cognitive capabilities | “As humans, we have a limit, but a robot may not. For example, you are a marriage therapist, and a couple consults you. In this case… the couple tells their problems to an AI robot. Then, that robot would automatically match and assess all the symptoms following what is told in the session. Such technology can scan all the articles about marriage, family, or couples maybe in one or two minutes.” (P2) |
| | Objectivity | Making evaluations based only on measurement and data without having emotional considerations | “Another advantage is the objective assessment opportunity without a therapist’s own counter-transference or biases.” (P5) |
| | Extensive services | Making mental health services available to diverse groups via easily accessible and inexpensive services | “…I think it can reach more people. These are positive developments.” (P12) |
| | Treatment continuity | Increasing client satisfaction and enhancing treatment continuity by offering technology-based solutions that require remote access | “The more we incorporate technology, the fewer drop rates there will be.” (P4) |
| | Individualized treatment | Individualized treatment plans created through advanced analysis techniques | “They can provide individualized diagnosis and individualized medicine.” (P3) |
| | Experience opportunity | Providing a space for mental health experts to gain practical experience during their training | “Mental health students need a lot of practice and experience. Therefore, before having sessions with real clients, it is really likely to create simulations using AI technologies. Thus, in a simulated environment, a therapist candidate can practice and gain experience by working on a scenario of a client; he can get feedback from supervisors, and thus improving himself with such artificial technologies.” (P10) |
| Expert advantages | Less error | Using a greater wealth of data and parameters, making more accurate inferences in diagnosis and treatment compared to human experts | “It is a fact that AI reduces human error.” (P1) |
| | Less workload | Reducing the workload of the expert by delegating some tasks to AI, just like an assistant | “For example, in our project, we apply the cognitive behavioral therapy models and have to explain them to clients. However, we’re tired of talking about it, so now we just say, ‘Watch Module 1 and then come’.” (P11) |
| | Controllability | The ability to control the process steps and the environment more than in real life through the expert’s application content | Referring to virtual reality applications: “We are in control, there are no surprises, there are no unknowns, and we can actually change the process gradually. It provides the sense of control for the therapist.” (P10) |
| | Shared responsibility | Sharing responsibility as decisions and interventions will be based on AI data | “Indeed, such systems protect the expert (…), it is something that relieves a person of the responsibility.” (P2) |
| | Professional satisfaction | Improved satisfaction levels for both the service recipients and professionals due to faster results | “Well, you feel satisfaction in a shorter time.” (P4) |

The Perceived Disadvantages of the Use of AI in Mental Health Services

According to the experts’ opinions on the possible disadvantages of using AI applications in mental health services, we generated three categories: professional disadvantages (N = 13), the disadvantages to clients (N = 10), and the disadvantages to experts (N = 8). Figure 2 below shows the codes and categories.

Figure 2. The Categories and Codes of Disadvantages.

Code descriptions and direct quotations related to each code are shown in Table 3 below.

Table 3. The Categories, Codes, Code Descriptions and Example Quotations Related to the Perceived Disadvantages of Using AI in Mental Health Services.

| Category | Code | Description | Example quotations |
| --- | --- | --- | --- |
| Professional disadvantages | Inability to meet the therapeutic needs | The shortcomings of AI compared to humans in therapeutic settings, such as empathy, emotional reflection, and content-specific responses beyond algorithms, due to the ontological difference between human and machine | “A therapeutic relationship cannot be established on such systems.” (P7) |
| | Reliability issues | Distrust of the black-box nature of AI, of the findings and applicability of relevant publications, and of the tendency towards monopolization in services | “…how can I trust a machine? All in all, AI is a black box. Yes, an answer comes out of a box, but we do not know the exact process. For example, we do not know exactly how it operates deep learning algorithms.” (P3) |
| | Lack of the infrastructure | Mental health expertise alone is not enough. Cooperation with experts from various fields, such as computer science and biology, and access to the necessary technological materials are required. | “There must be a team for this. You have to work with engineers and software developers. Not everyone has the opportunity to have this service or develop their product.” (P9) |
| | Not suitable for all clients | Its use is not appropriate or effective for all clients, because of both certain personality traits and pathology. | “Web-based interventions are not suitable for everybody, just as not every therapy model is for everyone.” (P11) |
| | Role confusion | The development or use of applications without a mental health expert confuses the expert’s professional identity. | “In other words, not all those in businesses that would provide consultancy services through AI are not mental health professionals. I have concerns about the service delivery… Who will provide the service?” (P8) |
| | Loss of subjectivity | AI-supported applications will implement standardized structured procedures without considering a client’s subjectivity. | “...one of the criticisms is about offering the same interventions for everyone. Of course, some emphasize emotions and feelings. I think the biggest problem with such AI systems is the individualization of the service.” (P11) |
| | Standardization problems | Different companies consider different parameters when developing AI-supported applications, and the lack of an authority leads to standardization problems. | “I fear that there would be many different applications that would give different results. Assessment and measurement would cause trouble. (…) For example, would different firms do different assessments and measurements? Frankly, I think there might be such problems.” (P4) |
| Client disadvantages | The possibility of being harmed | AI-supported applications can potentially trigger certain psychopathologies or cause psychological and physiological harm. | “Another dimension is the possibility of being harmed by such applications.” (P5) |
| | Low motivation | Without expert follow-up in AI systems, a client would not be motivated to continue and finish the process. | “As you know, the most important predictor of treatment success in all types of therapy is the client-doctor relationship. In this sense, I guess that client motivation would be low in digital interviews without an expert, at least under current conditions.” (P5) |
| | Privacy and security issues | The possibility that the information shared with AI applications may be shared with third parties | “AI might be under suspicion: Now this machine records anything about me, and this is AI, I mean, something that is directed by others. Can it share my privacy? For example, chatbots. What if it is a Facebook chatbot and takes all my data? How would I know that it would not do anything about my data? Here, my privacy is being invaded. Alternatively, a client might hesitate to share something that is a crime. That client might think that the government controls the AI systems, so it might reach his data and use against him as well.” (P2) |
| | Transfer issues | Challenges in transferring the skills acquired through AI-supported applications to the real world | “I mean, clients might say that this is not real somehow and can’t make me feel better. In the real world, he might say it doesn’t work for me.” (P9) |
| | Unsociability | Non-human or remotely conducted practices may have a negative impact on clients’ social interactions. | “… AI support system might promote avoidant attitudes.” (P8) |
| Expert disadvantages | Unemployment | As AI-supported applications reduce the workload of experts and enable independent intervention, the demand for human experts decreases, leading to unemployment problems. | “There would be less need for professionals.” (P13) |
| | High cost | The high cost to experts of having AI-supported applications | “… software systems are costly. The development and integration of new software is a challenging task. Production and uses are very expensive.” (P7) |
| | Machine dependency | A reduction in experts’ motivation to learn, develop professional relationships, and produce new knowledge due to the transfer of some of the professional work to the machine | “If things get easy, people might become lazy and lose their enthusiasm for reading. They might think if an AI application could read all the literature for me, then I don’t need to read it myself.” (P3) |
| | Responsibility issues | It is unclear who will be responsible for the decisions made through AI-supported applications. | “Who will be responsible for the decisions? I think that’s the real problem.” (P1) |

The Perceived Roles of AI in Mental Health Services

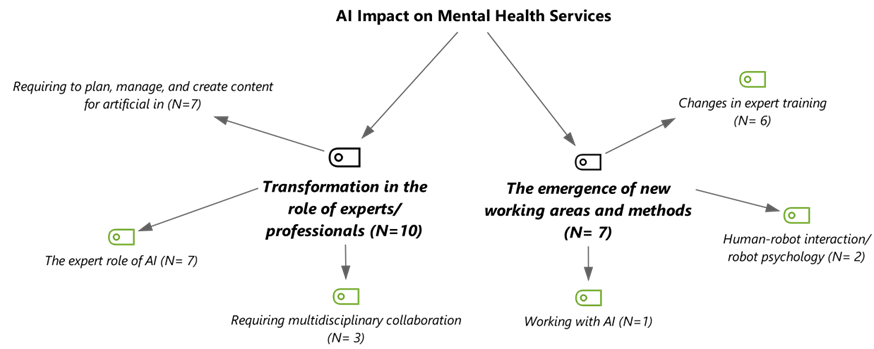

We found three fundamental roles of AI in mental health services: expert assistant (N = 13), mental health expert (N = 8), and care-support services provider (N = 4; Figure 3). The “expert role” refers to AI applications acting as the subject or agent, with the human mental health specialist either absent or auxiliary. The “expert assistant role” refers to mental health experts remaining the primary subjects, with AI applications serving as a tool that helps the expert. In the care-support services provider role, AI applications can offer social, emotional, and cognitive support to the elderly, children, or adults, which signals their additional roles in preventive mental health services. Figure 3 below shows the codes and categories.

Figure 3. The Categories and Codes Regarding the Perceived Roles of AI in Mental Health Services.

Participants mostly believed that AI applications would assist experts. For AI’s roles as an expert assistant, the participants stressed assistance to interventions (N = 11).

Regarding AI’s role as a mental healthcare expert, participants emphasized the potential of non-human interventions (N = 4) and non-human diagnosis systems (N = 2) owing to long-term developments in AI technology. However, some were hesitant about AI taking a complete expert role under all circumstances and stated that this would depend on the extent of the problem, the title of the mental health specialist, and the development level of the AI technology (N = 6). Although the participants mentioned the possibility of AI’s expert roles, they emphasized the differences between the virtual service and the one offered by a human mental health specialist: “But of course, they cannot give feedback like a human and convey the empathy feeling.” (P6). Such a point of view can be considered a mechanism to protect professional self-esteem. The categories, codes, and example quotations regarding the roles of AI in mental health services are presented in Table 4.

Table 4. The Categories, Codes, Code Descriptions, and Example Statements on the Role of AI in Mental Health Services.

| Category | Code | Description | Example quotations |
| --- | --- | --- | --- |
| Expert assistant | Assistance to interventions | Supporting the presence of human experts and serving as an assistance tool for interventions during and outside the session (including developing personalized treatment plans, contributing to the psycho-education, participating in counseling, and monitoring a client’s treatment progress) | “I think technology-assisted applications support therapy and facilitate their work (…) So, they can be used as a support for therapy instead of being used alone.” (P9) |
| | Assistance to diagnosis, screening, and prediction | Using AI as an assistance tool for human experts in diagnosis and prediction through the analysis of client-related data (such as data-based decision support systems and risk scanning) | “I think it cannot make a clear diagnosis but can facilitate the diagnosis process. In other words, before having a session with a clinician, the patient can actually undergo an evaluation with an AI application, and then see a psychiatrist or a psychologist who would make a clearer and easier diagnosis.” (P6) |
| | Self-help applications | The use of self-help practices as a tool to support treatment, either recommended by an expert or accessed by the client | “Just like an expert can recommend books to his patients, I mean he offers bibliotherapy, and he can also recommend an AI program. In this sense, its use in treatment and self-help applications is quite possible.” (P5) |
| | Assistance to expert training | Use as a tool in an auxiliary role in expert training through simulations and virtual clients. | “Well, it is much easier to design a virtual client than a virtual therapist. In this sense, it might be beneficial to students of psychological counseling, psychology, and psychiatry; that is, certain prototypes can be developed to gain experience.” (P11) |
| The expert role | Non-human interventions | AI applications directly intervene as the subject rather than as a tool assisting the expert. | “AI applications will treat without human assistance. I think it is possible. (…) I think about it from a psychotherapeutic or a pharmacological point of view. You know, they can do it psychotherapeutically. I mean, they can do therapy. Maybe it will do it pharmacologically.” (P1) |
| | Non-human diagnosis | AI applications diagnose as the subject rather than in the role of an auxiliary tool. | “Maybe not today, but we will accept the diagnosis of machines in the future. Perhaps it would be much more reliable, and we would not bother with diagnosis at all, and we would proceed according to that report.” (P11) |
| | Attributing expertise depending on the circumstances: depending on the extent of the problem | The ability to make independent diagnoses and treatment by attributing agency to the AI in interventions for mild problems (but not in all cases) | “When we consider the whole psychiatry field, no, AI applications cannot treat all cases, but I think they can be customized. For example, an expert can diagnose a specific phobia. Then, he can include an AI application such as virtual reality in the therapy program. Thus, a client can use and follow that application software without the supervision of an expert. A person can learn to control their stress without an expert. (…) Therefore, yes, they can be used for specific and simple diagnoses, but not for complex cases.” (P6) |
| | Attributing expertise depending on the circumstances: depending on the developments in AI technology | Not yet, but with the development of more advanced AI-supported applications, independent diagnosis and treatment through attributing agency becomes a possibility. | “If it is an advanced and verified technology, it could be used in this sense (…) I think that if we cannot see neural fluctuation and fires in the brain with the naked eye, and if there are machines that can see it, we should believe in the machine.” (P8) |
| | Attributing expertise depending on the circumstances: depending on the title of the human expert | Attributing agency/subjectivity to a human expert or AI application by making comparisons according to knowledge and experience parameters | “Of course, this is also dependent on the title of the human mental health expert.” (P5) |
| Care-support role services provider | | | “I think that we would use more such technologies, I mean robotic technologies assisted by AI and software, primarily for the elderly and child care.” (P13) |

Regarding the expert role of AI in mental health services, we observed discrepancies between what participants anticipated for the future of AI and what they desired. The possibility and desirability of AI’s role in mental health services are shown in Table 5.

Table 5. The Possibility and Desirability of Using AI in Mental Health Services.

|

|

P1 |

P2 |

P3 |

P4 |

P5 |

P6 |

P7 |

|||||||

|

Pos. |

Des. |

Pos. |