No secrets between the two of us: Privacy concerns over using AI agents

Vol. 16, No. 4 (2022)

The diverse spread of artificial intelligence (AI) agents is among the most notable changes in the current media landscape. AI agents mostly function based on voluntary and involuntary sharing of users’ personal information. Accordingly, users’ privacy concerns have become key to understanding the varied psychological responses towards AI agents. In this study, we adopt the “computers are social actors” paradigm to identify the association between a set of relational variables (intimacy, para-social interaction, and social presence) and privacy concerns, and to determine whether a user’s motivations moderate this relationship. The results from an online survey (N = 562) revealed that AI agents are used primarily to gratify three user needs: entertainment motivation, instrumental motivation, and passing time. The results also confirmed that social presence and intimacy significantly influence users’ privacy concerns. They further support the moderating effect of both entertainment and instrumental motivation on the relationship between intimacy, para-social interaction, social presence, and privacy concerns about using AI agents. Further implications for privacy concerns in the context of AI-mediated communications are discussed.

AI; intelligent agent; privacy concerns; para-social interaction; social presence; intimacy; motivation

Sohye Lim

Ewha Womans University, Republic of Korea

Dr. Sohye Lim is a professor in the School of Communication and Media at Ewha Womans University, Korea. Her research interests include media users’ psychological responses to various emerging media technologies and AI-mediated communication.

Hongjin Shim

Korea Information Society Development Institute (KISDI), Republic of Korea

Dr. Hongjin Shim is a research fellow in the Center for AI and Social Policy at the Korea Information Society Development Institute. His research interests cover new communication technology, media psychology, and media effects.

Alepis, E., & Patsakis, C. (2017). Monkey says, monkey does: Security and privacy on voice assistants. IEEE Access, 5, 17841–17851. https://doi.org/10.1109/ACCESS.2017.2747626

Bailenson, J. N., Beall, A. C., & Blascovich, J. (2002). Gaze and task performance in shared virtual environments. The Journal of Visualization and Computer Animation, 13(5), 313–320. https://doi.org/10.1002/vis.297

Barry, M. (2014). Lexicon: A novel. Penguin Books.

Berscheid, E., Snyder, M., & Omoto, A. M. (1989). The Relationship Closeness Inventory: Assessing the closeness of interpersonal relationships. Journal of Personality and Social Psychology, 57(5), 792–807. https://doi.org/10.1037/0022-3514.57.5.792

Brandtzæg, P. B., & Følstad, A. (2018). Chatbots: Changing user needs and motivations. Interactions, 25(5), 38–43. https://doi.org/10.1145/3236669

Cao, C., Zhao, L., & Hu, Y. (2019). Anthropomorphism of Intelligent Personal Assistants (IPAs): Antecedents and consequences. In PACIS 2019 proceedings, Article 187. AIS eLibrary. https://aisel.aisnet.org/pacis2019/187

Carey, M. A., & Asbury, J. (2016). Focus group research. Routledge. https://doi.org/10.4324/9781315428376

Cho, E., Molina, M. D., & Wang, J. (2019). The effects of modality, device, and task differences on perceived human likeness of voice-activated virtual assistants. Cyberpsychology, Behavior, and Social Networking, 22(8), 515–520. https://doi.org/10.1089/cyber.2018.0571

Chung, H., & Lee, S. (2018). Intelligent virtual assistant knows your life. arXiv. http://arxiv.org/abs/1803.00466

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008

Dibble, J., Hartmann, T., & Rosaen, S. (2016). Parasocial interaction and parasocial relationship: Conceptual clarification and a critical assessment of measures. Human Communication Research, 42(1), 21–44. https://doi.org/10.1111/hcre.12063

Elish, M. C., & boyd, d. (2018). Situating methods in the magic of Big Data and AI. Communication Monographs, 85(1), 57–80. https://doi.org/10.1080/03637751.2017.1375130

Eskine, K. J., & Locander, W. H. (2014). A name you can trust? Personification effects are influenced by beliefs about company values. Psychology & Marketing, 31(1), 48–53. https://doi.org/10.1002/mar.20674

Foehr, J., & Germelmann, C. C. (2020). Alexa, can I trust you? Exploring consumer paths to trust in smart voice-interaction technologies. Journal of the Association for Consumer Research, 5(2), 181–205. https://doi.org/10.1086/707731

Gambino, A., Fox, J., & Ratan, R. A. (2020). Building a stronger CASA: Extending the computers are social actors paradigm. Human-Machine Communication, 1, 71–86. https://doi.org/10.30658/hmc.1.5

Genpact (2017, December 16). Consumers want privacy, better data protection from artificial intelligence, finds new Genpact research. https://www.genpact.com/about-us/media/press-releases/2017-consumers-want-privacy-better-data-protection-from-artificial-intelligence-finds-new-genpact-research

Go, E., & Sundar, S. S. (2019). Humanizing chatbots: The effects of visual, identity and conversational cues on humanness perceptions. Computers in Human Behavior, 97, 304–316. https://doi.org/10.1016/j.chb.2019.01.020

Ha, Q. A., Chen, J. V., Uy, H. U., & Capistrano, E. P. (2021). Exploring the privacy concerns in using intelligent virtual assistants under perspectives of information sensitivity and anthropomorphism. International Journal of Human–Computer Interaction, 37(6), 512–527. https://doi.org/10.1080/10447318.2020.1834728

Hallam, C., & Zanella, G. (2017). Online self-disclosure: The privacy paradox explained as a temporally discounted balance between concerns and rewards. Computers in Human Behavior, 68, 217–227. https://doi.org/10.1016/j.chb.2016.11.033

Han, S., & Yang, H. (2018). Understanding adoption of intelligent personal assistants: A parasocial relationship perspective. Industrial Management and Data Systems, 118(3), 618–636. https://doi.org/10.1108/IMDS-05-2017-0214

Heravi, A., Mubarak, S., & Choo, K. (2018). Information privacy in online social networks: Uses and gratification perspective. Computers in Human Behavior, 84, 441–459. https://doi.org/10.1016/j.chb.2018.03.016

Hijjawi, M., Bandar, Z., & Crockett, K. (2016). A general evaluation framework for text based conversational agent. International Journal of Advanced Computer Science and Applications, 7(3), 23–33. https://doi.org/10.14569/IJACSA.2016.070304

Hinde, R. A. (1978). Interpersonal relationships - in quest of a science. Psychological Medicine, 8(3), 373–386. https://doi.org/10.1017/S0033291700016056

Ho, A., Hancock, J., & Miner, A. S. (2018). Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. Journal of Communication, 68(4), 712–733. https://doi.org/10.1093/joc/jqy026

Hoffmann, L., Krämer, N. C., Lam-Chi, A., & Kopp, S. (2009). Media equation revisited: Do users show polite reactions towards an embodied agent? In Z. Ruttkay, M. Kipp, A. Nijholt, & H. H. Vilhjálmsson (Eds.), Intelligent virtual agents (pp. 159–165). Springer. https://doi.org/10.1007/978-3-642-04380-2_19

Horton, D., & Wohl, R. R. (1956). Mass communication and para-social interaction: Observations on intimacy at a distance. Psychiatry, 19(3), 215–229. https://doi.org/10.1080/00332747.1956.11023049

Howard, M. C. (2016). A review of exploratory factor analysis decisions and overview of current practices: What we are doing and how can we improve? International Journal of Human-Computer Interaction, 32(1), 51–62. https://doi.org/10.1080/10447318.2015.1087664

Huang, Y., Obada-Obieh, B., & Beznosov, K. (2020). Amazon vs. my brother: How users of shared smart speakers perceive and cope with privacy risks. In Proceedings of the 2020 CHI conference on human factors in computing systems (pp. 1–13). Association for Computing Machinery. http://doi.org/10.1145/3313831.3376529

Kim, D., Park, K., Park, Y., & Ahn, J.-H. (2019). Willingness to provide personal information: Perspective of privacy calculus in IoT services. Computers in Human Behavior, 92, 273–281. https://doi.org/10.1016/j.chb.2018.11.022

Klimmt, C., Hartmann, T., Schramm, H., Bryant, J., & Vorderer, P. (2006). Parasocial interactions and relationships. In J. Bryant & P. Vorderer (Eds.), Psychology of entertainment (pp. 291–313). Routledge.

Krueger, R. A., & Casey, M. A. (2014). Focus groups: A practical guide for applied research. Sage Publications.

Lee, S., & Choi, J. (2017). Enhancing user experience with conversational agent for movie recommendation: Effects of self-disclosure and reciprocity. International Journal of Human-Computer Studies, 103, 95–105. https://doi.org/10.1016/j.ijhcs.2017.02.005

Lee, N., & Kwon, O. (2013). Para-social relationships and continuous use of mobile devices. International Journal of Mobile Communication, 11(5), 465–484. https://doi.org/10.1504/IJMC.2013.056956

Liao, Y., Vitak, J., Kumar, P., Zimmer, M., & Kritikos, K. (2019). Understanding the role of privacy and trust in intelligent personal assistant adoption. In N. G. Taylor, C. Christian-Lamb, M. H. Martin, & B. Nardi (Eds.), Information in contemporary society (pp. 102–113). Springer. https://doi.org/10.1007/978-3-030-15742-5_9

Lortie, C. L., & Guitton, M. J. (2011). Judgment of the humanness of an interlocutor is in the eye of the beholder. PLoS One, 6(9), Article e25085. https://doi.org/10.1371/journal.pone.0025085

Lucas, G. M., Gratch, J., King, A., & Morency, L.-P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94–100. https://doi.org/10.1016/j.chb.2014.04.043

Lutz, C., & Newlands, G. (2021). Privacy and smart speakers: A multi-dimensional approach. The Information Society, 37(3), 147–162. https://doi.org/10.1080/01972243.2021.1897914

Lutz, C., & Tamò-Larrieux, A. (2021). Do privacy concerns about social robots affect use intentions? Evidence from an experimental vignette study. Frontiers in Robotics and AI, 8, Article 627958. https://doi.org/10.3389/frobt.2021.627958

Mehta, R., Rice, S., Winter, S., Moore, J., & Oyman, K. (2015, April 3). Public perceptions of privacy toward the usage of unmanned aerial systems: A valid and reliable instrument [Poster presentation]. The 8th Annual Human Factors and Applied Psychology Student Conference, Daytona Beach, FL. https://commons.erau.edu/hfap/hfap-2015/posters/39/

Moorthy, A. E., & Vu, K.-P. (2015). Privacy concerns for use of voice activated personal assistant in the public space. International Journal of Human-Computer Interaction, 31(4), 307–335. https://doi.org/10.1080/10447318.2014.986642

Nass, C., & Steuer, J. (1993). Voices, boxes, and sources of messages: Computers and social actors. Human Communication Research, 19(4), 504–527. https://doi.org/10.1111/j.1468-2958.1993.tb00311.x

Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153

Nowak, K. L., & Biocca, F. (2003). The effect of the agency and anthropomorphism on users’ sense of telepresence, co-presence, and social presence in virtual environments. Presence: Teleoperators and Virtual Environments, 12(5), 481–494. https://doi.org/10.1162/105474603322761289

O’Brien, H. L. (2010). The influence of hedonic and utilitarian motivations on user engagement: The case of online shopping experiences. Interacting with Computers, 22(5), 344–352. https://doi.org/10.1016/j.intcom.2010.04.001

Park, M., Aiken, M., & Salvador, L. (2019). How do humans interact with chatbots?: An analysis of transcripts. International Journal of Management & Information Technology, 14, 3338–3350. https://doi.org/10.24297/ijmit.v14i0.7921

Reeves, B., & Nass, C. I. (1996). The media equation: How people treat computers, television, and new media like real people. Cambridge University Press.

Rubin, A. M., & Step, M. M. (2000). Impact of motivation, attraction, and parasocial interaction on talk radio listening. Journal of Broadcasting & Electronic Media, 44(4), 635–654. https://doi.org/10.1207/s15506878jobem4404_7

Schroeder, J., & Epley, N. (2016). Mistaking minds and machines: How speech affects dehumanization and anthropomorphism. Journal of Experimental Psychology: General, 145(11), 1427–1437. https://doi.org/10.1037/xge0000214

Schuetzler, R. M., Grimes, G. M., & Giborney, J. S. (2019). The effect of conversational agent skill on user behavior during deception. Computers in Human Behavior, 97, 250–259. https://doi.org/10.1016/j.chb.2019.03.033

Schwartz, B., & Wrzesniewski, A. (2016). Internal motivation, instrumental motivation, and eudaimonia. In J. Vittersø (Ed.), Handbook of eudaimonic well-being (pp. 123–134). Springer. https://doi.org/10.1007/978-3-319-42445-3_8

Short, J., Williams, E., & Christie, B. (1976). The social psychology of telecommunications. Wiley.

Slater, M. D. (2007). Reinforcing spirals: The mutual influence of media selectivity and media effects and their impact on individual behavior and social identity. Communication Theory, 17(3), 281–303. https://doi.org/10.1111/j.1468-2885.2007.00296.x

Smith, H. J., Dinev, T., & Xu, H. (2011). Information privacy research: An interdisciplinary review. MIS Quarterly, 35(4), 989–1015. https://doi.org/10.2307/41409970

Sundar, S. S., Jia, H., Waddell, T. F., & Huang, Y. (2015). Toward a theory of interactive media effects (TIME): Four models for explaining how interface features affect user psychology. In S. S. Sundar (Ed.), The handbook of the psychology of communication technology (pp. 47–86). Wiley-Blackwell. http://dx.doi.org/10.1002/9781118426456.ch3

Taddicken, M. (2014). The ‘privacy paradox’ in the social web: The impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure. Journal of Computer-Mediated Communication, 19(2), 248–273. https://doi.org/10.1111/jcc4.12052

Weller, S. C. (1998). Structured interviewing and questionnaire construction. In H. R. Bernard (Ed.), Handbook of methods in cultural anthropology (pp. 365–409). AltaMira Press.

Authors' Contribution

Sohye Lim: formal analysis, investigation, project administration, resources, writing-original draft, writing-review & editing. Hongjin Shim: conceptualization, data curation, formal analysis, methodology, visualization, validation, writing-original draft, writing-review & editing.

Editorial Record

First submission received: November 24, 2020

Revisions received: February 2, 2022; April 27, 2022; July 6, 2022

Accepted for publication: July 11, 2022

Editor in charge: Michel Walrave

Introduction

Artificial intelligence (AI) in various forms has rapidly become a feature of everyday life: it has been actively applied to enhance existing media services and has given rise to new forms of technology. These new developments have mostly enabled unprecedented types of interactions with greater ease. Software that operates across different platforms with “the ability to respond to users’ demands synchronically, engage in humanoid interaction, even learn users’ behavior preferences and evolve over time” is referred to as an AI agent (Cao et al., 2019, p. 188).

The platform of AI agents in particular has advanced in almost all areas of information-based services to promote efficient and effective communication. Individuals use a wide range of AI agents such as Siri (Apple), Cortana (Microsoft), Alexa (Amazon), and Bixby (Samsung) that operate on various platforms like smartphones, smart speakers, and car navigators.

Increased use of such AI agents caters to various user needs; however, the usefulness and convenience of AI devices are inevitably linked to the sharing and disclosure of users’ personal information, given that data is at the core of all AI agent functionality (Elish & boyd, 2018). Consequently, this raises critical questions regarding users’ privacy. Genpact (2017) reported that 71% of over 5,000 users polled in the United States, the United Kingdom, and Australia did not want companies to use AI technologies that threaten to infringe on their privacy, even if the technology could improve user experience. A recent study by Chung and S. Lee (2018) demonstrates how a wide range of personal information (from user interests to sleeping/waking patterns) can be collected by virtual assistants. In accordance with increasing awareness of privacy-related issues, recent studies have identified user privacy as the most prominent concern (Alepis & Patsakis, 2017; Liao et al., 2019), although theorization is still in its infancy. While a more rigorous approach is applied in some studies to elucidate the formation and effects of user privacy (Lutz & Newlands, 2021; Lutz & Tamò-Larrieux, 2021), investigating the social nature of human-agent communication can offer a significant contribution to the related field.

The aim of this paper is to explore the conditions that affect users’ privacy concerns. Not all AI-agent users have the same level of concern about their privacy during use. Drawing on the overarching “computers are social actors” (CASA) theoretical framework (Nass & Moon, 2000; Reeves & Nass, 1996), the psychology of close relationships offers a lens through which to understand user responses toward AI agents. Accordingly, three variables—intimacy, para-social interaction (PSI), and social presence—are applied that relate to the psychological relationship between the user and AI agents and that reflect the extent to which the user perceives the AI-based agent as a human partner. It is assumed that once a user perceives a close relationship with the AI agent, they will disclose more personal information, which in turn amplifies privacy concerns. In addition, users’ various motivations are noted as a key variable that could bring about significant changes in the extent of users’ privacy concerns. This study considers the moderating role of motivations in using AI agents to explore the relationship between factors associated with users’ privacy concerns in greater depth.

Theoretical Framework and Hypotheses

The wide deployment of AI technology has engendered new forms of media agents in recent years. A number of similar terms have been used interchangeably to refer to speech-based AI technology, such as voice assistants, conversational agents, AI agents, and chatbots. Most AI agents are capable of providing “small talk,” offering factual information, and answering complex questions in real time. To illustrate, if a user asks Apple’s Siri to marry them, Siri will answer: “My end-user licensing agreement does not cover marriage. My apologies.” It is this hedonic use of AI agents that distinguishes them from all preceding information systems, thus expanding new possibilities for computer-human interaction by simulating the naturalness of human conversation. Previous findings suggest that small talk deepens relationships between AI agents and human users (Park et al., 2019). Whether the feelings that users have toward their agents are similar to those experienced in interpersonal relationships presents an interesting way of exploring the issue of users’ privacy concerns about information transmitted and stored via the AI agent. If user experiences of AI interaction resemble the psychological closeness that is unique to human-to-human communication, it could be questioned whether their concerns over personal information could be alleviated.

Given the new possibility of human-like conversations between AI agents and their users, CASA theory is considered the most suitable framework to underpin the current study. According to the CASA perspective, humans treat computers and media like real people, mindlessly applying the scripts they use for interacting with humans to interactions with social technologies (Gambino et al., 2020). The CASA approach has gained validity across various types of media agent, such as embodied agents (Hoffmann et al., 2009), smart speakers (Foehr & Germelmann, 2020), and chatbots (Ho et al., 2018). The CASA approach is particularly suited to elucidating the nature of human interaction with social technologies that offer both “social cues” (Nass & Moon, 2000) and “sourcing” (Nass & Steuer, 1993). Social technologies need to present the user with enough cues to induce social responses; moreover, users should be able to perceive the technologies as autonomous sources. In this context, chatbots lie within the scope of CASA, as social cues and sourcing are two important features of chatbots.

More recently, it has been suggested that the new conceptual element “social affordances” (Gambino et al., 2020) should be incorporated to assess how humans interpret the social potential of a media agent. For instance, Foehr and Germelmann (2020) argue that users not only consider anthropomorphic cues but regard technologies as social actors with which they form interpersonal relationships. The social affordances of AI agents are operating at an unprecedented level; however, this means the security of personal information is also increasingly being challenged.

Privacy Concerns Toward AI Agents

There is an exponential increase in the breadth and volume of personal information that can potentially be gathered through the use of AI agents. Communication with AI agents inevitably leads to the disclosure of personal and private information (with or without the user’s knowledge), ranging from product information sought by the user to the food that the user is having delivered for dinner. Being mostly aware of this, users share information of their own volition to serve various other interests; however, they may not always be aware of the nature, type, or purpose of information collected. A study by Huang et al. (2020) reveals that users of smart speakers tend to lack understanding about what data is available and what is kept private. Accordingly, concerns about personal information infringement have become a major issue related to the expansion of AI-agent services. While privacy is a multifaceted concept, the “social informational privacy” definition used in Lutz and Tamò-Larrieux’s (2021, p. 7) study is applied here as we understand social robots to be a type of embodied AI agent. Thus, in this study, social informational privacy focuses on users’ understanding of how information shared with AI agents is processed, especially considering the anthropomorphic effect of the agent.

In particular, the “privacy calculus” theory sheds light on the mechanism by which users assess the cost and benefit of revealing private information. Personal privacy interests are understood to be an exchange of information and benefits and provide the foundation for the trade-off between privacy risks and benefits when users are requested to provide their personal information (Smith et al., 2011). During communication with an AI agent, individuals often calculate the potential benefits and risks that information sharing might bring about. In turn, this will affect individuals’ usage behavior. Variables such as network externalities, trust, and information sensitivity are known to affect users’ benefit-and-risk analysis and the consequent provision of personal information (Kim et al., 2019). The extent to which the user is concerned about the risk of disclosing their private information thus plays a key role in determining not only adoption of the technology but also many qualitative aspects of the experience. Moorthy and Vu (2015) reported that AI agent users were more cautious in disclosing private than non-private information, emphasizing that privacy concerns are a major reason for not using AI agents.

Psychology of Close Relationships: Intimacy, Para-Social Interaction, and Social Presence

Based on the CASA perspective, the psychology of close relationships might offer an important means of predicting privacy concerns. Lutz and Tamò-Larrieux’s (2021) research on social robots found that social influence is a key factor in privacy concerns: the degree to which the user anthropomorphizes social robots is directly related to the degree of privacy concerns they bear. Thus, it can be inferred that users’ privacy concerns may be affected by the extent to which they evaluate an AI agent to be worthy of a social relationship. Disclosure intimacy, para-social interaction (PSI), and social presence are the three predominant constructs that have been applied in human-agent communication research to explain the development of close relationships.

First, disclosure intimacy is a principal variable in explaining relationship-building. Related studies maintain that people may experience a greater level of disclosure intimacy when interacting with AI agents compared to human partners due to reduced concerns about impression management and no risk of negative evaluation. For example, Ho et al. (2018) examined the downstream effects that occurred after emotional and factual disclosures in conversations with either a supposed AI agent or a real person. Their experiment revealed that the effects of emotional disclosure were equivalent, regardless of who participants thought they were disclosing to. According to Ho et al. (2018), the disclosure processing frame could explain why the user’s experience of disclosure intimacy toward an agent is not as weak as expected compared with that toward a human partner. The disclosure processing frame postulates that computerized agents reduce impression management and increase disclosure intimacy, especially in situations where a negative evaluation may be prominent, such as when asked potentially embarrassing questions (Lucas et al., 2014). Moreover, it suggests that more intimate disclosure from the user results in emotional, relational, and psychological benefits in human-agent communication (Ho et al., 2018).

Despite this, intimacy is not without cost because disclosure of personal information may be associated with greater privacy concerns. The famous novelist Barry (2014) illustrates an individual’s delicate balance between openness and privacy with the quote: “so we exchange privacy for intimacy.” In a related study by S. Lee and Choi (2017), a high level of self-disclosure and reciprocity in communication with conversational agents was found to significantly increase trust, rapport, and user satisfaction. Assuming that exchanging and sharing personal information is likely to enhance relational intimacy, and that researchers have not empirically identified a significant difference across human-to-human and human-to-agent communication, the extent of intimacy perceived by the user is likely to be a significant factor in their concern about privacy. Thus, the following hypothesis is proposed:

H1: Intimacy with AI agents will be negatively associated with users’ privacy concerns.

Para-social interaction is a unique and intriguing psychological state that may arise from users’ interactions with AI agents. The concept originally referred to a media user’s reaction to a media performer, whereby the media user perceives the performer as an intimate conversational partner. Users perceive PSI as an intimate reciprocal social interaction, despite knowing it is only an illusion (Dibble et al., 2016). The media user may respond to the character “similarly to how they feel, think and behave in [a] real-life encounter” (Klimmt et al., 2006). With traditional media, the media performer might typically be a character (persona) within a media narrative, or a media figure such as a radio personality. Emerging interactive media have enabled more intense PSI experiences for users through features that evoke a sense of interaction, while the parameters of such interactions have been extended by AI agents. Several distinctive features of AI agents, including names (e.g., Alexa for Echo; Eskine & Locander, 2014), vocal qualities (Schroeder & Epley, 2016), word frequency (Lortie & Guitton, 2011), and responsiveness (Schuetzler et al., 2019), evoke particularly strong anthropomorphic responses among users, upon which they are able to build PSI.

The possibility that users could form a particular relationship with AI agents due to new anthropomorphic features has started to attract scholarly attention. For instance, Han and Yang (2018) argue that users could form para-social relationships with their chatbots on the basis of names, human-like verbal reactions, and personality, all of which are important antecedents of satisfaction and continuance intentions. Moreover, they report the negative influence of users’ perceived privacy risks on PSI with AI agents (Han & Yang, 2018). That is, users’ PSI experiences are weaker when the privacy risk is considered to be greater. Thus, PSI appears to be a primary psychological response that is affected by AI agents’ features while simultaneously resulting in user satisfaction. Accordingly, we propose the following hypothesis:

H2: Para-social interaction with AI agents will be negatively associated with users’ privacy concerns.

Social presence has been described as a major psychological effect brought about by human-agent communication. In short, social presence is a psychological state in which an individual perceives themself to exist within an interpersonal environment (Bailenson et al., 2002). A number of studies have attempted to identify agent features as important for altering the extent of the social presence experience, primarily because social presence is demonstrated to be a key predictor of favorable attitudes and behaviors by affecting emotional closeness and social connectedness (Go & Sundar, 2019). Greater emotional closeness and social connectedness will naturally lead to a positive evaluation of the AI agents; therefore, many studies focus on the predictors and consequences of social presence (e.g., Go & Sundar, 2019; N. Lee & Kwon, 2013; Sundar et al., 2015).

In particular, a high level of contingency in the exchange of messages with an AI agent is postulated to increase the user’s social presence. Sundar et al. (2015) found that higher message interactivity in a chat context heightens a feeling of the other’s presence. Similarly, Go and Sundar (2019) discovered that the ability of a chatbot to deliver contingent and interactive messages compensated for its impersonal nature. This suggests the experience of social presence is dictated not only by technological features but also by the dynamic nature of message exchange. It can be inferred that both the quantitative and qualitative aspects of information exchange are deeply linked to the experience of social presence. In this context, the experience of social presence can be assumed to be more important for the self-disclosure of sensitive and emotional information, such as feelings that are more intimate, than for the disclosure of factual information such as names (Taddicken, 2014). In this study, it is suggested that users’ experience of social presence should play a key role in the user’s sensitivity to disclosing their private information after using an AI agent with different motivations. This leads to the following hypothesis:

H3: The social presence of AI agents will be negatively associated with users’ privacy concerns.

AI Agent Users’ Motivations

An AI agent is used to gratify various user needs, with the user’s motivation accounting for any ensuing psychological reaction. While little research has investigated AI-agent users’ motivations, Cho et al. (2019) broadly categorized the nature of virtual assistant task types into utilitarian and hedonic, depending on the topics that arise in the interaction. Hijjawi et al. (2016) suggested that conversations with a system typically consist of a combination of question and non-question statements that rely on factors such as topic or context (for example, informational vs. entertainment use). Brandtzæg and Følstad (2018) classified AI-agent users’ motivations into “productivity” (effectiveness/efficiency) in conducting tasks such as access to specific content, “entertainment and social experiences,” and “novelty.” Their results revealed that the majority of users seek either assistance or information to enhance the effectiveness or efficiency of their productivity, whereas others do so for fun (Brandtzæg & Følstad, 2018). The novelty aspect emphasizes an interest in new media technologies. Although AI agents generally serve a wider range of purposes for their users, researchers have not sufficiently investigated the role that user motivation plays when using AI agents. The aim of this study is to provide a more comprehensive and generalizable catalog of user motivations to enhance understanding of the various psychological effects arising from use of AI agents.

At the same time, motivations that underlie the sharing of personal information should resonate with the privacy calculus in human-computer interaction as well as interpersonal communication. Social media research provides evidence that supports the possibility of a meaningful association between users’ motivations and privacy concerns (Hallam & Zanella, 2017; Heravi et al., 2018). For example, Slater (2007) found that the relationship between social gratification and information self-disclosure is reciprocal, following a reinforcing spiral process. When a user is hedonically motivated (rather than functionally motivated), the meaning and benefit gained from self-disclosure become widely different. Taddicken (2014) also illustrated the possible role played by user motivation on the association between relational experiences and privacy concerns. Therefore, those who have different motivations for using AI agents likely have varying levels of privacy concerns. This leads to the following research question and subsequent hypothesis:

RQ1: What is the motivation for using AI agents?

H4: Different types of motivation for using AI agents will significantly moderate the relationship between factors related to privacy concerns and users’ privacy concerns.

H4a: All motivations for using AI agents will significantly moderate the relationship between users’ intimacy and privacy concerns.

H4b: All motivations for using AI agents will significantly moderate the relationship between users’ PSI and privacy concerns.

H4c: All motivations for using AI agents will significantly moderate the relationship between users’ social presence and privacy concerns.

Methods

Procedure and Sample

Focus Group Interviews

As mentioned in the literature review, studies concerning AI agents have recently increased, although there is still a lack of studies on the motivations for their use. Accordingly, in this study, the questionnaire was developed by conducting focus group interviews (FGIs) about motivations for using AI agents, the results of which were then enriched with the literature on this topic. The FGIs thus enabled us to develop items for measuring the motives for using AI agents. We selected FGIs because of the potential for participants to interact and generate ideas beyond what each individual can contribute (Carey & Asbury, 2016). Furthermore, it is possible to observe a significant amount of interaction on a particular topic within a limited time. We conducted the FGIs according to Weller's (1998) “structured interviewing and questionnaire construction.” The FGIs were conducted with each group from March 6 to 9, 2020.

The Interviewees

The recruitment of interview respondents¹ was supported by a marketing agency. Interviewees comprised 36 users (male and female) aged from 17 to 50 years with experience using AI agents.

Of these, 16 participants had used AI agents over a long period (at least six months). Because AI agents are multifunctional, it was considered highly likely that various motives for using them would be revealed, and that participants with longer AI agent experience would be more likely to mention a variety of motives for use.

Table 1. Socio-Demographic Information of Interviewees—Extended Use (N = 16).

| Age | Gender: Males | Gender: Females | AI agent usage period: Six months to less than a year | AI agent usage period: More than a year |
| --- | --- | --- | --- | --- |
| Under 19 | 1 | 4 | 3 | 2 |
| 20–30 | 4 | 2 | 5 | 1 |
| 31–40 | 2 | 2 | 1 | 3 |
| 41–50 | 0 | 1 | 1 | 0 |
| Total | 7 | 9 | 10 | 6 |

The Interview Process

As Table 1 shows, participants were divided into four groups according to age as follows: teens (n = 5), 20–30 (n = 6), 31–40 (n = 4), and 41–50 (n = 1). Each interview lasted approximately 60–70 minutes, and refreshments were provided to create a comfortable atmosphere in the seminar room. During the FGIs, we acted as moderators, posing questions and adding prompts with follow-up questions.

The FGI consisted of two stages. In the first stage, a “free listing” interview was conducted in which interviewees freely wrote down why they used AI agents. During this stage, each of the 16 interviewees identified 10 motivations (i.e., items) for using AI agents based on their own experiences. Of the 160 items (16 interviewees × 10 items) collected through free listing, 42 were mentioned by at least two interviewees and were selected for the next stage. Free listing helps to pose key questions in the second stage of the interview (Krueger & Casey, 2014).
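The screening step described above (keeping only items named by at least two interviewees) can be sketched as a simple tally. This is a minimal illustration, not the authors' procedure: the function name `shared_items`, the participant labels, and the example items are all hypothetical, and it assumes that identical wording marks the same item.

```python
from collections import Counter

def shared_items(free_lists, min_mentions=2):
    """Return items named by at least `min_mentions` different interviewees."""
    counts = Counter()
    for items in free_lists.values():
        # Count each item once per interviewee, even if it was written twice.
        counts.update(set(items))
    return sorted(item for item, n in counts.items() if n >= min_mentions)

# Hypothetical free lists; the study collected 10 items from each of 16 interviewees.
free_lists = {
    "P01": ["listen to music", "check the weather", "pass time"],
    "P02": ["listen to music", "order food", "check the weather"],
    "P03": ["set an alarm", "listen to music", "pass time"],
}

print(shared_items(free_lists))
```

In practice, free-listed items rarely match word for word, so a manual coding pass to merge synonymous items would normally precede a tally like this.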

During the second stage², the researchers explained the 42 items one by one to each group of interviewees in turn and counted the items that corresponded to interviewees’ own motivations for using AI agents. Then, to elicit the items exhaustively and exclusively, the items were selected within each group in order of mention, starting with the least-mentioned motivations for using AI agents. As a result, 15 items were selected in Group A and 13 items in Group B. However, items that the researchers judged to be problematic³ were excluded (for example, “I have an AI agent at home, so I am just using it”). After qualitatively refining the interviewees’ items, we arrived at a final set of 20 items from which the questionnaire could be developed to examine why users employ AI agents.

Measures

An online survey of 562 respondents in Korea was conducted from May 4 to 29, 2020, with respondents recruited through a professional research company. Invitation emails were sent at random to members of the company’s online panel in line with the age and gender proportions of the Korean population.

The survey was conducted to investigate individuals’ motivations for using AI agents and the factors related to privacy concerns while using AI agents. We focused on three measures: (1) PSI, social presence, and intimacy as independent variables, (2) variables for motivation for using AI agents, and (3) AI agent users’ privacy concerns. The survey took approximately 35−45 minutes.

Independent Variables⁴

Horton and Wohl (1956) defined PSI as a “simulacrum of conversational give-and-take” (p. 215) that users experience in response to a media performer (the “persona”) in a media exposure situation. Here, PSIs—adopted and modified from Dibble and colleagues (2016)—were measured with eight items on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree; Cronbach α = .88). The original PSI Scale (Dibble et al., 2016) was oriented to capture reactions toward “Amy” (a romantic partner for the participants in Dibble et al.’s study). Therefore, the current study adjusted the wording to reflect conversation with AI agents used for this study. The measurement included items such as, I talk to an AI agent like a friend, I like to talk to an AI agent, and I like hearing the voice of an AI agent.

Short et al. (1976) defined social presence as “the degree of salience of the other person in the interaction and the consequent salience of the interpersonal relationship” (p. 65). Social presence was measured with eight items adopted and modified from Nowak and Biocca (2003) on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree; Cronbach α = .81). The measurement included items such as, An AI agent seems to accelerate interaction and I feel warm interacting with an AI agent. Nowak and Biocca (2003) examined, through experiments, the social presence that arises when a respondent interacts with an imagined partner in a virtual environment. Thus, this study revised the wording of the items to assume a conversation with AI agents (rather than a virtual partner) in a survey rather than an experimental setting.

Hinde (1978) defined intimacy as “the number of different facets of the personality which are revealed to the partner and to what depth” (p. 378). Intimacy, adopted from Berscheid et al. (1989), was measured using five items on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree; Cronbach α = .94). Items included, I feel emotionally close to an AI agent, An AI agent uses supportive statements to build favor with me, and I develop a sense of familiarity with an AI agent. Because Berscheid et al.’s (1989) definition of intimacy is based on romantic, friend, and family relationships, we modified the wording in this study so that their intimacy scale could be used to measure the intimacy formed between AI agents and users.
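The reliability coefficients reported for these scales are Cronbach's α values. As a minimal sketch of how such a coefficient is computed, the function below applies the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The response matrix is hypothetical and is not the study's data; the function name `cronbach_alpha` is an illustrative choice.

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: one row per respondent, each row a list of item scores."""
    k = len(responses[0])                       # number of items in the scale
    items = list(zip(*responses))               # transpose to per-item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

# Hypothetical 7-point Likert answers from five respondents on three items.
responses = [
    [2, 3, 2],
    [4, 4, 5],
    [5, 5, 4],
    [6, 7, 6],
    [3, 3, 3],
]

print(round(cronbach_alpha(responses), 2))
```

Sample variances (n − 1 denominator) are used for both the items and the totals; using population variances throughout would give the same α, as long as the choice is consistent.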

AI Agent Motivation Variables

For the moderating variables, motivations for using an AI agent were developed through the FGIs and measured with 20 items (see Table 3) on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree). Respondents were asked questions about their motivation for using AI agents; for example, To get to know the latest issues in our society, To have a conversation with an AI agent, To use an audio book service, and To manage the schedule.

Privacy Concerns

For the dependent variable, privacy concerns were measured with four items adapted from Mehta et al. (2015) on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree; Cronbach α = .82). These items included, While using AI agents, an AI agent seems to have access to my personal information, While using an AI agent, I am uncomfortable that my personal information will be leaked, Using an AI agent increases the possibility of infringement of personal information, and While using AI agents, I think that AI agent system manufacturers can know my personal information.

Results

Hypotheses Tests

Table 2 presents the results for the linear regression analysis performed to examine the potential relationships between the measured variables. The regression model predicted AI agent users’ privacy concerns that result from using AI agents as a function of the socio-demographic variables and AI technology usage (Block 1), the motivations for using AI agents (Block 2), the independent variables (Block 3), and the moderating effects between the motivation variables for using AI agents and independent variables on predicting privacy concerns (Block 4).
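The block-wise structure of this model can be sketched as a hierarchical regression in which each block adds predictors and the change in R² is tracked. The example below is a minimal illustration under stated assumptions: the data are synthetic and deterministic (not the survey data), there is only one variable per block, and the helper names `ols` and `r_squared` are hypothetical. It uses plain normal equations rather than a statistics package.

```python
def ols(X, y):
    """Least-squares coefficients via the normal equations (X'X)b = X'y."""
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(p):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]

def r_squared(X, y):
    b = ols(X, y)
    fitted = [sum(bi * xi for bi, xi in zip(b, row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

# Synthetic toy data: a control, a motivation score, a relational predictor,
# and deterministic pseudo-noise. All values are assumptions for illustration.
n = 30
ctrl = [i % 3 for i in range(n)]
motiv = [(i % 5) - 2 for i in range(n)]
pred = [((i * 3) % 7) - 3 for i in range(n)]
noise = [(((i * 11) % 13) - 6) / 20 for i in range(n)]
y = [4 + 0.1 * c + 0.2 * m - 0.2 * x + 0.1 * x * m + e
     for c, m, x, e in zip(ctrl, motiv, pred, noise)]

blocks = {
    "Block 1 (controls)": [ctrl],
    "Block 2 (+ motivation)": [ctrl, motiv],
    "Block 3 (+ predictor)": [ctrl, motiv, pred],
    "Block 4 (+ interaction)": [ctrl, motiv, pred,
                                [x * m for x, m in zip(pred, motiv)]],
}

r2_by_block = []
previous = 0.0
for name, cols in blocks.items():
    X = [[1.0, *vals] for vals in zip(*cols)]   # prepend the intercept column
    r2 = r_squared(X, y)
    r2_by_block.append(r2)
    print(f"{name}: R2 = {r2:.3f}, delta R2 = {r2 - previous:.3f}")
    previous = r2
```

Because the blocks are nested, R² cannot decrease from one block to the next; the increment at each step is what a table of this kind reports as ΔR².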

With regard to H1 (Block 3, Table 2), two of the three independent variables, intimacy (B = 0.11, SE = 0.05, p = .046) and social presence (B = −0.18, SE = 0.06, p = .007), were significantly associated with privacy concerns when the demographic variables, daily AI technology use (Block 1, Table 2), and the motivations for using AI agents (Block 2, Table 2) were controlled, which was consistent with our first hypothesis; PSI was not (B = −0.012, SE = 0.06, p = .063). In other words, when AI agent users feel close to AI agents, they are more concerned about privacy exposure. Conversely, when they perceived that AI agents had a high social presence, their concerns about privacy exposure were comparatively lower. Therefore, H1 and H2 were supported.

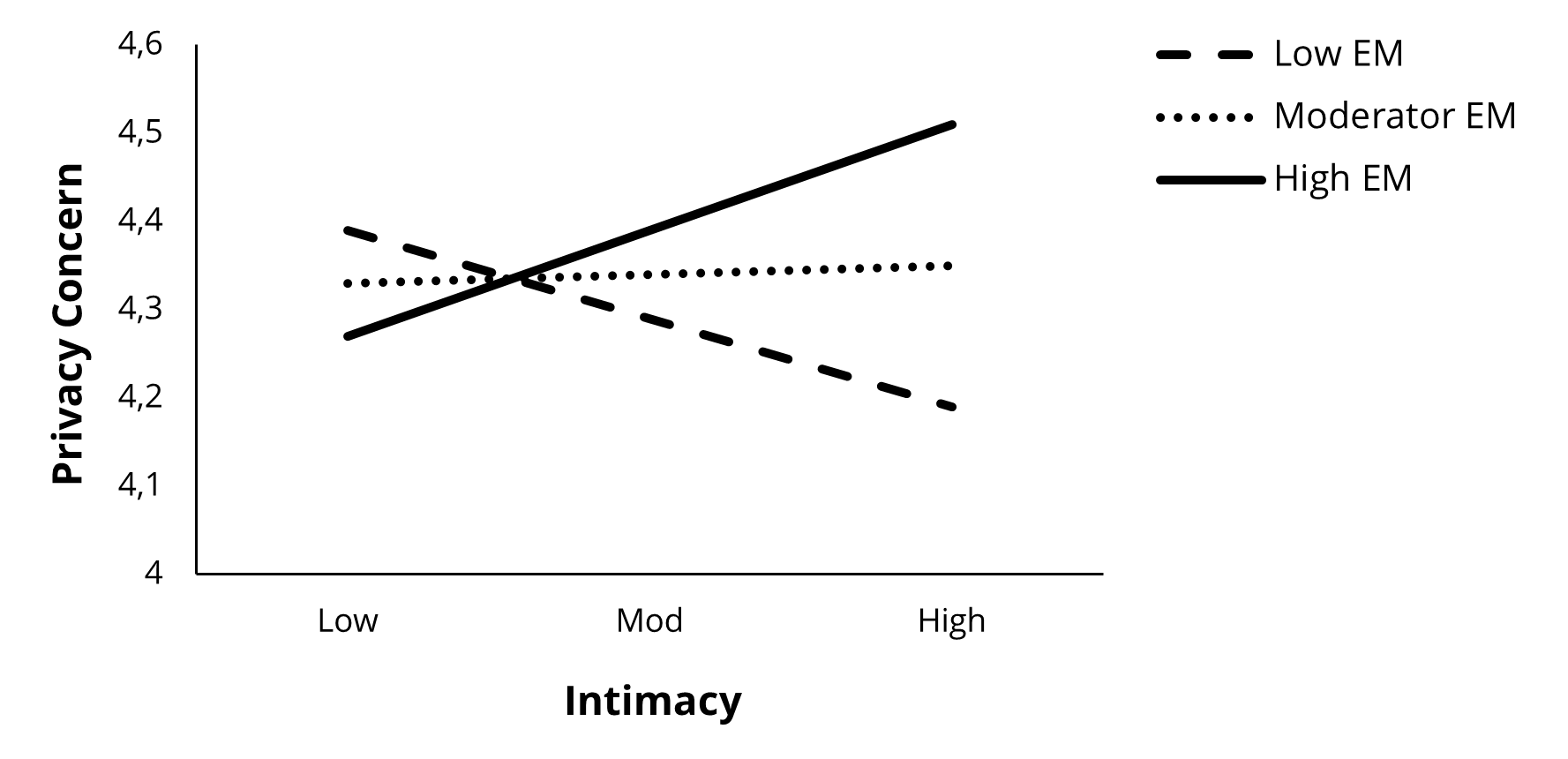

Regarding H4 (Block 4, Table 2), simple slope analysis revealed significant interaction effects between some of the independent variables and the motivation for using AI agents on AI agent users’ privacy concerns. As shown in Table 2, there was a significant interaction effect of intimacy × entertainment motivation (B = 0.10, SE = 0.04, p = .004, Fig. 1). Thus, it can be inferred that the more AI agent users use AI agents to satisfy their entertainment needs, the more they feel intimate with AI agents, and the more hesitant they are to risk their privacy.
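To make the logic of a simple slope concrete: in a moderated regression, the slope of the predictor at a given moderator value is the predictor's coefficient plus the interaction coefficient times that moderator value. The sketch below plugs in the reported coefficients for intimacy (B = 0.11) and intimacy × EM (B = 0.10) and the reported SD of EM (1.25). It is an illustration, not a reanalysis: it assumes EM was mean-centered and treats the Block 3 intimacy coefficient as the slope at the mean of EM, neither of which is stated in the text. The function name `simple_slope` is hypothetical.

```python
def simple_slope(b_predictor, b_interaction, moderator_value):
    """Conditional slope of the predictor at a given (centered) moderator value."""
    return b_predictor + b_interaction * moderator_value

b_intimacy = 0.11        # reported B for intimacy (assumed slope at mean EM)
b_intimacy_x_em = 0.10   # reported B for intimacy x EM
sd_em = 1.25             # reported SD of EM

for label, z in [("-1 SD", -1), ("mean", 0), ("+1 SD", 1)]:
    slope = simple_slope(b_intimacy, b_intimacy_x_em, z * sd_em)
    print(f"EM at {label}: slope of intimacy = {slope:+.3f}")
```

Under these assumptions, the intimacy slope is close to zero at low entertainment motivation and becomes more positive as entertainment motivation rises, which is the pattern the interaction term describes.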

Table 2. Results of Ordinary Least Squares Regressions Predicting Privacy Concern (N = 562).

| Variables | B (SE) | p |
| --- | --- | --- |
| Block 1. Controls | | |
| (Intercept) | 4.14 (0.22) | <.001 |
| Socio-demographic | | |
| Gender (male = 1) | −0.05 (0.08) | .252 |
| Age | 0.01 (0.00) | .519 |
| Education | 0.13 (0.05) | .009 |
| Daily AI use | | |
| Time on AI usage | −0.07 (0.05) | .159 |
| R² | .01 | .062 |
| Block 2. Motivations | | |
| EM | 0.19 (0.04) | <.001 |
| IM | 0.19 (0.04) | <.001 |
| PT | 0.01 (0.03) | .326 |
| ΔR² | .01 | .034 |
| Block 3. Independent variables | | |
| Intimacy | 0.11 (0.05) | .046 |
| Para-social interaction | −0.01 (0.06) | .283 |
| Social presence | −0.18 (0.06) | .007 |
| ΔR² | .02 | .044 |
| Block 4. Independent variables × Moderator | | |
| Intimacy × EM | 0.10 (0.04) | .004 |
| Para-social interaction × EM | 0.16 (0.07) | <.001 |
| Social presence × EM | −0.18 (0.05) | <.001 |
| Intimacy × IM | −0.25 (0.05) | <.001 |
| Para-social interaction × IM | −0.04 (0.05) | .261 |
| Social presence × IM | −0.07 (0.05) | .445 |
| Intimacy × PT | 0.15 (0.04) | .080 |
| Para-social interaction × PT | 0.13 (0.04) | .249 |
| Social presence × PT | −0.05 (0.04) | .311 |
| ΔR² | .10 | <.001 |
| Total R² | .135 | <.001 |

Note. Coefficients indicate non-standardized OLS regression coefficients; standard errors are given in parentheses. EM = Entertainment motivation, IM = Instrumental motivation, PT = Passing time.

Figure 1. Moderation Effect of Entertainment Motivation (EM) on the Association Between Intimacy and AI Agent User’s Privacy Concerns.

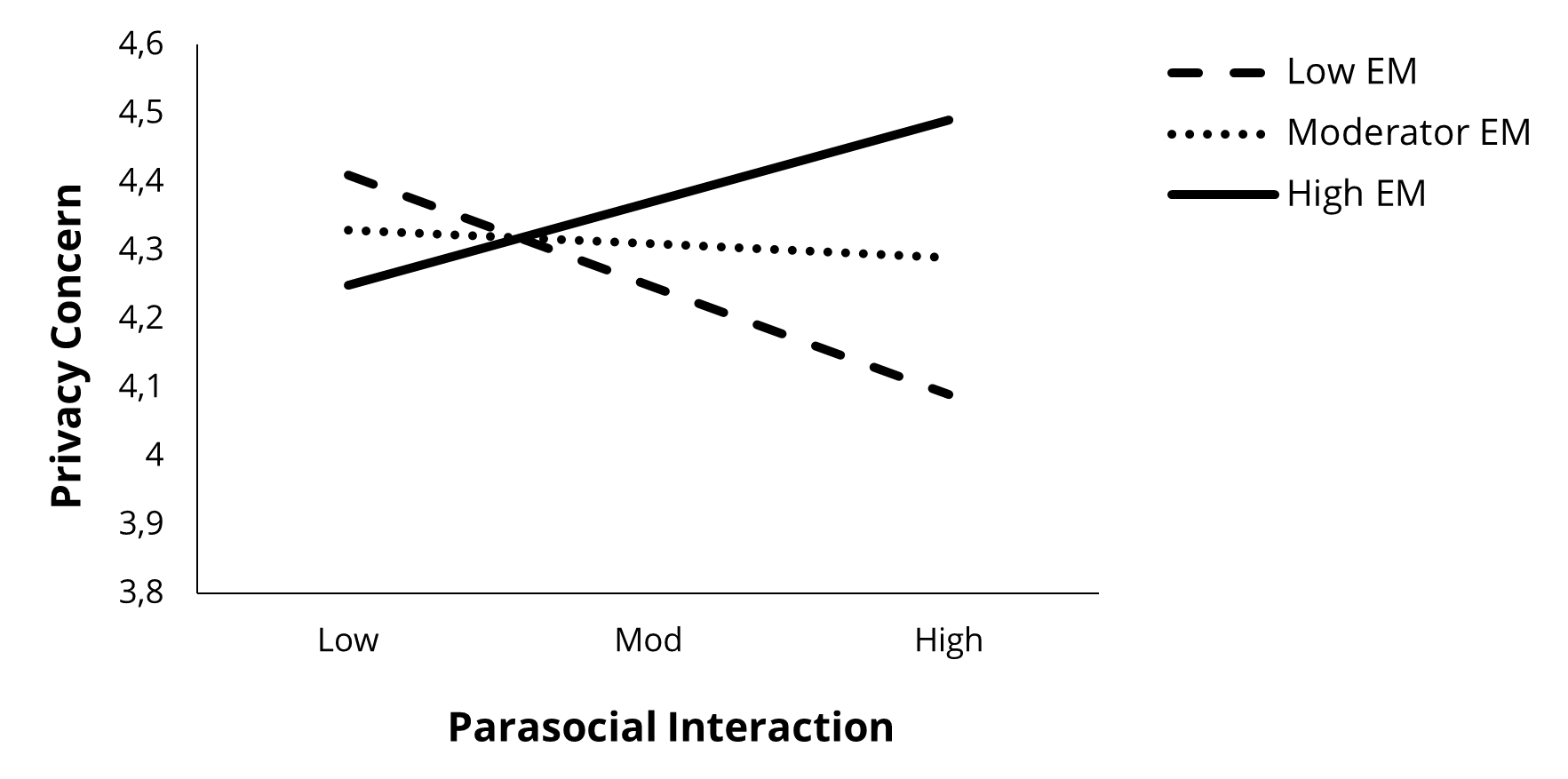

The results also demonstrated a significant interaction effect of PSI × entertainment motivation (B = 0.16, SE = 0.07, p < .001, Fig. 2). When users employ AI agents to satisfy their entertainment needs, the more they talk with or think about AI agents in a human-like way, the more sensitive they may become to privacy exposure.

Figure 2. Moderation Effect of Entertainment Motivation (EM) on the Association Between Parasocial Interaction and AI Agent User’s Privacy Concerns.

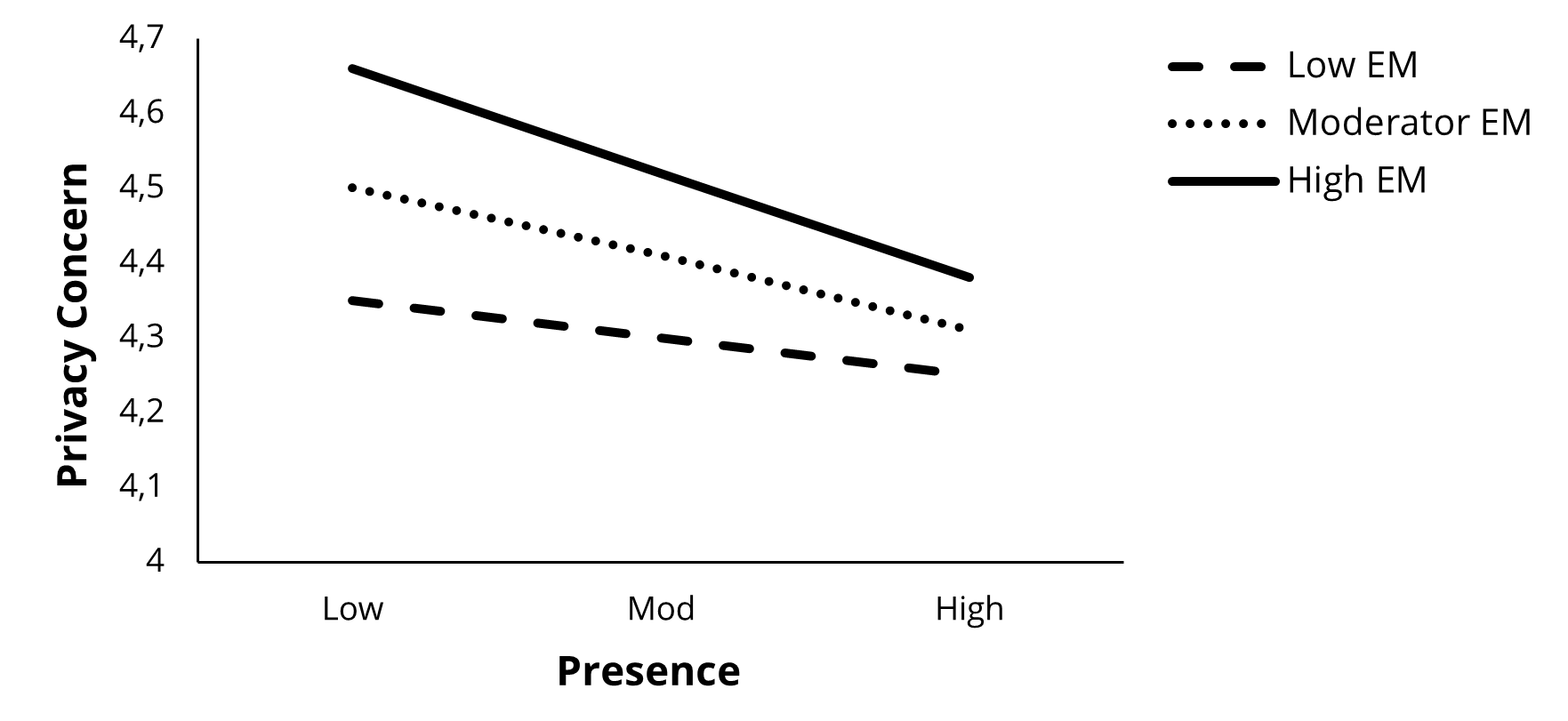

Meanwhile, the results suggested an interaction effect of social presence × entertainment motivation (B = −0.18, SE = 0.05, p < .001, Fig. 3). When users employ AI agents to satisfy their entertainment needs, the more often they perceive themselves as actually interacting with AI agents, the lower their concerns about privacy exposure tend to be.

Figure 3. Moderation Effect of Entertainment Motivation (EM) on the Association Between Social Presence and AI Agent User’s Privacy Concerns.

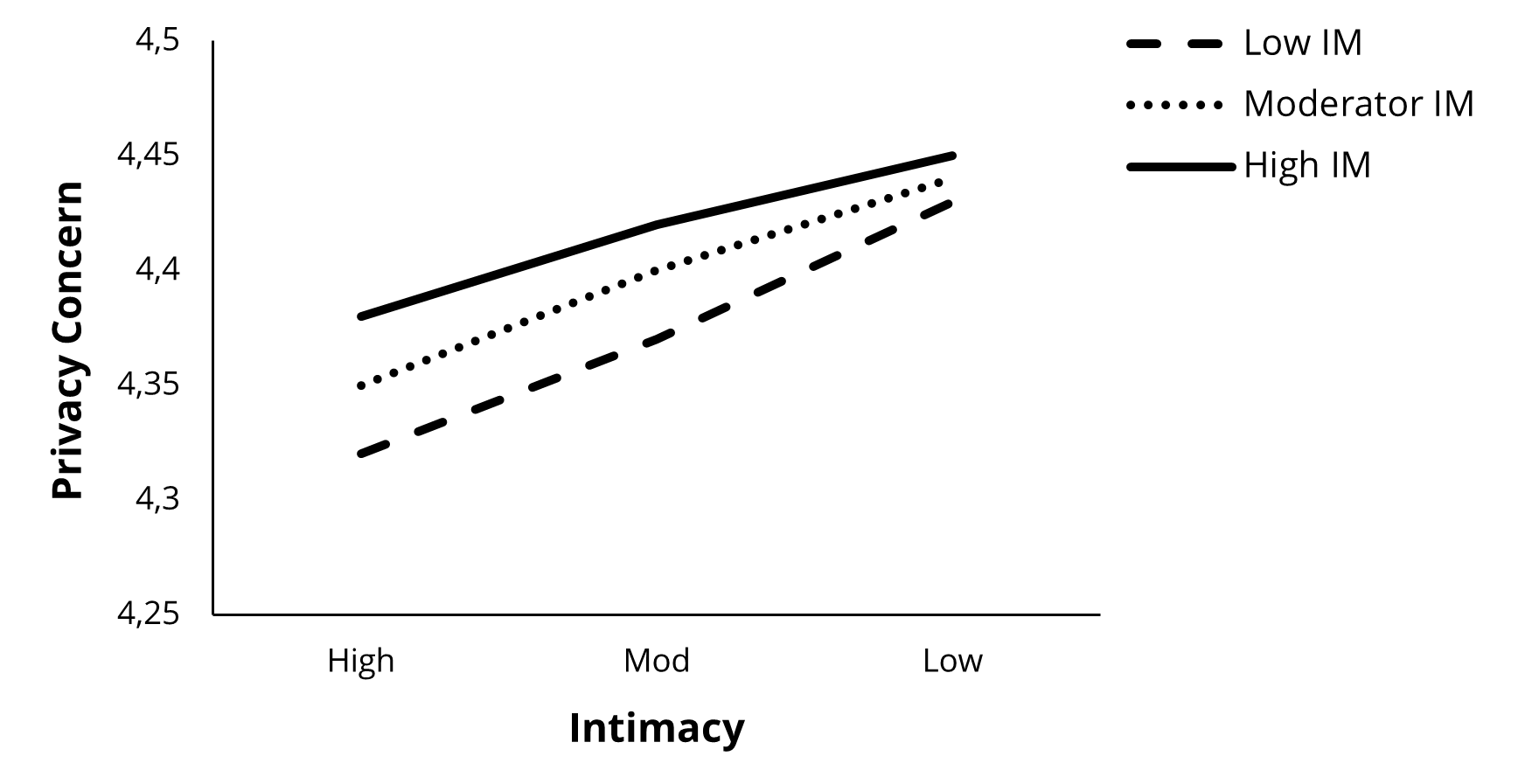

For AI agent users with high instrumental motivation, the results show that there was a significant interaction effect of intimacy × instrumental motivation (B = −0.25, SE = 0.05, p < .001, Fig. 4). This suggests that the greater the intimacy AI agent users feel toward AI agents when using them functionally, the fewer concerns they have about their privacy.

Figure 4. Moderation Effect of Instrumental Motivation (IM) on the Association Between Intimacy and AI Agent User’s Privacy Concerns.

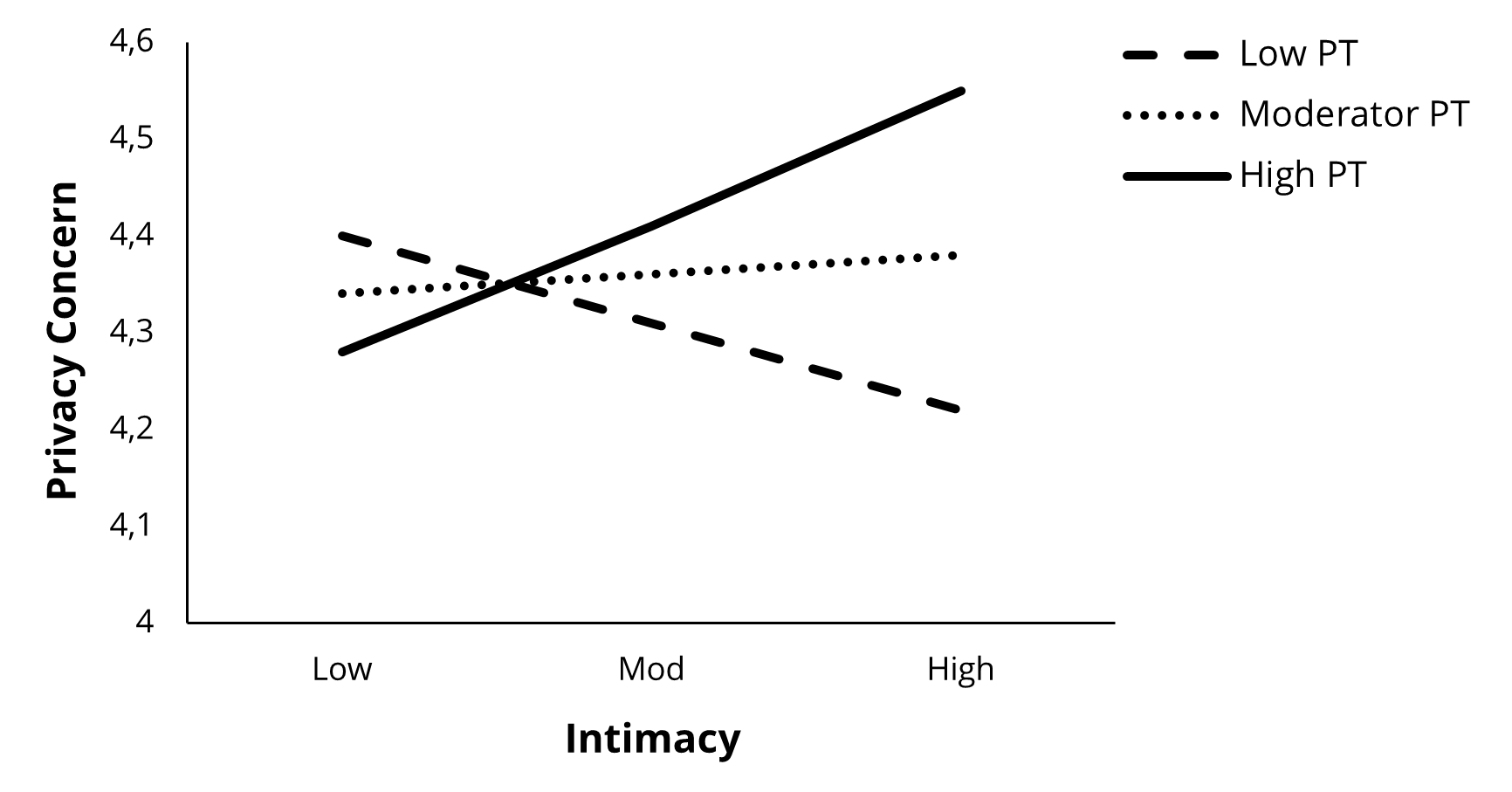

Finally, the findings indicated a marginally significant interaction effect of intimacy × passing time (B = 0.15, SE = 0.04, p = .080, Fig. 5). When users employ AI agents to pass time, the more intimate they feel with the AI agent, the greater their concern about privacy exposure.

Figure 5. Moderation Effect of Passing Time (PT) on the Association Between Intimacy and AI Agent User’s Privacy Concerns.

Taken together, the results of our analyses partially support H4a, H4b, and H4c.

Testing the Research Question

Because there is little previous research that explores the motivations for using AI agents, we conducted FGIs and explored the 20 items related to individuals’ motivation for using AI agents.

To address our research question (RQ1), we performed exploratory factor analysis (EFA) to investigate the distinct motivations for using AI agents. As shown in Table 3, a three-factor solution was identified, and five of the 20 items were removed according to Howard’s (2016) .40–.30–.20 factor loading cutoff rule. These factors were labeled as follows: entertainment motivation (EM: seven items), instrumental motivation (IM: six items), and passing time (PT: two items). The terms and definitions were then adapted from previous studies (O’Brien, 2010; Rubin & Step, 2000; Schwartz & Wrzesniewski, 2016) to name and define these motivations in the context of computer-human interaction. These three factors accounted for 59.35% of the variance and exhibited strong reliability (i.e., the Cronbach's alpha and Pearson's r values were greater than 0.85 and 0.60, respectively), which indicates good face validity of the measurement items. As shown in Table 4, there were significant correlations between the factors, suggesting that such motivations are complementary rather than mutually exclusive.
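As a rough sketch of how a loading cutoff of this kind screens items, the function below encodes one common reading of the .40–.30–.20 rule: the primary loading should be at least .40, the largest alternative loading should be no more than .30, and the gap between them should be at least .20. The loadings are hypothetical (not those of Table 3), the handling of values exactly on a boundary is an assumption, and the function name `passes_40_30_20` is illustrative.

```python
def passes_40_30_20(loadings):
    """Check one item's loadings against the .40-.30-.20 cutoffs."""
    ordered = sorted((abs(v) for v in loadings), reverse=True)
    primary, alternative = ordered[0], ordered[1]
    # Inclusive boundaries are an assumption of this sketch.
    return (primary >= 0.40
            and alternative <= 0.30
            and (primary - alternative) >= 0.20)

# Hypothetical loadings on three factors for four items.
candidate_items = {
    "item A": (0.78, 0.12, 0.05),   # clean primary loading
    "item B": (0.45, 0.38, 0.10),   # cross-loading above .30
    "item C": (0.35, 0.10, 0.08),   # primary loading below .40
    "item D": (0.48, 0.29, 0.02),   # gap between loadings below .20
}

retained = [name for name, loads in candidate_items.items()
            if passes_40_30_20(loads)]
print(retained)
```

In an actual EFA, items are typically removed one at a time and the analysis re-run, since loadings shift whenever an item is dropped; a single pass like this only flags candidates.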

Table 3. Factor Analysis Results for AI Agent Motivations (N = 562).

| Questionnaire items | Factor EM | Factor IM | Factor PT | M | SD |
| --- | --- | --- | --- | --- | --- |
| To get to know the latest issues in our society | .58 | .28 | .24 | 3.92 | 1.56 |
| To order food | .77 | .22 | .12 | 3.47 | 1.63 |
| To enjoy the game | .75 | .13 | .16 | 3.32 | 1.63 |
| To read a book | .76 | .25 | .13 | 3.46 | 1.62 |
| To have a conversation with a speaker | .62 | .11 | .40 | 3.50 | 1.66 |
| To purchase goods | .82 | .20 | .05 | 3.38 | 1.64 |
| To use audio book service | .69 | .11 | .07 | 3.22 | 1.82 |
| To search the Internet | .14 | .57 | .34 | 4.90 | 1.37 |
| To manage the schedule | .23 | .65 | .22 | 4.71 | 1.57 |
| To listen to music | .05 | .77 | .21 | 5.02 | 1.49 |
| To check the time | .21 | .74 | .18 | 4.63 | 1.62 |
| To connect to music services | .23 | .75 | .16 | 4.70 | 1.65 |
| To check the weather forecast | .19 | .79 | .10 | 4.84 | 1.65 |
| To rest alone | .23 | .27 | .79 | 4.14 | 1.53 |
| To spend time | .15 | .29 | .83 | 4.50 | 1.45 |
| Eigenvalue | 5.51 | 4.40 | 1.96 | | |
| % Variance explained | 27.56 | 22.01 | 9.78 | | |
| Cronbach’s α | .895 | .862 | | | |
| Pearson’s r | | | .673 (p < .001) | | |

Note. The 15 items listed in the table are the clearly differentiated items; five items were excluded. EM: Entertainment motivation, IM: Instrumental motivation, PT: Passing time.

Table 4. Zero-Order Correlations Among Key Study Variables.

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1. Gender | 1 | | | | | | | | | | |
| 2. Edu | .070 | 1 | | | | | | | | | |
| 3. Age | .041 | .095* | 1 | | | | | | | | |
| 4. AU | .022 | .029 | .020 | 1 | | | | | | | |
| 5. EM | .134** | .035 | .156** | .112** | 1 | | | | | | |
| 6. IM | −.006 | .032 | .080 | .251** | .499** | 1 | | | | | |
| 7. PT | .043 | −.041 | .124** | .144** | .443** | .525** | 1 | | | | |
| 8. PSI | .077 | −.029 | .174** | .167** | .578** | .465** | .457** | 1 | | | |
| 9. PN | −.012 | −.109** | .173** | .094* | .292** | .035 | .101* | .470** | 1 | | |
| 10. IT | .048 | −.065 | .201** | .129** | .496** | .220** | .273** | .646** | .713** | 1 | |
| 11. PC | −.033 | .083* | .017 | −.050 | .083* | .183** | .046 | .033 | −.058* | .048* | 1 |
| Mean | .49 | 2.84 | 39.9 | 1.85 | 3.46 | 4.80 | 4.32 | 4.18 | 4.03 | 3.63 | 4.39 |
| SD | .50 | .76 | 10.56 | .93 | 1.25 | 1.20 | 1.37 | 1.03 | .96 | 1.33 | .94 |

Note. Cell entries are two-tailed Pearson’s correlation coefficients. AU = AI usage, EM = Entertainment motivation, IM = Instrumental motivation, PT = Passing time, PSI = Para-social interaction, PN = Presence, IT = Intimacy, PC = Privacy concerns. *p < .05, **p < .01. N = 562.

Discussion

The findings of this study suggest that psychological aspects of close relationships (including intimacy, PSI, and social presence) offer valuable insights into understanding the relationship between AI agents and individuals’ privacy concerns in the newly emerging communication environment. The first aim of the study was to determine the motivations for AI agent use. The EFA results show that individuals used AI agents primarily to gratify three needs: EM, IM, and PT. The findings indicate that individuals mainly use AI agents to satisfy their various recreational needs (EM), to add efficiency to their own tasks related to their daily life (IM), and to rest (PT). In particular, the EFA results suggest that IM is well suited to the concept of perceived usefulness derived from the technology acceptance model (TAM; Davis, 1989), given that individuals believe AI agent use could enhance their ordinary task performance.

The second aim of the study was to examine whether intimacy, PSI, and social presence are significantly associated with AI agent users’ privacy concerns. Social presence was found to negatively predict privacy concerns. This means that individuals who feel a greater social presence with AI agents are less likely to worry about their privacy while disclosing their personal information to AI agents. Earlier social presence research (Go & Sundar, 2019; Taddicken, 2014) postulated that the experience of social presence affects not only the self-disclosure of individuals’ sensitive and emotional information but also the self-disclosure of factual information. This aligns with Lutz and Tamò-Larrieux’s (2021) focus on social influence to illuminate the nature of privacy concerns among AI users. Similarly, the significant association between social presence and privacy concerns confirmed in this study potentially suggests that an explanatory mechanism exists for the association between the social presence created by AI agents and the self-disclosure of private information. These results corroborate the CASA paradigm, which suggests that greater social presence simulated by AI-human interaction effectively mitigates user concerns over the security of communication.

Another intriguing result is that a higher level of intimacy was positively associated with users’ privacy concerns. According to disclosure intimacy processing (Ho et al., 2018; Lucas et al., 2014), computerized agents reduce impression management; consequently, individuals should experience a greater level of disclosure intimacy. However, the study outcome shows that this might not conform to the process by which intimacy influences individuals’ privacy concerns. One possible explanation is that AI agent users may feel relieved of the possibility of negative evaluations by AI agents, although they may have concerns that their private information may be transmitted through the computerized system. Even if this assumption is true, we should consider why users are less concerned about their privacy when social presence is greater. The answer may be found in the difference between the types of personal information associated with social presence and those associated with intimacy: it is possible that the information associated with intimacy is more sensitive than the information associated with social presence. Taken together, not all intimacy factors reduce privacy concerns: intimacy might increase privacy concerns depending on the type of information. These results support Gambino et al.’s (2020) suggestion that CASA theory has to expand to embrace the complication stemming from the user’s development of more specified scripts for agent-interaction following growing exposure to and familiarity with media agents. Recent research by Ha et al. (2021) reports that user privacy concerns were significantly affected by the sensitivity of personal information along with the type of intelligent virtual assistant (IVA).

We also examined whether the types of motivation moderate the associations of intimacy, PSI, and social presence with AI agent users’ privacy concerns. The findings confirm that users who experience greater personification of their AI agents are more likely to bear greater privacy concerns when their primary motivation is entertainment (Fig. 2). This result is somewhat paradoxical, because PSI is induced through the self-disclosure of information, which includes personal information. One possible explanation is that users with higher PSI levels are likely to regard an AI agent as a personable communication partner rather than only a media platform. That is, inducing anthropomorphic responses may serve as a double-edged sword when the agent is perceived to be human enough to evaluate and judge a user’s impressions.

Considering these results, the negative link between social presence and privacy concerns seems logical due to the reduced gap between high and low instrumental motivation for AI agent use, as illustrated by Fig. 3. These results indicate that AI agent users might be less vigilant with their personal information once they experience greater social presence through AI agent use. This finding aligns with previous research demonstrating that the experience of social presence can be even more important for self-disclosure of more intimate, sensitive, and emotional information (Taddicken, 2014). This also implies that AI agent users may grow more insensitive to their privacy concerns when experiencing a high level of social presence, even if they use AI agents as just an instrumental device. This is comparable to Ha et al.’s (2021) results, which show that the role of IVAs (as either partner or servant) was significantly associated with the level of the user’s privacy concerns.

Another remarkable finding of the current study is that the effect of intimacy differs depending on which motivation moderates the relationship between intimacy and privacy concerns. A higher level of intimacy is positively associated with privacy concerns when AI agents are used for entertainment motivations. However, heightened intimacy is negatively associated with privacy concerns for instrumental motivation. Users may feel freer to reveal their private information while forming intimacy with an AI agent when they operate the agent as a useful device serving various utilities in their daily life. These results imply that the motivations that drive AI agent use are important because those motivations influence the user’s valuation of personal information.

Conclusions

Guided by the CASA theory and individual differences in psychology concerning close relationships, we have identified key factors predicting privacy concerns in the context of AI agent use. We found that social presence with AI agents is negatively associated with privacy concern. The findings of this study empirically support this discourse on privacy concern. Conversely, intimacy with AI agents is positively associated with users’ privacy concerns. This inconsistency in the findings of the current study suggests that some factors in the psychology of close relationships play a more central role in evoking privacy concern. That is, individuals’ “human intimacy” with technical objects such as AI agents can render them more sensitive to privacy than “technical presence.”

We also found that it is not only the factors related to privacy concerns but also the combination of motivations for AI agent use that leads individuals to perceive privacy concerns differently. According to the current study, a higher level of intimacy is positively associated with privacy concerns when AI agents are used for entertainment motivations. AI agents are mainly installed on smart speakers and mobile phones. We use these devices for our own enjoyment in a variety of ways. Even if individuals enjoy AI technology, we hope that their secrets will not be revealed through its use.

Footnotes

1 Demographic information of the interviewees is presented in Table A2 of the Appendix.

2 To facilitate the progress of the FGIs, we divided the interviewees into two groups according to age: one group aged 30 and under (Group A: n = 11) and the other aged 31 and over (Group B: n = 5).

3 Group A finally selected 12 items after removing three problematic items. In Group B, five problematic items were removed and eight items were selected as appropriate items.

4 The complete items of the independent variables are presented in Table A1 of the Appendix.

Conflict of Interest

The authors have no conflicts of interest to declare.

Appendix

Table A1. Independent Variable Items—Parasocial Interaction, Social Presence and Intimacy.

| Variables | Items | Scales |
| --- | --- | --- |
| Parasocial Interaction | 1. I talk to an AI agent like a friend. | 1 = strongly disagree |
| | 2. I like to talk to an AI agent. | |
| | 3. I enjoy interacting with an AI agent. | |
| | 4. I like hearing the voice of an AI agent. | |
| | 5. I see an AI agent as a natural. | |
| | 6. I find an AI agent to be attractive. | |
| | 7. An AI agent makes me feel comfortable. | |
| | 8. An AI agent keeps me company while an AI agent is working. | |
| Social Presence | 1. An AI agent seems to accelerate interaction. | 1 = strongly disagree |
| | 2. I feel warm interacting with an AI agent. | |
| | 3. I want a deeper relationship with an AI agent. | |
| | 4. I am willing to share personal information with an AI agent. | |
| | 5. I want to make the conversation more intimate. | |
| | 6. I am interested in talking to an AI agent. | |
| | 7. I am able to assess an AI agent’s reaction to what I say. | |
| | 8. I feel like I’m having a face-to-face meeting while I am talking to an AI agent. | |
| Intimacy | 1. I feel emotionally close to an AI agent. | 1 = strongly disagree |
| | 2. I develop a sense of familiarity with an AI agent. | |
| | 3. An AI agent uses supportive statements to build favor with me. | |
| | 4. An AI agent influences how I spend my free time. | |
| | 5. An AI agent influences my moods. | |

Table A2. Socio-Demographic Information of Interviewees (N = 36).

| Age | Gender: Males | Gender: Females | AI agent usage period: Not more than a month | AI agent usage period: Two months to six months or less | AI agent usage period: Six months to less than a year | AI agent usage period: More than a year |
| --- | --- | --- | --- | --- | --- | --- |
| Under 19 | 4 | 6 | 2 | 3 | 3 | 2 |
| 20–30 | 5 | 5 | 1 | 3 | 5 | 1 |
| 31–40 | 4 | 6 | 1 | 5 | 3 | 1 |
| 41–50 | 2 | 4 | 1 | 4 | 1 | 0 |

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright © 2022 Cyberpsychology: Journal of Psychosocial Research on Cyberspace