Investigating differences in the attention distribution strategies of high and low media multitaskers through a two-dimensional game

Vol. 13, No. 3 (2019)

The rapid advancement of mobile computing devices and the ever-growing range of infotainment services they enable have cultivated high levels of media multitasking. Studies have considered the effects of this form of behaviour on cognitive control ability, with findings suggesting that chronic media multitasking is associated with reduced inhibitory control. In this study we advance knowledge in this domain by investigating differences in the attention distribution strategies of high and low media multitaskers (HMMs and LMMs) through a simple, two-dimensional game. A total of 1 063 university students completed a web-based survey concerning their media multitasking behaviour and played the 2D game. To contribute to the ecological validity of the study, the game was played within the respondent’s web browser, as part of the survey, at a time and place (and on a computer) of their choosing. During gameplay one of two different banners, both irrelevant to the game, was displayed adjacent to the game. No instructions were provided in relation to the banners. Our analysis considered respondents’ performance in the game in relation to both their media multitasking and the content of the banner displayed. Our findings suggest that while HMMs attend to distracting stimuli independent of their content or salience, LMMs are more selective. This selectivity enables improved primary task performance when distracting stimuli are deemed unimportant. Additionally, we found that LMMs generally recalled banner information more accurately after the game was played.

Media multitasking; attention distribution; inhibitory control; distraction; recall; 2D game

Daniel B. le Roux

Department of Information Science, Stellenbosch University, South Africa

Daniel B. le Roux (PhD) is a senior lecturer at Stellenbosch University in South Africa. His research concerns complex, self-organising socio-technical systems and, more recently, behavioural effects of technology adoption and use. Over the past five years he has focussed on the effects of the rapid advancement and adoption of mobile computing devices (smartphones in particular) and the manner in which the ubiquity and pervasiveness of these devices influence, firstly, behaviour in personal and social contexts, and, secondly, cognitive processes and capabilities. He established and heads up the Cognition and Technology Research Group (CTRG) at Stellenbosch University (see http://suinformatics.com/ctrg).

Douglas A. Parry

Department of Information Science, Stellenbosch University, South Africa

Douglas A. Parry is an early career researcher interested in the meeting point between people and technology. He currently holds a faculty position teaching and supervising within the undergraduate and postgraduate Socio Informatics programs as part of the Department of Information Science at Stellenbosch University. As a member of the Cognition and Technology Research Group (CTRG), he is involved in a number of research projects concerning the interplay between emerging digital technologies, human cognition and affective wellbeing. His research interests include: media effects, human computer interaction, and cyberpsychology. He is currently working towards a doctoral degree specifically focusing on media multitasking and cognitive control.

Barkley, R. A., & Fischer, M. (2011). Predicting impairment in major life activities and occupational functioning in hyperactive children as adults: Self-reported executive function (EF) deficits versus EF tests. Developmental Neuropsychology, 36, 137-161. https://doi.org/10.1080/87565641.2010.549877

Baumgartner, S. E., Lemmens, J. S., Weeda, W. D., & Huizinga, M. (2017). Measuring media multitasking: Development of a short measure of media multitasking for adolescents. Journal of Media Psychology, 29, 188-197. https://doi.org/10.1027/1864-1105/a000167

Baumgartner, S. E., & Sumter, S. R. (2017). Dealing with media distractions: An observational study of computer-based multitasking among children and adults in the Netherlands. Journal of Children and Media, 11, 295-313. https://doi.org/10.1080/17482798.2017.1304971

Benbunan-Fich, R., Adler, R. F., & Mavlanova, T. (2011). Measuring multitasking behavior with activity-based metrics. ACM Transactions on Computer-Human Interaction, 18(2), article 7. https://doi.org/10.1145/1970378.1970381

Cain, M. S., & Mitroff, S. R. (2011). Distractor filtering in media multitaskers. Perception, 40, 1183-1192. https://doi.org/10.1068/p7017

Carr, N. (2010). The Shallows: How the internet is changing the way we think, read and remember. London, UK: Atlantic Books Ltd.

Clapp, W. C., Rubens, M. T., & Gazzaley, A. (2010). Mechanisms of working memory disruption by external interference. Cerebral Cortex, 20, 859-872. https://doi.org/10.1093/cercor/bhp150

Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3, 201-215. https://doi.org/10.1038/nrn755

De la Casa, L. G., Gordillo, J. L., Mejías, L. J., Rengel, F., & Romero, M. F. (1998). Attentional strategies in Type-A individuals. Personality and Individual Differences, 24, 59-69. https://doi.org/10.1016/S0191-8869(97)00140-2

Deng, T., Kanthawala, S., Meng, J., Peng, W., Kononova, A., Hao, Q., . . . David, P. (2019). Measuring smartphone usage and task switching with log tracking and self-reports. Mobile Media & Communication, 7, 3-23. https://doi.org/10.1177/2050157918761491

Deuze, M. (2012). Media life. Cambridge, UK: Polity Press.

Dewan, P. (2014). Can I have your attention? Implications of the research on distractions and multitasking for reference librarians. The Reference Librarian, 55, 95-117. https://doi.org/10.1080/02763877.2014.880636

Dux, P. E., Tombu, M. N., Harrison, S., Rogers, B. P., Tong, F., & Marois, R. (2009). Training improves multitasking performance by increasing the speed of information processing in human prefrontal cortex. Neuron, 63, 127-138. https://doi.org/10.1016/j.neuron.2009.06.005

Dzubak, C. M. (2008). Multitasking: The good, the bad, and the unknown. Synergy: The Journal of the Association for the Tutoring Profession, 2, 1-12. Retrieved from https://www.myatp.org/synergy-volume-2/

Foehr, U. G. (2006). Media multitasking among American youth: Prevalence, predictors, and pairings. Washington, DC, US: The Henry J. Kaiser Family Foundation. Retrieved from https://eric.ed.gov/?id=ED527858

Forster, S., & Lavie, N. (2014). Distracted by your mind? Individual differences in distractibility predict mind wandering. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40, 251-260. https://doi.org/10.1037/a0034108

Fried, C. B. (2008). In-class laptop use and its effects on student learning. Computers & Education, 50, 906-914. https://doi.org/10.1016/j.compedu.2006.09.006

Gazzaley, A., & Rosen, L. D. (2016). The distracted mind: Ancient brains in a high-tech world. Cambridge, MA, US: The MIT Press.

Ishizaka, K., Marshall, S. P., & Conte, J. M. (2001). Individual differences in attentional strategies in multitasking situations. Human Performance, 14, 339-358. https://doi.org/10.1207/S15327043HUP1404_4

Jans, B., Peters, J. C., & De Weerd, P. (2010). Visual spatial attention to multiple locations at once: The jury is still out. Psychological Review, 117, 637-682. https://doi.org/10.1037/a0019082

Jeong, S.-H., & Fishbein, M. (2007). Predictors of multitasking with media: Media factors and audience factors. Media Psychology, 10, 364-384. https://doi.org/10.1080/15213260701532948

Jeong, S.-H., & Hwang, Y. (2012). Does multitasking increase or decrease persuasion? Effects of multitasking on comprehension and counterarguing. Journal of Communication, 62, 571-587. https://doi.org/10.1111/j.1460-2466.2012.01659.x

Kätsyri, J., Kinnunen, T., Kusumoto, K., Oittinen, P., & Ravaja, N. (2016). Negativity bias in media multitasking: The effects of negative social media messages on attention to television news broadcasts. PLoS ONE, 11(5), e0153712. https://doi.org/10.1371/journal.pone.0153712

Kirschner, P. A., & De Bruyckere, P. (2017). The myths of the digital native and the multitasker. Teaching and Teacher Education, 67, 135-142. https://doi.org/10.1016/j.tate.2017.06.001

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (1997). Motivated attention: Affect, activation, and action. In P. J. Lang, R. F. Simons, & M. T. Balaban (Eds.), Attention and orienting: Sensory and motivational processes (pp. 97-135). Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers.

Lang, A., & Chrzan, J. (2015). Media multitasking: Good, bad, or ugly? Annals of the International Communication Association, 39, 99-128. https://doi.org/10.1080/23808985.2015.11679173

Lawson, D., & Henderson, B. B. (2015). The costs of texting in the classroom. College Teaching, 63, 119-124. https://doi.org/10.1080/87567555.2015.1019826

le Roux, D. B., & Parry, D. A. (2017a). In-lecture media use and academic performance: Does subject area matter? Computers in Human Behavior, 77, 86-94. https://doi.org/10.1016/j.chb.2017.08.030

le Roux, D. B., & Parry, D. A. (2017b). A new generation of students: Digital media in academic contexts. In J. Liebenberg, & S. Gruner (Eds.), ICT Education: 46th Annual Conference of the Southern African Computer Lecturers' Association, SACLA 2017, Magaliesburg, South Africa, July 3-5, 2017, Revised Selected Papers (pp. 19-36). Cham, Switzerland: Springer International Publishing. https://doi.org/10.1007/978-3-319-69670-6_2

Leysens, J.-L., le Roux, D. B., & Parry, D. A. (2016). Can I have your attention, please?: An empirical investigation of media multitasking during university lectures. In Proceedings of the Annual Conference of the South African Institute of Computer Scientists and Information Technologists SAICSIT ’16 (Article No. 21). New York, NY, US: ACM. https://doi.org/10.1145/2987491.2987498

Lin, L. (2009). Breadth-biased versus focused cognitive control in media multitasking behaviors. Proceedings of the National Academy of Sciences, 106, 15521-15522. https://doi.org/10.1073/pnas.0908642106

Lottridge, D. M., Rosakranse, C., Oh, C. S., Westwood, S. J., Baldoni, K. A., Mann, A. S., & Nass, C. I. (2015). The effects of chronic multitasking on analytical writing. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 2967-2970). New York, NY, US: ACM. https://doi.org/10.1145/2702123.2702367

Matthews, K. A., & Brunson, B. I. (1979). Allocation of attention and the Type A coronary-prone behavior pattern. Journal of Personality and Social Psychology, 37, 2081-2090. https://doi.org/10.1037/0022-3514.37.11.2081

Miller, E. K., & Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annual Review of Neuroscience, 24, 167-202. https://doi.org/10.1146/annurev.neuro.24.1.167

Miyake, A., Friedman, N. P., Emerson, M. J., Witzki, A. H., Howerter, A., & Wager, T. D. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology, 41, 49-100. https://doi.org/10.1006/cogp.1999.0734

Moch, A. (1984). Type A and Type B behaviour patterns, task type and sensitivity to noise. Psychological Medicine, 14, 643-646. https://doi.org/10.1017/S0033291700015245

Monsell, S. (2003). Task switching. Trends in Cognitive Sciences, 7, 134-140. https://doi.org/10.1016/S1364-6613(03)00028-7

Moreno, M. A., Jelenchick, L., Koff, R., Eikoff, J., Diermyer, C., & Christakis, D. A. (2012). Internet use and multitasking among older adolescents: An experience sampling approach. Computers in Human Behavior, 28, 1097-1102. https://doi.org/10.1016/j.chb.2012.01.016

Oken, B. S., Salinsky, M. C., & Elsas, S. M. (2006). Vigilance, alertness, or sustained attention: Physiological basis and measurement. Clinical Neurophysiology, 117, 1885-1901. https://doi.org/10.1016/j.clinph.2006.01.017

Ophir, E., Nass, C., & Wagner, A. D. (2009). Cognitive control in media multitaskers. Proceedings of the National Academy of Sciences, 106, 15583-15587. https://doi.org/10.1073/pnas.0903620106

Oulasvirta, A., & Saariluoma, P. (2004). Long-term working memory and interrupting messages in human computer interaction. Behaviour & Information Technology, 23, 53-64. https://doi.org/10.1080/01449290310001644859

Parry, D. A. (2017). The digitally-mediated study experiences of undergraduate students in South Africa (Master’s thesis). Retrieved from https://hdl.handle.net/10019.1/102667

Parry, D. A., & le Roux, D. B. (2018a). Off-task media use in lectures: Towards a theory of determinants. In S. Kabanda, H. Suleman, & S. Gruner (Eds.), ICT Education: 47th Annual Conference of the Southern African Computer Lecturers' Association, SACLA 2018, Gordon's Bay, South Africa, June 18–20, 2018, Revised Selected Papers. Cham, Switzerland: Springer International Publishing.

Parry, D. A., & le Roux, D. B. (2018b). In-lecture media use and academic performance: Investigating demographic and intentional moderators. South African Computer Journal, 30, 85-107. https://doi.org/10.18489/sacj.v30i1.434

Pashler, H. (1994). Dual-task interference in simple tasks: Data and theory. Psychological Bulletin, 116, 220-244. https://doi.org/10.1037/0033-2909.116.2.220

Ralph, B. C. W., & Smilek, D. (2017). Individual differences in media multitasking and performance on the n-back. Attention, Perception, & Psychophysics, 79, 582-592. https://doi.org/10.3758/s13414-016-1260-y

Ralph, B. C. W., Thomson, D. R., Cheyne, J. A., & Smilek, D. (2014). Media multitasking and failures of attention in everyday life. Psychological Research, 78, 661-669. https://doi.org/10.1007/s00426-013-0523-7

Ralph, B. C. W., Thomson, D. R., Seli, P., Carriere, J. S. A., & Smilek, D. (2015). Media multitasking and behavioral measures of sustained attention. Attention, Perception, & Psychophysics, 77, 390-401. https://doi.org/10.3758/s13414-014-0771-7

Ravizza, S. M., Hambrick, D. Z., & Fenn, K. M. (2014). Non-academic internet use in the classroom is negatively related to classroom learning regardless of intellectual ability. Computers & Education, 78, 109-114. https://doi.org/10.1016/j.compedu.2014.05.007

Rosen, C. (2008). The myth of multitasking. The New Atlantis: A Journal of Technology and Society, 20, 105-110. Retrieved from https://www.thenewatlantis.com/publications/the-myth-of-multitasking

Rouis, S., Limayem, M., & Salehi-Sangari, E. (2011). Impact of Facebook usage on students’ academic achievement: Role of self-regulation and trust. Electronic Journal of Research in Educational Psychology, 9, 961-994.

Rubinstein, J. S., Meyer, D. E., & Evans, J. E. (2001). Executive control of cognitive processes in task switching. Journal of Experimental Psychology: Human Perception and Performance, 27, 763-797. https://doi.org/10.1037//0096-1523.27.4.763

Salvucci, D. D., Taatgen, N. A., & Borst, J. P. (2009). Toward a unified theory of the multitasking continuum: From concurrent performance to task switching, interruption, and resumption. In CHI 2009: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1819-1828). New York, NY, US: ACM. https://doi.org/10.1145/1518701.1518981

Snyder, H. R., Miyake, A., & Hankin, B. L. (2015). Advancing understanding of executive function impairments and psychopathology: Bridging the gap between clinical and cognitive approaches. Frontiers in Psychology, 6, article 328. https://doi.org/10.3389/fpsyg.2015.00328

Stokes, K. (2016). Attention capture: Stimulus, group, individual, and moment-to-moment factors contributing to distraction (Doctoral dissertation). Retrieved from http://hdl.handle.net/10464/9306

Toplak, M. E., West, R. F., & Stanovich, K. E. (2013). Practitioner Review: Do performance-based measures and ratings of executive function assess the same construct? Journal of Child Psychology and Psychiatry, 54, 131-143. https://doi.org/10.1111/jcpp.12001

Uncapher, M. R., Thieu, M. K., & Wagner, A. D. (2016). Media multitasking and memory: Differences in working memory and long-term memory. Psychonomic Bulletin & Review, 23, 483-490.

Uncapher, M. R., Lin, L., Rosen, L. D., Kirkorian, H. L., Baron, N. S., Bailey, K., . . . Wagner, A. D. (2017). Media multitasking and cognitive, psychological, neural, and learning differences. Pediatrics, 140(Suppl. 2), S62-S66. https://doi.org/10.1542/peds.2016-1758D

Uncapher, M. R., & Wagner, A. D. (2018). Minds and brains of media multitaskers: Current findings and future directions. Proceedings of the National Academy of Sciences, 115, 9889-9896. https://doi.org/10.1073/pnas.1611612115

van der Schuur, W. A., Baumgartner, S. E., Sumter, S. R., & Valkenburg, P. M. (2015). The consequences of media multitasking for youth: A review. Computers in Human Behavior, 53, 204-215. https://doi.org/10.1016/j.chb.2015.06.035

Wiradhany, W., & Nieuwenstein, M. (2017). Cognitive control in media multitaskers: Two replication studies and a meta-analysis. Attention, Perception, & Psychophysics, 79, 2620-2641. https://doi.org/10.3758/s13414-017-1408-4

Witt, B., & Ward, L. (1965). IBM Operating system/360 concepts and facilities. New York, NY: International Business Machines Corporation.

Yap, J. Y., & Lim, S. W. H. (2013). Media multitasking predicts unitary versus splitting visual focal attention. Journal of Cognitive Psychology, 25, 889-902. https://doi.org/10.1080/20445911.2013.835315

Zhang, W., & Zhang, L. (2012). Explicating multitasking with computers: Gratifications and situations. Computers in Human Behavior, 28, 1883-1891. https://doi.org/10.1016/j.chb.2012.05.006

Editorial Record:

First submission received: September 13, 2018

Revisions received: February 8, 2019

Accepted for publication: May 24, 2019

Editor in charge: Michel Walrave

Introduction

As digital media continue to permeate all dimensions of our lives, a growing number of research studies into the effects of chronic media use are being reported (e.g., Baumgartner, Lemmens, Weeda, & Huizinga, 2017; Baumgartner & Sumter, 2017; Cain & Mitroff, 2011; Jeong & Hwang, 2012; le Roux & Parry, 2017b). An important subset of these studies investigates the effects of chronic media multitasking on attentional control (e.g., Cain & Mitroff, 2011; Ophir, Nass, & Wagner, 2009; Ralph & Smilek, 2017; Ralph, Thomson, Seli, Carriere, & Smilek, 2015; Uncapher, Thieu, & Wagner, 2016; Yap & Lim, 2013). Media multitasking describes a form of behaviour during which a person simultaneously performs two or more activities, at least one of which involves the use of media (Lang & Chrzan, 2015). Mobile digital devices and ubiquitous connectivity to an ever-greater range of infotainment platforms have normalised high levels of media multitasking, particularly among younger generations (van der Schuur, Baumgartner, Sumter, & Valkenburg, 2015). While there remains a lack of definitive evidence, studies suggest that this form of behaviour may be detrimental to attention control functions (Cain & Mitroff, 2011; Ophir et al., 2009).

In an early study Ophir et al. (2009) demonstrated that high media multitaskers (HMMs) show a reduced ability to inhibit the processing of irrelevant stimuli during tasks, i.e., reduced inhibitory control. While a large body of empirical evidence has since been collected and analysed to further understand associations between high levels of media multitasking and aspects of attentional control, clear evidence of either negative or positive effects remains to be demonstrated. As Uncapher and Wagner (2018, p. 9894) note, “the literature demonstrates areas of both convergence and divergence”. Wiradhany and Nieuwenstein (2017), for instance, demonstrate through a limited meta-analysis of performance-based assays that evidence for a negative association between high levels of media multitasking and aspects of cognitive control is particularly weak. In contrast, while acknowledging the existence of null associations and methodological differences, Uncapher et al. (2017, p. 63) postulate that across the full evidence base higher levels of media multitasking are associated with reduced performance in a number of cognitive domains. This interpretation is supported by Uncapher and Wagner (2018, p. 9889) who, following a review of extant evidence, indicate that, relative to low media multitaskers (LMMs), HMMs are more likely to experience attentional lapses. These authors do, however, acknowledge that, despite a decade of enquiry, the nature and causes of associations between media multitasking and attention remain open questions.

One interpretation of this finding posits that chronic media multitasking over long periods of time may produce deficits in attention control ability by normalising continuous, rapid switching between multiple streams of stimuli and atrophying sustained attention ability, a deficit-producing hypothesis (Ophir et al., 2009; Ralph, Thomson, Cheyne, & Smilek, 2014). An alternative interpretation is that HMMs, by virtue of trait-level attributes, approach and perform tasks with a broader attentional distribution which makes them more susceptible to distraction by irrelevant stimuli in their environments, an individual differences hypothesis (Ralph et al., 2015).

To measure attentional control researchers have relied primarily on two techniques: the measurement of performance in lab-based tasks designed to test particular cognitive functions (e.g., working memory, inhibition and cognitive flexibility), and the use of self-reports through a range of survey instruments. In a number of meta-level studies the findings produced by these techniques have been compared (see van der Schuur et al., 2015, for example). Overall, the observed negative relationship between media multitasking and inhibitory control has been stronger when self-report measures were utilised. This has prompted debate about which approach is more appropriate and valid (Lin, 2009; Ralph et al., 2014).

In this study we extend knowledge in this domain by investigating the relationship between media multitasking and attention distribution. Two key objectives guided the research design. The first objective was to investigate differences in attention distribution strategies between HMMs and LMMs during task performance in an ecologically valid setting. This implied that our subjects had to perform a designed task in a setting and time of their own choosing, exposed to the range of environmental factors that characterise the context in which they normally perform tasks. Moreover, we wanted to avoid creating the perception among subjects that they were being tested or measured. Our second objective was to test the effect on task performance of meaningful, non-homogeneous distracting stimuli. In particular, we aimed to establish whether different forms of distracting stimuli would have different impacts on the manner in which HMMs and LMMs distribute their attention during tasks.

To achieve these objectives we designed a simple two-dimensional (2D) game that was played as part of a web-based survey, enabling us to manipulate the distracting stimuli which appeared during gameplay. By combining media multitasking data (collected as part of the survey) with game performance data, we endeavoured to address the following primary research question: Are there differences in the attentional strategies of HMMs and LMMs, based on their game performance data, under different conditions of distraction?

Because our measurement technique is novel, the study is exploratory and descriptive in nature. Rather than stating hypotheses, we formulated four secondary research questions to guide our analysis. These relate to the range of variables that were recorded during gameplay and are presented after these variables are described in Section 3. A total of 1 063 university students completed the survey and played the game. Our findings suggest LMMs are more selective than HMMs when distributing their attention to distracting stimuli during task execution, enabling them to perform better under particular conditions.

To provide background to the present study we briefly consider previous research concerning the nature and prevalence of media multitasking. Extending from this, we consider relationships between chronic media multitasking and cognitive control, with specific emphasis on studies considering patterns of attentional distribution.

What Is High or Chronic Media Multitasking?

Multitasking, a term first used to describe a capability of computing machines (Witt & Ward, 1965), refers to the simultaneous performance of two or more tasks (Benbunan-Fich, Adler, & Mavlanova, 2011). More recently, the term has been adopted to describe the “human attempt to do simultaneously as many things as possible, as quickly as possible” (Rosen, 2008, p. 105). In this context, two variations in the interpretation of the term are apparent. The first frames multitasking as parallel tasking, implying concurrent processing (Benbunan-Fich et al., 2011); while the second frames it as sequential task-switching, implying rapid switching between multiple tasks (Dzubak, 2008). To consolidate these interpretations, Salvucci, Taatgen, and Borst (2009) developed a continuum along which multitasking is described by the amount of time spent on a task before switching to another.

While multitasking by humans is often perceived as parallel tasking, Gazzaley and Rosen (2016, p. 77) argue that, at a neural level, the human brain is incapable of this feat. Instead, the brain enables the impression of parallel tasking by dynamically and rapidly switching between multiple neural networks during the execution of multiple tasks (Clapp, Rubens, & Gazzaley, 2010). Importantly, however, such switching implies performance-costs which manifest as reductions in speed and accuracy on primary tasks (Monsell, 2003; Oulasvirta & Saariluoma, 2004; Pashler, 1994; Rubinstein, Meyer, & Evans, 2001).

Media multitasking, as a special case of multitasking, is generally defined as either the simultaneous use of multiple media (e.g., browsing the Internet while watching television) (Ophir et al., 2009), or as media use while conducting one or more non-media activities (e.g., sending a text-message while driving) (Jeong & Hwang, 2012). Zhang and Zhang (2012, p. 1883) consolidate these two definitions by describing media multitasking as “engaging in one medium along with other media or non-media activities”. Lang and Chrzan (2015, p. 100), accordingly, describe it as the act of “performing two or more tasks simultaneously, one of which involves media use”.

In line with previous studies, we use the phrase chronic media multitasking to describe frequent and habitual media multitasking by individuals (Ophir et al., 2009; Uncapher et al., 2016; Yap & Lim, 2013). Such individuals typically own one or more mobile computing devices and subscribe to multiple infotainment or communication services/platforms. Additionally, they often use mobile computing devices while performing other activities (Deng et al., 2019; Moreno et al., 2012; Parry & le Roux, 2018a). Kirschner and De Bruyckere (2017) argue that one should not assume that chronic media multitasking implies an aptitude for technology or an improved multitasking ability. They posit that, despite creating an illusion of effective multitasking, such individuals still experience task performance costs.

Media multitasking behaviour is reflective of the parallel information processing strategies which are developed to cope with the increasingly vast amount of informational stimuli in our environments (Carr, 2010; Ophir et al., 2009). Deuze (2012, p. 10) emphasises the increasingly important role of media in shaping these environments by stating that media have become to us as water is to fish. A byproduct of such media saturation, Dewan (2014) argues, is a culture of distraction in which an individual’s attention is constantly competed for. This, in turn, becomes the wellspring for the development of behavioural coping strategies which enable navigation through continuous experiences of distraction and goal-conflict. Underlying and dictating these behavioural efforts are individuals’ attentional distribution strategies and the manner in which they filter stimuli in relation to their goals.

How Prevalent Is Chronic Media Multitasking Among Students?

A large and diverse body of evidence provides support, firstly, for the observation that chronic media multitasking is a defining characteristic of the millennial generation (individuals born between 1981 and 1996) (Foehr, 2006; Jeong & Fishbein, 2007; le Roux & Parry, 2017b; Moreno et al., 2012; van der Schuur et al., 2015) and, secondly, for the observation that it is particularly prevalent among the current cohort of university students. In addition to being exposed to digital technology from childhood, university students typically have the financial means to procure mobile computing devices coupled with ubiquitous access to Internet connectivity (Jeong & Fishbein, 2007; le Roux & Parry, 2017b; Parry & le Roux, 2018b; Zhang & Zhang, 2012). The prevalence of chronic media multitasking among this population is well illustrated by studies indicating that it has become the norm for students to media multitask in academic settings like lectures and study sessions (Fried, 2008; Parry, 2017; Rouis, Limayem, & Salehi-Sangari, 2011; Zhang & Zhang, 2012). Parry and le Roux (2018b), for example, report that, during a 50-minute lecture, students engage in an average of over 15 media use instances, most of which are unrelated to the lecture content. Similarly, Moreno et al. (2012) found that, over the course of a day, 56.5% of students’ media use involves multitasking of some sort. Such behaviour typically involves over 100 switches between different media-related activities (Deng et al., 2019).

Are There Links Between Chronic Media Multitasking and Cognitive Control?

Cognitive control describes individuals’ ability to select and maintain thoughts and actions that represent internal goals and means to achieve these goals (Miller & Cohen, 2001). Three core executive functions combine to enable cognitive control — working memory, cognitive flexibility or shifting, and inhibition (Miyake et al., 2000). These functions can be isolated and measured through a range of standardised tests involving computer-based tasks conducted in laboratory environments. Alternatively, researchers have adopted self-report instruments to measure “conscious experience of one’s attentional functioning in everyday life” (Ralph et al., 2014, p. 668).

A number of studies conducted over the past decade have reported links between chronic media multitasking and diminished cognitive control (Cain & Mitroff, 2011; Ophir et al., 2009; Ralph et al., 2014; Uncapher et al., 2016; van der Schuur et al., 2015). These findings suggest, in particular, that chronic media multitasking is associated with bottom-up attentional control, that is, the tendency to let one’s environment dictate one’s attentional focus. This makes individuals more exposed to environmental distractions when they attend to stimuli that are irrelevant to their goals (Cain & Mitroff, 2011). Van der Schuur et al. (2015, p. 208), based on a review of 43 studies, conclude that results are mixed and that, given current evidence, chronic media multitasking is “unrelated to performance-based measures of working memory capacity, task switching, and response inhibition”. However, they report that a more consistent pattern of negative association is observable in studies adopting self-report measures. This pattern supports the “scattered attention” or “breadth-biased” hypothesis, which holds that chronic media multitaskers do not filter irrelevant stimuli in their environments effectively (Ophir et al., 2009; van der Schuur et al., 2015).

An important question which follows from these findings concerns the possibility that the observed association is causal in nature. This would imply that chronic media multitasking induces, over time, cognitive control deficits. Underlying this line of reasoning is the principle that “constant repetition of a behaviour changes the way we process information” (Dux et al., 2009). There remains, however, a lack of definitive evidence to support this proposition. Ralph et al. (2014), accordingly, warn that the observed associations may be explained by differences at trait-level, rather than repeated behaviour. This line of reasoning suggests that media multitaskers, through their broad attentional focus allow themselves to “respond to stimuli outside the realm of their immediate task” (Ophir et al., 2009, p. 15585). Such a breadth-bias attentional strategy not only explains the dependent variable (i.e., perceived cognitive control deficits), but also the independent variable (i.e., a propensity for media multitasking).

The observed differences between findings from studies adopting performance-based and self-report measures have prompted investigations about the degree to which the two approaches assess the same construct. Toplak, West, and Stanovich (2013), following a review of 20 studies testing the level of association between these measures, conclude that they measure different aspects of executive function. Performance-based measures provide an indication of processing efficiency of a given executive function in an isolated context. This typically involves highly structured settings (i.e., laboratories) and specific directions from examiners. Self-report measures, on the other hand, provide an indication of everyday, behavioural aspects of cognitive control and the extent to which goals are accomplished in complex real-world situations (Toplak et al., 2013, p. 140). Ralph et al. (2014, p. 667), accordingly, argue that self-reports are indicative of “individuals’ subjective awareness of their attentional experiences in everyday life”. Differences in findings across studies that utilised self-reports and those that utilised performance-based indicators have triggered debate about which approach is more appropriate in research of media multitasking effects. Barkley and Fischer (2011), for instance, suggest that self-report measures offer a greater level of ecological validity, with more accurate predictions of real-world cognitive impairments in the course of everyday activities. Snyder, Miyake, and Hankin (2015), however, warn that they are subject to interpretational challenges as a result of the interplay between contextual factors, and executive and nonexecutive processes. Yap and Lim (2013, p. 890) point out that, in contrast to controlled experimental settings where performance-based measures are typically elicited, the “real world requires the constant processing of rather complex scenes”. Despite the growing body of empirical evidence concerning media multitasking and cognitive control, the exact nature of the relationship between these constructs remains unclear.

An important aspect of attentional control in everyday life situations concerns the nature of distracting stimuli. Stokes (2016, p. 81) argues that “long- and short-term experience with stimuli shapes their significance for attention such that highly salient stimuli associated with reward, fear, or past experience can capture attention even though they do not match current selection goals and are not otherwise physically salient”. Moreover, as behavioural studies have demonstrated, salient but task-irrelevant distractors typically attract the allocation of attention (see Corbetta & Shulman, 2002 for a review). It follows that the stimuli which present as distracting to an individual should be framed as a product of both the features of the stimuli and their subjective construction by the individual. Kätsyri, Kinnunen, Kusumoto, Oittinen, and Ravaja (2016), for instance, show that, during media multitasking, negative social media messages attract the allocation of attention more so than similar positive messages. While the authors found that the semantic nature of the distractors affected the allocation of attention during media multitasking, no effect on attentional performance was found, nor did they consider effects at differential levels of media multitasking.

Lab-based tasks involving distracting stimuli that do not carry particular meaning may indicate a general tendency to attend to irrelevant stimuli, but they are agnostic to the complexities of attention distribution in real-world environments where different forms of distracting stimuli are experienced as less or more salient by different individuals. Lottridge et al. (2015) consider associations between chronic media multitasking levels and analytical writing quality under conditions of either task-relevant or task-irrelevant distractors. Both forms of distractor affected performance. However, when the authors controlled for switches and time spent writing, the negative effect occurred as a function of media multitasking level. When exposed to task-relevant distractors HMMs’ performance was superior. When exposed to task-irrelevant distractors HMMs required more time and produced inferior outputs. LMMs, in contrast, inhibited attentional allocation to irrelevant distractors. The authors conclude that the effect of distractor relevance is greater for HMMs than it is for LMMs. Despite these findings and indications that attentional allocation is directed by motivational processes (Lang, Bradley, & Cuthbert, 1997), there is an absence of research explicitly considering effects associated with media multitasking under conditions of different distraction types.

Chronic Media Multitasking and Attentional Strategy

We use the term attentional strategy to describe an individual’s attention distribution during task performance. Hence, while attention distribution is associated with a particular point in time, attentional strategy describes attention distribution patterns over the course of task execution and is, furthermore, indicative of a general approach to task-execution (Ralph et al., 2015). It is posited that the attentional strategy to be adopted during a particular task (or tasks) is the product of a range of both subjective and situational factors. For example, characteristics of the tasks and the individual’s level of interest in or motivation for the task will determine the attentional strategy employed (Oken, Salinsky, & Elsas, 2006; Zhang & Zhang, 2012). We also acknowledge, however, that trait-level factors will influence general tendencies in attentional strategy adoption (Baumgartner & Sumter, 2017; Forster & Lavie, 2014; Ralph & Smilek, 2017). Some individuals tend to distribute attention broadly while others focus attention narrowly. Based on this conceptualisation and the empirical evidence reported in literature, one may argue that chronic media multitasking is associated with the tendency to voluntarily distribute attention between multiple streams of stimuli (Cain & Mitroff, 2011; Ophir et al., 2009).

In a number of studies researchers have investigated attentional strategy differences between individuals based on subjective factors. Particular emphasis has fallen on differences between Type A vs Type B behavioural patterns (Matthews & Brunson, 1979). Findings suggest that Type A individuals tend to focus more intensely on primary tasks than Type B individuals (De la Casa, Gordillo, Mejías, Rengel, & Romero, 1998; Ishizaka, Marshall, & Conte, 2001; Moch, 1984). In addition, De la Casa et al. (1998) found that Type A individuals tend to pay attention to stimuli even when not instructed to do so in an attempt to obtain as much information as possible for future use.

A limited number of studies have considered media multitasking as a predictor or producer of attentional strategy. Yap and Lim (2013) investigated individuals’ ability to deploy multiple visual “attentional foci in noncontiguous locations” (i.e., split their visual focal attention). Whether the human brain is capable of this feat remains a point of debate (Yap & Lim, 2013), but Jans, Peters and De Weerd (2010) suggest that the ability may be acquirable through training. During experiments conducted by Yap and Lim (2013), it was found that “individuals who tend to consume multiple visual media forms simultaneously” (i.e., high media multitaskers) employed a “split mode of attention when presented with cued non-contiguous locations, whereas those who tend to consume fewer visual media forms simultaneously ... employed a unitary mode of attention when presented with these same locations” (Yap & Lim, 2013, p. 899). Noting that, in general there do not appear to be differences in the ability to control attentional allocation across individual levels of media multitasking, Ralph et al. (2015) suggest that task-approach or attentional strategies may account for empirical evidence of performance differences for HMMs. Moreover, the researchers propose that such differences may not appear under controlled laboratory settings where any implicit motivations to adopt a particular strategy are lacking. Studies of media multitasking among university students (Lawson & Henderson, 2015; le Roux & Parry, 2017a; Leysens, le Roux, & Parry, 2016; Ravizza, Hambrick, & Fenn, 2014; Rouis et al., 2011) suggest that better performing students regulate their in-lecture media use more effectively. This suggests that they adopt attentional strategies characterised by narrow distribution to avoid the distractions of media while conducting academic tasks. 
However, le Roux and Parry (2017a), based on a survey of undergraduate students (N = 1678), found that a negative association between in-lecture media use and academic performance is only observable for students in the Arts and Social Sciences and not in other subject areas. The finding prompted them to question whether the abstract, non-linear thinking styles generally associated with students in this domain can be associated with broad attention distribution and, as a result, a tendency to attend to off-task stimuli, like social media, during academic tasks.

Methods

To extend understanding of the interaction between media-multitasking behaviour and attentional strategy, we investigated these constructs through a web-based survey involving a simple, two-dimensional (2D) game. Three key aims guided our design of the study.

Firstly, while we wanted to obtain measures through task performance, we did not want to perform the study in a lab environment where participants would perceive that they were being observed or measured. Our aim, rather, was to have participants perform a task in a real-world environment where they would be exposed to the range of distracting stimuli that are usually present. To achieve this we developed the task (2D game) as a part of a web-based survey, to be completed voluntarily by participants at a time and place of their own choosing. While we acknowledge that the survey and task do have a degree of agency in determining participants’ behaviour and attentional distribution, we argue that this agency is substantially less influential than would be the case in standardised tests performed in a formal lab setting.

Secondly, in addition to investigating the manner in which attention distribution differed between high and low media multitaskers, we also wanted to explore the manner in which different types of distractors influence attentional strategies. In particular, we wanted to investigate how more or less interesting or compelling/attractive stimuli impact attentional distribution during task performance in combination with media multitasking level.

Finally, we tested participants’ recall of distracting stimuli after completing the task to investigate the impact of media multitasking level and distractor type on recall ability.

To address these aims we designed a survey which included a two-dimensional computer-based game. The game was developed in JavaScript and could be played, as part of the survey, within a web browser.

Ethical clearance and institutional permission to perform the study were obtained from the researchers’ institution — a large, residential university in South Africa. Requests to complete the survey were sent to just under 20 000 undergraduate students at the university. The request outlined the objectives of the study and informed invitees that their participation would be both anonymous and voluntary. The survey was available online for two weeks.

Instruments and Measurement

Media multitasking. Media multitasking was measured using Baumgartner et al. (2017)’s shortened version of the instrument proposed by Ophir et al. (2009), the Media Multitasking Index (MMI). The daily media use frequency for five primary media was elicited using a Likert scale ranging from 0 (not at all) to 5 (3 hours or more). The media included Watching TV; Sending messages via phone or computer (text messages, WhatsApp, Instant messages etc.); Using social network sites (e.g., Facebook, Twitter, Instagram etc.); Watching series, movies or YouTube on the computer; and Other computer activities (e.g., e-mail, surfing the web, Photoshop etc.). Thereafter, respondents were asked to indicate how often they used these media simultaneously. For each medium four questions were asked, one for every other medium. Respondents indicated how often they multitasked with each media pair using a four-point Likert scale ranging from never to very often. In accordance with Ophir et al. (2009)’s method, the values were recoded as follows: Never=0; Sometimes=0.33; Often=0.67; Very often=1.
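The scoring described above can be illustrated with a minimal sketch. It assumes the weighted-sum form of the index proposed by Ophir et al. (2009), in which the recoded co-use values for each primary medium are summed and weighted by the use of that medium. Using the 0 to 5 Likert response directly as the weight is an assumption made for illustration; the text does not spell out the exact formula used with the shortened instrument. The function name, medium labels and respondent values are hypothetical.

```python
# Recoding of the co-use responses, as stated in the text.
RECODE = {"never": 0.0, "sometimes": 0.33, "often": 0.67, "very often": 1.0}


def media_multitasking_index(use_frequency, co_use):
    """Return a weighted MMI for one respondent.

    use_frequency: medium -> Likert response (0 to 5) for daily use.
    co_use: medium -> four co-use responses, one per other medium.
    ASSUMPTION: the Likert response is used directly as the weight.
    """
    total_use = sum(use_frequency.values())
    if total_use == 0:
        return 0.0  # no media use reported, so there is nothing to weight
    weighted = 0.0
    for medium, frequency in use_frequency.items():
        media_used_alongside = sum(RECODE[r] for r in co_use[medium])
        weighted += media_used_alongside * frequency
    return weighted / total_use


# Hypothetical respondent (invented values, not study data).
use_frequency = {"tv": 1, "messaging": 4, "social": 3, "video": 2, "other": 2}
co_use = {
    "tv": ["often", "sometimes", "never", "never"],
    "messaging": ["sometimes", "very often", "often", "sometimes"],
    "social": ["sometimes", "very often", "sometimes", "never"],
    "video": ["never", "often", "sometimes", "never"],
    "other": ["never", "often", "sometimes", "sometimes"],
}
print(round(media_multitasking_index(use_frequency, co_use), 2))
```

Under this form the index ranges from 0 (never multitasks) to 4 (very often uses all four other media alongside every primary medium).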

Attention distribution. Attention distribution was measured using a simple 2D game. During the game the respondent had to keep a ball from falling to the bottom by moving a paddle from side to side within a 480x320 pixel window. A screen capture of the game is presented in Figure 1 and the source code for this game is available through the Open Science Framework (https://osf.io/auhvx/?view_only=a5d89e8f99474655bf98ce29b691a569). The ball bounced off the sides and top of the window, and upwards from the paddle if it was successfully positioned under the ball as it fell towards the bottom. Respondents were given an opportunity to practise the game and become familiar with the movement of the paddle. When the actual game started the respondent was given three “lives” (i.e., three chances to drop the ball and continue playing). At the start of each life the ball speed was reset, making it move slowly. However, as time passed the speed was increased incrementally, making it more difficult to keep the ball from falling on the floor. The duration of each of the three lives was recorded as a separate variable (i.e., life1, life2, life3).

Figure 1. A screen capture of the 2D game as displayed within the browser window.

During gameplay a banner was displayed on the right-hand side of the game window. The banner was 220x320 pixels and remained unchanged throughout the game. Respondents were shown one of two different banners. The first (bank banner) displayed the logo of a prominent South African bank which would have been familiar to most (if not all) respondents. Under the bank’s logo appeared general marketing text for the bank (62 words). The second banner (Facebook banner) contained the feed of a popular Facebook group used by and well-known to the students at the institution. The group allows students to post anonymous confessions. Because these confessions are sometimes explicit in nature, a screenshot of a non-explicit confession was used (64 words in length) as opposed to a live feed. This upheld ethical restrictions by ensuring that participants would not be offended by explicit language or graphics. In addition to the text of the confession, the banner displayed the name and information of the group to create the appearance of a live feed. The text used in the two banners was the same size. No instructions regarding the banners were provided before or during gameplay. After gameplay two multiple-choice recall questions about the banners and their content were asked. The first question asked respondents to indicate which bank or Facebook group the relevant banner represented. The second asked respondents to recall a particular fact from the text on the relevant banner. These questions, therefore, required a level of semantic processing of the content of the two banners. In total, eight variables about the game were recorded for each respondent: the input device used (mouse or touchpad); the duration, in seconds, of lives 1 to 3; overall game performance calculated as the sum of lives 1 to 3; the banner shown (bank banner or Facebook banner); and the two recall questions (correct or incorrect for each).

Pilot Test of the 2D Game

To test the 2D game we conducted a pilot study. We invited eight postgraduate students to play the game (one by one) in a lab environment while we observed them. After completion of the game we asked the students about their experience of the difficulty of the game, whether they paid attention to the banners and if they were able to recall the information on the banners. Their feedback indicated that the ball initially moved at a slow enough speed to enable reading of the text in the banner without risk of dropping the ball in the game. We proceeded by adjusting the initial ball speed to be faster to ensure that shifting attention to the banner would have an impact on game performance.

Research Questions

Because our method and instrument for eliciting attention distribution strategies was novel, we did not define hypotheses. We opted, rather, to formulate a single primary and four secondary research questions (RQs):

Primary RQ: Are there differences in game performance between HMMs and LMMs under different conditions of distraction?

Secondary RQ 1: Did media multitasking level impact game performance?

Secondary RQ 2: Did distractor type impact game performance?

Secondary RQ 3: Did the interaction between media multitasking level and distractor type impact game performance?

Secondary RQ 4: How did media multitasking level and distractor type impact distractor recall?

Results

Sample Composition

1 127 respondents (49.4% female) with a mean age of 21.28 years commenced the survey. Of these responses, 25 did not contain valid values for the variables associated with gameplay. The game was not playable on smartphones or tablets and, while the invitation to complete the survey did state this, it is suspected that some respondents did not read these instructions. These 25 responses were removed from the dataset. To limit the study to our targeted demographic we removed all respondents younger than 18 and older than 29 at the time of data collection from the dataset (18 respondents, 1.6%). We also removed all respondents who used a navigation tool other than a mouse or touchpad (21 respondents, 1.9%). The remaining sample (N = 1063) included 533 females, 524 males and 6 respondents who identified with other gender descriptors, with an overall mean age of 20.96 years (SD = 1.79).

Data Preparation

The mean MMI for the sample was 1.67 (SD = 0.78). In accordance with Ophir et al. (2009) respondents were classified as high media multitaskers (HMMs; n = 266) if their MMI score was higher than one standard deviation above the mean (2.45), and low media multitaskers (LMMs; n = 266) when it was lower than one standard deviation below the mean (0.89). The rest of the sample were classified as average media multitaskers (AMMs) (n = 531). HMMs had a mean MMI of 2.73 (SD = 0.47), with AMMs on 1.58 (SD = 0.29) and LMMs on 0.76 (SD = 0.28).
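The classification rule can be written out as a short sketch. The cut-offs follow from the reported sample mean and standard deviation; the function names are hypothetical, and treating scores that fall exactly on a cut-off as average is an assumption, since the text only specifies "higher than" and "lower than".

```python
def mmi_cutoffs(sample_mean, sample_sd):
    """Return (low, high) cut-offs at one standard deviation from the mean."""
    return sample_mean - sample_sd, sample_mean + sample_sd


def classify_mmi(score, low, high):
    """Label a respondent as LMM, AMM or HMM from their MMI score.

    ASSUMPTION: a score exactly on a cut-off is treated as average (AMM).
    """
    if score > high:
        return "HMM"
    if score < low:
        return "LMM"
    return "AMM"


# Reported sample values: mean MMI 1.67, SD 0.78.
low, high = mmi_cutoffs(1.67, 0.78)
print(round(low, 2), round(high, 2))  # cut-offs of 0.89 and 2.45

# The reported group means fall into their own categories, as expected.
for group_mean in (2.73, 1.58, 0.76):
    print(group_mean, classify_mmi(group_mean, low, high))
```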

To investigate the effect of input device on game performance, we calculated the mean overall game performance (sum of three lives) for those who used a mouse and those who used a touchpad. The 603 respondents who used a mouse had a mean score of 78.75 seconds (SD = 30.1), while the 460 who used a touchpad had a mean score of 62.20 seconds (SD = 25.6). An independent-samples t-test confirmed the statistical significance of this difference (t(1048.70) = 9.69, p < 0.01, d = 0.55). To standardise the scores we calculated the mean difference between scores for the different input devices for each of the three lives (life 1: 4.13, life 2: 5.62, life 3: 6.80), and added this difference to the scores for each life for those who used a touchpad. This ensured that the mean scores for each of the three lives were equal between users of the different input devices. The final performance scores were approximately normally distributed, with a moderate (but acceptable) skew to the right (skewness = 0.62, kurtosis = 1.12).
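The adjustment for input device amounts to a per-life mean shift. The sketch below illustrates the idea on invented toy data; the record layout and function name are hypothetical, and only the variable names life1 to life3 come from the text. In the study the corresponding offsets were 4.13, 5.62 and 6.80 seconds.

```python
from statistics import mean

LIVES = ("life1", "life2", "life3")


def standardise_by_device(rows):
    """Shift touchpad scores so per-life means match the mouse group.

    rows: list of dicts with a 'device' key ('mouse' or 'touchpad')
    and one duration in seconds for each of life1, life2 and life3.
    Returns new dicts with adjusted lives and a 'total' key.
    """
    adjusted = [dict(row) for row in rows]  # leave the input untouched
    for life in LIVES:
        mouse_mean = mean(r[life] for r in rows if r["device"] == "mouse")
        touch_mean = mean(r[life] for r in rows if r["device"] == "touchpad")
        offset = mouse_mean - touch_mean
        for row in adjusted:
            if row["device"] == "touchpad":
                row[life] += offset
    for row in adjusted:
        row["total"] = sum(row[life] for life in LIVES)
    return adjusted


# Invented toy data, not study data.
rows = [
    {"device": "mouse", "life1": 20, "life2": 30, "life3": 30},
    {"device": "mouse", "life1": 24, "life2": 26, "life3": 34},
    {"device": "touchpad", "life1": 16, "life2": 22, "life3": 24},
    {"device": "touchpad", "life1": 18, "life2": 24, "life3": 26},
]
for row in standardise_by_device(rows):
    print(row)
```

A constant shift equalises the group means but leaves the spread within each device group unchanged, which is consistent with the different standard deviations reported for mouse and touchpad users.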

Secondary Research Questions Addressed

Did media multitasking level impact game performance? Across the full sample (N = 1 063) the mean game performance, calculated as the sum of all three lives, was 78.75 seconds (SD = 28.2). HMMs had a mean game performance of 77.4 seconds (SD = 27.7), while LMMs had a mean game performance of 79.7 seconds (SD = 28.6). An independent-samples t-test indicated that the observed difference was not statistically significant (t(526.42) = -0.95, p = 0.34). This suggests that media multitasking level was not a predictor of game performance in general. Additionally, a simple linear regression was performed to test if MMI predicted game performance for the full sample. The results indicated that this was not the case (R2 = 0.002, F(1,1061) = 1.80, p = 0.18), confirming the earlier outcome.

Table 1 provides the mean scores for the full sample, HMMs and LMMs in each of the three lives played and overall. As was expected, performance improved from life 1 to 3 as respondents became more skilled in the game. However, for both HMMs and LMMs there was substantial initial improvement (i.e., from life 1 to 2), but only minor improvement from life 2 to 3. The results of separate independent-samples t-tests performed for each of the three lives indicated that game performance did not differ significantly in life 1 (t(529.45) = -0.83, p = 0.41), life 2 (t(523.25) = -1.24, p = 0.22) or life 3 (t(524.72) = -0.02, p = 0.98) for HMMs and LMMs. Additionally, three separate linear regressions on the full sample (i.e., including AMMs) indicated that MMI did not predict performance in life 1 (R2 = 0.002, F(1,1061) = 1.90, p = 0.17), life 2 (R2 = 0.001, F(1,1061) = 0.93, p = 0.34) or life 3 (R2 = 0.000, F(1,1061) = 0.32, p = 0.57) of the game.

Table 1. Mean Performance in Each of the Three Lives Categorised by MMI Category.

| Group | N | Life 1, M (SD) | Life 2, M (SD) | Life 3, M (SD) | Total, M (SD) |
| --- | --- | --- | --- | --- | --- |
| Full sample | 1063 | 21.32 (11.8) | 28.17 (13.6) | 29.26 (15.0) | 78.8 (28.2) |
| LMMs | 266 | 21.3 (12.2) | 29.1 (14.7) | 29.4 (15.1) | 79.7 (28.6) |
| HMMs | 266 | 20.4 (11.8) | 27.6 (13.1) | 29.5 (13.6) | 77.4 (27.7) |

Did distractor type impact game performance? The respondents to whom the bank banner was displayed (n = 547) had a mean game performance of 80.00 seconds (SD = 29.0), while those to whom the Facebook banner was displayed (n = 516) had a mean game performance of 77.40 seconds (SD = 27.2). An independent-samples t-test indicated that this difference was not statistically significant (t(1061) = 1.52, p = 0.13). This suggests that the banner displayed did not have a significant impact on game performance across the full sample.

Table 2 provides the mean scores for the full sample, those who were shown the bank banner, and those who were shown the Facebook banner. Three separate independent-samples t-tests were performed for each of the three lives. The tests indicated that, during life 1, those who were shown the bank banner performed significantly better than those who were shown the Facebook banner (t(1061) = 2.16, p = 0.03, d = 0.13). While the effect size is negligible, it suggests that the Facebook banner presented as more distracting during the first life of gameplay. However, for both life 2 (t(1059.9) = 1.75, p = 0.08) and life 3 (t(1061) = -0.43, p = 0.67) the differences in performance were non-significant.

Table 2. Mean Performance in Each of the Three Lives Categorised by Distractor Type.

| Group | N | Life 1, M (SD) | Life 2, M (SD) | Life 3, M (SD) | Total, M (SD) |
| --- | --- | --- | --- | --- | --- |
| Full sample | 1063 | 21.32 (11.8) | 28.17 (13.6) | 29.26 (15.0) | 78.8 (28.2) |
| Bank banner | 547 | 22.1 (12.1) | 28.9 (14.2) | 29.1 (15.4) | 80.0 (29.0) |
| Facebook banner | 516 | 20.5 (11.4) | 27.4 (12.9) | 29.5 (14.5) | 77.4 (27.2) |

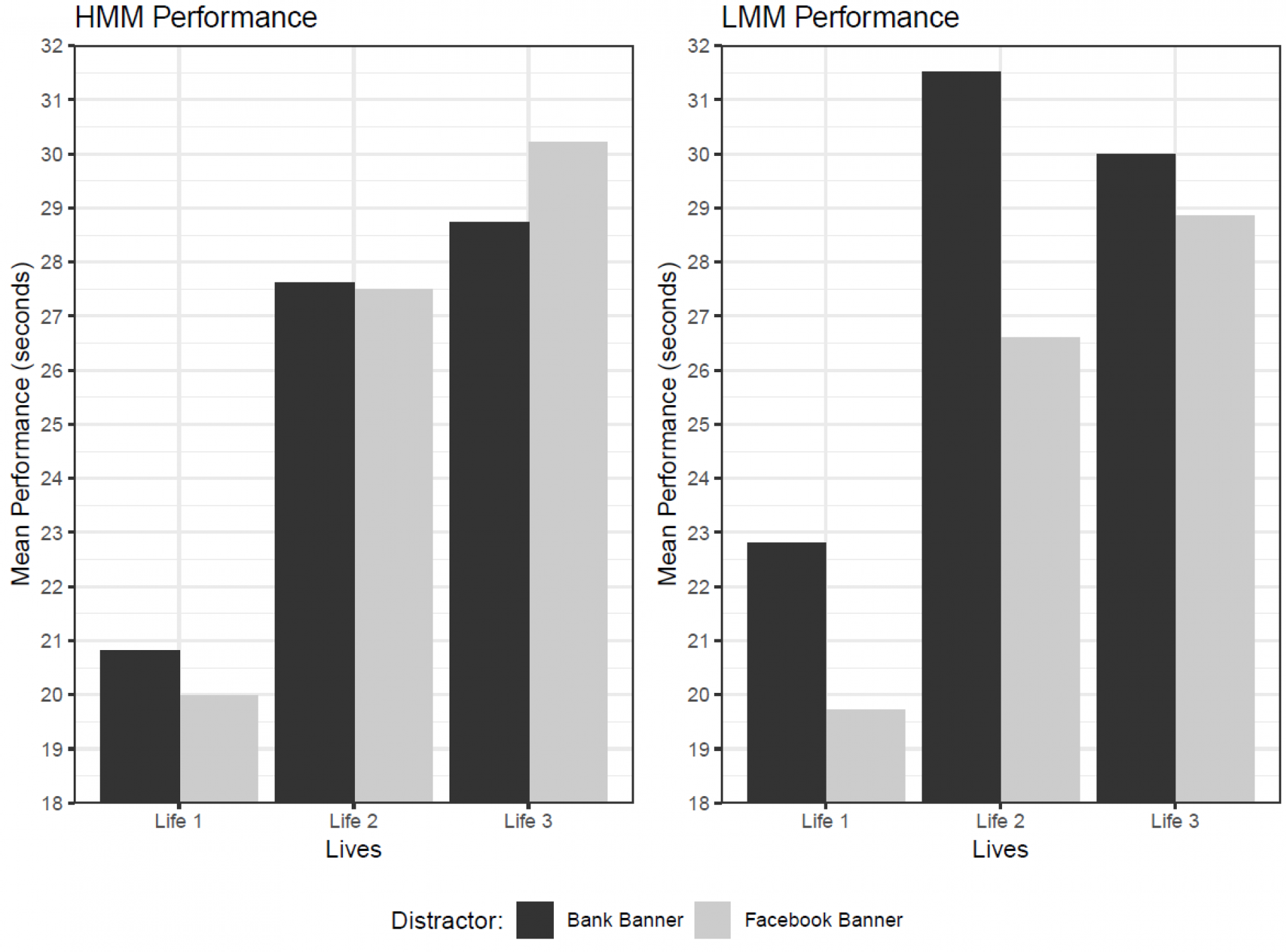

Did the interaction between media multitasking level and distractor type impact game performance? Table 3 displays the scores of HMMs and LMMs in each of the three lives for the two distractor categories. Independent samples t-tests indicated that HMMs’ overall performance was not significantly different based on the banner displayed (t(262.1) = -0.15, p = 0.88), nor did it differ significantly for life 1 (t(263.28) = 0.57, p = 0.57), life 2 (t(260.19) = 0.08, p = 0.93) or life 3 (t(263.04) = -0.90, p = 0.37) of the game. For LMMs, however, a different pattern is observable with performance being significantly better when the bank banner was displayed. This was true for overall performance (t(262.39) = 2.63, p < 0.01, d = 0.32), performance in life 1 (t(253.72) = 2.09, p = 0.04, d = 0.26) and performance in life 2 (t(258.97) = 2.75, p < 0.01, d = 0.34), with moderate effect sizes in each. However, performance was not impacted by distractor type in life 3 (t(263.79) = 0.62, p = 0.54, d = 0.08).

Table 3. Mean Performance in Each of the Three Lives Categorised by MMI Category and Banner Displayed.

| Group | N | Life 1, M (SD) | Life 2, M (SD) | Life 3, M (SD) | Total, M (SD) |
| --- | --- | --- | --- | --- | --- |
| HMMs Bank banner | 137 | 20.8 (11.8) | 27.6 (12.8) | 28.7 (14.4) | 77.2 (27.4) |
| HMMs Facebook banner | 129 | 20.0 (11.8) | 27.5 (13.6) | 30.2 (12.8) | 77.7 (28.1) |
| LMMs Bank banner | 133 | 22.8 (13.3) | 31.5 (15.6) | 30.0 (15.3) | 84.3 (29.4) |
| LMMs Facebook banner | 133 | 19.7 (10.8) | 26.6 (13.5) | 28.9 (14.9) | 75.2 (27.2) |

We also considered the differences between HMMs and LMMs to whom the different banners were displayed. The Facebook banner was displayed to 129 HMMs and 133 LMMs (n = 262). Independent-samples t-tests indicated that there was no difference in overall performance or in any of the three lives between the groups when the Facebook banner was displayed. However, differences in performance were observed between HMMs and LMMs to whom the bank banner was displayed (n = 270 LMMs and HMMs). LMMs (n = 133) performed significantly better than HMMs (n = 137) overall (t(265.37) = -2.07, p = 0.04, d = -0.25) and in life 2 (t(255.19) = -2.24, p = 0.03, d = -0.27). However, in life 1 (t(262.73) = -1.31, p = 0.19) and in life 3 (t(265.86) = -0.70, p = 0.48), performance did not differ significantly between the two groups. These differences are visualised in Figure 2.
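The fractional degrees of freedom reported for these comparisons suggest unequal-variance (Welch) t-tests. As a rough check, the sketch below recomputes one comparison from the rounded summary statistics in Table 3: LMMs' overall performance under the bank banner versus the Facebook banner. The function name is hypothetical, and computing Cohen's d from the pooled standard deviation is an assumption, as the text does not state which variant was used.

```python
import math


def welch_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Welch's t, Welch-Satterthwaite df and Cohen's d from summary stats.

    ASSUMPTION: Cohen's d uses the pooled standard deviation.
    """
    v1 = sd1 ** 2 / n1  # squared standard error of mean 1
    v2 = sd2 ** 2 / n2  # squared standard error of mean 2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    pooled_sd = math.sqrt(
        ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    )
    d = (m1 - m2) / pooled_sd
    return t, df, d


# LMMs, total performance: bank banner vs Facebook banner (Table 3).
t, df, d = welch_from_summary(84.3, 29.4, 133, 75.2, 27.2, 133)
print(f"t({df:.2f}) = {t:.2f}, d = {d:.2f}")
```

Because the table values are rounded to one decimal, the recomputed statistics should land close to, but not exactly on, the reported t(262.39) = 2.63, d = 0.32.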

How did media multitasking level and distractor type impact distractor recall? The bank banner was randomly displayed to 547 of the 1063 respondents during gameplay. 77% of these respondents correctly recalled the name of the bank, but only 43% recalled the information in the banner correctly. The Facebook banner was displayed to the other 516 respondents. 60% of them correctly recalled the name of the relevant Facebook page, while 62% correctly recalled the information in the post displayed on the banner. These results are displayed in Table 4.

Pearson’s chi-squared tests indicated that, for both the first (χ2(1, N = 1063) = 32.94, p < 0.001) and second (χ2(1, N = 1063) = 34.33, p < 0.001) recall questions, the accuracy rate was significantly related to the banner displayed for the full sample. Respondents recalled the name of the bank more accurately than the name of the Facebook group, but they recalled the content of the Facebook post more accurately than that of the bank’s marketing text.

The lower accuracy rate for the Facebook banner on the first recall question may have resulted from the fact that there are a number of similar Facebook pages that are popular among students at the institution. Unlike the bank banner which displayed the distinctive logo of the bank, the name of the Facebook group was less distinctive, and students may have had more difficulty recalling it as a result. Alternatively, it is possible that they quickly recognised it as a Facebook feed and proceeded to read the text before or without paying attention to the name of the group. The higher accuracy rate for the second recall question when the Facebook banner was displayed suggests that students read the confession displayed there attentively, while the marketing text that appeared on the bank banner received less attention.

Figure 2. Game performance of HMMs and LMMs for the two different banners.

Table 4. Recall Accuracy Categorised by MMI and Banner Displayed.

| Group | N | Recall 1 | Recall 2 |
| --- | --- | --- | --- |
| Full sample | 1063 | 69% | 53% |
| Full sample Bank banner | 547 | 77% | 43% |
| Full sample Facebook banner | 516 | 60% | 62% |
| HMMs | 266 | 68% | 46% |
| LMMs | 266 | 69% | 55% |
| HMMs Bank banner | 137 | 77% | 35% |
| HMMs Facebook banner | 129 | 59% | 57% |
| LMMs Bank banner | 133 | 78% | 47% |
| LMMs Facebook banner | 133 | 60% | 63% |

We now consider differences in recall accuracy between HMMs and LMMs. As shown in Table 4, 182 of the 266 HMMs (68%) correctly recalled the name of the bank or Facebook group for which the banner was displayed. However, only 123 (46%) correctly recalled the information within the banner. 69% of LMMs (184 respondents) correctly recalled the name of the bank or Facebook group for which the banner was displayed, while 55% of LMMs correctly recalled the information within the banners. To test the independence of MMI category and recall on each of the two questions, Pearson’s chi-squared tests were performed. The results indicated that MMI category and recall accuracy were not related for the first recall question (χ2(1, N = 532) = 0.09, p = 0.93). However, for the second recall question, accuracy was dependent on MMI category (χ2(1, N = 532) = 3.98, p < 0.05). This result suggests that, in general, LMMs were significantly better than HMMs at recalling the information contained in the banners.
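The test for the second recall question can be approximated from the reported counts. The sketch below computes Pearson's chi-squared statistic for a 2x2 table without a continuity correction. The HMM count (123 of 266) is taken from the text; the LMM count of 146 is an assumption inferred from the reported 55% of 266 and is not stated in the source. The function name is hypothetical.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-squared (no continuity correction) for [[a, b], [c, d]].

    Returns the statistic and its p-value for one degree of freedom.
    """
    n = a + b + c + d
    cells = (
        (a, a + b, a + c),
        (b, a + b, b + d),
        (c, c + d, a + c),
        (d, c + d, b + d),
    )
    chi2 = 0.0
    for observed, row_total, col_total in cells:
        expected = row_total * col_total / n
        chi2 += (observed - expected) ** 2 / expected
    # With df = 1 the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: HMMs, LMMs. Columns: correct, incorrect on recall question 2.
# ASSUMPTION: 146 correct LMMs, inferred from the reported 55% of 266.
chi2, p = pearson_chi2_2x2(123, 266 - 123, 146, 266 - 146)
print(f"chi2(1, N = 532) = {chi2:.2f}, p = {p:.3f}")
```

With these counts the statistic comes out at approximately 3.98, in line with the reported value, and the p-value falls just below 0.05.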

We now consider the differences in recall accuracy for HMMs and LMMs for each of the two banners independently. As shown in Table 4, the results for HMMs on the first recall question closely mirrored the results for the full sample (77% accuracy for the bank banner, 59% accuracy for the Facebook banner). The same pattern is observable for LMMs (78% accuracy for the bank banner, 60% accuracy for the Facebook banner). Pearson’s chi-squared tests indicated, firstly, that, for both groups, as with the full sample, the name of the bank was recalled more accurately than the name of the Facebook group (p < 0.01) and, secondly, that the content of the Facebook banner was recalled more accurately than that of the bank banner (p < 0.05).

When only considering those respondents for whom the bank banner was displayed, Pearson’s chi-squared tests indicated that there was no significant difference between HMMs and LMMs in their recall of the bank’s name. For the recall of the bank’s marketing text LMMs performed better (47% vs 35% for HMMs), but a Pearson’s chi-squared test indicated that, while substantial, this difference was not statistically significant (χ2(1, N = 270) = 3.28, p = 0.07). Similarly, when only considering those respondents for whom the Facebook banner was displayed, there were no significant differences in recall accuracy for either the first or second question.

Summary of Findings

Before discussing our findings, we briefly summarise key aspects thereof for clarity. The findings indicated that

- media multitasking level was not a predictor of game performance when considering the full sample and ignoring the type of banner displayed.

- the banner displayed was not a predictor of game performance when considering the full sample of respondents independent of media multitasking level.

- when the bank banner was displayed, LMMs performed significantly better in lives 1 and 2 of the game while HMMs’ performance was not influenced by the banner displayed.

- in general, LMMs recalled the information contained within the banners more accurately than HMMs.

Discussion

In this study we investigated individuals’ attention distribution during task performance in relation to, firstly, the frequency with which they multitask with media and, secondly, the nature of distracting stimuli presented to them while performing the task. To achieve this, we developed a 2D game that was played by 1 063 respondents within a web browser. In the discussion which follows we consider the implications of our findings in relation to, firstly, inhibitory control and, secondly, recall of distracting stimuli.

As described in preceding sections, we based the development of our 2D game on the premise that individuals with greater inhibitory control ability would ignore or pay less attention to the banners presented adjacent to the game and, as a result, perform better in gameplay. Some earlier research on media multitasking and inhibitory control has indicated that HMMs show reduced inhibitory control and are more likely than LMMs to pay attention to irrelevant stimuli (Ophir et al., 2009). Our data, however, suggest that, if one ignores the nature or type of the distracting stimuli presented, there is no difference in task performance between HMMs and LMMs. This finding seems to support Wiradhany and Nieuwenstein (2017), who question the association between media multitasking and performance in task-based assays of cognitive control.

However, when considering the two types of distractors displayed together with media multitasking level, a different pattern emerges. While HMMs’ performance in the game was not affected by the banner type, LMMs performed significantly better when the bank banner was displayed. This may suggest that LMMs paid more attention to the Facebook banner and less to the bank banner, while HMMs attended equally to the different banner types.

Three important deductions can be made on the basis of these findings. The first is that, when considering inhibitory control differences between HMMs and LMMs, our findings suggest that the nature of the distracting stimuli is significant. This implies that standardised lab tests which utilise homogeneous distracting stimuli that do not carry particular meaning may not provide researchers with an accurate indication of whether or not an individual attends to irrelevant stimuli during task performance, and what the impact of such attendance is on primary task performance.

The second is that while LMMs distinguished between the two banners and attended to one more than the other, the data suggest that HMMs attended equally to both banner types. Interestingly, HMMs actually performed better when the Facebook banner was displayed, though the difference was negligible. We argue, on the basis of this finding, that LMMs’ attentional strategy is characterised by a degree of pre-processing of task-irrelevant stimuli which informs decision making about the salience of the stimuli and, ultimately, the distribution of attention. HMMs, on the other hand, seem to attend to distracting stimuli independent of their perceived relevance or salience.

Thirdly, it is worth considering the implications of our findings for the notion of visual attention splitting. In the context of the game the ability to split visual attention would, arguably, enable the player to view the banner without necessarily incurring costs in terms of game performance. While the data do not provide definitive proof of attention switching between the game and the banner, the recall accuracy rates provide some indication of this. Two interpretations are possible in this regard. Firstly, because LMMs recalled the information within the banners (recall 2) more accurately than HMMs, it can be argued that they performed more semantic processing of the banners. Considering this in combination with the finding that LMMs either outperformed or matched HMMs in gameplay, it is reasonable to conclude that HMMs do not have superior multitasking or visual splitting abilities. The second possible interpretation is that the recall accuracy rates are indicative of working memory capacity rather than or in addition to the level of semantic processing undertaken. Such an interpretation would suggest that, while both groups may have undertaken equal levels of semantic processing of the distractors, LMMs’ superior working memory enabled better recall of the information. In both interpretations, however, the data suggest that the capacity to split visual attention is not produced by chronic media multitasking.

When considering the findings of this study in their totality, we are persuaded to argue that any observed differences in cognitive control between HMMs and LMMs should be interpreted in relation to differences in attentional strategy. This aligns with the individual differences hypothesis suggested by Ophir et al. (2009) and described by Ralph et al. (2015). Individual differences in media multitasking are indicative of general behavioural strategies and, extending from this, any observed differences in performance occur as a result of these strategies. Our study has shown that a key difference in attentional strategy between HMMs and LMMs is that LMMs are more selective when choosing to attend to stimuli that are irrelevant to the task they are performing. HMMs, by contrast, are less selective and tend to distribute their attention to such stimuli independent of their content. While we support a strategic interpretation, we are mindful of the possibility that, rather than choosing to attend to distractors, HMMs (and LMMs under certain conditions) may lack the ability to ignore them. Our data do not enable this distinction and, as such, we cannot reject this interpretation. To make such a distinction we envision that game performance would have to be incentivised to the extent that one can disregard lack of motivation to sustain attention on gameplay as a performance factor.

Our findings are not definitive, and the data collected in the present investigation do not address the issue of causality between chronic media multitasking and selective attention distribution. Rather, in describing differences in the attentional distributions of HMMs and LMMs in the context of a more ecologically valid setting, we provide further support for assertions that there exists an association between media multitasking behaviour and attentional control. In particular, the data indicate that there exists an association between non-selective attentional strategies and higher levels of media multitasking. Because they are more selective when distributing attention, LMMs may be perceived as employing narrow attention distribution in contrast to HMMs. Our study has shown, however, that LMMs also initially attend to irrelevant stimuli but employ stricter criteria when choosing whether or not to continue consuming such stimuli. While, as Ralph et al. (2015) suggest, these strategic differences may be causal in nature, they may also be explained by trait-level differences or individual differences in thresholds for engagement. The present data do not support a particular direction of causality, nor do they reject the possibility that chronic media multitasking may engender non-selective attentional strategies.

Limitations

The 2D game we used to measure attention distribution is a novel instrument and, as such, has not been tested, used or validated in other studies. Moreover, we chose the content contained within the banners displayed based on our knowledge of previous findings in this domain and our familiarity with the life-worlds of the respondents in our sample.

Consequently, we have a limited understanding of how changes in variables of the game (e.g., ball speed, window size, colour etc.) and the distractors (e.g., content, size, colour etc.) would influence observations. This implies that, while our findings indicate that HMMs and LMMs experience different performance costs under certain conditions, our study provides little insight into what those conditions might be. We suggest that future research investigate these conditions in greater detail.

Additionally, while the 2D game was implemented to create a more ecologically valid performance setting and task, it still holds many similarities with more traditional performance-based tasks. Both the 2D game and performance-based assessments of cognitive control require participants to sustain allocation of attention to the task requirements and inhibit task-irrelevant distractors. Consequently, outcomes across both task paradigms may not differ substantially. Moreover, while the banners were intended to present as salient distractors, the contents may not have attracted attentional allocation as intended. Furthermore, while the code for the 2D game has been made publicly available and the items in the MMI scale are in the public domain, exact replication of the present study is hindered by the nature of the banners used as distractors. In this case, the banners represented relevant stimuli to the sample in question. The same banners may not present as salient distractors for a different sample. To investigate this, future investigations should endeavour to further consider the relative salience of different online content presented as distractors or secondary-page elements.

Finally, it is necessary to acknowledge that differences in performance between HMMs and LMMs occurred during life 1 and life 2 and, by life 3, differences were no longer present. Consequently, it may be the case that, as participants became familiar with the task, differences between LMMs and HMMs disappeared. The methodology adopted in this study did not allow us to investigate whether differences only occur during novel tasks or situations, or whether they persist in more familiar situations. Interpretation of the results should therefore be limited to novel situations. This constraint on interpretation is particularly important because, as is evident from the prevalence of media multitasking, such behaviour occurs in many, and likely familiar or routine, situations where such differences may not exist. Future investigations should endeavour to isolate this aspect by providing participants with different tasks to do, running the same game for a longer duration or for more rounds, or returning to the game after an intermediate task.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright © 2019 Daniel B. le Roux, Douglas A. Parry