Measurement invariance of the Perceived online racism scale across age and gender

Vol. 12, No. 3 (2018)

The Perceived Online Racism Scale (PORS) is the first measure specifically developed to assess online racist interpersonal interactions and exposure to online racist content. To advance and strengthen the psychometric foundation of the PORS, the current study evaluated the measurement invariance of PORS across gender and age, two major demographic categories that can differentially affect how racism is perceived. Based on the framework of intersectionality, the salience and significance of social identities, such as gender and age, influence how racism is perceived with different meanings and interpretations. The current study examined data collected through an online survey from 946 racial/ethnic minority participants (59% women, mean age = 27.42) in the United States. Measurement invariance across gender (men and women) and age groups (ages 18 to 24, 25 to 39, and 40 to 64) was tested via comparison of a series of models with increasing constraints. Measurement invariance across configural, metric, and scalar models for age and gender was supported. Latent means were compared across gender and age groups. The results advance the psychometric property of the PORS as a general measure of online racism. Differences in the PORS scores reflect true differences among gender and age groups rather than response bias. Implications for future research are discussed.

Online racism; perceived online racism; measurement invariance; age; gender

Brian TaeHyuk Keum

Department of Counseling, Higher Education, and Special Education, University of Maryland, College Park

Brian TaeHyuk Keum, (M.A. Columbia University, 2014) is a Ph.D. Candidate at the Department of Counseling, Higher Education, and Special Education, College of Education, University of Maryland-College Park. His research interests include: (a) contemporary issues in discrimination and mental health correlates (e.g., online racism), (b) stigma reduction and promotion of psychological help-seeking, (c) social justice interest development in counselor training, and (d) measurement of multicultural psychological constructs.

Matthew J. Miller

Department of Counseling, Higher Education, and Special Education, University of Maryland, College Park

Matthew J. Miller, (Ph.D. Loyola University Chicago, 2005) is an Associate Professor and Co-Director at the Department of Counseling, Higher Education, and Special Education, College of Education, University of Maryland-College Park. His research interests include: (1) the cultural and racial experiences of racially diverse populations, (2) the psychology of social justice engagement, (3) the career development of diverse populations, and (4) the measurement of multicultural psychological constructs.

Ang, R. P., & Goh, D. H. (2010). Cyberbullying among adolescents: The role of affective and cognitive empathy, and gender. Child Psychiatry & Human Development, 41, 387-397. https://doi.org/10.1007/s10578-010-0176-3

Arnett, J. J. (2007). Emerging adulthood: What is it, and what is it good for? Child Development Perspectives, 1, 68-73. https://doi.org/10.1111/j.1750-8606.2007.00016.x

Bastos, J. L., Celeste, R. K., Faerstein, E., & Barros, A. J. (2010). Racial discrimination and health: a systematic review of scales with a focus on their psychometric properties. Social Science & Medicine, 70, 1091-1099. https://doi.org/10.1016/j.socscimed.2009.12.020

Beckman, L., Hagquist, C., & Hellström, L. (2013). Discrepant gender patterns for cyberbullying and traditional bullying–An analysis of Swedish adolescent data. Computers in Human Behavior, 29, 1896-1903. https://doi.org/10.1016/j.chb.2013.03.010

Brown, D. L., & Tylka, T. L. (2011). Racial discrimination and resilience in African American young adults: Examining racial socialization as a moderator. Journal of Black Psychology, 37, 259-285. https://doi.org/10.1177/0095798410390689

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 14, 464-504. https://doi.org/10.1080/10705510701301834

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 9, 233-255. https://dx.doi.org/10.1207/S15328007SEM0902_5

Cleland, J. (2014). Racism, football fans, and online message boards: How social media has added a new dimension to racist discourse in English football. Journal of Sport and Social Issues, 38, 415-431. https://doi.org/10.1177/0193723513499922

Cohen, J. (2001). Defining identification: A theoretical look at the identification of audiences with media characters. Mass Communication & Society, 4, 245-264. https://doi.org/10.1207/S15327825MCS0403_01

Crenshaw, K. (1989). Demarginalizing the intersection of race and sex: A black feminist critique of antidiscrimination doctrine, feminist theory and antiracist politics. In K. T. Bartlett & R. Kennedy (Eds.), Feminist legal theory: Readings in law and gender (pp. 139-167). New York: Taylor & Francis.

Daniels, J., & Lalone, N. (2012). Racism in video gaming: connecting extremist and mainstream expressions of white supremacy. In D. G. Embrick, J. T. Wright, & A. Luckas (Eds.), Social exclusion, power, and video game play: new research in digital media and technology (pp. 85-100). Plymouth: Lexington Books.

Else-Quest, N. M., & Hyde, J. S. (2016). Intersectionality in quantitative psychological research: I. Theoretical and epistemological issues. Psychology of Women Quarterly, 40, 155-170. https://doi.org/10.1177/0361684316629797

Erdur-Baker, Ö. (2010). Cyberbullying and its correlation to traditional bullying, gender and frequent and risky usage of internet-mediated communication tools. New Media & Society, 12, 109-125. http://doi.org/10.1177/1461444809341260

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4, 272-299. http://dx.doi.org/10.1037/1082-989X.4.3.272

Hargittai, E., & Hinnant, A. (2008). Digital inequality: Differences in young adults' use of the Internet. Communication Research, 35, 602-621. http://doi.org/10.1177/0093650208321782

Harrison, C., Tayman, K., Janson, N., & Connolly, C. (2010). Stereotypes of black male athletes on the Internet. Journal for the Study of Sports and Athletes in Education, 4, 155-172.

Howard, P. E., Rainie, L., & Jones, S. (2001). Days and nights on the Internet: The impact of a diffusing technology. American Behavioral Scientist, 45, 383-404.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1-55. http://dx.doi.org/10.1080/10705519909540118

Jakubowicz, A., Dunn, K., Mason, G., Paradies, Y., Bliuc, A. M., Bahfen, N., Oboler, A., Atie, R., & Connelly, K. (2017). Cyber racism and community resilience: strategies for combating online race hate. Cham: Springer Nature.

Jones, S., & Fox, S. (2009). Generations online in 2009. Washington, DC: Pew Internet and American Life Project. Retrieved from http://www.pewinternet.org/2009/01/28/generations-online-in-2009/

Keum, B. T., & Miller, M. J. (2017). Racism in digital era: Development and initial validation of the Perceived Online Racism Scale (PORS v1.0). Journal of Counseling Psychology, 64, 310-324. https://doi.org/10.1037/cou0000205

Keum, B. T., & Miller, M. J. (2018). Racism on the Internet: Conceptualization and recommendations for research. Psychology of Violence. Advance online publication. http://dx.doi.org/10.1037/vio0000201

Li, Q. (2006). Cyberbullying in schools: A research of gender differences. School Psychology International, 27, 157-170. http://dx.doi.org/10.1177/0143034306064547

Love, A., & Hughey, M. W. (2015). Out of bounds? Racial discourse on college basketball message boards. Ethnic and Racial Studies, 38, 877-893. http://doi.org/10.1080/01419870.2014.967257

Museus, S. D., & Truong, K. A. (2013). Racism and sexism in cyberspace: Engaging stereotypes of Asian American women and men to facilitate student learning and development. About Campus, 18, 14-21. http://dx.doi.org/10.1002/abc.21126

Paradies, Y. (2006). A systematic review of empirical research on self-reported racism and health. International Journal of Epidemiology, 35, 888-901. https://doi.org/10.1093/ije/dyl056

Pew Research Internet Project. (2018, February 5). Social media fact sheet. Washington, DC: Pew Internet and American Life Project. Retrieved from http://www.pewinternet.org/data-trend/social-media/social-media-use-by-age-group/

Pieterse, A. L., Nicolas, A. I., & Monachino, C. (2017). Examining the factor structure of the perceived ethnic discrimination questionnaire in a sample of Australian university students. International Journal of Culture and Mental Health, 10, 97-107. https://doi.org/10.1080/17542863.2016.1265998

Snyder, L. B., & Rouse, R. A. (1995). The media can have more than an impersonal impact: The case of AIDS risk perceptions and behavior. Health Communication, 7, 125-145. http://doi.org/10.1207/s15327027hc0702_3

Thayer, S. E., & Ray, S. (2006). Online communication preferences across age, gender, and duration of Internet use. CyberPsychology & Behavior, 9, 432-440. https://doi.org/10.1089/cpb.2006.9.432

Tynes, B. M., Giang, M. T., Williams, D. R., & Thompson, G. N. (2008). Online racial discrimination and psychological adjustment among adolescents. Journal of Adolescent Health, 43, 565-569. https://doi.org/10.1016/j.jadohealth.2008.08.021

Tynes, B. M., Rose, C. A., & Markoe, S. L. (2013). Extending campus life to the Internet: Social media, discrimination, and perceptions of racial climate. Journal of Diversity in Higher Education, 6, 102-114. http://dx.doi.org/10.1037/a0033267

Tynes, B. M., Rose, C. A., & Williams, D. R. (2010). The development and validation of the online victimization scale for adolescents. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 4(2), article 2. Retrieved from https://cyberpsychology.eu/article/view/4237/3282

Umaña-Taylor, A. J., Tynes, B. M., Toomey, R. B., Williams, D. R., & Mitchell, K. J. (2015). Latino adolescents’ perceived discrimination in online and offline settings: An examination of cultural risk and protective factors. Developmental Psychology, 51, 87-100. http://dx.doi.org/10.1037/a0038432

Editorial Record:

First submission received:

October 11, 2017

Revisions received:

June 13, 2018

September 25, 2018

Accepted for publication:

October 10, 2018

Introduction

Growing research has started to focus on assessment of online racism for empirical investigations (Jakubowicz et al., 2017; Keum & Miller, 2018). Tynes, Rose, and Williams (2010) adapted a seven-item subscale of the Online Victimization Scale (OVS; Tynes et al., 2010) to examine individual and vicarious domains of interpersonal racial victimization for adolescents. Using the OVS, scholars concerned with racial victimization in cyberbullying have conducted quantitative studies and found that online racism was significantly linked to poorer mental health among adolescents (Tynes, Giang, Williams, & Thompson, 2008; Umaña-Taylor, Tynes, Toomey, Williams, & Mitchell, 2015). Recently, Pieterse, Nicolas, and Monachino (2017) added two items to the existing Perceived Ethnic Discrimination Questionnaire to assess online experiences of ethnic discrimination for Australian university students. Both of these measures help expand the racism literature to incorporate the relevant online experiences in today’s digital age. However, both measures are adaptations with limited psychometric properties (e.g., no measurement invariance testing or validation in other samples) and lack a comprehensive and specific focus on diverse online racism experiences that may be generalizable across multiple racial/ethnic minority groups.

Against this backdrop, the Perceived Online Racism Scale (PORS) is the first measure specifically designed to assess the unique diverse online activities through which people experience racism as they interact with others in online social platforms and consume online content. PORS was developed using a large-scale sample (N = 1023) of diverse racial/ethnic minority individuals (Keum & Miller, 2017). The measure may be a promising tool for researchers investigating the impact of racism in today’s digital era. However, although the PORS demonstrated good initial psychometric properties, it lacks substantial psychometric evidence as a general measure. Thus, the current study examined measurement invariance of PORS across age and gender, two major demographic categories that can differentially affect how racism is perceived.

Our theoretical rationale for testing the measurement invariance of PORS across gender and age groups is based on the framework of intersectionality, which describes how interlocking systems of oppression impact marginalized groups with regard to diverse social identities such as race, gender, class, sexual orientation, ability, and religion (Crenshaw, 1989). The salience and significance of social identities, such as gender and age, influence how racism is perceived with different meanings and interpretations (Else-Quest & Hyde, 2016). Given the implications of intersectionality, it would be difficult to assume that online racism experiences are similar across age and gender. Scholars have suggested that intersectional analyses should be applied to understand the breadth, depth, and nuances of people’s experiences in multiple contexts of privilege and oppression. As recommended by Else-Quest and Hyde (2016), conceptual and measurement invariance tests (i.e., whether the measure is assessing the same construct and interpreted similarly across different groups) of the PORS can help examine how age and gender might intersect the perception of online racism.

Perceived Online Racism Scale (PORS)

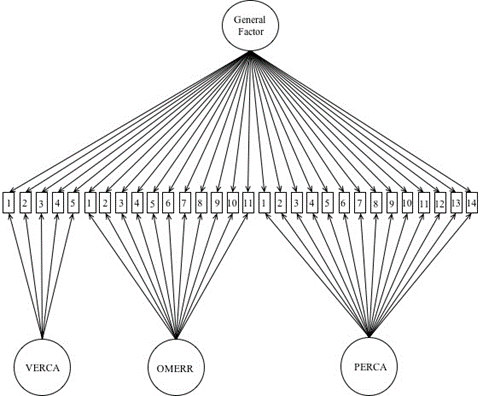

The PORS is a 30-item measure designed to assess people’s experiences of racist online interaction and exposure to racist online content and information (Keum & Miller, 2017). Participants rate how often they have experienced the online racism represented by each of the 30 items in the past six months on a five-point Likert-type scale ranging from 1 (Never) to 5 (All the time). The PORS is modeled as a bifactor structure (Figure 1) comprised of a general factor sharing variance in all of the items (scores ranging from 30 to 150) and three domain-specific factors with unique variance represented by the respective items in each domain. The Personal Experience of Racial Cyber-Aggression subscale (PERCA) contains 14 items (scores ranging from 14 to 70) and represents the direct online racial aggression that individuals can face in their online interactions with others. A sample item reads, “I have received racist insults regarding my online profile (e.g., profile pictures, user ID).” The Vicarious Exposure to Racial Cyber-Aggression subscale (VERCA) contains five items (scores ranging from five to 25) and represents observation of racial aggression experienced by other racial/ethnic minority users in their online interactions. A sample item reads, “I have seen other racial/minority users being treated like a second-class citizen.” The Online-Mediated Exposure to Racist Reality subscale (OMERR) contains 11 items (scores ranging from 11 to 55) and characterizes people’s exposure to online content (e.g., racist incidents happening in another location or online information illuminating various systemic racial inequalities) through which they may realize and witness the apparent reality of racism in society. A sample item reads, “I have been informed about a viral/trending racist event happening elsewhere (e.g., in a different location).” Please see Appendix A for the scale.
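The score ranges quoted above follow directly from the item counts and the 1-to-5 response scale. The short sketch below reproduces that arithmetic; it assumes simple summed scoring, and the names `SUBSCALE_ITEMS` and `score_range` are illustrative rather than part of the published scale.

```python
# Item counts per subscale as reported for the PORS (Keum & Miller, 2017).
SUBSCALE_ITEMS = {"PERCA": 14, "VERCA": 5, "OMERR": 11}
RESPONSE_MIN, RESPONSE_MAX = 1, 5  # 1 = Never, 5 = All the time


def score_range(n_items, low=RESPONSE_MIN, high=RESPONSE_MAX):
    """Return the (minimum, maximum) possible summed score for n_items."""
    return n_items * low, n_items * high


# Ranges for each subscale, plus the general factor across all 30 items.
ranges = {name: score_range(n) for name, n in SUBSCALE_ITEMS.items()}
ranges["PORS-total"] = score_range(sum(SUBSCALE_ITEMS.values()))

for name, (lowest, highest) in ranges.items():
    print(f"{name}: {lowest} to {highest}")
```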

Keum and Miller (2017) established good initial psychometric properties for the PORS. Internal consistency estimates ranged from .90 to .95 across the subscales. All items loaded significantly (p < .001) on the hypothesized latent factors and ranged from .61 to .83 in the confirmatory factor analysis. Regarding construct validity, the PORS scores were significantly and positively correlated to an existing measure of racism and racism-related stress for convergent validity evidence and with psychological distress and unjust views of society for criterion-related validity evidence. The scores also significantly predicted these negative mental health outcomes for predictive validity evidence. Keum and Miller (2017) also tested the incremental validity of PORS by examining whether the scores of PORS would predict unique variance in mental health outcomes over and above an existing measure of racism. The VERCA subscale scores were found to predict unique variance in psychological distress over and above an existing measure of racism. In the original development study, the correlations across the three subscales ranged from .49 to .70 during the assessment of the correlated three-factor structure. Measurement equivalence of PORS was also demonstrated across the four racial/ethnic groups (Black, Asian, Latinx, Multiracial) in the development sample (Keum & Miller, 2017) indicating that score differences among these groups reflect true results rather than response bias.

Measurement Invariance of the PORS Across Age and Gender

Age. Age is an important demographic indicator that may differentiate perception of racism. Extant racism literature suggests mixed results on the self-report of racism depending on people’s age. A review of racism measures (Paradies, 2006) noted that some studies found higher self-reported racism among older adults, whereas other studies found that younger adults reported more racism. Another handful of studies did not find any variation in self-reported racism based on age (Paradies, 2006).

Age also has unique implications for potential discrepancies in perception of online racism. National statistics on Internet use suggest that Internet use declines with age. A national survey of Internet use by PEW (PEW, 2014) found that among all Americans who use the Internet, 75% were younger adults aged 18 to 29. Younger adults also comprised more than 75% of the Americans who use social media services such as Facebook. Evidence suggests that younger adults engage in a richer array of online activities than older adults (Hargittai & Hinnant, 2008). Thayer and Ray (2006) found that younger adults exhibited significantly greater preference for online communication and relationships than older adults. More specifically, college-aged young adults (ages 18 to 24) were more likely to use a host of services on the Internet including communication tools such as messaging and chatting (Jones & Fox, 2009) and information consumption and leisurely entertainment (Howard, Rainie, & Jones, 2001). Beyond college years, working-aged young adults (ages 25 or older) increased in Internet use for job purposes and access to social services as they transitioned into their careers and toward middle-aged adulthood (Hargittai & Hinnant, 2008; Jones & Fox, 2009; PEW, 2014). Thus, literature and statistics suggest that there are differences among age groups regarding their online activities; hence, the scope of perceived online racism across age groups may not be equivalent.

For younger adults, especially college-aged young adults, their experiences of online racism may be more encompassing of the three domains represented by PORS given the wide range of their online activities (Jones & Fox, 2009). However, it is possible that the PERCA and VERCA subscales comprise the majority of their experiences given that online social interactions and communication tools are at the forefront of their Internet use. Conversely, as young adults transition into working years and then to middle-aged adulthood (40 years old or older; Arnett, 2007), their Internet use may focus more on information consumption (e.g., online news, media) rather than participation in online social interactions (Hargittai & Hinnant, 2008; Thayer & Ray, 2006). Thus, middle-aged and older adults may be increasingly exposed to the content-based exposure to racism represented by the OMERR. These implications, however, do not suggest definitive trends, as Internet use among adults is constantly increasing and changing. For instance, a recent PEW survey in 2014 on older adults suggests that Internet use and adoption have been steadily increasing; six in ten older adults now go online. Taken together, these discrepancies suggest that perception of online racism may hold different meaning and exposure based on age groups. It is possible that the conceptual validity of the PORS may be more applicable to younger adults than to middle-aged and older adults, and the three-factor structure of the PORS may not hold for the latter group, particularly regarding the PERCA and VERCA factors. Thus, based on developmental and Internet use considerations, measurement invariance of PORS was tested across college-aged young adults (18 to 24), working-aged young adults (25 to 39), and middle-aged adults (40 to 64; Arnett, 2007).

Gender. In addition to age, gender is another component that may differentiate perception of online racism. In general, more studies have found that men reported greater prevalence of racism than women, particularly in settings related to work and social services such as medical care and the legal system (Paradies, 2006). In service-oriented positions, studies found that women reported greater prevalence of racism. In the online context, research examining gender differences in perceived online racism is scant, although differential perceptions seem likely. Notably, Tynes, Rose, and Markoe (2013) found that among college-aged young adults, men reported significantly higher online racial discrimination. Some of the differences may be attributed to the context of the online exchanges. For example, a handful of studies have documented racist discourses in online social platforms with themes such as English football (Cleland, 2014), basketball (Harrison et al., 2010; Love & Hughey, 2015), and video games (Daniels & Lalone, 2012) that are stereotypically dominated by male users. Yet, literature has also documented the salience of racist materials specific to women, such as stereotypical representation of Asian American women online (Museus & Truong, 2013).

Literature on cyberbullying also provides some relevant context for understanding potential gender differences in how online racism may be perceived, given that forms of online victimization may include racial victimization (Tynes et al., 2010). For example, studies on cyberbullying among adolescents have found that boys are more likely to assume the perpetrator role while girls are more likely to be victimized (Ang & Goh, 2010; Erdur-Baker, 2010; Li, 2006), and that girls are more likely to report the incidents. The results suggest the possibility that women may be more likely to report online racism experiences, and may be more likely to be victimized by racist perpetrators on the Internet. Yet, such differences may not be warranted, as a recent study by Beckman, Hagquist, and Hellström (2013) found that girls were just as likely to perpetrate cyber-aggression and that gender differences were more related to traditional offline bullying. Taken together, the literature presents mixed findings and points to potential discrepancies in how online racism may be perceived based on gender. However, the literature seems to suggest that boys or men may be more sensitive to the perception of online racism compared to girls or women. It is possible that the validity of the three-factor structure of the PORS may be more applicable to men, particularly for the PERCA factor, given that men are often found to be perpetrators of cyber aggression. On the other hand, given that women are often found to be victims of cyber aggression, it is possible that the VERCA and OMERR factors may be particularly valid for their perception of online racism. Yet, it should not be assumed that such stereotypical depictions of gender dynamics in online racism are generalizable, which is another rationale for testing measurement invariance.

In sum, to study the potential differences due to age and gender, the current study evaluated whether the PORS can demonstrate measurement equivalence across age and gender groups.

Method

Participants and Procedure

The current sample is a subset from a previous study (Keum & Miller, 2017), which was conducted in compliance with the Institutional Review Board of the host institution. Data were collected via an online survey hosted by Qualtrics that consisted of informed consent, study variable measures, and demographic items. The survey was advertised through multiple online communication platforms such as listservs, discussion forums, and social network sites (e.g., Facebook).

The average age of the participants was 27.42 (SD = 9.77) and ranged from 18 to 67. About 33% (306) of the participants self-identified as Black/African American, 20% (185) as East Asian/East Asian American, 17% (163) as Hispanic/Latino/a American, 13% (125) as Southeast Asian/Southeast Asian American, 11% (108) as Multiracial, 2.5% (26) as Native American Indian/Alaskan Native, 2% (19) as Middle Eastern, 1% (9) as Native Hawaiian, and .5% (5) as other. About 59% (555) of the participants were women, 39% (372) men, and 2% (19) transgender.

Analytic Approach

We used Mplus 7.11 to conduct multi-group CFA to evaluate measurement invariance of the 30-item bifactor model (Figure 1) of the PORS. Items were treated as continuous indicators. We tested whether the measure operated in an equivalent manner across age (college-aged young adults, working-aged young adults, and middle-aged adults) and gender (men and women) in our sample. There were 372 men and 555 women in our sample. The age group breakdown was as follows: ages 18 to 24 (N = 484), 25 to 39 (N = 345), and 40 to 64 (N = 113). We were not able to test the model with older adults (65 years old or older) due to the limited number of participants in this age group.

Invariance testing was conducted via comparison of a series of models with increasing constraints: baseline configural model (no constraints), metric model (factor loadings constrained across the groups), and scalar model (factor loadings and item intercepts constrained across the groups). Model fit was evaluated by the following fit indices (Fabrigar et al., 1999; Hu & Bentler, 1999): (a) the comparative fit index (CFI; > .95 for good fit; .92 to .94 for adequate fit), (b) the standardized root mean square residual (SRMR; close to < .08 for acceptable fit), and (c) the root mean square error of approximation (RMSEA; close to < .08 for acceptable fit). Evaluation of the invariance was conducted by assessment of changes in the fit indices. A change in CFI (∆CFI) less than .01, change in RMSEA (∆RMSEA) less than .015, and change in SRMR (∆SRMR) less than .03 suggests no significant decrease in model fit and supports measurement invariance (Chen, 2007). The S-B chi-square tests were also conducted to assess significant changes in fit of the invariance models. However, we placed little emphasis on S-B chi-square tests as we anticipated these tests to be significant (suggesting non-invariance) given that chi-square tests are known to be sensitive to sample size and even a small difference may be found to be significant with increasing sample sizes (Cheung & Rensvold, 2002).
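The nesting of the configural, metric, and scalar models can be illustrated by counting degrees of freedom. The sketch below is not taken from the study's Mplus setup. It assumes a bifactor model with orthogonal factors, factor variances fixed to 1 and factor means fixed to 0 in a reference group, and those variances and means freed in the remaining groups once loadings and intercepts are constrained. Under these assumptions the counts agree with the df values listed in Table 1; the function names are illustrative.

```python
N_ITEMS = 30
N_FACTORS = 4  # one general factor plus three specific factors (bifactor)


def single_group_df(n_items=N_ITEMS):
    """df for one group: observed moments minus free parameters."""
    moments = n_items * (n_items + 1) // 2 + n_items  # (co)variances + means
    # Each item loads on the general factor and on one specific factor,
    # and has its own residual variance and intercept.
    free = 2 * n_items + n_items + n_items
    return moments - free


def invariance_dfs(n_groups):
    """Return df for the configural, metric, and scalar models."""
    extra = n_groups - 1  # groups other than the reference group
    configural = n_groups * single_group_df()
    # Metric: equal loadings; factor variances freed in non-reference groups.
    metric = configural + extra * (2 * N_ITEMS - N_FACTORS)
    # Scalar: equal intercepts; factor means freed in non-reference groups.
    scalar = metric + extra * (N_ITEMS - N_FACTORS)
    return {"configural": configural, "metric": metric, "scalar": scalar}


print(invariance_dfs(3))  # three age groups
print(invariance_dfs(2))  # two gender groups
```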

Results

Descriptives and Cronbach’s alphas are presented in Table 2. PORS factor-based scale scores produced Cronbach’s alpha coefficients of .87 and higher among our age and gender groups. Fit statistics for invariance models and tests are displayed in Table 1.
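For readers unfamiliar with the coefficient, Cronbach’s alpha can be computed from item-level responses as sketched below. This is a generic illustration of the standard formula, not the procedure used in the study, and the `demo` responses are hypothetical values made up for the example.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of per-item response lists.

    item_scores[i][p] is participant p's response to item i.
    """
    k = len(item_scores)
    # Total score per participant, summing across items.
    totals = [sum(responses) for responses in zip(*item_scores)]
    item_var_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical responses (1-5 scale) from six participants to four items.
demo = [
    [1, 2, 3, 4, 5, 3],
    [2, 2, 3, 5, 5, 3],
    [1, 3, 3, 4, 4, 2],
    [1, 2, 4, 4, 5, 3],
]
print(round(cronbach_alpha(demo), 2))
```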

Age

Invariance testing. As anticipated, S-B chi-square tests were all significant in comparing the invariance models (Table 1).

Table 1. Goodness-of-Fit Indicators for Structural Equation Modeling Analyses.

| Models/Samples | df | χ² | RMSEA | 90% CI | CFI | SRMR | BIC | AIC |
|---|---|---|---|---|---|---|---|---|
| Men (N = 372) | 375 | 680.846** | .047 | [.041, .052] | .948 | .042 | 26620.43 | 26150.16 |
| Women (N = 555) | 375 | 826.716** | .047 | [.042, .051] | .941 | .039 | 41006.48 | 40488.21 |
| Ages 18 to 24 (N = 484) | 375 | 685.479** | .041 | [.036, .046] | .950 | .051 | 35584.77 | 35463.80 |
| Ages 25 to 39 (N = 345) | 375 | 701.803** | .050 | [.044, .056] | .945 | .045 | 24728.33 | 24647.78 |
| Ages 40 to 64 (N = 113) | 375 | 586.944** | .071 | [.060, .082] | .896 | .070 | 7679.41 | 7731.39 |
| Age groups | | | | | | | | |
| Configural model | 1125 | 2444.100** | .061 | [.058, .064] | .926 | .052 | 68444.904 | 67842.958 |
| Metric model | 1237 | 2673.289** | .061 | [.058, .064] | .919 | .061 | 68262.821 | 67848.147 |
| Scalar model | 1289 | 2813.181** | .061 | [.058, .064] | .914 | .063 | 68211.765 | 67884.039 |
| Gender groups | | | | | | | | |
| Configural model | 750 | 1950.562** | .059 | [.056, .062] | .932 | .040 | 67795.702 | 66636.033 |
| Metric model | 806 | 2069.217** | .058 | [.055, .061] | .928 | .055 | 67531.768 | 66642.688 |
| Scalar model | 832 | 2129.194** | .058 | [.055, .061] | .926 | .055 | 67414.114 | 66650.665 |

| MI Model Comparison | ∆χ²(∆df) | p | ∆CFI | ∆RMSEA | ∆SRMR |
|---|---|---|---|---|---|
| Age groups | | | | | |
| Configural vs. Metric | 229.189(112) | <.001 | -.007 | 0 | .009 |
| Metric vs. Scalar | 139.893(52) | <.001 | -.005 | 0 | .002 |
| Scalar vs. Configural | 369.081(164) | <.001 | -.012 | 0 | .011 |
| Gender groups | | | | | |
| Configural vs. Metric | 118.655(56) | <.001 | -.004 | -.001 | .015 |
| Metric vs. Scalar | 178.632(26) | <.001 | -.002 | 0 | 0 |
| Scalar vs. Configural | 59.977(82) | <.001 | -.006 | -.001 | .015 |

Note: RMSEA = root-mean-square error of approximation; CI = confidence interval for RMSEA; CFI = comparative fit index; SRMR = standardized root-mean-square residual; BIC = Bayesian information criterion; AIC = Akaike information criterion; MI = measurement invariance. *p < .05. **p < .01.

The baseline bifactor configural model (Figure 1) had a good fit to the data across the three age groups. The configural model was compared to the metric model with factor loadings on the general and specific factors constrained across the groups. The metric model had an adequate fit to the data and the changes in fit indices indicated no significant decrement in fit from the configural to the metric model (∆CFI = -.007, ∆RMSEA = 0, ∆SRMR = .009). We then compared the metric model to the scalar model with factor loadings and intercepts on the general and specific factors constrained across the groups. The scalar model had an adequate fit to the data and the changes in fit indices indicated no significant decrement in fit from the metric to the scalar model (∆CFI = -.005, ∆RMSEA = 0, ∆SRMR = .002). We decided to accept our results as evidence for measurement invariance as the changes in CFI, RMSEA, and SRMR across the increasingly constrained models did not indicate significant decrement in model fit (Chen, 2007; Cheung & Rensvold, 2002).
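The stepwise comparison can be reproduced from the age-group fit indices in Table 1. The sketch below applies the cutoffs described in the Analytic Approach (attributed there to Chen, 2007) to the absolute change in each index; the helper names are illustrative, and treating the cutoffs as limits on absolute change is a simplifying assumption.

```python
# Cutoffs for change in fit, as described in the Analytic Approach.
CUTOFFS = {"CFI": 0.01, "RMSEA": 0.015, "SRMR": 0.03}

# Fit indices for the age-group models, copied from Table 1.
AGE_MODELS = {
    "configural": {"CFI": 0.926, "RMSEA": 0.061, "SRMR": 0.052},
    "metric": {"CFI": 0.919, "RMSEA": 0.061, "SRMR": 0.061},
    "scalar": {"CFI": 0.914, "RMSEA": 0.061, "SRMR": 0.063},
}


def fit_changes(less_constrained, more_constrained):
    """Change in each index when moving to the more constrained model."""
    return {
        index: round(more_constrained[index] - less_constrained[index], 3)
        for index in CUTOFFS
    }


def supports_invariance(changes):
    """True when every absolute change stays below its cutoff."""
    return all(abs(changes[index]) < CUTOFFS[index] for index in CUTOFFS)


metric_step = fit_changes(AGE_MODELS["configural"], AGE_MODELS["metric"])
scalar_step = fit_changes(AGE_MODELS["metric"], AGE_MODELS["scalar"])

for label, step in (("configural -> metric", metric_step),
                    ("metric -> scalar", scalar_step)):
    print(label, step, "supported:", supports_invariance(step))
```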

Figure 1. Bifactor model of the Perceived Online Racism Scale (PORS) with the general factor and three specific factors.

Note: PERCA = Personal Experience of Racial Cyber-Aggression; VERCA = Vicarious Exposure to Racial Cyber-Aggression; OMERR = Online-Mediated Exposure to Racist Reality.

Latent means. Given the evidence of PORS measurement equivalence across the age groups, we conducted latent mean comparisons. We set the factor means at 0 for the ages 18 to 24 group. The ages 25 to 39 group in our sample reported significantly lower means for VERCA and OMERR subscales but showed significantly higher means for PERCA subscale (Table 2). Compared to those aged 18 to 24, the ages 40 to 64 group reported significantly lower means in all three subscales (Table 2).

Table 2. Latent means, standard deviations, and Cronbach’s alphas.

| Sample group | PERCA M | PERCA SD | PERCA α | VERCA M | VERCA SD | VERCA α | OMERR M | OMERR SD | OMERR α | PORS-total M | PORS-total SD | PORS-total α |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Women (N = 555) | 0 | 1 | .94 | 0 | 1 | .89 | 0 | 1 | .92 | 0 | 1 | .95 |
| Men (N = 372) | .19 | 1.09 | .95 | -.55** | .79 | .89 | -.52** | .87 | .92 | -.01 | .99 | .96 |
| Ages 18 to 24 (N = 484) | 0 | 1 | .93 | 0 | 1 | .89 | 0 | 1 | .92 | 0 | 1 | .95 |
| Ages 25 to 39 (N = 345) | .26* | 1.25 | .95 | -.46** | .78 | .90 | -.44** | .96 | .93 | .22 | 1.01 | .96 |
| Ages 40 to 64 (N = 113) | -.38* | .85 | .92 | -.84** | .77 | .87 | -.92** | .90 | .92 | .28 | .92 | .94 |

Note: PERCA = Personal Experience of Racial Cyber-Aggression; VERCA = Vicarious Exposure to Racial Cyber-Aggression; OMERR = Online-Mediated Exposure to Racist Reality; PORS = Perceived Online Racism Scale; SD = standard deviation. *p < .05. **p < .01.

Gender

Invariance testing. As anticipated, the S-B chi-square tests comparing the invariance models were all significant (Table 1). The baseline bifactor configural model (Figure 1) had a good fit to the data across men and women. The configural model was compared to the metric model, in which factor loadings on the general and specific factors were constrained to be equal across the groups. The metric model had a good fit to the data, and the changes in fit indices indicated no significant decrement in fit from the configural to the metric model (∆CFI = -.004, ∆RMSEA = -.001, ∆SRMR = .015). We then compared the metric model to the scalar model, in which both factor loadings and intercepts on the general and specific factors were constrained across the groups. The scalar model had a good fit to the data, and the changes in fit indices indicated no significant decrement in fit from the metric to the scalar model (∆CFI = -.002, ∆RMSEA = 0, ∆SRMR = 0). We accepted these results as evidence for measurement invariance because the changes in CFI, RMSEA, and SRMR across the increasingly constrained models did not indicate a significant decrement in model fit (Chen, 2007; Cheung & Rensvold, 2002).

Latent means. Given the evidence of PORS measurement equivalence across gender, we conducted latent mean comparisons. We set the factor means at 0 for the women in our sample. The men in our sample reported significantly lower means for the VERCA and OMERR subscales (Table 2). The men reported a higher mean for the PERCA subscale, but this difference was not significant (Table 2).
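The nested models compared in the invariance tests, and the reason scalar invariance is needed before latent means are compared, can be summarized in equation form. The notation is an assumption introduced here for illustration and does not appear in the original: item i in group g has intercept τ, loadings λ on the general factor G and on its specific factor S(i), and latent means κ, with κ fixed at 0 in the reference group (women, or ages 18 to 24).

```latex
% Assumed notation: item i, group g, general factor G, specific factor S(i)
\begin{align*}
x_{ig} &= \tau_{ig} + \lambda^{G}_{ig}\,\xi^{G}_{g}
          + \lambda^{S}_{ig}\,\xi^{S(i)}_{g} + \varepsilon_{ig}
  && \text{(configural: same pattern, parameters free)} \\
\lambda^{G}_{ig} &= \lambda^{G}_{i}, \quad \lambda^{S}_{ig} = \lambda^{S}_{i}
  && \text{(metric: loadings equal across groups)} \\
\tau_{ig} &= \tau_{i}
  && \text{(scalar: intercepts equal as well)} \\
E[x_{ig}] &= \tau_{i} + \lambda^{G}_{i}\,\kappa^{G}_{g}
             + \lambda^{S}_{i}\,\kappa^{S(i)}_{g},
  \quad \kappa_{\mathrm{ref}} = 0
  && \text{(latent mean comparison)}
\end{align*}
```

Once loadings and intercepts are equal across groups, any group difference in the expected item scores can only come from the latent means κ, which is why the estimates in Table 2 are read as differences relative to the reference group.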

Discussion

The current study advances the psychometric property of the PORS as a general measure of online racism. We found evidence for PORS as an equivalent and reliable measure across gender (men and women) and age groups (ages 18 to 24, 25 to 39, and 40 to 64). Thus, differences in the PORS scores reflected true differences among gender and age groups rather than response bias. The results add to the promising utility of the PORS for future studies on online racism.

Additionally, our latent mean comparisons found meaningful score differences among gender and age groups that are in line with the extant literature. Across the age groups, we observed a general trend in which adults in the later age groups (25 to 39 and 40 to 64) had significantly lower scores on the PERCA, VERCA, and OMERR subscales. The findings align with existing literature suggesting lower Internet usage and reduced engagement in online social interactions with increasing age (Thayer & Ray, 2006), which in turn suggests that older adults may have fewer chances of being exposed to online racism. Thus, it appears that younger adults (ages 18 to 24) may be at greater risk of being exposed to online racism. However, one exception was the significantly higher PERCA score for working-aged adults (ages 25 to 39) compared to college-aged adults (ages 18 to 24). This difference may be attributed to the trend that working-aged adults, especially the younger members of this group, still engage considerably in online social interactions and use online communication tools, which could increase the chance that they are exposed to interpersonal racial violence on online social platforms (Jones & Fox, 2009; PEW, 2014; Thayer & Ray, 2006). It is also possible that working-aged adults, from a developmental perspective, may be more sensitive to perceiving racism or be further along in the socialization process of racial experiences in the United States (Brown & Tylka, 2010).

Regarding gender, the men in our sample exhibited significantly lower scores in VERCA and OMERR. Given that men report greater online racial discrimination (Tynes et al., 2013) and that a multitude of online social platforms are based on topics that likely attract more men than women (Cleland, 2014; Daniels, 2012; Love & Hughey, 2015), men may be more exposed to online racism in the interpersonal context (PERCA) than through the observation of others (VERCA) and content (OMERR). The men in our sample did report a higher PERCA mean, although the difference was not significant. Alternatively, the difference may be traced to cyberbullying literature suggesting that girls are more likely to report greater rates of online aggression (Ang & Goh, 2010; Erdur-Baker, 2010; Li, 2006), which may have influenced women's greater reporting of online racism experienced by other users and seen in online materials. Collectively, our findings suggest potentially greater vulnerability for women to perceive online racism. This is an interesting juxtaposition to the theme in extant literature on traditional offline racism that men report higher rates of racism (Paradies, 2006), and it should be studied further.

At the subscale level, age and gender may be differentiating factors between the PERCA subscale and the VERCA and OMERR subscales. Notably, the PERCA subscale scores differentiated the three age groups, with working-aged adults reporting the most personal experiences of online racism and middle-aged adults reporting the least. The significance of the personal experience subscale may be explained by online communication research suggesting that personal identification with online content may predict greater perceived relevance and risk of that material for an individual (Cohen, 2001; Snyder & Rouse, 1995). Thus, personal attacks may invariably be perceived as personally threatening. Within this framework, it is possible that the lower scores on VERCA and OMERR for men and for adults with increasing age are due to their lower identification with other users being racially victimized in online interactions and with content illuminating the reality of racism in society. This would be a noteworthy aspect to examine in future studies of online racism.

There are several limitations to the current study. First, we were not able to extend our invariance testing for adults older than 65. It is possible that differences in Internet use and online involvement may be more distinct and limited for older adults who are further down the age continuum. On the other hand, we were also not able to test the psychometric properties of PORS with adolescents despite the salience of racial victimization among adolescents (Tynes et al., 2010). Thus, it would be important to examine these groups in a future study to expand utility. Second, the current study treats gender and age categories as strictly separate and little is known about the intersectionality of these demographics. For example, Bastos, Celeste, Faerstein, and Barros (2010) synthesized from their review of racism measures that attributional ambiguity (i.e., difficulty interpreting feedback from others) might make it difficult for participants to report on their racism experiences without actively separating how gender and age could influence their perception. Moreover, PORS items focus on the singularity of race/ethnicity in characterizing online racism experiences and additional compounding experiences related to gender (e.g., sexism) and age (e.g., ageism) are not represented. Thus, although our study allows assessment of varying levels of online racism experiences among different gender and age groups, these differences may not necessarily represent the nuanced experiences in these groups. It would be interesting for future studies to examine how the intersections of age, gender, and race/ethnicity may lead to distinct perceptions of online racism.

Despite the limitations, our findings strengthen the psychometric foundation of the PORS as a general measure of perceived online racism. The findings contribute to future empirical studies and theory building regarding the nature of online racism. Future research can examine the role of gender and age in further differentiating the subscales of the PORS. Gender and age may also serve as useful moderators in future studies investigating predictors and outcomes associated with online racism. It would be important to identify factors and interventions that can help mitigate the risks of online racism (Jakubowicz et al., 2017; Keum & Miller, 2018), especially for women and younger adults, given that they reported greater levels of online racism.

Acknowledgements

No acknowledgements.

Author Disclosure Statement

Brian TaeHyuk Keum, M.A., has no disclosures to report and there are no conflicts of interest.

Matthew J. Miller, Ph.D., has no disclosures to report and there are no conflicts of interest.

Appendix

The Perceived Online Racism Scale (PORS v1.0)

INSTRUCTION: We are interested in your personal experiences of racism in online settings as you interact with others and surf the Internet. As you answer the questions below, please think about your online experiences in the past 6 months.

Please rate your responses based on the following options: 1=Never, 2=Rarely, 3=Sometimes, 4=Often, 5=Always.

In the past 6 months, I have…

1. Received racist insults regarding my online profile (e.g., profile pictures, user ID).

2. Been kicked out of an online social group because I talked about race/ethnicity.

3. Been intentionally invited to join racist online social groups/hate groups.

4. Received replies/posts suggesting that I should avoid connecting online with friends from my own racial/ethnic group.

5. Received racist insults about how I write online.

6. Been threatened of being harmed or killed due to my race/ethnicity.

7. Received replies/posts hinting that my success is surprising for a person of my race/ethnicity.

8. Received a message with a racist acronym such as FOB (Fresh Off the Boat) or PIBBY (Put In Black’s BackYard).

9. Been harassed by someone (e.g., troll) who started a racist argument about me for no reason.

10. Received a racist meme (e.g., racist catchphrases, captioned photos, #hashtags etc.).

11. Been tagged in (or shared) racist content (e.g., websites, photos, videos, posts) insulting my race/ethnicity.

12. Received posts with racist comments.

13. Received replies/posts hinting that what I share online cannot be trusted due to my race/ethnicity.

14. Been unfriended/lost online ties because I disagreed with racist posts.

15. Been informed about a viral/trending racist event happening elsewhere (e.g., in a different location).

16. Been informed about unfairness in healthcare for racial/ethnic minorities (e.g., biased quality of treatment, insurance issues).

17. Seen online videos (e.g., YouTube) that portray my racial/ethnic group negatively.

18. Encountered online resources (e.g., Urban Dictionary) promoting negative racial/ethnic stereotypes as if they are true.

19. Been informed about unfairness in financial gains for racial/ethnic minorities (e.g., earning less money than Whites for doing the same work, unfair housing and loan opportunities).

20. Been informed about unfairness in education for racial/ethnic minorities (e.g., higher suspension rates for racial/ethnic minority students).

21. Been informed about a viral/trending racist event that I was not aware of.

22. Seen online news articles that describe my racial/ethnic group negatively.

23. Seen photos (e.g., Google images) that portray my racial/ethnic group negatively.

24. Encountered a viral/trending online racist content (e.g., many likes, stars).

25. Encountered online hate groups/communities against non-White racial/ethnic groups.

26. Seen other racial/minority users receive racist comments.

27. Seen other racial/minority users being treated like a second-class citizen.

28. Seen other racial/minority users being treated like a criminal.

29. Seen other racial/minority users receive racist insults regarding their online profile (e.g., profile pictures, user ID).

30. Seen other racial/minority users being threatened to be harmed or killed.

Personal Experience of Racial Cyber-Aggression (PERCA): Items 1-14

Online-Mediated Exposure to Racist Reality (OMERR): Items 15-25

Vicarious Exposure to Racial Cyber-Aggression (VERCA): Items 26-30
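The item-to-subscale key above can be turned into a small scoring routine. The sketch below is a hypothetical illustration, not the authors' scoring procedure: it assumes that subscale and total scores are computed as item means on the 1 to 5 response scale, which the appendix does not state, and the names `SUBSCALE_ITEMS` and `score_pors` are invented for this example.

```python
# Item numbers (1-based) for each subscale, taken from the key above.
SUBSCALE_ITEMS = {
    "PERCA": range(1, 15),   # items 1-14
    "OMERR": range(15, 26),  # items 15-25
    "VERCA": range(26, 31),  # items 26-30
}


def score_pors(responses):
    """Score one respondent's 30 PORS answers (each 1=Never ... 5=Always).

    ASSUMPTION: scores are item means; the source does not specify
    whether means or sums are used.
    """
    if len(responses) != 30:
        raise ValueError("expected 30 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")

    scores = {}
    for name, items in SUBSCALE_ITEMS.items():
        values = [responses[i - 1] for i in items]  # convert to 0-based index
        scores[name] = sum(values) / len(values)
    scores["PORS-total"] = sum(responses) / len(responses)
    return scores


# Example respondent: "Never" on PERCA items, "Sometimes" on OMERR items,
# "Always" on VERCA items.
example = [1] * 14 + [3] * 11 + [5] * 5
print(score_pors(example))
```

Keeping the item ranges in one dictionary makes it easy to switch to sum scores or to another item ordering if the administered form differs from the appendix.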

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright © 2018 Brian TaeHyuk Keum, Matthew J. Miller