A method for collecting and interpreting interpersonal behavioral data in Second Life: A sample study on Asians’, Blacks’, and Whites’ social distances

John Tawa1, Assawin Gongvatana2, Marcos Anello3, Uma Shanmugham4, Timothy Lee-Chuvala5, Karen L. Suyemoto6

2 Brown University, Providence, RI, USA

3 Independent research collaborator, Boston, MA, USA

4 Massachusetts School for Professional Psychology, Newton, MA, USA

Abstract

Keywords: Second Life, virtual world, social distance, interpersonal behavioral data

Introduction

Research on inter-group behavior is increasingly being conducted using virtual world technology (e.g., Eastwick & Gardner, 2009; Feldon & Kafai, 2008). Although this research approach is still in its early development, its potential for facilitating social psychological research has already been recognized (Blascovich et al., 2002; Lazer et al., 2009). For example, social psychologists doing experimental research have long recognized the tension between the need for developing tightly controlled environments (e.g., a laboratory) while avoiding the creation of mundane social environments that lack the nuances of a real life environment (Blascovich et al., 2002). Virtual worlds offer a setting in which a realistic social environment can be approximated, while maintaining a tightly controlled environment.

In addition, this method of study is promising in that it offers us contexts in which to examine participants interacting in real-time and with other live participants (Feldon & Kafai, 2008). Previously, research examining real-time group interactions has primarily been the work of ethnographers, and their methods commonly face challenges related to accurate and unbiased recording of their observations. Undoubtedly, the vast majority of research in social psychology has fallen back on paper and pencil survey measures that rely primarily on participants’ imagined or self-reported perceptions. For example, intergroup relations researchers have long been interested in the concept of social distance, that is, a person’s feeling of proximity and closeness to another social group. Standard measures of social distance ask people to rate the degree to which they feel comfortable interacting with members of various racial/ethnic groups (Bogardus, 1925; Rollock & Vrana, 2005). Research using standard social distance measures has found that Blacks and Asians, when compared to other racial dyads, maintain a relatively large social distance from one another (e.g., Hraba, Radloff, & Gray-Ray, 1999; Smith, Bowman, & Hsu, 2007; Thornton & Taylor, 1988; Weaver, 2008). Hraba et al. (1999) found that Black undergraduates (n = 208) self-reported more social distance towards Asians than White undergraduates (n = 192) reported towards Asians. Consistently, Smith et al. (2007) found that Asian undergraduates (n = 575) self-reported significantly more social distance towards Blacks than White undergraduates (n = 122) reported towards Blacks. Despite the important contribution of standardized measures of social distance for understanding race relations, responses invariably reflect participants’ self-perceptions of their feelings towards members of other ethnic/racial groups, which are prone to self-perception biases such as social desirability.

Virtual technology offers social psychologists an approach for studying social distance with highly precise behavioral data. Environments in which participants interact can be tightly controlled but rich with detail, replicating real-life environments. Participants do not have to be aware of what they are being assessed for, avoiding challenges related to self-perception and social desirability. As an example of social distance research using virtual technology, Bailenson, Blascovich, and Guadagno (2008) fitted 64 subjects with a headset that displayed a virtual landscape and an avatar (that either bore a photographic resemblance to the subject or did not). As the subject walked around in an empty room, their movement was reflected in the virtual landscape. Participants’ interpersonal distance to the avatar was continually tracked and later operationalized as the closest distance achieved during the course of the study trial. As another approach, Yee and Bailenson (2008) have written a script to collect user data within Second Life, and have measured social distance by collecting users’ Cartesian coordinates on the Second Life grid.

Virtual world research has also begun to establish some measure of validity for virtual world behavior more generally as representative of real life behavior. This has largely been achieved by replicating established findings from social psychological research within virtual worlds. For example, classic social psychological theories such as “foot in the door” techniques (Eastwick & Gardner, 2009), attractiveness bias (Yee, Bailenson, Urbanek, Chang, & Merget, 2007), and even Stanley Milgram’s study on obedience (as cited in Eastwick & Gardner, 2009) have been replicated in virtual worlds, arriving at similar findings. In the study using the virtual headsets described above, Bailenson, Blascovich, and Guadagno (2008) found that people tended to maintain closer interpersonal distance and were more willing to commit embarrassing acts in relation to virtual persons that more closely resembled themselves as opposed to those that resembled other people. They also found avatars that more closely resembled themselves to be more attractive and likeable. Using the computer script developed by Yee and Bailenson (2008), Yee et al. (2007) found that, consistent with social psychological theory, male-to-male dyads maintained a larger social distance when compared to female-to-female dyads.

One challenge facing virtual world researchers is the possibility of an “online disinhibition effect” in which participants tend to display more extroverted, assertive, and even hostile behavior online than in real life (Postmes, Spears, & Lea, 2002; Suler, 2004). One possible interpretation is that these behaviors are actually a better reflection of one’s “true self” when compared to self-report methods that may be subject to social desirability effects. For instance, a participant who holds relatively hostile views about inter-racial interactions may be more willing to express this hostility in a virtual world where he or she is anonymous. In any case, the extent to which behavior in an online environment is more or less reflective of real behavior when compared to self-report methods remains undetermined. Ultimately, we propose that the strongest approach to approximating behavior is the triangulation of multiple methods. At present, the behavioral sciences have a surplus of self-report methods, and greater inclusion of virtual world research methods provides a widely useful alternative.

In this paper, we provide details for collecting and interpreting interactive behavioral data in Second Life. While we do provide a sample research study to help illustrate our techniques, our focus is on the method, and the full results and implications of the sample study are beyond the scope of this paper. Our methodological approach adapts the Second Life computer script developed by Yee and Bailenson (2008). Our contribution is the application of this script to an interactive environment in Second Life in which all participants are wearing the script. Thus, we describe our data set as interactive; each participant’s social distance is not measured in isolation, but rather is measured in relation to the other participants. We find this approach promising in that it replicates real life conditions in which people with whom we interact are rarely physically stationary, and are themselves interacting with multiple people simultaneously. In addition to providing readers with the adaptation of the computer script developed by Yee and Bailenson (2008), we also emphasize our approach to computing social distance (once data has been collected) and the logistics of setting up the study (e.g., attaching the script to participants’ avatars).

A Study of Social Distance in Second Life

As a relatively new method of data collection, the prospect of developing a research study in a virtual world may seem overwhelming. This paper is a “how-to” guide for setting up a virtual world study for interactive behavioral data, using our study as an example. Researchers may choose to follow our method in its entirety, or glean components of it (e.g., user data collection, data reduction) for adaptation to their own study approaches. A complete replication of our study involves the following components: 1) setting up a virtual environment in Second Life, 2) collecting user data in Second Life, 3) logistics of running a study trial, and 4) reducing user data to usable study variables.

Setting the Second Life Context

Although designing and setting up a study in a virtual world does take considerable effort, we believe Second Life (SL) as a research method is approachable even to a novice. First-time users may experience a relatively steep learning curve, and we hope to facilitate this learning with this paper. SL allows the researcher to create almost any virtual environment imaginable: tropical, barren, urban, etc. Study trials could be conducted in an indoor setting (e.g., a function hall or University setting) or outdoor setting (e.g., a park, a beach, a garden, etc.). Almost any décor or detail that one can imagine is available on SL, from the most commonplace (e.g., flower beds) to the most obscure (e.g., a pet dolphin). The first step is for the researcher to obtain an “island” or a plot of land on the mainland on which to build. Prefabricated land can be leased but tends to be quite costly (approximately $1,000 for a plot of land as well as $300 monthly maintenance fees). Researchers may seek more affordable options; for example, in our study, we borrowed an island that was already owned and maintained by the primary researcher’s University. This island is a 65,536 m² space that was developed by volunteer students and administrators at the University. In either case, once land is obtained, the researcher can develop this land and set “permissions” which enable or restrict specific functions and behaviors on the island. One consideration for researchers interested in running a study on Second Life is that as a general rule, each region (e.g., our island) can only host a maximum of approximately 50 avatars at a time. Our method for addressing this limitation in Second Life was to run multiple “trials” of approximately 20-30 participants at a time and then combine the data.

Building an island in Second Life. When a SL island is new, it is a nondescript plain of land surrounded by water. The developer should begin by landscaping the island, meaning parts of the land can be raised or lowered (to create hills, valleys, etc.) or removed (to create ponds, etc.). The SL viewer provides all the tools necessary to “build” the land, which the developer uses to landscape the island. Terrain is sculpted with a bulldozer-shaped tool, which allows the developer to raise, lower, smooth or texture land. Landscaping items, such as trees and tall grass, are included as default items; however, specific kinds of trees, shrubs and flowers can be purchased from virtual garden shops. Developers also have the option to subdivide, or “parcel” out, the land, and different user settings can be applied to each parcel. This option is very useful to researchers who may want to contain participants to a smaller portion of the island. For example, in our study of social distance, we wanted to keep everyone within eyesight of each other. Thus we restricted participants to a parcel of exactly 9,840 square meters, with the furthest distance across the parcel never being greater than approximately 100 meters. Participants in our study primarily gathered underneath a gazebo, although they did have the option of moving outside of the gazebo to an adjacent garden and deck. A screenshot of participants interacting in the gazebo from one of our study trials is shown in Figure 1.

Buildings on the island can be purchased from other individuals or built from primitive objects (referred to as “prims”). To buy a prefabricated building from another individual, one would enter a description of what one is looking for (e.g., prefab office building) in the search function and teleport to the link provided. Alternatively, one can build one’s own buildings or structures from prims, which are a set of 15 basic shapes: cube, prism, pyramid, tetrahedron, cylinder, hemicylinder, cone, hemicone, sphere, hemisphere, torus, tube, ring, tree, and grass. These basic shapes and plants can be adjusted in size and shape. For example, a basic pyramid can be repositioned, resized, rotated, twisted, sliced, and the slope can be adjusted to a desired shape. Land building is a dynamic process: as users’ needs change, so do the shape and function of the land. To begin manipulating the land in SL one only needs a parcel of land. By selecting the “Land Tool” from the Build menu, one can begin to create mountains and valleys, rivers and lakes, an oasis or an ocean front property.

Setting permissions on your Second Life island. “Permissions” are the rights governing what is and is not allowed on the island. In order to limit participants’ functions and run computer scripts on an island, the researcher needs to configure the island (or specific parcels on an island) to allow certain kinds of behavior and disallow others. In SL, participants can fly (the default setting) or that capability can be disabled in order to better mirror a real world environment. In our study, we did not allow flying because it would appear unrealistic and because our script was written to only compute 2-dimensional social distance (calculation of social distance among flying participants is possible, however, as Second Life does provide 3-dimensional coordinates). Similarly, we did not allow voice chatting so that all conversations between avatars could be saved as a text file. Researchers can also allow or disallow “pushing” (i.e., bumping into other people and knocking them backwards) that could be tallied for gaming purposes or as a possible approximate measure of aggression. Other permissions include what electronic media plays through the parcel. For example, one could stream music throughout the parcel or post a video including participant instructions about how to navigate through SL. Permissions also need to be set to determine which users can build prims or edit the terrain. It is important to limit these particular permissions to only the researcher so that the participants do not change the landscape.

Lastly, if one is using a computer script to collect data, one will need to specify that scripts be allowed to run on the specified parcels. Scripts, generally speaking, are programs created by SL users with building privileges that allow virtual objects to take on greater functionality. As an example, a script that collects behavioral data can be hidden inside a virtual hat that is worn by the participant. Setting permissions usually involves checking or un-checking a box in the “about land” window. Once the land has been sculpted and permissions have been set, the researcher is ready to run scripts on the island that collect user data. In the next section, we describe the development of data collecting scripts.

Collecting User Data: Participants’ Coordinates on the Second Life Grid

“User data” is a general term and encompasses any information that reflects a participant’s (or SL user’s) activity in SL. In this paper, we focus on data collection of participants’ coordinates (one type of user data) and the analysis of the coordinates as a measure of social distance. Participants’ coordinates are collected via the computer script on SL. The data is then piped to a website (we used www.1and1.com) in which the data is stored and can then be downloaded into an Excel or SPSS file. In SL, scripts must be attached to a prim or a virtual object in order to run. This virtual object could be, for example, a hat that is worn by participants, or it can just be a shape placed on the user’s Heads-Up Display (HUD; the user’s viewer or screen). In our study, we created a wooden box that contained the script and that was placed in the upper left hand corner of the user’s screen. The object containing the script had to be configured for permissions so that everyone who had a copy of the object could run the script. To change permissions of the object, right click on the object and edit the object’s details. In our study, we allowed permissions to transfer (or copy) the object to another user, but did not allow anyone to modify the computer script.

Participants’ coordinates on the SL grid were collected using a modified version of the computer script for SL behavioral data collection offered by Yee and Bailenson (http://www.nickyee.com/pubs/secondlife.html; 2008). Yee and Bailenson’s script triggered at regular 30 second time intervals and was written to collect multiple forms of user data including coordinates, indicators of whether users were walking, running, or flying, and the chats spoken between participants. The script was developed to collect information from avatars within a 200-meter range of the researcher’s avatar. In our study, the participants themselves were given the script (contained in a virtual box) so that we would have data from the subjective perspective of every avatar as it interacted with other avatars (rather than only from the researcher’s perspective). Thus, our modified script recorded participants’ own coordinates on the SL grid, which were then used to compute their social distances from one another. Additionally, because we wanted to compute the most precise social distance data possible, our script triggered every 1 second. This enabled us to more accurately “match” participant pairs when computing their social distances. Since we were calculating social distances based on participant pairs’ coordinates on the grid, we needed to make sure that we were using coordinate pairs that were recorded at the exact same time. Additionally, because we were collecting data at one second intervals from more than 20 people at one time, all of which was being simultaneously piped to a single website database, we had to reduce the types of data being collected and downsize the data buffer so that there would not be a data overflow (given the vast amounts of data flowing at once). Our script only collects the avatar’s coordinates. Our adapted script is available in Appendix A.
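The matching step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the script in Appendix A: the record layout (avatar name, whole-second timestamp, x, y) and the function name `match_pairs` are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def match_pairs(records):
    """Pair up avatars whose coordinates were recorded in the same second.

    records: iterable of (avatar, second, x, y) tuples (assumed layout).
    Returns a dict mapping each second to a list of
    ((avatar_a, xa, ya), (avatar_b, xb, yb)) tuples.
    """
    by_second = defaultdict(dict)
    for avatar, second, x, y in records:
        # If an avatar reports twice in one second, keep the latest reading.
        by_second[second][avatar] = (x, y)

    matched = {}
    for second, positions in by_second.items():
        names = sorted(positions)
        matched[second] = [
            ((a, *positions[a]), (b, *positions[b]))
            for a, b in combinations(names, 2)
        ]
    return matched


# Made-up readings for illustration only.
records = [
    ("avatar_a", 0, 10.0, 20.0),
    ("avatar_b", 0, 13.0, 24.0),
    ("avatar_a", 1, 11.0, 20.0),  # avatar_b has no reading for second 1
]
matched = match_pairs(records)
```

In this sketch a second in which only one avatar reported yields no pairs, so no distance is computed from coordinates recorded at different times.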

Running Study Trials

Recruiting participants for a study using virtual world technology presents some logistic challenges. Research participants are not necessarily familiar with virtual world technology, and asking them to do things such as attaching scripts to their HUDs can be overwhelming and can lead to participant drop-out and selection bias. Thus, we present some logistic strategies specific to our study to circumvent some of these problems.

We recruited subjects both through online platforms (e.g., Craigslist, Facebook) and in live college classes. To aid our online recruitment, we created a website containing our project information and live contact information (mailing addresses and phone numbers at our University) so that we would be seen as legitimate. Regardless of whether we recruited online or in classrooms, we directed potential participants to an online survey “screener” that was used to determine eligibility. In our study we were only recruiting monoracial Black, White, and Asian participants. Participants selecting multiple racial backgrounds were screened out of this study. We also used the screener to ask prospective participants what type of computer they had access to, because Second Life can only run on relatively recent computers. We also asked if they had prior experience using Second Life or any other virtual world. While we did not require a specific level of SL experience to participate in this study, we wanted to have this information to later be able to run analyses to control for any possible confounding effects of user experience on social distance.

After identifying qualifying participants, we emailed them a welcome email with the dates and times of upcoming trials and asked them to specify when they wanted to participate. Once they confirmed a date with us, we then sent them an informed consent document, which explained the study and had simple instructions on how to download Second Life and how to set up an account with a username and password. We then asked them to send an email back to us saying that they agreed with the consent form and asked them to share with us their username and password to their new Second Life account so that we could configure their account (e.g., attach the data collection script).

After receiving this email, we then logged into their SL accounts to attach the script, which appeared as a visible “wooden box” on the top left side of their screen. Participants were explicitly told that the wooden box was used to “collect data” from them, although we did not specify what kind of data we were collecting because we did not want to influence their behavior. We also deposited 100 Linden Dollars (SL currency) into their account for them to buy clothing that best fit their sense of style. We then added them to a “user group” that was created for the purpose of this study and which allowed us to communicate with the group as a whole during the study trial (e.g., it allowed us to simultaneously send instructions to everyone participating in the trial). We then asked them to customize their avatar to resemble themselves as closely as possible. We emailed them an “avatar customization” document that gave them detailed but simplified instructions on how to change their avatar’s body (facial features, hair, torso, legs, etc.) and skin color by using the sliding bars found in the menu on Second Life. We then asked them to buy clothes that closely resembled what they wore in real life from the stores on Second Life with the Linden Dollars that we had given them. We asked that they spend no more than an hour doing all of this.

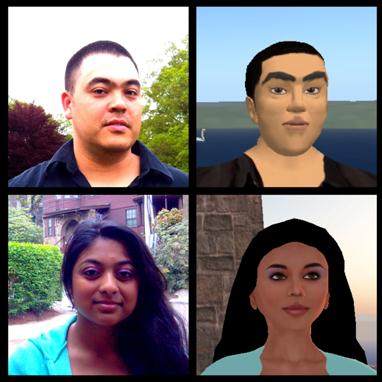

Participants generally felt that they were able to create avatars that resembled themselves in “real life.” In a follow up survey to the study we asked participants: “To what extent did your avatar resemble you” (where 1 = very much; 7 = not very much). Among the 32 participants in the sample study described above, participants’ average score on this question was 2.81 (SD = 1.47). We also asked how much their avatars resembled them racially, and the average score was 1.87 (SD = 1.31). We did not find significant differences on these questions by racial group. In Figure 3 we provide pictures of two of the authors of this study, side-by-side with their avatars, to illustrate how closely an avatar can be made to resemble a real life person.

On the trial date, we again signed into each participant’s account and teleported each participant’s avatar to our study location, so that participants wouldn’t have to figure out how to do this. We also created our own avatars so that we could observe the study taking place. We asked the participants to log onto their account about 10 minutes before the trial time so that we could start the trial in a timely manner. After taking attendance, we sent out group instructions/notifications through the user group about what they had to do for the study.

While the participants were interacting with each other through their avatars, our avatars walked around and observed the study. We had attached an invisibility object to our avatars and so once activated (or worn), we couldn’t be seen by the other avatars. We recorded their chats by saving the chatlogs (as text) onto our computer, an option in the preferences section of Second Life. We were able to pick up the chat feed specific to a group of avatars if we stood about 20 meters from the avatars. Although we collected chat logs as supplemental data, our primary interest was in the actual social distance between participants throughout the study.

Data Reduction: Creating a Social Distance Variable from Participants’ Coordinates

One major challenge facing virtual world researchers is the considerable amount of data that are acquired during an experiment, and the methods for transforming them into meaningful and usable variables. In our study, Asian, Black, and White participants interacted for 15 minutes at a virtual social event on our island in Second Life. The purpose of our study was to test the sociological theory (Blumer, 1958) that suggests that competition for resources creates distance between minority groups (e.g., Blacks and Asians). Thus, we modeled a context of competition for resources in a virtual world setting by asking participants to imagine that they were members of a town called “Anytown, USA,” and that their task was to select five other participants that they believed represented the hardest working and most intelligent citizens. Participants were led to believe that the person who put together the strongest “team” would win a $300 gift certificate (in real life). In actuality, a $300 gift certificate was awarded, but was randomly selected and not based on participants’ peer selections.

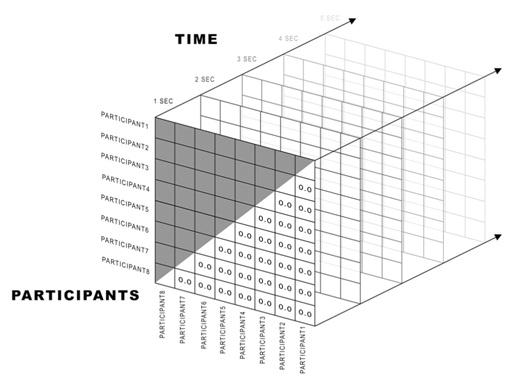

Within this context of competition, we measured each participant’s average social distance score to each racial group as they interacted across the entire 15 minutes. Figure 2 represents how the data was collapsed. Social distance was calculated for every possible participant pair, at every one-second time interval, and then averaged across the 15-minute session.

To illustrate, consider one of our study trials, which included 5 Asian, 4 Black, and 11 White participants. In the first second of the trial, one of the Asian participants (username: jtaznboy123) stood 8.33, 9.14, 10.40, and 12.69 meters away from each of the Black participants respectively. In the first second of the trial, jtaznboy123 had a “social distance to Blacks” score of 10.14 ((8.33 + 9.14 + 10.40 + 12.69) / 4 = 10.14). This process was then repeated for every second across the 15-minute trial and was then averaged across all time points (for a total of 900 time points), resulting in a single social distance score in relation to each group across the 15-minute trial. Jtaznboy123’s final social distance score to Blacks was 8.80. Ultimately, each participant’s interactive behavior results in one social distance score in relation to each racial group; for example, jtaznboy123 had an average social distance score of 8.60 towards the other 4 Asian participants, an average social distance score of 8.80 toward the 4 Black participants, and an average social distance score of 7.84 toward the 11 White participants.
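The arithmetic in this example can be checked with a short Python sketch. The four first-second distances are those reported above; the helper `session_score` is a hypothetical name introduced only to show the second averaging step across time points.

```python
from statistics import mean

# Distances (meters) from jtaznboy123 to each of the four Black participants
# in the first second of the trial, as reported in the text.
first_second = [8.33, 9.14, 10.40, 12.69]
score_t1 = mean(first_second)
print(round(score_t1, 2))  # 10.14


def session_score(per_second_distances):
    """Average the per-second group means across all recorded seconds.

    per_second_distances: one list of distances per second (assumed layout).
    """
    return mean(mean(distances) for distances in per_second_distances)
```

For a full 15-minute trial, `per_second_distances` would hold 900 lists, one per second, and `session_score` would return the single score for that participant and target group.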

One potential problem with measuring social distance in this manner is that once another person or group of people is outside of one’s visible range, the absolute distance becomes insignificant. For example, while it is significant, as an indicator of social distance, whether someone stands 5 meters versus 10 meters from a subject, it is insignificant whether someone stands 100 meters versus 200 meters from a subject, since both of these people will be outside of the subject’s visual field. In Second Life, other avatars become visible to a subject’s avatar when they enter within a 50-meter range, which is referred to in Second Life as a “draw distance.” This problem can be addressed by restricting the parcel that participants interact on to less than 50 meters across in any direction. In our study, the parcel in some directions was greater than 50 meters; thus, obtained distance scores of greater than 50 were recoded to 50. For example, both scores of 51 and 200 were recoded as 50.

The physical distance between two avatars was determined by entering both avatars’ Cartesian coordinates (where they are on the SL grid at any second) into the following equation (Pythagorean Theorem):

$$\text{Distance} = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}$$
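As a minimal sketch of this formula in Python (the function names and the sample coordinates are illustrative assumptions, not part of the authors' scripts), the 2-dimensional distance and a 3-dimensional variant that would apply if flying were allowed can be written as:

```python
import math


def distance_2d(p, q):
    """Distance between two avatars from their (x, y) grid coordinates."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def distance_3d(p, q):
    """Same idea with (x, y, z) coordinates, e.g. if avatars could fly."""
    return math.dist(p, q)


# Made-up coordinates: a 3-4-5 right triangle on the grid.
print(distance_2d((10.0, 20.0), (13.0, 24.0)))  # 5.0
```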

Because of the large number of data points required to determine social distance scores for each participant, our computations were aided by an algorithm written in R (see Appendix B), which creates a 3-dimensional matrix like the one in Figure 2, including participant pairs (on the x and y axes) and time (on the z axis). Any distance determined to be greater than 50 meters was recoded as “50 meters” in order to control for the variability in distance among avatars more than 50 meters apart from one another.
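The structure of such a matrix can be sketched in plain Python. This is an illustration under stated assumptions and not the R algorithm in Appendix B: the input layout `positions[t][i]`, the function names, and the sample coordinates are all hypothetical.

```python
import math

CAP_M = 50.0  # distances beyond the 50-meter draw distance are recoded to 50


def distance_matrix(positions):
    """Build d[i][j][t]: capped distance between participants i and j at second t.

    positions[t][i] is the (x, y) coordinate of participant i at second t
    (assumed layout; every participant has a reading for every second).
    """
    n_seconds = len(positions)
    n = len(positions[0])
    d = [[[0.0] * n_seconds for _ in range(n)] for _ in range(n)]
    for t, frame in enumerate(positions):
        for i in range(n):
            for j in range(i + 1, n):
                raw = math.hypot(frame[i][0] - frame[j][0],
                                 frame[i][1] - frame[j][1])
                d[i][j][t] = d[j][i][t] = min(raw, CAP_M)
    return d


def mean_distance_to_group(d, i, group):
    """Average distance from participant i to the members of `group` over time."""
    others = [j for j in group if j != i]
    n_seconds = len(d[i][others[0]])
    per_second = [sum(d[i][j][t] for j in others) / len(others)
                  for t in range(n_seconds)]
    return sum(per_second) / n_seconds


# Made-up coordinates for three participants over two seconds.
positions = [
    [(0.0, 0.0), (3.0, 4.0), (100.0, 0.0)],
    [(0.0, 0.0), (6.0, 8.0), (100.0, 0.0)],
]
d = distance_matrix(positions)
score = mean_distance_to_group(d, 0, [1, 2])
```

Here participant 2 stands 100 meters away, so that distance is recoded to 50 before any averaging takes place.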

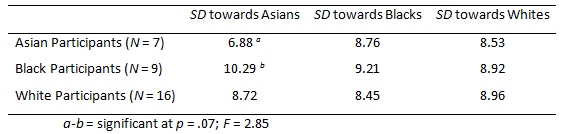

In addition to examining individual social distance scores, group based data can be found by averaging individual scores (or the algorithm could be revised to produce this directly). As an example, Table 1 lists mean scores for each racial group from a combined sample of data that includes the trial mentioned above as well as one other trial (for a total of 7 Asian, 9 Black, and 16 White participants).
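Averaging individual scores into group-level means could look like the following Python sketch. The tuple layout, the function name `group_means`, and the numbers are made up for illustration; they are not values from Table 1.

```python
from collections import defaultdict
from statistics import mean


def group_means(scores):
    """Average individual scores by (participant's group, target group).

    scores: list of (own_group, target_group, individual_score) tuples
    (assumed layout).
    """
    buckets = defaultdict(list)
    for own, target, score in scores:
        buckets[(own, target)].append(score)
    return {pair: mean(values) for pair, values in buckets.items()}


# Made-up individual scores, for illustration only (not study data).
scores = [
    ("Asian", "Black", 9.0),
    ("Asian", "Black", 11.0),
    ("Black", "Asian", 10.0),
]
means = group_means(scores)
```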

Consistent with previous research on social distance described earlier, some of our results suggest that Blacks and Asians do seem to have considerable social distance from one another, at least within the context of resource competition. Asians’ social distance towards Asians (6.88) was smaller than Blacks’ social distance to Asians (10.29), and these findings trended towards significance (p = .07) even with the relatively small sample. Again, a full analysis of these results is beyond the scope of this paper, and the primary objective here is to share this research method.

Conclusion

This paper is a “how-to” guide for conducting interactive behavioral studies in Second Life. The details of our study are provided so that they can be adapted for future social science research projects in Second Life. We believe virtual world research has the potential to become a standard and valuable tool in the social sciences for several reasons: 1) the relative ease of bringing together research participants from all over the world in a single shared space; 2) the ability to study people interacting in real time and with other “real” subjects; 3) the precision and variety of user data which is easily available; and 4) the ability to create realistic, richly detailed environments that are simultaneously tightly controlled. Our study is, to our knowledge, the first to collect data from the perspective of the research subjects as they interact with one another in a virtual world. Through meticulous planning and some trial and error, we have developed a relatively user-friendly method for collecting and analyzing this data. This paper provides social scientists, both novice and expert in virtual technology, with details of an innovative methodological approach for studying complex human behavior in interaction with others.

Acknowledgements

In addition to his co-authors, the first author would like to thank his research assistants Ruqian "Daisy" Ma, Cameka Hazel, Delia Gleason, Maryanne Chow, and Karla Ortega for their contributions to this project, and graphic designer Yeheshua Johnson for providing Figure 3.

References

Bailenson, J. N., Blascovich, J., & Guadagno, R. E. (2008). Self-representations in immersive virtual environments. Journal of Applied Social Psychology, 38, 2673–2690.

Blascovich, J., Loomis, J., Beall, A. C., Swinth, K. R., Hoyt, C. L., & Bailenson, J. N. (2002). Immersive virtual environment technology: Just another methodological tool for social psychology? Psychological Inquiry, 13, 146–149.

Bogardus, E. S. (1925). Measuring social distances. Journal of Applied Sociology, 9, 299-308.

Bogardus, E. S. (1926). Social distances between groups. Journal of Applied Sociology, 10, 473-479.

Eastwick, P. W., & Gardner, W. L. (2009). Is it a game? Evidence for social influence in the virtual world, Social Influence, 4, 18-32.

Feldon, D. F., & Kafai, Y. B. (2008). Mixed methods for mixed reality: Understanding users' avatar activities in virtual worlds. Educational Technology Research and Development, 56, 575-593.

Hraba, J., Radloff, T., & Gray-Ray, P. (1999). A Comparison of black and white social distance. The Journal of Social Psychology, 139 , 536-539.

Lazer, D., Pentland, A., Adamic, L., Aral, S., Barabasi, A. L., Brewer, D., Christakis, N., Contractor, N., Fowler, J., Gutmann, M., Jebara, T., King, G., Macy, M., Roy, D., & Van Alstyne, M. (2009). Computational social science. Science, 323 ,721–723.

Postmes, T., Spears, R., & Lea, M. (2002). Intergroup differentiation in computer mediated communication: Effects of depersonalization. Group Dynamics: Theory, Research, and Practice, 6, 3–16.

Rollock, D., & Vrana, S. R. (2005). Ethnic social comfort I: Construct validity through social distance measurement. Journal of Black Psychology, 31, 386-417.

Smith, T. B., Bowman, R., & Hsu, S. (2007). Racial attitudes among Asian and European American college students: A cross-cultural examination. College Student Journal, 41, 436-443.

Suler, J. (2004). The online disinhibition effect, CyberPsychology & Behavior, 7, 321–326.

Thornton, M. C., & Taylor, R. J. (1988). Intergroup attitudes: Black American perceptions of Asian Americans. Ethnic & Racial Studies, 11, 474-488.

Weaver, C. N. (2008). Social distance as a measure of prejudice among ethnic groups in the United States. Journal of Applied Social Psychology, 38, 779-795.

Yee, N., & Bailenson, J. N. (2008). A method for longitudinal behavioral data collection in Second Life. Presence, 17, 594-596.

Yee, N., Bailenson, J. N., Urbanek, M., Chang, F., & Merget, D. (2007). The unbearable likeness of being digital: The persistence of nonverbal social norms in online virtual environments. CyberPsychology & Behavior, 10, 115-121.

Correspondence to:

John Tawa

Department of Psychology

University of Massachusetts, Boston

100 Morrissey Blvd

Boston, MA

02125

Email: saitawa(at)gmail.com

Appendix A:

/////////////////////////////////////////////////////////////////////////////
// SL Data Collection Script
// Originally written by Nick Yee
// (http://www.nickyee.com/pubs/secondlife.html)
// Modified by Marcos Anello
//
// This script is written in LSL, the scripting language of Second Life.
// The original script logged variables such as character motion and
// rotation, chat, and avatar states such as flying, sitting, lying
// down, etc.
//
// We have modified the script to log only the following: Region Name (or
// World), Character Name, and position in the environment (X, Y, Z
// coordinates). The script also saves the data via an http query to a
// PHP engine that processes the saving of data to a MySQL database.
/////////////////////////////////////////////////////////////////////////////

// Number of seconds between data collection snapshots
float gap = 1.0;

// Radius, in meters, within which to look for nearby avatars
float range = 7.00;

// Set to "true" for debugging printouts in SL
string verbose = "false";

float counter = 0.0;

// Number of avatars detected by the most recent sensor scan
integer gRadius = 0;

default
{
    // state_entry starts the script's timer and chat listener. It also
    // starts the proximity sensor that detects other avatars in the
    // vicinity.
    state_entry()
    {
        // llResetScript() is not called here: a reset re-enters
        // state_entry, so calling it from this handler would restart the
        // script indefinitely. Resets are handled in attach() below.

        // Fire the timer event once every `gap` seconds
        llSetTimerEvent(gap);

        // Listen to the owner on the public chat channel (channel 0)
        llListen(0, "", llGetOwner(), "");

        // Set up the sensor: scan for avatars within `range` meters every
        // `gap` seconds. An arc of PI covers the full sphere around the
        // object.
        llSensorRepeat("", "", AGENT, range, PI, gap);
    }

    // The timer event is the main engine: it gathers the information
    // (region, character name, coordinates) and then sends the data to
    // the PHP engine hosted on a web server for storage.
    timer()
    {
        // Key (UUID) of the avatar that owns this object
        key owner = llGetOwner();

        // Avatar name looked up from the owner's key
        string name = llKey2Name(owner);

        // Current position of the avatar
        vector pos = llList2Vector(llGetObjectDetails(owner,
            [OBJECT_POS]), 0);

        // Name of the region (or world) the avatar is in
        string region = llGetRegionName();

        // If the verbose flag (at the top of this script) is set to
        // "true", print the coordinates and region to the owner's chat.
        if (verbose == "true")
        {
            llOwnerSay("Position: " + (string)pos.x + ", " + (string)pos.y +
                ", " + (string)pos.z);
            llOwnerSay("Region: " + region);
        }

        // URL of the PHP engine that stores the data
        string url = "http://s331137149.onlinehome.us/update.php?";

        // Put all the data together into the URL query string for the
        // PHP engine to process and store
        url += "name=" + llEscapeURL(name) + "&";
        url += "region=" + llEscapeURL(region) + "&";
        url += "posx=" + (string)pos.x + "&";
        url += "posy=" + (string)pos.y + "&";
        url += "posz=" + (string)pos.z + "&";

        // Send the data to the PHP engine over HTTP using the URL query
        // string built above.
        llHTTPRequest(url, [HTTP_METHOD, "POST"], "");
    }

    // The sensor event receives the number of avatars within range and
    // collects their names.
    sensor(integer numberDetected)
    {
        gRadius = numberDetected;

        string msg = "Detected " + (string)numberDetected + " avatar(s): ";
        integer i;
        msg += llDetectedName(0);
        for (i = 1; i < numberDetected; i++)
        {
            msg += ", ";
            msg += llDetectedName(i);
        }
        //llOwnerSay(msg);
    }

    // Raised when a sensor scan finds no avatars within range; the count
    // of nearby avatars is reset to zero.
    no_sensor()
    {
        gRadius = 0;
    }

    // We had to add this handler because the script would stop
    // functioning after a certain time. Resetting the script whenever the
    // object is attached to an avatar restarts the timer and sensor, so
    // the script keeps running regardless of idle timeout.
    attach(key id)
    {
        // id is the avatar's key on attach and NULL_KEY on detach
        if (id)
        {
            llResetScript();
        }
    }
}
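The logging script stores avatar names and positions, whereas the analysis script in Appendix B expects a four-column file of numeric id, x coordinate, y coordinate, and time. The following is a minimal sketch in R of one way to bridge the two. The export column names (name, posx, posy, logged_at), the sample values, and the alphabetical assignment of ids are assumptions made for illustration; they are not the database schema or the procedure used in the study.

```r
# Hypothetical export of the logged rows. Column names and values are
# assumptions for illustration, not the actual schema behind update.php.
log_rows <- data.frame(
  name      = c("Avatar A", "Avatar B", "Avatar A", "Avatar B"),
  posx      = c(10.0, 13.0, 11.0, 13.0),
  posy      = c(20.0, 24.0, 20.0, 24.0),
  logged_at = c("12:00:01", "12:00:01", "12:00:02", "12:00:02"),
  stringsAsFactors = FALSE
)

# Assign each avatar name a stable integer id (alphabetical order).
avatar_names <- sort(unique(log_rows$name))
log_rows$id <- match(log_rows$name, avatar_names)

# Keep the four columns in the order the analysis script expects.
input_data <- log_rows[, c("id", "posx", "posy", "logged_at")]
names(input_data) <- c("id", "coord_x", "coord_y", "timestamp")

# Written to a temporary file here; in practice the target would be the
# file named in datafilename (e.g., "inputdata.csv").
out_file <- tempfile(fileext = ".csv")
write.csv(input_data, out_file, row.names = FALSE)
```

Writing with row.names = FALSE keeps the file at exactly four columns, which matches the column renaming performed at the start of the analysis script.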

Appendix B:

# This script takes the x and y coordinates of each
# participant recorded every second. All pairwise distances between
# participants in each session are then computed for each time point.
# Computed distances are then averaged over time to reflect average social
# distances for each participant pair. Results are written to text files.

# Read data file in .csv format. This assumes the data file has 4 columns
# consisting of id number, x and y coordinates, and the 24h time of data
# recording, respectively.
datafilename <- "inputdata.csv"
data.secondlife <- read.csv(datafilename)
names(data.secondlife) <- c("id", "coord_x", "coord_y", "timestamp")

# Map each unique timestamp to a consecutive time point index (1, 2, ...).
time.index <- data.frame(
  timestamp = unique(sort(data.secondlife$timestamp)),
  timepoint = 1:length(unique(sort(data.secondlife$timestamp)))
)
data.secondlife <-
  merge(data.secondlife, time.index, by = "timestamp", all = TRUE)
data.secondlife <-
  data.secondlife[order(data.secondlife$timepoint, data.secondlife$id), ]

# Keep only the first record for each participant at each time point.
data.secondlife <-
  data.secondlife[!duplicated(data.secondlife[c("id", "timepoint")]), ]

# Adjust ID numbers to minimize array sizes, i.e., min(id) are transformed
# to 1. These will later be corrected when writing results to output files.
id.min <- min(data.secondlife$id)
data.secondlife$id <- (data.secondlife$id - id.min) + 1

# Initialize results tables and perform computation of all pairwise spatial
# distances, which are then averaged over time.
n_time <- max(data.secondlife$timepoint)
n_subject <- max(data.secondlife$id)
data.secondlife.coord <-
  array(NA, dim = c(n_subject, n_subject, 4, n_time))
data.secondlife.D <-
  array(NA, dim = c(n_subject, n_subject, n_time))

for (s1 in unique(data.secondlife$id)) {
  for (s2 in unique(data.secondlife$id)) {
    for (time in 1:n_time) {
      # Coordinates of participants s1 and s2 at this time point
      x1 <- data.secondlife$coord_x[
        data.secondlife$id == s1 & data.secondlife$timepoint == time]
      y1 <- data.secondlife$coord_y[
        data.secondlife$id == s1 & data.secondlife$timepoint == time]
      x2 <- data.secondlife$coord_x[
        data.secondlife$id == s2 & data.secondlife$timepoint == time]
      y2 <- data.secondlife$coord_y[
        data.secondlife$id == s2 & data.secondlife$timepoint == time]

      # A missing record becomes NA; if several records exist, use the first.
      for (i in c("x1", "y1", "x2", "y2")) {
        if (length(get(i)) == 0) {
          assign(i, NA)
        }
        if (length(get(i)) > 1) {
          assign(i, get(i)[1])
        }
      }

      data.secondlife.coord[s1, s2, 1, time] <- x1
      data.secondlife.coord[s1, s2, 2, time] <- y1
      data.secondlife.coord[s1, s2, 3, time] <- x2
      data.secondlife.coord[s1, s2, 4, time] <- y2

      # Euclidean distance between the two participants in the x-y plane
      D <- sqrt((x1 - x2)^2 + (y1 - y2)^2)
      data.secondlife.D[s1, s2, time] <- D
    }
  }
}

# Average each pair's distance over all time points, ignoring missing values.
data.secondlife.D.mean <- rowMeans(data.secondlife.D, na.rm = TRUE, dims = 2)

# Output results to text files. ID numbers are adjusted back using id.min.

# 1) Coordinates of each pair at each time point
#    (dim: 1 = x1, 2 = y1, 3 = x2, 4 = y2)
a <- data.secondlife.coord
a.dump <- matrix(ncol = length(dim(a)) + 1, nrow = prod(dim(a)))
counter <- 1
for (s1 in 1:dim(a)[1]) {
  for (s2 in 1:dim(a)[2]) {
    for (k in 1:dim(a)[3]) {
      for (time in 1:dim(a)[4]) {
        a.dump[counter, ] <-
          c(s1 + id.min - 1, s2 + id.min - 1, k, time, a[s1, s2, k, time])
        counter <- counter + 1
      }
    }
  }
}
output.coord <- data.frame(a.dump)
names(output.coord) <- c("s1", "s2", "dim", "time", "coord")
output.coord <- subset(output.coord, !is.na(coord))
write.csv(output.coord, paste("output.coord.", datafilename, sep = ""))

# 2) Distance for each pair at each time point
a <- data.secondlife.D
a.dump <- matrix(ncol = length(dim(a)) + 1, nrow = prod(dim(a)))
counter <- 1
for (s1 in 1:dim(a)[1]) {
  for (s2 in 1:dim(a)[2]) {
    for (time in 1:dim(a)[3]) {
      a.dump[counter, ] <-
        c(s1 + id.min - 1, s2 + id.min - 1, time, a[s1, s2, time])
      counter <- counter + 1
    }
  }
}
output.distance <- data.frame(a.dump)
names(output.distance) <- c("s1", "s2", "time", "distance")
output.distance <- subset(output.distance, !is.na(distance))
write.csv(output.distance, paste("output.distance.", datafilename, sep = ""))

# 3) Mean distance for each pair, averaged over time
a <- data.secondlife.D.mean
a.dump <- matrix(ncol = length(dim(a)) + 1, nrow = prod(dim(a)))
counter <- 1
for (s1 in 1:dim(a)[1]) {
  for (s2 in 1:dim(a)[2]) {
    a.dump[counter, ] <- c(s1 + id.min - 1, s2 + id.min - 1, a[s1, s2])
    counter <- counter + 1
  }
}
output.distance.mean <- data.frame(a.dump)
names(output.distance.mean) <- c("s1", "s2", "distance")
output.distance.mean <- subset(output.distance.mean, !is.na(distance))
write.csv(
  output.distance.mean,
  paste("output.distance.mean.", datafilename, sep = ""))
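The nested loops above subset the full data set once for every pair and time point, which may become slow for long sessions. The following is a minimal sketch, not the procedure used in the study, of how the same time-averaged pairwise distances could be obtained with R's built-in dist() function. The toy data values and the object names (toy, per_time, mean_distance) are invented for illustration; the column layout follows the four-column input file described above.

```r
# Toy data in the same four-column layout as the input file (values invented).
toy <- data.frame(
  id        = c(1, 2, 3, 1, 2, 3),
  coord_x   = c(0, 3, 0, 0, 6, 0),
  coord_y   = c(0, 4, 1, 0, 8, 3),
  timestamp = c(1, 1, 1, 2, 2, 2)
)

ids <- sort(unique(toy$id))

# One full distance matrix per time point, stacked into an
# id x id x time array.
per_time <- sapply(
  sort(unique(toy$timestamp)),
  function(tp) {
    snap <- toy[toy$timestamp == tp, ]
    # Reorder rows to follow ids; a participant with no record at this
    # time point becomes a row of NA, and dist() returns NA for its pairs.
    snap <- snap[match(ids, snap$id), ]
    as.matrix(dist(snap[, c("coord_x", "coord_y")]))
  },
  simplify = "array"
)
dimnames(per_time) <- list(ids, ids, NULL)

# Average over time, ignoring time points where a pair's distance is NA.
mean_distance <- rowMeans(per_time, na.rm = TRUE, dims = 2)
```

In this toy example, participants 1 and 2 are 5 units apart at the first time point and 10 units apart at the second, so their mean distance is 7.5. As in the loop-based script, the diagonal of mean_distance holds each participant's distance to themselves, which is 0.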